Adobe Community

Adobe Community

- Home

- ColdFusion

- Discussions

- CF2018 treads TIMED_WAITING grow out of control

- CF2018 treads TIMED_WAITING grow out of control

Copy link to clipboard

Copied

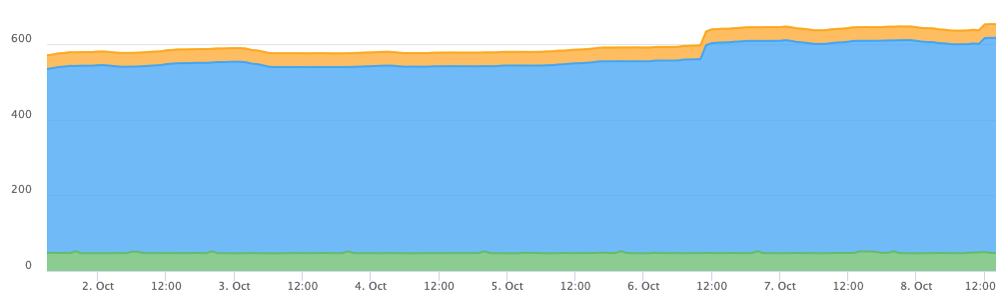

I've a big issue with my new server:

Windows Server 2016 , IIS 10 , CF 2018 standard, FusionReactor

All works with good performance and without errors, but after 2 or 3 hours CF become slow and unresponsive.

I've noted that the number of threads in TIMED_WAITING status grows up continuosly, from the initial 100 to 5000 in about 2 hour, then CF begin crashing.

When IIS is offline, threds sto to grow up.

.png)

.png)

Here is a sample from a thred dump:

name : pool-702-thread-1 priority : 5 state : TIMED_WAITING (PARKING) group : pool

at "pool-702-thread-1" #1755 prio=5 os_prio=0 tid=0x000001742eac0000 nid=0xa3d0 waiting on condition [0x000000541c8ff000]

java.lang.Thread.State: TIMED_WAITING (parking)

at jdk.internal.misc.Unsafe.park(java.base@10.0.1/Native Method)

- parking to wait for <0x000000074a15b238> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.parkNanos(java.base@10.0.1/LockSupport.java:234)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(java.base@10.0.1/AbstractQueuedSynchronizer.java:2117)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:1182)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:899)

at java.util.concurrent.ThreadPoolExecutor.getTask(java.base@10.0.1/ThreadPoolExecutor.java:1061)

at java.util.concurrent.ThreadPoolExecutor.runWorker(java.base@10.0.1/ThreadPoolExecutor.java:1121)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(java.base@10.0.1/ThreadPoolExecutor.java:635)

at java.lang.Thread.run(java.base@10.0.1/Thread.java:844)

name : pool-705-thread-1 priority : 5 state : TIMED_WAITING (PARKING) group : pool

at "pool-705-thread-1" #1761 prio=5 os_prio=0 tid=0x000001742eac4000 nid=0xa7e4 waiting on condition [0x000000541cbfe000]

java.lang.Thread.State: TIMED_WAITING (parking)

at jdk.internal.misc.Unsafe.park(java.base@10.0.1/Native Method)

- parking to wait for <0x000000074ad4b768> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.parkNanos(java.base@10.0.1/LockSupport.java:234)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(java.base@10.0.1/AbstractQueuedSynchronizer.java:2117)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:1182)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:899)

at java.util.concurrent.ThreadPoolExecutor.getTask(java.base@10.0.1/ThreadPoolExecutor.java:1061)

at java.util.concurrent.ThreadPoolExecutor.runWorker(java.base@10.0.1/ThreadPoolExecutor.java:1121)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(java.base@10.0.1/ThreadPoolExecutor.java:635)

at java.lang.Thread.run(java.base@10.0.1/Thread.java:844)

name : pool-706-thread-1 priority : 5 state : TIMED_WAITING (PARKING) group : pool

at "pool-706-thread-1" #1763 prio=5 os_prio=0 tid=0x000001742eab9000 nid=0xf238 waiting on condition [0x000000541ccfe000]

java.lang.Thread.State: TIMED_WAITING (parking)

at jdk.internal.misc.Unsafe.park(java.base@10.0.1/Native Method)

- parking to wait for <0x000000074ad4c0f8> (a java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject)

at java.util.concurrent.locks.LockSupport.parkNanos(java.base@10.0.1/LockSupport.java:234)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(java.base@10.0.1/AbstractQueuedSynchronizer.java:2117)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:1182)

at java.util.concurrent.ScheduledThreadPoolExecutor$DelayedWorkQueue.take(java.base@10.0.1/ScheduledThreadPoolExecutor.java:899)

at java.util.concurrent.ThreadPoolExecutor.getTask(java.base@10.0.1/ThreadPoolExecutor.java:1061)

at java.util.concurrent.ThreadPoolExecutor.runWorker(java.base@10.0.1/ThreadPoolExecutor.java:1121)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(java.base@10.0.1/ThreadPoolExecutor.java:635)

at java.lang.Thread.run(java.base@10.0.1/Thread.java:844)

IIS Connector , and server.xml are properly configured, here is JVM args:

-server --add-opens=java.rmi/sun.rmi.transport=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-modules=java.xml.ws --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/sun.util.cldr=ALL-UNNAMED --add-opens=java.base/sun.util.locale.provider=ALL-UNNAMED -XX:MaxMetaspaceSize=512m -XX:+UseParallelGC -Xbatch -Djdk.attach.allowAttachSelf=true -Dcoldfusion.home={application.home} -Duser.language=en -Dcoldfusion.rootDir={application.home} -Dcom.sun.xml.bind.v2.bytecode.ClassTailor.noOptimize=true -Dcoldfusion.libPath={application.home}/lib -Dorg.apache.coyote.USE_CUSTOM_STATUS_MSG_IN_HEADER=true -Dcoldfusion.jsafe.defaultalgo=FIPS186Random -Dorg.eclipse.jetty.util.log.class=org.eclipse.jetty.util.log.JavaUtilLog -Djava.util.logging.config.file={application.home}/lib/logging.properties -Djava.locale.providers=COMPAT,SPI -Dsun.font.layoutengine=icu -javaagent:C:/FusionReactor/instance/cfusion.cf2018/fusionreactor.jar=name=cfusion.cf2018,address=8088,external=false -agentpath:C:/FusionReactor/instance/cfusion.cf2018/frjvmti_x64.dll

Tried to restart CF, IIS, the whole server, change connector config from 'all sites' to 'individual sites', nothing change thi behaviour. Anyone can help me?

2 Correct answers

2 Correct answers

The fix for this blossoming of pool threads seems to be in applying the hf201800-4207395.jar that I'd mentioned here recently. (At least until Adobe rolls that into a general update to CF, of course.)

To be clear, when Dirk said here that the fix "did not work for him", it was that he had been sent the wrong hotfix (for hf201800-4207069). He explained this in the "other thread" where he had first raised this whole pool thread issue. He then clarified that the new fix DID work.

So I raise thi

...We did have the same issue with pool threads count growing abnormally which cuased CPU spikes and server unresponisive eventually. We also got the same hot fix file from Adobe, hf201800-4207395.jar, and after applying the fix, the issue went away.

Thanks for Charlie and other members who provided the solution in this thread and another one, https://community.adobe.com/t5/coldfusion/coldfusion-2018-timed-waiting-threads-stacking-up-endlessly/m-p/11475127?page=1#M186610.

We never thought that cflo

...Copy link to clipboard

Copied

BKBK, those are messages I find in many (many) people's setups, and they are not "fatal". Again, not trying to pick a fight ,but you are throwing the kitchen sink at this problem, when it seems very clearly from the original post by M that they had mounting threads, and they showed pool- ones in their thread dump. All of that ties right in with the hotfix from Adobe that I mentioned (which for now, people must request from them).

So no, really, I don't think it's wise for us to wait for M to reply. As you note, they have not in two years. They may well have moved off of CF by now. I raised my observation about this available fix on Sep 28, now 8 days ago. If they were going to reply, it seems they would have.

But as I said, I was willing to wait a bit. I just don't think we should wait until they reply. Again, the goal here is to help others who find this thread (and they have, as I have heard from people on this matter). If we mark the news of that Adobe bugfix (which HAS now helped multiple people I am working with), and somehow M comes back and clarifies that it's NOT the answer for them, we can certainly change the answer.

/Charlie (troubleshooter, carehart.org)

Copy link to clipboard

Copied

> those are messages I find in many (many) people's setups, and they are not "fatal".

We shall have to disagree.

If Tomcat fails to recognize its own (AJP) connector then there is something amiss. On top of that, there are two same-named cachemanagers in the JVM, a recipe for race-conditions. In any case, the cachemanagers are generating errors and threads at a rate of up to 8 per second.

I do not claim that these are the cause of the problem. Just saying they might have something to do with it. From my experience with our own setup, lesser or more innocent-looking errors than these have been capable of triggering an explosive number of pool threads.

You mention "mounting threads" as if they are a root cause. They are not. They amount to a symptom. If A implies B, it does not necessarily mean that B implies A. Excessive pool threads could be caused by any of thousands of arbitrary processes that generate long object queues, of which the Hotfix handles just one or two.

In short, the Hotfix may well suppress the excessive thread pools. But the problem might persist if your application contained a wayward process that continued to generate them.

Copy link to clipboard

Copied

Well, I agree with some points you just said but do disagree with others. I hope this back and forth may be helpful to someone (and I'm sorry that our attempts to help folks here are in such seeming conflict).

First, Tomcat is NOT failing "to recognize its own (AJP) connector". It's simply a warning about some attributes that CF sets.

Indeed, you can see a couple of bug reports complaining about that as much as a couple of years ago: https://tracker.adobe.com/#/view/CF-4203917. BTW, note that Adobe indicates there that they plan to fix that issue in the next release (2021, which if that name for the next CF release surprises people, see my post here.) BTW, didn't we discuss in another threat that you are "A. B." there, and "BKBK" here? Sorry if I have that wrong.

As for the errors referring to two authcachemanagers, well yes, that could be a problem. And the frequency of the errors in the security.log is a concern, and may well be a clue. But M replied that showing his ehcache.xml, and there was no duplicate authcache there. Did you have another suggestion for him of wher to look? I see none offered there. In fact, the thread soon petered out then in 2018.

Then the news came in recent weeks of Adobe having this hotfix, which was SPECIFICALLY addressing the problem people were reporting with blossoming pool- threads. I never said they were the "cause" of this problem. They are indeed a symptom. As for what the Adobe patch fixes, I can't say. They won't say anything other than it resolves problems with use of CFLOGIN and blossoming pool- threads. The hotfix number was for a private bug, so we can't see the details. (I had asked a couple of weeks ago.)

FWIW, there is an aspect of CFLOGIN that by default leverages ehcache, so there may well be a problem in how CFLOGIN is implemented (under the covers by Adobe) that can LEADS TO these errors about the duplicate authcachemanager in the security.log. Again, only Adobe knows. Perhaps Dirk will see this and be willing to tell us if he saw a corresponding blossoming of those errors in his logs. That would seem to confirm these are all related.

So bottom line, yes: blossoming of pool threads is a specific problem, hit in CF2018 only (from my experience) by certain people. And those people (who I have helped) found it was solved either by stopping using CFLOGIN or by applying this patch. And of course, those who applied the patch did NOT have to change their code to stop using CFLOGIN.

So that's why I am asserting that for this specific problem, of blossoming pool- threads in CF2018, this hotfix seems to be "the answer". If after the several notes we've written and now several days, M is not jumping in to clarify things, I think we can presume he will not, or not for some time. So I do stand confident in saying that the Hotfix is the answer. And I only propose marking it as such because a) no one else has reported any OTHER pool- thread issues in CF2018 that I know of, and b) those who hit it may be led by Google to this post (or the other one).

And again, I want to save people having to wade through the several messages at the top (from 2018) and now these several messages at the bottom (in 2020) to see a ready "answer". Don't you want to help such people that way? Again, if something changes (M gets back to us with some conflicting observations or answer), we can "unmark" it as the answer. As always, I'm just trying to help.

/Charlie (troubleshooter, carehart.org)

Copy link to clipboard

Copied

I understand.

I indeed reported https://tracker.adobe.com/#/view/CF-4203917. I have also asked the Adobe Team a number of times if this is an innocuous warning. If not, how severe the consequences could be. In fact I again did so yesterday. Received no answer yet.

We all integrate ColdFusion differently with other technologies such as web servers, cache, ORM, and so on. Integration means you push ColdFusion that bit further outside its comfort zone. Which almost always means an increased risk of things going haywire. You can't afford to ignore persistent warnings at the heart of the server.

Copy link to clipboard

Copied

We installed the hotfix a couple of days ago. Basically I would say the fix stopped the surge of threads triggered tby the cflogin tag. But I am not sure if it solved the root cause. We found a rise of threads two days ago which have obviously something to to with the hibernate ORM:

When we deploy updates we have to execute an ORM reload, which we have to trigger manually for ~30 applications, all share the same codebase - here is the corresponding excerpt from the Application.cfc:

<!--- ORM CONFIG --->

<cfset THIS.datasource = THIS.cms_name />

<cfset THIS.ormenabled = true />

<cfset THIS.ormsettings.logSQL = false />

<cfset THIS.ormsettings.datasource = THIS.cms_name />

<cfset THIS.ormsettings.dialect = "org.hibernate.dialect.MySQL5InnoDBDialect" />

<cfset THIS.ormsettings.cfclocation = "orm2" />

<cfset THIS.ormsettings.savemapping = false />

<cfset THIS.ormsettings.useDBForMapping = false />

<cfif structkeyexists(URL, "ormreload")>

<cfset THIS.ormsettings.dbcreate = "update" />

</cfif>We reproduced that today with a smaller number of applications, same behavior:

In our security.log we cannot find any entry since the last start of Coldfusion after installing the hotfix. Completely empty.

Copy link to clipboard

Copied

Thanks for the update, Dirk, and the encouraging news that the fix helped with the one issue of blossoming pool- threads, due to cflogin. That is indeed what it was said by Adobe to fix.

As for the security.log, the real question is not so much whether it's empty since installing the fix. The point being raised above was a question of whether it was littered with them BEFORE applying the fix. We were trying to discern if there's a connection between that cflogin/threads issue and a correlating explosion of authcachemanager errors in that security.log. Can you check that and report back?

Finally, as for this other issue now, well, it would indeed seem different. And of course sometimes when one problem is solved, we find that another was lurking behind it, perhaps totally unrelated. Indeed, while it's creating new threads, can you confirm what kind they are (what name pattern do they have)? They may well be pool- threads (like the previous issue), or they may not. For the sake of all this discusison above, it could be helpful to know.

You mention that it's when you do an orm reload, which by the code shown indicates you have a URL to do that, and if true it sets that ormsettings.dbcreate to "update". I'll leave others with more ORM experience to chew on that. And are you saying that for every one call to such an app with such a reload, there's a single new thread? or some number? That may be a useful clue.

And FWIW, ORM does have caching that leverages ehcache, so there may be SOME connection between the issues. Again, Adobe never clarified what the real issue was that was fixed in the hotfix--only that it addressed the symptom of blossoming pool- threads due to cflogin use. There may be some different or related problem.

Or it may also be that these extra threads you see only lived on for some time. In addition to confirming their name, it would be interesting to hear if you confirm they never end or do end after some time.

Again, thanks for jumping in to confirm that the fix has at least addressed the most significant thread-creating issue. Looking forward to hearing from others who have that issue and try this fix.

/Charlie (troubleshooter, carehart.org)

Copy link to clipboard

Copied

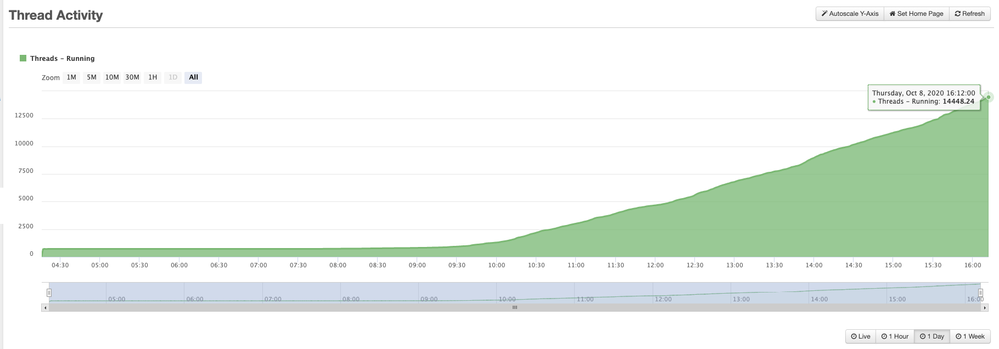

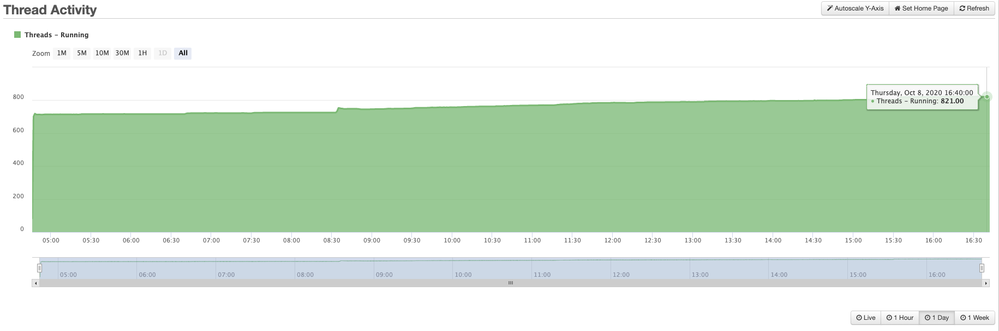

We did have the same issue with pool threads count growing abnormally which cuased CPU spikes and server unresponisive eventually. We also got the same hot fix file from Adobe, hf201800-4207395.jar, and after applying the fix, the issue went away.

Thanks for Charlie and other members who provided the solution in this thread and another one, https://community.adobe.com/t5/coldfusion/coldfusion-2018-timed-waiting-threads-stacking-up-endlessl....

We never thought that cflogin was causing the issue and spent coutless days and nights tuning JVM, IIS tomcat connector, template cache settings and etc.. we even added more memory to the server.

Below is the thread counts from FR with hot fix and without hot fix.

Before hotfix

After hotfix

Copy link to clipboard

Copied

Thanks very much for the update, Takumi. Like with Dirk, it's great to hear news of people having benefited so clearly from the hotfix (and the news shared here about it).

It is interesting to see that you, too, are still showing a growth of about 100 threads in about 12 hours--but that could be "normal" for you for any of many reasons. Or perhaps like Dirk there's some connection with a remaining separate issue. But I suspect you may find that growth was "always there" and never really a problem...or perhaps you would restart CF or the box well before it ever became a problem.

But with this other pool- thread issue (due to cflogin), the rate of grown was just so fast that it was causing people such CPU problems. Again, great to hear more confirmation about the fix at least making that go away.

/Charlie (troubleshooter, carehart.org)

-

- 1

- 2