Adobe Community

Dear Adobe. I want what I see in Premiere Pro to match how it renders out

I hate the gamma compensation LUT. It is such a blunt instrument. Is it so hard to make the blacks match how they would appear in YouTube? Come on, we're not all working for broadcast here. What percentage of your users are producing for broadcast? Three or four percent? I bet.

Please give us a checkbox: Optimize Program Monitor to match YouTube Gamma

Is that so hard? Your Gamma Compensation LUT (an admission of a problem by its very existence) is a total sledgehammer after hours of colour grading. Wake up.

It isn't "YouTube gamma" that is the cause of the problem.

I expect from your comments you're on a Mac. If I look at the same YouTube file on my PC, it will probably look pretty close to what you saw within Premiere.

I will not see what you see for gamma, for that same YouTube file, on an Apple device. Why?

Because Apple for some reason chose to apply the camera transform function of Rec.709 as if it were the display transform function, for any application allowing their ColorSync utility to control display of video files.

So depending on whether that file is displayed on a Mac in Chrome, Safari or QuickTime Player, or ... on the same Mac in VLC, or on any PC or TV, the file will have different display gammas.

The Mac ColorSync controlled view will use gamma 1.96 for the display. All others will use gamma 2.4 for the display.
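To put rough numbers on that difference, here is a minimal Python sketch, assuming each viewing path can be modelled as a pure power-law decode (the real Rec.709 and BT.1886 curves are a bit more involved). It shows how much brighter the same code value lands on a ColorSync-managed view (gamma 1.96) than on a gamma 2.4 display. The function name `display_light` is just illustrative.

```python
def display_light(code_value: float, gamma: float) -> float:
    """Relative display luminance (0..1) for a normalized code value (0..1),
    assuming a simple power-law display decode."""
    return code_value ** gamma


# Compare a few shadow and mid-tone code values under both decodes.
for v in (0.1, 0.2, 0.5):
    standard = display_light(v, 2.4)    # most TVs, PCs, VLC on a Mac
    colorsync = display_light(v, 1.96)  # ColorSync-managed view on a Mac
    print(f"code {v:.1f}: 2.4 -> {standard:.4f}, 1.96 -> {colorsync:.4f}, "
          f"ratio {colorsync / standard:.2f}x")
```

The ratio is simply v^(1.96 - 2.4) = v^-0.44, so the darker the value, the bigger the lift: roughly 2.75x at code 0.1 versus about 1.36x at 0.5. That is why the shadows look washed out first.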

It's a complete mess, and a right pain for all of us, even the non-Mac folks like me.

But until Apple makes a change to their Rec.709 settings, ain't nothing any of us, nor Adobe nor BlackMagic, can do to "fix" it.

Some Mac screens now have a setting that includes the term "HDTV". That setting will actually use gamma 2.4 for all Rec.709 video files. But many do not have that setting.

A common thing among the colorists I work for/with/teach (mostly based in Resolve of course) is to add a track grade or adjustment layer after finishing the grade, on projects bound for the web.

Then they go to the color wheels, and slightly drop the Gamma (middle or Mids controls) brightness slider.

It will be just a bit dark, but usable on "correct" systems. And a bit light, but still usable on Macs.

About the best we can do.
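Here's a rough Python sketch of why that trim works, assuming the "drop the Gamma slider" move can be approximated by a plain power curve on normalized code values (Resolve's actual Gamma control may well use a different curve, so treat this as a model only). With a hypothetical trim exponent k, a gamma 2.4 display effectively shows gamma 2.4·k and a ColorSync view shows 1.96·k, so any k between 1 and 2.4/1.96 (about 1.22) leaves one a bit dark and the other a bit light.

```python
import math

TARGET_GAMMA = 2.4      # what the grade was judged on
COLORSYNC_GAMMA = 1.96  # typical ColorSync-managed view on a Mac


def effective_gammas(trim_exponent: float) -> tuple[float, float]:
    """Effective gamma on a 2.4 display and on a ColorSync view after
    applying v -> v ** trim_exponent to every normalized code value."""
    return TARGET_GAMMA * trim_exponent, COLORSYNC_GAMMA * trim_exponent


# A hypothetical "split the difference" trim: the geometric midpoint between
# no trim (1.0) and a full Mac correction (2.4 / 1.96).
compromise = math.sqrt(TARGET_GAMMA / COLORSYNC_GAMMA)
on_pc, on_mac = effective_gammas(compromise)
print(f"trim exponent {compromise:.3f}: 2.4 display sees {on_pc:.2f}, "
      f"ColorSync sees {on_mac:.2f}")
```

At that midpoint both viewers miss the 2.4 target by the same factor (about 11%), one darker and one lighter, which is the "bit dark / bit light" trade-off Neil describes.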

Neil

Why is my Mac perfectly good with colour in Photoshop but terrible in Premiere Pro? And why do video files that I have exported with the Gamma Compensation LUT applied display correctly on PCs as well as my Mac? Shouldn't they be overly dark? Don't Adobe communicate with Apple at all? Perhaps a Post It note on someone's desk? "Your colours are wack matey"

In case you missed it in my previous post, note ... it's the display gamma for video files, which is not the same as for still image files. That you don't have the issue with Photoshop stills is irrelevant.

And the issue is totally due to the Mac ColorSync issue using gamma 1.96, rather than 2.4.

On many Macs, VLC doesn't allow ColorSync 'control', so playing the file in VLC gives the same image as within Premiere, and QuickTime player/Chrome/Safari give the lighter ColorSync gamma image.

As noted in my previous post, I work for/with/teach pro colorists. And you know they're what, 90% Mac based? Use Resolve or Baselight, right?

And they are all furious with Mac about this. It's been covered thoroughly by the experts in pro video color. None of this is any opinion of mine; it's the hard data from the experts.

I'd thought that as we shift to HDR, this mess would eventually go away. But at this time, HDR is such a freaking Wild Wild West that it's even worse than the old VHS/Beta "war".

Neil

"and QuickTime player/Chrome/Safari give the lighter ColorSync gamma image."

I can add Firefox to that list.

If 97% of preferred display software gives the impression of washed-out renders, wouldn't it be a good idea for Adobe to take note of that and offer a more controllable solution? The GCL (Gamma Compensation LUT) is OK but goes a shade too far in the opposite direction. Even a locked adjustment layer that desaturates and overexposes the source footage within the edit environment would be better, as all subsequent gamma corrections could then be made by hand and adjusted accordingly.

My gamma-adjusted renders display perfectly fine (ish) on PCs and Macs when viewed through a browser on YouTube. It is only the Program Monitor in Premiere Pro that is the oddball in all of this. That is literally the only space where my video files are not displayed as I would expect and consequently I make adjustments and corrections which look fine while I'm editing and then terrible on the render.

I hear what you are saying about Apple colour management but this has been going on for three years and all we have as a "fix" (bodge) from either organisation is, in effect, a global filter applied with no idea how the end result is going to perform.

So a question: do DaVinci Resolve and Final Cut Pro have the same issues? If so, what have they done to fix it? If they don't have this issue, then I am definitely jumping ship.

Final Cut, as the "house" app, is set up differently somehow. No clue how they manage that.

As noted in all my posts on this issue ... I work both in Resolve and Premiere, and work for/with/teach pro colorists, mostly based in Resolve. Though a few are in Baselight.

Resolve has many options that can be used or at times mis-used to effect. But that's irrelevant.

Working in a standard Rec.709 workflow on a PC, you can go back and forth between Resolve and Pr twenty times with a file without trouble. I can take every "problematic" file from a Mac user and work with it with no trouble in both Pr and Resolve.

And this has been tested thoroughly by people with WAY more experience than thee & me ... and that's what the top experts all say. Period.

The issue is the difference in gamma between the 'standard' Mac "Rec.709" gamma using 1.96, and the entire rest of the world using 2.4.

And if Premiere on your Mac is off from say on a PC .... or whatnot ... then there's a setting somewhere that isn't great on your kit somehow.

So ... you do have the DCM ... display color management option ... set in the Premiere Preferences, right?

Neil

Thanks for your input on this Neil.

Yes DCM is checked.

I have to say I'm staggered that between them the biggest pillars of digital creative output - Adobe and Apple - haven't lifted a finger to resolve (no pun intended) what is a real issue for so many users, save for a sticking plaster LUT.

OK if it's not Adobe's issue but tbh if Apple have hobbled people's perception of colour and gamma then surely Adobe should make it their issue as it is making Premiere Pro significantly less useable. In fact I'm actively looking for alternatives now. If FCP is behaving properly with colour then that is where I may have to go.

On a wider note as far as Apple goes, I have a brand new Mac Studio and I have to say it's terrible. My last Mac - a 12 year old iMac - is running Photoshop 6 and is a significantly superior machine to the Mac Studio, with a tenth of the processing power. I wish I'd bought a PC now, and I never thought I'd say those words after having had a Mac since 1996.

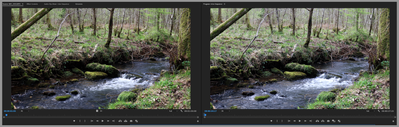

Look at this. This is a screengrab of a 4K clip in Premiere Pro. The screen on the left is the source footage and the one on the right is the Program Monitor.

They are totally different. Look at the greens. All I have done is drag it into the timeline on a fresh project.

Why? What is going on? This is crazy.

Anyone from Adobe care to elaborate?

The two images ... they are not expected to be the same.

The Source monitor is the image prior to any processing of any kind in Premiere. Unless you have specifically applied something as a Source effect.

The Program monitor is after it applies the timeline working space, any display changes, any auto-tonemapping they now have, all that stuff.

So just applying the sequence working color space may change the image.

Neil

I've read an article by someone with both high-end credentials in video post color management and obvious connections in Cupertino. His comments were carefully worded, I'm sure to avoid "confidential" or NDA restrictions.

The essence was that "perhaps" the Apple people looked at the original Rec.709 specs from the late 80's or early 90's ... which were back when 'we' were all using CRT screens ... and decided that was really the "correct" Rec.709, not the addendum that came in BT.1886 a bit more than a decade ago. Let's look at how we got "here".

The "original" Rec.709 only included the camera transform function, roughly gamma 1.96. This was to map the digital-linear image coming from the camera sensor to the native 'curve' of CRT tubes in the shadows. That way, digital images could be treated the same in video post as film-originated images.
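For reference, the camera transform (OETF) that Rec.709 specifies is a 0.45 power curve with a short linear toe near black. Below is a Python sketch of it and its inverse, compared with a plain 1.96 power decode, to show roughly where the "gamma 1.96" figure comes from. The single 1.96 exponent is only an approximation of the inverse curve; the two diverge most in the deep shadows.

```python
def rec709_oetf(linear: float) -> float:
    """Rec.709 camera transform: scene linear light (0..1) -> signal (0..1)."""
    if linear < 0.018:
        return 4.5 * linear              # linear toe near black
    return 1.099 * linear ** 0.45 - 0.099


def rec709_inverse_oetf(signal: float) -> float:
    """Invert the Rec.709 OETF: signal (0..1) -> scene linear light (0..1)."""
    if signal < 4.5 * 0.018:             # 0.081, the end of the linear toe
        return signal / 4.5
    return ((signal + 0.099) / 1.099) ** (1 / 0.45)


for v in (0.05, 0.2, 0.5, 0.8):
    exact = rec709_inverse_oetf(v)
    approx = v ** 1.96                   # the single-exponent stand-in
    print(f"signal {v:.2f}: inverse OETF {exact:.4f}, pure 1.96 power {approx:.4f}")
```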

But then flat-panel digital screens were created, though many people still worked with CRTs for some years, so both types of screen were in use. And the digital "flat" screen tech didn't have that shadow response/curve.

So the question was ... 1) drop the original camera transform, or 2) add the transform for digital display, or 3) change the camera transform?

The far simpler process was to simply do option 2. Add a transform function for digital displays to the then current system. That way the images worked fine without any other changes in the entire system. Whether on a CRT or a flat-screen.
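The display transform that was eventually standardized is ITU-R BT.1886. A minimal Python sketch of its reference EOTF follows; `white_nits` and `black_nits` are the display's white and black luminance (the parameter names are mine). With a perfect black it reduces to a plain 2.4 power curve scaled to peak white, which is the "gamma 2.4" referred to throughout this thread.

```python
GAMMA = 2.4  # exponent fixed by BT.1886


def bt1886_eotf(signal: float, white_nits: float = 100.0,
                black_nits: float = 0.0) -> float:
    """BT.1886 reference EOTF: normalized signal (0..1) -> screen luminance in nits."""
    white_root = white_nits ** (1 / GAMMA)
    black_root = black_nits ** (1 / GAMMA)
    gain = (white_root - black_root) ** GAMMA        # 'a' in the spec
    lift = black_root / (white_root - black_root)    # 'b' in the spec
    return gain * max(signal + lift, 0.0) ** GAMMA


# On a 100-nit reference display, compare a perfect black with a 0.1-nit black.
for v in (0.0, 0.1, 0.5, 1.0):
    print(f"signal {v:.1f}: {bt1886_eotf(v):7.3f} nits (black 0), "
          f"{bt1886_eotf(v, black_nits=0.1):7.3f} nits (black 0.1)")
```

For example, a 50% signal comes out at about 18.9 nits on a 100-nit display with perfect black; a non-zero black level mostly lifts the bottom of the curve.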

Apparently, Apple's engineers decided the "scene-referred" gamma of the original Rec.709 was the 'correct' way to be working. On a theoretical basis, also "apparently". And are all heck-bent on sticking with the "correct scene referred" transform, internally insisting everyone else on the planet is doing it Wrong. And they will not join those doing it Wrong.

Whatever. Though this seems to many colorists I know (all of them Mac geeks, many with some small connections in Cupertino) to have some probable basis in reality, it's all speculation. Apple won't say anything publicly beyond a couple of papers they've published with their operating specs. No explanation or justification.

So take the above as speculation. The rest of this is all "known" techy stuff for colorists, but the direct reasons Apple does what they do ... well, Apple will be Apple.

Even if you're trying to claim, however, that using the original camera transform by itself gives the "correct scene-referred" image data, that original camera transform was only done to match the CRTs' native curve. So if you're trying to be truly, technically scene-referred, you should drop the 1.96 and just go linear to begin with. And stay linear to linear.

Ah well.

Now, I'll throw in another silly kink ... "full" range versus "legal" or limited range encoding. This one is quite possibly affecting your experience. Without being obvious or that easy to check.

Early digital-to-analog broadcasting systems needed 'headroom' to hold other signals as part of the file, which told the TV/broadcast systems how to behave. As the files were then only 8-bit, and therefore 0-255, they set 0-15 and 236-255 as "out of bounds", used only for control signal data. Meaning the image was to be recorded between 16-235.

But the image was the full image from the sensor, just encoded, in a mathematical formula, to 16-235. The display would automatically transfer the image to 0-255, full signal.
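The "math thing" for 8-bit luma is a linear rescale between the 16-235 and 0-255 ranges. A minimal Python sketch, assuming 8-bit luma only (chroma uses 16-240 in limited range and is left out here), with rounding to the nearest code and clamping added for simplicity; the function names are illustrative.

```python
def limited_to_full(y: int) -> int:
    """Expand an 8-bit limited-range luma code (16-235) to full range (0-255).
    Codes outside 16-235 are clamped here for simplicity."""
    full = round((y - 16) * 255 / 219)
    return min(max(full, 0), 255)


def full_to_limited(y: int) -> int:
    """Compress an 8-bit full-range luma code (0-255) into limited range (16-235)."""
    return round(16 + y * 219 / 255)


for code in (16, 126, 235):
    print(f"limited {code:3d} -> full {limited_to_full(code):3d}")
```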

And no one needs that headroom anymore, so technically we could all run Rec.709 as full range and be done with it. However ... "The System" was already in place. YUV formats (technically Y'CbCr) were "legal/limited", and RGB formats (the high-bit 12-bit stuff), which weren't ever used in broadcast, were "full".

It doesn't change the data encoded to the file at all, just how it's encoded. Doesn't affect the range of values light to dark actually in the file, it's just a math thing. Which a lot of people ... myself included ... are fooled by at first.

So some systems are set up correctly, so that YUV "limited" formats are accepted as "limited" and expanded by the display to "full". Some are unfortunately set to assume everything is full, and those handle the few full-range files correctly, but improperly display 'standard' Rec.709 YUV limited files only as data between levels 16-235.

You can probably see how that alone causes problems as files move back and forth between computers, systems, and displays. If someone's system is set so that everything is displayed as if full range ... standard Rec.709 limited files are displayed with very lifted shadows, weak brights, and lower apparent saturation.

Or the other way round: setting the monitor or system so that all files are displayed as if they are limited ... means that any files that are correctly 'full' are shown with crushed blacks and clipped whites.
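Both mismatches are easy to see with a few code values. In this Python sketch (8-bit luma only, clamping assumed, function names hypothetical), `shown_as_full` models a system that treats everything as full range and passes codes straight through, while `shown_as_limited` models one that stretches everything from 16-235.

```python
def shown_as_full(code: int) -> int:
    """System assumes full range: codes go to the screen unchanged."""
    return code


def shown_as_limited(code: int) -> int:
    """System assumes limited range: 16-235 is stretched to 0-255, the rest clipped."""
    return min(max(round((code - 16) * 255 / 219), 0), 255)


# A standard limited-range file misread as full: black sits at 16, white at 235.
print("limited file, black/white shown as:", shown_as_full(16), shown_as_full(235))

# A genuine full-range file misread as limited: deep shadows and highlights clip.
for code in (0, 10, 16, 240, 255):
    print(f"full-range code {code:3d} shown as {shown_as_limited(code):3d}")
```

In the second case, every level from 0 through 16 collapses to black and every level from 235 up collapses to white, which is the crushed-blacks, clipped-whites look. In the first case, black never gets below 16, hence the lifted shadows.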

And we've had people on here who insisted that they were only going to use full range because they wanted all their data. They were convinced that there was a contrast range difference in 16-235 and 0-255 files for the brightness range the file could handle.

Which ... isn't correct. Same data can be recorded either full or legal, without losing a bit.

Sadly, that decision, in itself, caused them to lose data. And they were furious about that.

Now, I'll throw in the next potential problem: viewing environment!

There's a reason colorist suites have very specific and rather draconian specs for room wall brightness and color, plus room lighting brightness and color. All this past the strict specs for the Rec.709 monitor itself. Because the viewing environment completely alters our perceptions for contrast, saturation, shadows, and whites.

So a colorist works in a very controlled darkened room, with a very low lighting level. The walls are specified as an incredibly neutral gray (and yes, there are color coefficients specified for lighting meters to check), and ... the monitor is required to have a "bias light" mounted to the monitor or between it and the wall, lifting the brightness around that monitor by a precise, and yes, measured amount.

(And yes, my office is fully and correctly setup according to specs. Because, well, it has to be to produce the materials I make for pro colorists.)

The monitor is carefully calibrated, a LUT generated either parked in the monitor itself or in the breakout device feeding that monitor, because they do not trust computer OSs and GPUs.

(This is the one thing I vary from "full" specs ... I still use my GPU to feed the monitor. BUT ... I've carefully calibrated and run profiles to check the calibration! ... and had my files checked by colorists on their systems for accuracy of hues/sat/brightnesses to verify things.)

The monitor is set to 100 nits pure white, D65, sRGB Primaries, gamma 2.4. And even then, they use outboard scopes normally as a further check of brightnesses and saturation.

Look at something in their suite, then watch the same file on a phone on a park bench at noon on a sunny day, or on the same phone lying in your bed in a dark room at night. You will see three different images.

Right.

And even between monitors, if one is set to say around 230 nits in a moderately dark room, and another is up over 300 in a much brighter room, that file will have completely different 'feel' for the black level & saturation.

So between the Apple non-standard Rec.709, the potential issues of screens set or not set to full/legal levels correctly, the wide range in screen brightnesses and room/viewing brightness, pro colorists know there is only one guarantee for their carefully crafted work:

No one watching their work will ever, ever! ... see exactly what they saw in their suite. Period.

Not in a movie theater, not via streaming or broadcast, nor even via DVD/BluRay disc. Ever. Period.

That's ... image post processing.

Neil