Mouths mysteriously won't move.

Hi,

This is my first time using the 2019 update... our company updated around May-June of 2019 (way late), but the version I'm currently on is Version 2.1 (build 140).

*Real quick* I had an initial issue which was that the .ChProj would not open from our server. This was not an issue in 2018 Ch.Anim, but now we get an error message telling us we have to put it on our drive or desktop. Thoughts?

*ACTUAL ISSUE*

So once the project is open (from desktop), everything seems to be in working order. However, when I sync new audio in a new scene, although it DOES produce visemes, there is no mouth movement upon playback. I have checked all the settings, and tags, etc... This was a working, functioning puppet and project the last time it was opened and used, and no changes have been made except for the update.

What could this possibly be, given that everything appears correct, and hasn't been touched since before our update?

Thank you.

~Andrew

PS: Some helpful additional info... I opened Old scenes that have already been animated and exported successfully, and they do not play back either. All other triggers, face, and eye gaze behaviors appear to work normally.

The old scenes not working might be of interest to DanTull

If I understand correctly, you are saying you get visemes in the timeline, but the mouth is not moving.

I think some of the behaviors have changed in how scoping works. I would find the face behavior and expand the “handles” and “views” sections. This shows what the behavior is bound to. My guess is you have multiple profiles but they no longer all link up correctly.

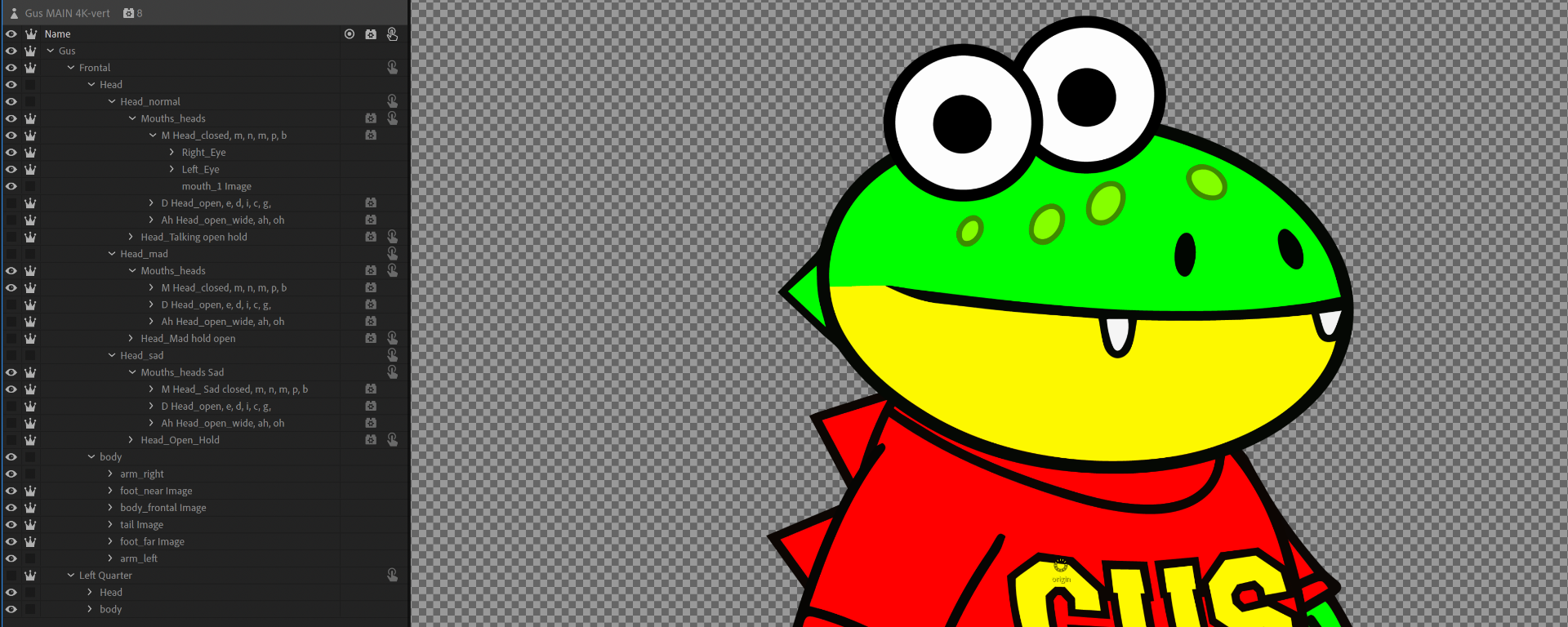

Could you share a screenshot of the rigging hierarchy of the puppet?

Does your puppet have profiles? There have been changes there (e.g., the walk behavior understands more profiles than before).

Hi Andrew, has alank's response helped at all here? Are you still trying to work out the issue?

Or have you found the solution? If you've found the solution please post your answer so others who may come across the same issue will have an idea of how they may be able to fix it.

I have not figured out the issue, but we were able to reinstall 2018 in order to deliver.

In regards to Alan's response, yes the compute lip sync feature produced visemes in the timeline, but the mouth wouldn't move on playback. I'm not quite sure what is meant by scoping, so I'll have to look into it.

The puppet does not yet have a walk cycle set up. There's a "frontal" and a "left quarter" profile (which is essentially a mirrored frontal), though the left quarter isn't being used for the current project.

It must be some change in the hierarchy for 2019 that doesn't work with my rig. I experimented with different methods, with no success. I'll send screenshots soon.

Glad you were able to install the previous version to get you sorted on this occasion.

It would be great to keep this discussion going in case there is an ongoing issue which the developers need to review with current updates.

Under "Head" there are 3 emotions (Normal, sad, mad).

Each emotion has a set of mouth heads for lip syncing, and a set of mouth heads for "holding open" the mouth w/cycle layers trigger.

Under each set of mouth heads are 3 mouth heads (closed, open, wide open), each of which is assigned to various mouth characters (Aa, Ee, Oo, etc.).

All of this is then applicable to the qtr left turn, which is not being used.

Here's a screenshot I took during playback, where the playhead is clearly over a viseme that should produce an open mouth, but does not.

BTW, the screenshot you posted won't show the viseme from the timeline if your puppet is armed and the lipsync behavior is also armed. That's because it needs to be able to respond to the live input. Ideally we'd add some sort of playback vs. rehearsal mode to make this simpler.

Thanks,

Dan Ramirez

CH QA

Funky puppet! 😉

My suggestion: in the lip sync behavior (or was it face?) there is a View or Handles section with a little triangle next to it to expand the section. This will reveal which layers have been bound to different visemes. I would look to see which layers you expected to get bound to visemes but were not.

The rigging hierarchy is fairly complex. I am wondering if the auto tagging rules were changed, or if the scoping code is only picking up one set of visemes and not all of them. If the latter, you may need to add a lip sync behavior per profile? Then you might need to make sure you record the right lip sync behavior. But I'm not sure.

Happy to have a play with the puppet if you are willing to share it and cannot work it out. But certainly this rigging is a bit more interesting than a typical puppet. Not wrong, just different. It might need fiddling with the puppet itself as a result.

If you can post the project, I'll take a look. You can do that in this thread, or send me a PM.

Thanks,

Dan Ramirez

CH QA