Replace limb IK with Midi simulation?

Hi, I am experimenting with advanced scenarios for controlling Character Animator. Recently, I started moving away from limb IK (arms) toward MIDI control, and I find it much easier to control the character this way when the Character Animator window is not in focus.

For example, I use Unreal Engine to build a 3D room that my character lives inside.

Problems with this setup:

1) When I switch cameras and shoot the character from behind, I need to swap the ears, hide the eyes, etc. Solved with an AutoIt script: AutoIt has a “ControlSend” command that sends a keypress to a specific control of any open program. So I just sync the button press between Unreal Engine and Character Animator and swap all the required elements.

2) Wrist swap required. I need to switch the wrist based on the elbow angle so the thumb looks right. Not solved yet, but I have an idea: we can send keypresses to Character Animator as in #1, and we can control the hand with a MIDI slider. If we develop a small program that can read the value of my MIDI slider, I can code the logic to send the swap keypress based on the MIDI values.

3) Live replay cycles and looping. The idea from #2 can be developed further. What if we had a virtual MIDI controller that simulates MIDI, with record and replay buttons and the ability to save and adjust MIDI values? It seems like that would give me about as much control as possible.
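
The slider logic in #2 could start as a small state machine. Below is a minimal sketch, assuming the elbow is driven by a MIDI CC with values 0 to 127; the thresholds and the `WristSwapper` class are hypothetical. Using two separate thresholds (hysteresis) keeps the wrist from flickering when the slider rests near a single cut-off point.

```python
from typing import Optional

# Hypothetical thresholds on the elbow slider's 0-127 MIDI CC value.
# The gap between them (hysteresis) stops the wrist from flickering
# when the slider rests near one cut-off point.
FLIP_IN_ABOVE = 70
FLIP_OUT_BELOW = 57


class WristSwapper:
    """Tracks the current wrist state and reports when a swap is needed."""

    def __init__(self, state: str = "out") -> None:
        self.state = state

    def update(self, cc_value: int) -> Optional[str]:
        """Return the new state ("in" or "out") if it changed, otherwise None."""
        if self.state == "out" and cc_value > FLIP_IN_ABOVE:
            self.state = "in"
            return self.state
        if self.state == "in" and cc_value < FLIP_OUT_BELOW:
            self.state = "out"
            return self.state
        return None


if __name__ == "__main__":
    swapper = WristSwapper()
    for value in [40, 65, 72, 68, 60, 50, 75]:
        change = swapper.update(value)
        if change is not None:
            print(f"slider={value}: send the swap key for wrist '{change}'")
```

Here the loop reports swaps only at slider values 72, 50 and 75. In a real setup, each reported change would be turned into the swap keypress (for example through AutoIt) or into a MIDI note.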

My question to the community: does anyone know a good way to work with MIDI like this? Should I go straight for something custom, or is there software or hardware you can think of that does something similar? I would rather buy than develop something that already exists.

Let me know if there are good workflows for automating MIDI. I have seen cases like controlling servers with MIDI, but the only tool I found works only with PowerShell and lags up to 20 to 30 seconds behind.

If you are interested in details on how I use MIDI

Details on “ControlSend” from AutoIt

Cool stuff! In case it is of any use, I played with MIDI a few years back. The blog post has a link to some Unity C# code I was playing with. It converted HTC Vive (VR) controller positions into MIDI events, which got sent to Character Animator. https://extra-ordinary.tv/2018/09/28/midi-and-adobe-character-animator/

I have not touched the code in a few years - buyer beware! 😉

Nice, it looks like it may just work for sending signals, so looping animation should be straightforward. It would be nice to wrap some recording around it and hook it into Unreal Engine. I will post if I manage to develop it into something useful.

The cool part is that it records joint angles, so recorded MIDI should work across different characters with different limb sizes. I have 40 characters planned, so this should be a neat speed-up in prepping the puppets.
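
Why joint angles transfer between puppets can be shown in a few lines. This is a minimal sketch, not the code from the blog post: `joint_angle` is a hypothetical helper that takes two tracked points in image coordinates and returns the bone's direction. Stretching the bone changes its length but not its angle, so the same recorded value fits a short-armed and a long-armed puppet.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(parent: Point, child: Point) -> float:
    """Direction of the bone from parent to child, in degrees in [0, 360).

    Points are (x, y) in image coordinates, where y grows downward, so dy is
    negated to make the angle counterclockwise-positive as seen on screen.
    """
    dx = child[0] - parent[0]
    dy = -(child[1] - parent[1])
    return math.degrees(math.atan2(dy, dx)) % 360.0


if __name__ == "__main__":
    shoulder = (100.0, 100.0)
    short_elbow = (130.0, 70.0)  # short upper arm
    long_elbow = (190.0, 10.0)   # same direction, three times longer
    print(joint_angle(shoulder, short_elbow))
    print(joint_angle(shoulder, long_elbow))
```

Both lines print the same angle, which is the property that lets one recording drive many characters.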

Wow this is impressive stuff!

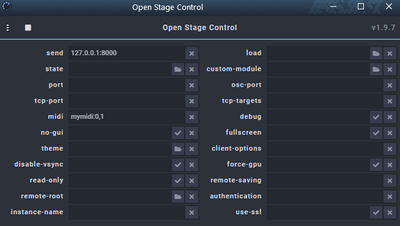

Hi, quick update. There is no need to go fully custom: there is an amazing project called Open Stage Control.

https://openstagecontrol.ammd.net/

It lets you:

1) Create a custom layout.

2) Choose from/to values for knobs and a step size (if you want more of a stop-motion look, make the steps further apart so the motion is snappier).

3) Use a scripting section. I have not explored it much yet, but it looks like sending a MIDI note to flip the wrist based on the elbow angle is on the table.
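
The step setting in item 2 amounts to clamping a value into the knob's range and snapping it to the nearest step. A minimal sketch of that idea, assuming a 0 to 127 MIDI range; `quantize` is a hypothetical helper, not Open Stage Control's own code:

```python
def quantize(value: float, lo: float, hi: float, step: float) -> float:
    """Clamp value into [lo, hi], then snap it to the nearest lo + k * step."""
    if step <= 0:
        raise ValueError("step must be positive")
    clamped = min(max(value, lo), hi)
    snapped = lo + round((clamped - lo) / step) * step
    # Snapping up can overshoot hi when the range is not a whole number of steps.
    return min(snapped, hi)


if __name__ == "__main__":
    # A coarse 16-unit step over the 0-127 MIDI range gives a snappier look.
    for raw in [3, 37, 70, 126]:
        print(raw, "->", quantize(raw, 0, 127, 16))
```

Python's `round` sends exact ties to the even neighbour, which does not matter much for this purpose. Larger steps mean fewer distinct poses, which is what produces the stop-motion feel.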

It is a bit tricky to set up the first time, but nothing major. Here are the settings I used to send a test message to Character Animator:

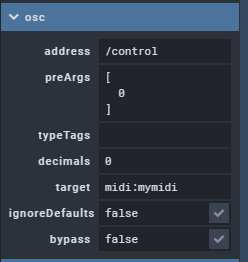

When you add knobs and other controls, the main properties we care about are:

1) address

2) preArgs

3) target from the settings in the screenshot above

I also tested attaching knobs to existing MIDI values, so they use the same ones the physical controller uses. All in all, this looks like an app worth investigating.
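
To see what the address and preArgs fields end up meaning on the wire, here is a sketch that turns them into a raw 3-byte MIDI message. It assumes the convention where `/control` carries channel, controller number and value and `/note` carries channel, note and velocity, with channels numbered 1 to 16; please check the Open Stage Control MIDI documentation before relying on this. `to_midi_bytes` is a hypothetical name.

```python
from typing import List

# Status bytes for the two message types used here (channel bits added below).
STATUS = {"/note": 0x90, "/control": 0xB0}


def to_midi_bytes(address: str, pre_args: List[int], value: int) -> List[int]:
    """Build a raw 3-byte MIDI message from an address, preArgs and widget value.

    Assumes pre_args is [channel, number] with channel in 1-16 and number being
    the note or controller number; the widget value becomes the last byte.
    """
    if address not in STATUS:
        raise ValueError(f"unsupported address: {address}")
    channel, number = pre_args
    if not 1 <= channel <= 16 or not 0 <= number <= 127:
        raise ValueError("channel must be 1-16 and number 0-127")
    data = max(0, min(127, int(value)))  # clamp to the 7-bit data range
    return [STATUS[address] | (channel - 1), number, data]


if __name__ == "__main__":
    print(to_midi_bytes("/control", [1, 10], 64))  # a knob on CC 10
    print(to_midi_bytes("/note", [1, 81], 127))    # a button on note 81
```

The example prints `[176, 10, 64]` and `[144, 81, 127]`. The `target` field only decides which MIDI port the bytes go to; it does not change them.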

Hi, one more update. I figured out a way to use a webcam to track hands and use hand gestures to control Character Animator. I recorded a video of a basic gesture: flipping the wrist triggers a swap set.

Let me know if any of these use cases are interesting or if you have ideas for what else to do. Next, I am going to explore using facial expressions for character control, but that probably will not be as easy to replicate as this one.
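
One way to detect the wrist flip from webcam hand tracking is to look at the winding of three landmarks. MediaPipe Hands numbers the wrist 0, the index-finger base 5 and the pinky base 17, and reports normalized (x, y) positions with y growing downward. The sketch below is an assumed approach, not necessarily the one in the video: `palm_side` is hypothetical, and which sign means "palm in" depends on left vs right hand and on whether the frame is mirrored, so calibrate it once per setup.

```python
from typing import Tuple

Point = Tuple[float, float]

# MediaPipe Hands landmark indices used here.
WRIST, INDEX_MCP, PINKY_MCP = 0, 5, 17


def palm_side(wrist: Point, index_mcp: Point, pinky_mcp: Point, eps: float = 1e-6) -> int:
    """Return +1 or -1 for the two palm orientations, or 0 when the hand is edge-on.

    The sign of the 2D cross product (index_mcp - wrist) x (pinky_mcp - wrist)
    tells whether the three points wind clockwise or counterclockwise in the
    image. Turning the wrist over mirrors that winding, so the sign flips.
    """
    ax, ay = index_mcp[0] - wrist[0], index_mcp[1] - wrist[1]
    bx, by = pinky_mcp[0] - wrist[0], pinky_mcp[1] - wrist[1]
    cross = ax * by - ay * bx
    if abs(cross) < eps:
        return 0
    return 1 if cross > 0 else -1


if __name__ == "__main__":
    # Normalized (x, y) points, y growing downward, keyed by landmark index.
    hand = {WRIST: (0.5, 0.8), INDEX_MCP: (0.4, 0.5), PINKY_MCP: (0.6, 0.5)}
    print(palm_side(hand[WRIST], hand[INDEX_MCP], hand[PINKY_MCP]))
    print(palm_side((0.5, 0.8), (0.6, 0.5), (0.4, 0.5)))  # wrist turned over
```

A change of sign between frames is the moment to fire the swap-set trigger; the 0 case can simply be ignored so an edge-on hand does not cause flicker.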

Innovative ideas! Yes, hands or motions being a way to initiate triggers is definitely something that has come up recently as people are testing out the body tracking beta and are unable to reach their keys.

Sounds good, I will make the next one with a front camera and the body tracking behavior. In terms of gestures, I am thinking:

1) Two-hand wrist tracking: one wrist signals that we want to fire a trigger (e.g. ignore both wrists unless it shows the Spider-Man sign). This is probably required so that things do not get triggered at random. With a top-down camera we can simply position it so the hands stay out of frame; with a front camera and full-body tracking we need to be more careful.

2) The second hand can then trigger: 1 finger, 2 fingers, 3 fingers, thumbs up, Spider-Man.
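
The two-hand scheme above can be sketched with a common finger-counting heuristic: a finger counts as raised when its tip is above its middle (PIP) joint in the image. This is an assumption, not the author's code. It uses MediaPipe Hands landmark indices, assumes an upright hand, and ignores the thumb, so thumbs up is left out and index plus pinky stands in for the Spider-Man sign. `extended_fingers`, `gesture`, `gated_trigger` and the trigger names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe Hands indices: (fingertip, PIP joint) for each non-thumb finger.
FINGERS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}

# Hypothetical mapping from raised fingers to trigger names.
GESTURES = {
    ("index",): "one",
    ("index", "middle"): "two",
    ("index", "middle", "ring"): "three",
    ("index", "pinky"): "spider",
}


def extended_fingers(landmarks: Sequence[Point]) -> List[str]:
    """Names of fingers whose tip is above their PIP joint (y grows downward)."""
    return [name for name, (tip, pip) in FINGERS.items()
            if landmarks[tip][1] < landmarks[pip][1]]


def gesture(landmarks: Sequence[Point]) -> Optional[str]:
    """Look up the raised-finger pattern; None means 'no trigger'."""
    return GESTURES.get(tuple(extended_fingers(landmarks)))


def gated_trigger(arming_hand: Sequence[Point], trigger_hand: Sequence[Point]) -> Optional[str]:
    """Fire only while the arming hand shows the 'spider' sign (item 1 above)."""
    if gesture(arming_hand) != "spider":
        return None
    return gesture(trigger_hand)
```

Each hand is a list of 21 (x, y) points. The gate in `gated_trigger` is what keeps random hand movement from firing triggers.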

Any thoughts on what else to add? I am thinking that touching two fingers together could be a trigger, and then the distance between the fingers could be translated into a knob value. But those seem more involved, and I want to keep this side of the project easy enough for a random person to try.
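
The finger-distance idea could be sketched like this. It is an assumed approach using MediaPipe Hands landmark indices (thumb tip 4, index tip 8, wrist 0, middle-finger base 9). The gap is divided by palm length so that moving closer to the camera does not change the value. `pinch_to_cc` and its `closed`/`opened` calibration ratios are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]

# MediaPipe Hands landmark indices.
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def pinch_to_cc(landmarks: Sequence[Point], closed: float = 0.2,
                opened: float = 1.2) -> Optional[int]:
    """Map the thumb-index gap to a 0-127 knob value.

    The gap is divided by the wrist-to-middle-knuckle length, so moving the
    hand closer to or further from the camera does not change the result.
    `closed` and `opened` are hypothetical calibration ratios.
    """
    palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if palm == 0:
        return None  # degenerate detection; skip this frame
    ratio = math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP]) / palm
    t = (ratio - closed) / (opened - closed)
    return round(min(max(t, 0.0), 1.0) * 127)
```

Combined with a gate like the one in item 1, the value would only be sent while the other hand "arms" the control.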

Currently, the most requested hand positions I know of (besides the default open hand) are a) palm swap (thumb facing the opposite way), b) point, and c) fist.

Here is how things look with the front camera. In this walkthrough, I swap the eyebrows, but nothing would stop people from doing the same thing with a wrist.

https://www.youtube.com/watch?v=rxdnlg4utm4

Awesome!

Hi,

Just to bring the topic to a conclusion: I tried sending the joint angles to Character Animator, and it seems to work fine as a proof of concept. The part I like best is the option to record and replay tracked joints and apply them to a character of a different size.

Check it out, and let me know if anyone has thoughts or tries to make it work. I will be glad to help.
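
A minimal sketch of the record-and-replay idea, assuming each tracked joint is already sent as a raw MIDI message (a list of ints). `MidiRecorder` is hypothetical, and `send` stands for whatever actually emits MIDI, for example python-rtmidi's `MidiOut.send_message`.

```python
import time
from typing import Callable, List, Optional, Tuple

Message = List[int]


class MidiRecorder:
    """Stores MIDI messages with their time offset and plays them back in order."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._start: Optional[float] = None
        self.events: List[Tuple[float, Message]] = []

    def record(self, message: Message) -> None:
        """Store a copy of the message with seconds elapsed since the first one."""
        now = self._clock()
        if self._start is None:
            self._start = now
        self.events.append((now - self._start, list(message)))

    def replay(
        self,
        send: Callable[[Message], None],
        sleep: Callable[[float], None] = time.sleep,
        speed: float = 1.0,
    ) -> None:
        """Send every stored message, waiting between them to keep the timing.

        speed > 1 plays faster, speed < 1 slower.
        """
        previous = 0.0
        for offset, message in self.events:
            wait = (offset - previous) / speed
            if wait > 0:
                sleep(wait)
            previous = offset
            send(message)
```

Because the recorded values are joint angles rather than pixel positions, the same take can be replayed onto a puppet with different limb lengths, as long as its knobs use the same range. Calling `replay` repeatedly gives a loop. Sleeping between events drifts slightly over long takes; scheduling against `time.monotonic()` would be more precise.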

I made it easier to try out. There is no longer a need for a complicated setup, both arms work, and it runs in real time.

To run it, you will need to prepare your puppet.

Here is the rigging:

1) Arms Top - the top arm layer is not independent. It is used to connect the arms to the body.

2) Left Arm - forearm art plus all nested layers. The dot of this layer (its origin) represents the shoulder. Add a Transform behavior; we will use its rotation so that the forearm and the nested wrist move together.

3) Left Shoulder - the art from the shoulder to the forearm.

4) Left Forearm - elbow art plus all nested layers. Same as #2, but only for the elbow and wrist, with its own Transform behavior.

5) Wrist - if you want the wrists to flip automatically, the wrist layer should have a swap set with the thumb on the opposite side.

Refer to the picture for details: on the right you can see where to add the Transform behavior, and on the left what to make independent.

You will also need to prepare a "control" panel: add the character to a scene and go to Controls.

Grab:

1) Rotation of the shoulder

2) Rotation of the wrist

Refer to the picture for how to set the minimum and maximum knob positions.

Start with the joint pointing left, then move it counterclockwise until it completes a full circle.
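
The knob calibration above implies a simple mapping from an on-screen joint angle to a knob value. A sketch, assuming the knob runs 0 to 127 (the 7-bit MIDI CC range) and that "counterclockwise" means as seen on screen; `angle_to_cc` is hypothetical:

```python
def angle_to_cc(angle_deg: float, cc_max: int = 127) -> int:
    """Map an on-screen angle to a knob value.

    angle_deg is measured counterclockwise from pointing right (0 = right,
    90 = up, 180 = left). The knob minimum is "pointing left", and one full
    counterclockwise turn runs up to cc_max, matching the setup above.
    """
    from_left = (angle_deg - 180.0) % 360.0
    return round(from_left / 360.0 * cc_max)


if __name__ == "__main__":
    for name, angle in [("left", 180.0), ("down", 270.0), ("right", 0.0), ("up", 90.0)]:
        print(name, angle_to_cc(angle))
```

This prints 0 for left, 32 for down, 64 for right and 95 for up. If the knob's minimum and maximum in Character Animator are set differently, the offset and direction here would need to change to match.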

The character setup should now be done. To run body tracking, you will need to download the file:

It is a Python 3 script, so you will need Python 3 installed, and you will need to run "pip install" to get the libraries: cv2, mediapipe, and rtmidi (the cv2 module is installed with the opencv-python package, and rtmidi is most commonly provided by python-rtmidi).

You will also need to install loopMIDI and add a virtual MIDI port.

After this, you should be good to go. The Python files should not require adjustments, although you may want to change which camera or loopMIDI port is used. You can do that here:
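
Instead of hard-coding a port number, a script could look the loopMIDI port up by name. This is a sketch, not the author's file: `find_port` is hypothetical and the port names in the test are made-up examples. `MidiOut`, `get_ports` and `open_port` are python-rtmidi calls. In OpenCV, the camera is chosen by the integer passed to `cv2.VideoCapture`.

```python
from typing import List


def find_port(port_names: List[str], wanted: str = "loopMIDI") -> int:
    """Return the index of the first port whose name contains `wanted` (case-insensitive)."""
    for index, name in enumerate(port_names):
        if wanted.lower() in name.lower():
            return index
    raise LookupError(f"no MIDI port matching {wanted!r} in {port_names}")


if __name__ == "__main__":
    try:
        import rtmidi  # provided by the python-rtmidi package
    except ImportError:
        print("python-rtmidi is not installed")
    else:
        midi_out = rtmidi.MidiOut()
        port_index = find_port(midi_out.get_ports())
        midi_out.open_port(port_index)
        print("opened", midi_out.get_ports()[port_index])
```

Matching by name keeps working when Windows reorders MIDI devices, which is a common reason a fixed port index stops pointing at loopMIDI.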

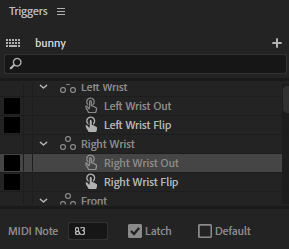

Oh, and to flip the wrists automatically, you will need to assign specific MIDI notes to the wrists:

1) Left Out - Midi Note 81

2) Left In - Midi Note 80

3) Right Out - Midi Note 82

4) Right In - Midi Note 83

You also need to check "latch" for these triggers.
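
The note assignments above could be driven like this. It is a sketch under assumptions: MIDI channel 1 and velocity 100 are guesses, `WristFlipper` and `tap` are hypothetical, and it assumes that with "latch" checked one key tap keeps the chosen swap-set layer showing. Re-sending the same note is avoided in case it would toggle the latched trigger off.

```python
from typing import Dict, List, Optional

# Note numbers from the list above.
WRIST_NOTES = {
    ("left", "out"): 81,
    ("left", "in"): 80,
    ("right", "out"): 82,
    ("right", "in"): 83,
}


def tap(note: int, channel: int = 1, velocity: int = 100) -> List[List[int]]:
    """A note-on followed by a note-off, like pressing and releasing a key."""
    return [[0x90 | (channel - 1), note, velocity], [0x80 | (channel - 1), note, 0]]


class WristFlipper:
    """Emits a tap only when a wrist actually changes state."""

    def __init__(self) -> None:
        self.current: Dict[str, str] = {}

    def update(self, side: str, direction: str) -> Optional[List[List[int]]]:
        """Return the messages to send for this change, or None if nothing changed."""
        if self.current.get(side) == direction:
            return None
        self.current[side] = direction
        return tap(WRIST_NOTES[(side, direction)])
```

Each returned message can be passed to an rtmidi `send_message` call, one after the other.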

Hi,

One more update. This use case covers automatically flipping the arm when we move the knob. There is some code in the scripting tab of the Open Stage Control knobs, but it is very light.

If anyone tries it, let me know how it went.
