Lightroom 4.2 very poor CPU usage

Lightroom 4.2 seems reasonably fast when I work with it, whether it's browsing photos or adjusting sliders, although it takes several seconds to go into develop mode after launching it for the first time.

But now I'm exporting 1498 photos that are 5184 by 3456 and it's taking quite a while; I would say about an hour or more. This is on a brand new system I just assembled, consisting of an i7-3930K with 32 GB of RAM, that flies with every other program. While exporting this batch I opened Task Manager and noticed that CPU usage never goes to 100%, not even close. There are peaks of 50%, but on average it must be in the 20s.

This is very disappointing on a CPU that has 6 physical cores and 12 logical cores with hyperthreading, running at 3.2 GHz with turbo at 3.8 GHz. The batch is exporting these photos from one SATA 6 Gb/s drive to another SATA 6 Gb/s drive, and the HD LED barely lights up, so I know the hard drives are not the bottleneck. So I'm wondering, is Lightroom 4.2 really that bad when it comes to taking advantage of the CPU cores? Is there anything I can do to make it use the CPU more?

Thanks,

Sebastian

Sebasvideo wrote:

I would prefer not to turn off hyperthreading, since part of the reason I spent $1300 on a system upgrade was to get that feature, and it's not practical. Even if it worked, I would have to reboot every time I need to do a long export: go into the BIOS, turn off HT, do the export, reboot, turn it back on. Too much trouble.

From doing a lot of reading and from my experience with Win7x64, hyperthreading seems to be more of a marketing exercise than a performance improvement! My 6-core i7-3930K runs faster and cooler with hyperthreading off for everything. The virtual cores are not real cores, but just 6 extra queues for the 6 real cores. They might save a little time when the 6 cores have a slack moment, but some programs simply don't like them, and a lot of people in the past have recommended turning HT off. As I said, my CPU runs several degrees cooler and no slower with HT off all the time. It is not a 'must have' feature!

Bob Frost

bob frost wrote:

Sebasvideo wrote:

I would prefer not to turn off hyperthreading, since part of the reason I spent $1300 on a system upgrade was to get that feature, and it's not practical. Even if it worked, I would have to reboot every time I need to do a long export: go into the BIOS, turn off HT, do the export, reboot, turn it back on. Too much trouble.

From doing a lot of reading and from my experience with Win7x64, hyperthreading seems to be more of a marketing exercise than a performance improvement! My 6-core i7-3930K runs faster and cooler with hyperthreading off for everything. The virtual cores are not real cores, but just 6 extra queues for the 6 real cores. They might save a little time when the 6 cores have a slack moment, but some programs simply don't like them, and a lot of people in the past have recommended turning HT off. As I said, my CPU runs several degrees cooler and no slower with HT off all the time. It is not a 'must have' feature!

Bob Frost

I do have hyper-threading present in the Develop module.

I do not have hyper-threading present in the Library module.

Until someone can tell me how to activate and deactivate hyper-threading on OS X (10.8.2), I can't do the appropriate experiment.

Hyperthreading OFF: sudo nvram SMT=0

Hyperthreading ON: sudo nvram -d SMT

& reboot.

ref: http://grey.colorado.edu/emergent/index.php/Hyperthreading

(that's for 10.7, I assume same for 10.8, but dunno...)

~R.

bob frost wrote:

From doing a lot of reading and from my experience with Win7x64, hyperthreading seems to be more of a marketing exercise than a performance improvement! My 6-core i7-3930K runs faster and cooler with hyperthreading off for everything. The virtual cores are not real cores, but just 6 extra queues for the 6 real cores. They might save a little time when the 6 cores have a slack moment, but some programs simply don't like them, and a lot of people in the past have recommended turning HT off. As I said, my CPU runs several degrees cooler and no slower with HT off all the time. It is not a 'must have' feature!

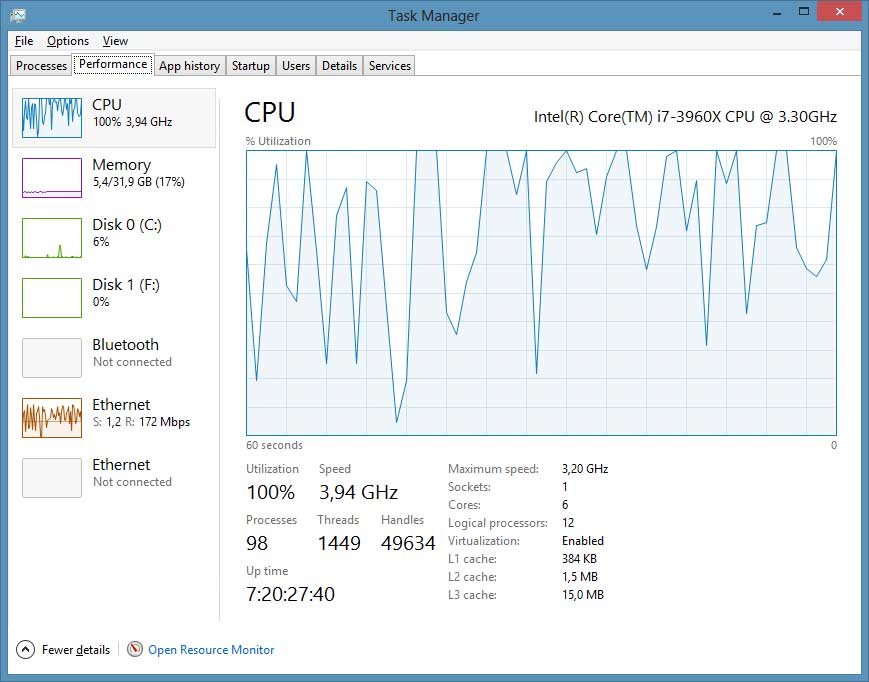

That may be the case for six-core and higher core-count systems, but not so with my i7-860 quad core system running LR4 on Windows 7:

http://forums.adobe.com/message/4661813#4661813

Excerpt from above post:

"To verify how much hyper-threading helps LR4 I ran benchmarks with hyper-threading enabled and disabled. Interestingly the benchmarks were almost identical, but LR lost its responsiveness in both the Develop and Library modules when 1:1 previews were being built or images were being exported.

With hyper-threading enabled all eight threads never reached 100% utilization (see above screenshot). With hyper-threading disabled utilization rose to near 100% for all four cores during 1:1 preview building and during exports. This may be one (1) of the reasons why LR becomes sluggish on systems without hyper-threading."

Message was edited by: trshaner - Added quoted text by Bob Frost.

bob frost wrote:

From doing a lot of reading and from my experience with Win7x64, hyperthreading seems to be more of a marketing exercise than a performance improvement! My 6-core i7-3930K runs faster and cooler with hyperthreading off for everything. The virtual cores are not real cores, but just 6 extra queues for the 6 real cores. They might save a little time when the 6 cores have a slack moment, but some programs simply don't like them, and a lot of people in the past have recommended turning HT off. As I said, my CPU runs several degrees cooler and no slower with HT off all the time. It is not a 'must have' feature!

I wouldn't be so sure. In Lightroom disabling HT seems to help, but the gain is minimal, unless you have tens of thousands of photos to export. In other programs, however, it degrades performance. I ran some benchmarks.

Lightroom 4.2

(34 photos, 5184 by 3456 from a Canon 60D in RAW at the highest quality, all of them with many develop settings applied, export to JPEG 100% quality to a different hard drive)

With HT: 1:13.4 mins

Without HT: 1:08.9 mins

After Effects CS6 11.0.2.12

(30 second composition with several layers and effects applied)

With HT: 1:15 mins

Without HT: 1:20 mins

Cinebench

OpenGL

With HT: 53.49 fps

Without HT: 54.85 fps

CPU

With HT: 11.44

Without HT: 9.48

So at the CPU level, programs can take advantage of hyperthreading if they are properly designed. Hopefully the Lightroom team will address this in the near future.
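To put the four results on one scale, here is a minimal Python sketch that turns each pair of numbers into "percent gained by enabling HT". The times and scores are copied from this post (1:13.4 is taken as 73.4 seconds, and so on); the function name and the dictionary layout are only illustrative assumptions.

```python
# Benchmark figures copied from the post; times are converted to seconds.
# Each entry: (with HT, without HT, lower_is_better)
benchmarks = {
    "Lightroom 4.2 export (s)": (73.4, 68.9, True),
    "After Effects CS6 render (s)": (75.0, 80.0, True),
    "Cinebench OpenGL (fps)": (53.49, 54.85, False),
    "Cinebench CPU (score)": (11.44, 9.48, False),
}


def ht_gain_percent(ht_on, ht_off, lower_is_better):
    """Percent by which enabling HT improves the result (negative means HT hurts)."""
    if lower_is_better:
        # For times, compare speeds: a shorter time is a faster run.
        return (ht_off / ht_on - 1.0) * 100.0
    return (ht_on / ht_off - 1.0) * 100.0


for name, (ht_on, ht_off, lower_is_better) in benchmarks.items():
    gain = ht_gain_percent(ht_on, ht_off, lower_is_better)
    print(f"{name}: {gain:+.1f}% with HT enabled")
```

On these numbers the Lightroom export comes out roughly 6% slower with HT on, while the Cinebench CPU score is roughly 21% higher, which matches the conclusion that the benefit depends on how the program is written.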

As far as I know, the lower CPU usage is useful when you export in the background and keep retouching while the export runs. There is, however, a way to force more CPU usage.

Lightroom scales pretty well when you run multiple exports at a time. I have a PC with Windows 8 and a Core i7-3960X here, and when I have to export a few hundred RAW files to JPEG, I separate them into even groups.

For example, say I have to export 450 RAW files. To do it faster I select 150 of them and launch the export dialog. After the export starts I select 150 more and run the export dialog again, and then again with the last 150 files. This gives my PC a good load, and the increase in output performance is about 35-40 percent.
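Working out the even groups is simple arithmetic, sketched below in Python. This only plans the groups; each export still has to be started by hand from the export dialog as described. The function name and the file names are hypothetical.

```python
def split_into_batches(items, batch_count):
    """Split items into batch_count contiguous groups whose sizes differ by at most one."""
    if batch_count < 1:
        raise ValueError("batch_count must be at least 1")
    base, extra = divmod(len(items), batch_count)
    batches = []
    start = 0
    for index in range(batch_count):
        # The first `extra` batches take one additional item each.
        size = base + (1 if index < extra else 0)
        batches.append(items[start:start + size])
        start += size
    return batches


# Hypothetical file names standing in for a 450-photo selection.
files = [f"IMG_{number:04d}.NEF" for number in range(1, 451)]
for batch in split_into_batches(files, 3):
    print(f"{batch[0]} .. {batch[-1]} ({len(batch)} files)")
```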

You can use 3, 5, 6 or more export processes at a time if your computer can handle it. For example, yesterday I exported in 9 threads and the CPU was at 100% the whole time; LR took about 10.5 GB of RAM during the process.

I've done a special benchmark for this post. Exporting 500 NEF files (Nikon D4) to JPEG at 90% quality took me exactly 9 minutes and 14 seconds. The source RAW files were located on a NAS device connected over Gigabit LAN. So simple math gives a rendering speed of about 0.9 photos per second (500 files in 554 seconds).

I would expect any export operation to be bottlenecked by the performance of I/O operations, not by CPU or memory performance. That means it is perfectly normal to observe fairly low CPU loads during export operations. If you somehow manage to create a very CPU-intensive export operation, run several of them in parallel, and use a very fast I/O destination (SSD or RAM disk), then it might be possible to saturate the CPU(s). But for a normal everyday export operation I would expect I/O to be the bottleneck and CPU loads to remain low.

A fast I/O system is a good choice, but it does not influence the export much, as the source and destination files aren't that big (20-50 MB per RAW, 5-10 MB per JPEG). Disk I/O is critical for the LR database, as it uses thousands of folders and files. So a fast SSD is a must-have for the LR database location, but not for RAW storage and export.
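A rough back-of-the-envelope check of that claim, as a Python sketch. It assumes the upper end of the file sizes quoted here (50 MB per RAW, 10 MB per JPEG) and the 500-file export that took 9 minutes 14 seconds (554 seconds); the function name is made up for illustration. It only gives average sustained rates and says nothing about seek latency or many small catalog files.

```python
def required_throughput_mb_s(file_count, mb_per_file, total_seconds):
    """Average transfer rate needed to move file_count files in total_seconds."""
    return file_count * mb_per_file / total_seconds


# Assumed worst case: 500 files, 50 MB read and 10 MB written per photo, 554 s total.
read_rate = required_throughput_mb_s(500, 50, 554)
write_rate = required_throughput_mb_s(500, 10, 554)
print(f"read  ~{read_rate:.1f} MB/s")
print(f"write ~{write_rate:.1f} MB/s")
```

Even with these pessimistic sizes the average read rate stays under 50 MB/s, well below the 125 MB/s theoretical ceiling of a 1 Gbit/s link.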

BTW, I've done one more experiment – exported the same 500 NEF files from Nikon D4 in 1, 3, 5 and 9 threads. The results are impressive:

1 thread – 14m17s = 857 sec total export time

3 threads – 9m14s = 554 sec

5 threads – 7m56s = 476 sec

9 threads – 7m27s = 447 sec

So in total we have an almost 2x increase in export performance while using more threads.
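The speedup can be worked out directly from the four timings. The Python sketch below uses the numbers from this post (500 files; 857, 554, 476 and 447 seconds); the function name is an assumption, and the "threads" are treated as parallel export jobs.

```python
def export_summary(timings_s, photo_count):
    """Return speedup and photos/second for each run.

    timings_s maps the number of parallel export jobs to total seconds.
    The run with the fewest jobs serves as the baseline.
    """
    baseline_seconds = timings_s[min(timings_s)]
    summary = {}
    for jobs, seconds in sorted(timings_s.items()):
        summary[jobs] = {
            "speedup": baseline_seconds / seconds,
            "photos_per_s": photo_count / seconds,
        }
    return summary


timings_s = {1: 857, 3: 554, 5: 476, 9: 447}
for jobs, row in export_summary(timings_s, 500).items():
    print(f"{jobs} job(s): {row['speedup']:.2f}x, {row['photos_per_s']:.2f} photos/s")
```

The curve flattens quickly: most of the gain arrives by 5 jobs, and going from 5 to 9 saves only another 29 seconds.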

CPU usage on 5 threads:

I've noticed almost no reference to file defragmentation as a performance enhancement. The Lightroom databases (indexes, caches, catalogs, previews, etc.) are built in such a way that they fragment easily. As an example, I had a catalog with 2100 photos reach 1200 fragments after just a few days of editing. On rotating media (HDDs) this will slow access considerably. That is a partial explanation for the slowdown as you progress through a heavy editing session. That, and real memory expansion.

I have found that defragmenting frequently is quite beneficial. I also use a product called PerfectDisk Pro to minimize fragmenting using the Opti-Write feature.

While I haven't bothered to document the value of defragmenting, I can say with some certainty that it is a relatively easy and cheap enhancement.

Hi Bill,

Your catalog was in 1200 pieces (fragments) - how did you decide that? - that sounds extreme.

I almost never defragment my disk, it shouldn't be much necessary if there is ample free-space - right?

Rob

Rob Cole wrote:

I almost never defragment my disk, it shouldn't be much necessary if there is ample free-space - right?

Wrong, as I understand it!

Example:

- Write a block to new file A.

- Write a block to file B - if there's no fragmentation, that goes at the end of the allocated disk space, after file A.

- Write another block to file A. That goes after file B, so file A is now fragmented.
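The three steps above can be mimicked with a toy model in Python. It assumes the simplest possible allocator, one that always places the next block at the end of the used space; real file systems are smarter than this, so treat it only as an illustration of why free space alone doesn't prevent fragmentation. The function name is made up.

```python
def count_fragments(write_order):
    """Count contiguous extents per file when blocks are appended in write order.

    Toy model: every new block lands directly after the previous one, so a file
    gains a new fragment whenever another file was written in between.
    """
    fragments = {}
    previous = None
    for name in write_order:
        if name != previous:
            fragments[name] = fragments.get(name, 0) + 1
        previous = name
    return fragments


# Block to A, block to B, another block to A: file A ends up in two pieces.
print(count_fragments(["A", "B", "A"]))  # {'A': 2, 'B': 1}
```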

However, W7 normally automatically defrags rotating disks (it doesn't defrag SSDs, as they don't need it). Go to drive properties, tools, defrag, and see the defrag schedule. I don't think XP defrags automatically.

I meant on W7+ (dunno about Mac).

Rob Cole wrote:

I meant on W7+ (dunno about Mac).

Yes, sorry we're at cross purposes. On W7 you never normally need to defrag a disk, as Windows does it for you (by default once a week).

CSS Simon wrote:

Windows does it for you (by default once a week).

I thought it did it as a matter of course (meaning: in the course of normal usage, 24x7...) - thanks for correcting me.

R

Disk fragmentation is not an issue on a Macintosh.

Bob_Peters wrote:

Disk fragmentation is not an issue on a Macintosh.

Why is that?

All hard drive file systems are prone to fragmentation. OS X constantly monitors file activity in the background and then "reorganizes" (AKA defragments) files smaller than 20MB into larger areas of free space, to "reduce" the possibility of future fragmentation.

This is also one of the possible causes of LR performance issues with Mac systems, since you have no control over this background activity. It can't be "scheduled" as on a Windows system to defragment ALL files, including those >20MB. Rest assured large files such as the LR Catalog and Camera Raw cache do get fragmented on a Mac system.

The best solution (Mac or Windows) is to put these performance critical LR files on their own partition or even better a separate SSD, which doesn't have fragmentation performance issues (no spinning media).

Rob, I use a utility called SmartDefrag to analyse my HDDs. It gives me a detailed picture of the size of a file and its fragmentation. I have been using it and other system performance monitoring tools to try to figure out why Lightroom is so slow. SmartDefrag gave me the totals, and I was as surprised as you that it was that bad. As to the defragging done by W7, I wouldn't know, because I stopped using that program years ago. It is very slow and ineffective and has been replaced by many freeware and for-a-fee programs such as PerfectDisk http://www.raxco.com/home/home-professional.aspx

I believe a great deal of the performance problems are due to the way Lightroom uses disk storage and defragmentation is one thing that you can do to keep ahead of the game just a little. And you should definitely look into using one of the tools I mentioned to see how bad (or good) your system is.

I think the Lightroom product is an excellent photo editor and I think that Adobe has maximized the use of middleware software such as SQLite to achieve some pretty impressive results. That being said, I also think they have exceeded the capacity of the average user's PC to perform at an acceptable level. What I mean is that Lightroom has overshot the capabilities of the current crop of PCs AND the average user's ability to figure out what's wrong and how to correct it. Maybe it's time for Adobe to be realistic about what the minimum system configuration should be?

Putting high-access files in a new partition will do nothing for performance. A partition is just another location on a disk that must share the same single access path as all other partitions on the drive. Plus, it will do nothing whatsoever to avoid fragmentation or make its effects any less. Even SSDs can have performance problems if too many files are accessed at the same time, as is the case in a highly multithreaded program like Lightroom. The problem is queuing for access and the number of I/O operations per second that the drive can support. I've watched the thread count go from a low of 30 to over 300 during an import. But that's a whole other topic.

BillAnderson1 wrote:

Even SSDs can have performance problems if too many files are accessed at the same time, as is the case in a highly multithreaded program like Lightroom. The problem is queuing for access and the number of I/O operations per second that the drive can support. I've watched the thread count go from a low of 30 to over 300 during an import. But that's a whole other topic.

I use 3 SSDs: one for the system, one for LR's catalog and preview cache, and one for LR's ACR cache (and other programs' caches). I also put an extra pagefile on the third SSD. The images are on a big internal HDD; SSDs are not big enough yet at reasonable prices.

Bob Frost

Bob, you’ve discovered the key to success! Your technique is identical to that used in the mainframe computing environment. You’ve spread out your high access, smaller sized files to different physical pathways. What you’ve done is called storage management in storage industry tech-speak and it is the only way LR users with performance issues will find steady and consistent relief. By adding multiple access points (3 separate SSDs) to your various working datasets you have alleviated the response time issue caused by multiple threads trying to access different files down the same physical pathway.

I promised a demonstration of the effect of multiple threads accessing related data on a single disk. What I did was run a program called RoadKill Disk Speed (I’m not making this up) to test the speed of my main OS HDD. I ran this two-minute test on a completely quiet system and got these results:

Access time 6.62 ms

Cache Speed 321.12MB/second

Max Read Speed 148.64 MB/second

Overall Score 2464.2

I then started a second copy of the tester and ran both of them at the same time in order to judge the interference factor caused by two threads accessing the same drive concurrently. I got these results:

Access time 10.36 ms - increased by 56%

Cache Speed 211.77 MB/second - decreased by 34%

Max Read Speed 57.35 MB/second - decreased by 61%

Overall Score 742 - decreased by 70%
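For anyone who wants to check the arithmetic, here is a small Python sketch using the two sets of readings from this post. Percent change is measured relative to the single-run baseline, so a decrease can never exceed 100%. The dictionary keys and the function name are illustrative assumptions.

```python
def percent_change(before, after):
    """Change from `before` to `after`, as a percentage of `before`."""
    return (after - before) / before * 100.0


# Readings copied from the post: one tester alone vs. two testers at once.
single_run = {"access_ms": 6.62, "cache_mb_s": 321.12, "max_read_mb_s": 148.64, "score": 2464.2}
concurrent_run = {"access_ms": 10.36, "cache_mb_s": 211.77, "max_read_mb_s": 57.35, "score": 742.0}

for metric, before in single_run.items():
    change = percent_change(before, concurrent_run[metric])
    print(f"{metric}: {change:+.1f}%")
```

Put the other way around, the single-run read speed is about 2.6 times the concurrent one (148.64 / 57.35).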

I won’t go into detail about each of these stats, but I think it’s pretty obvious that unless the data can be strategically spread out among several disks there could be potentially serious interference when accessing data from a single source.

Lightroom has begun to expand our capabilities to edit, find and catalogue our photos once they are moved from our cameras to our computers. The volume of the data that represents our photos is growing at a staggering rate. So too is the complexity and the expense of the storage subsystem needed to effectively and efficiently store it all.

So what are we to do? For those of us who can’t afford multiple SSDs (I would if I could) there is at least the option of adding a second or third HDD and spreading the data as Bob has done. I would also strongly suggest an investment of $40 for the Perfect Disk product. At least that is a start toward a total storage management approach to dealing with the vast amount of data created by digital photography. Just sayin’…

BillAnderson1 wrote:

So what are we to do? For those of us who can’t afford multiple SSDs (I would if I could) there is at least the option of adding a second or third HDD and spreading the data as Bob has done.

Have you actually tested LR's performance using two or more hard drives (not SSDs)? What was the measured difference in LR's performance? What file location configuration(s) did you test? I mean no disrespect, just asking. According to Ian Lyons' testing there is only a marginal LR performance improvement even when using multiple SSDs, and only for specific operations:

http://www.computer-darkroom.com/blog/will-an-ssd-improve-adobe-lightroom-performance/

As already mentioned, I currently have two hard drives and have found no measurable difference in LR's performance over a single hard drive, regardless of where I locate the LR Catalog and Camera Raw cache. I am more than happy to test any configuration suggestion using my two hard drives that you've verified will increase LR's performance. Thanks.

TR, it’s important to know what system I am running in order to understand how my experience is maybe unlike yours and definitely unlike Ian Lyons’. I have an Intel Core 2 Quad CPU Q9650 @ 3.00 GHz and 8GB memory, Windows 7 64-bit. I have a 250GB Western Digital 10K RPM HDD SATA 300 and a 1TB Seagate 7200 RPM HDD SATA 300, plus 500GB and 1TB USB 2 backup drives. I have a Nikon D600 with a pair of Lexar SDHC UHS-1 600X SD cards. I don’t use a card reader; I transfer directly from my camera over a USB 2 connection. As you can see, my system is a whole generation back.

I have tested but not documented my experience with separating files on my 2 HDDs. The biggest gain came when I moved my cache, catalog and preview databases away from the C-drive and onto the 1TB Seagate. I did this specifically to get them away from the page file and some hidden .temp files that LR uses. Plus, I stay on top of fragmenting by using the Perfect Disk product. Essentially, with limited resources, I separated LR's working datasets from the system's working datasets and I keep a clean house. My gains all occurred while I was editing, in the form of quicker response times.

I didn’t notice any gains in my upload times, but that’s because my backend storage system and processors are not the bottleneck during upload. Further, I think I can offer some insight into why Ian Lyons didn’t see much difference in testing the SSD subsystem: his bottleneck during the import process is likely the same as mine, a slow SD card or in his case a slow CF card. My card is supposed to transfer at 95 MB/s, but the best I could achieve was around 12 MB/s. That’s due to my use of USB 2 and the smallish 4KB block size on the card. I could get a faster SanDisk Extreme Pro card and a USB 3 PCI card, but I think I’d be better off just biting the bullet and going for the top-of-the-line Intel i7 and a bunch of SSDs. LOL

If you want to test the effect of the transfer speed during upload, simply use whatever software came with your camera to upload to a disk and then import into LR from the disk and time that process.

Bill

Bill, thank you for the reply with your system configuration information. You said:

BillAnderson1 wrote:

TR, it’s important to know what system I am running in order to understand how my experience is maybe unlike yours and definitely unlike Ian Lyons’. I have an Intel Core 2 Quad CPU Q9650 @ 3.00 GHz and 8GB memory, Windows 7 64-bit. I have a 250GB Western Digital 10K RPM HDD SATA 300 and a 1TB Seagate 7200 RPM HDD SATA 300, plus 500GB and 1TB USB 2 backup drives.

This is actually very similar to my system, and probably to those of many other LR users as well.

BillAnderson1 wrote:

I have tested but not documented my experience with separating files on my 2 HDD drives. The biggest gain came when I moved my cache, catalog and preview databases away from the C-drive and onto the 1TB Seagate. My gains all occurred while I was editing in the form of quicker response times.

Using the same configuration I saw no change in LR's Library and Develop module response times on my Windows 7 system with 12GB memory, and i7-860 quad core processor.

The biggest difference is that I have never experienced LR slowdowns like those you and some others have described, during heavy editing sessions or at any other time. So defragmenting or adding a 2nd hard drive made no difference on my system. Clearly there is something else different about your LR system that benefits, as you say, from "frequent defragmentation." I have Windows 7 defrag scheduling turned off and have never seen more than 1% fragmentation on the separate LR Catalog, Previews, and Camera Raw Cache partition I created.

It would be great if hard drive defragmentation and multiple HDDs or SSDs were the 'Silver Bullet' for killing most LR performance issues. Unfortunately there are many LR users who have already tried this and still have performance issues. I'm willing to bet that in 99% of these cases there is unidentified hardware and/or software at the root. As an example, we have seen strange LR performance issues caused by phones, other mass storage devices, and even USB monitor calibrators left connected. I'm sure there are many more causes yet to be identified.

TR, while our disk subsystems may be similar, our processors are most definitely not. Nor, I suspect, are our motherboards even in the same ballpark. I have the last of the last generation of the Core 2 technology and you have the first of the Core i7 technology. Believe me, these systems are as different as night and day. Your 12GB is DDR3, my 8GB is DDR2; your controllers are SATA 3, and while my disks are SATA 3, my controllers are SATA 2; your USB is 3, mine is 2; you have built-in Turbo overclocking, raising your processor speed to 3.46 GHz, while I have no Turbo capability at all. This list could go on for every sub-component, but trust me when I say your system is quite likely the new MINIMUM system configuration for heavy LR users.

I have solved my fragmenting problem by using Perfect Disk to avoid fragments in the first place, but that has no bearing on why I separated my working datasets. I did that to avoid contention for the channel controllers during multi-processor access. The big culprits were the LR cache and the system page file; you saw the contention effect that occurred when I ran my speed tests concurrently. The page file is of particular concern because LR's memory demand will grow to 3-3.5GB if I am very active in switching between the various modes such as Library, Develop and Slideshow during a long editing session. Further, the cache can be very active during rendering and preview generation, so when LR has gotten big enough to cause paging and LR is hitting the cache heavily, well, you know the rest of that story. To make matters worse, I have a 1GB graphics card that can consume 4GB of main memory during its rendering process, thus driving paging even higher. I watched the access times on my page file creep up to as much as 35ms!! It should be 0 to 1 ms, tops.

I didn’t mention this before, but I am a novice LR user; I purchased it on February 25th of this year. I am self-taught using The Adobe Photoshop Lightroom 4 Book for Digital Photographers by Scott Kelby. I spend a lot of time doing and undoing stuff to see how it works. I believe that was the reason my file system became a fragmenting problem. I don’t know what I was doing to cause any of the files to grow enough to fragment, but I’m certain that something like preset history, which can grow fast, is at the heart of the matter; I’m my own worst enemy. I suspect that whatever you do doesn’t cause much fragmentation. One note here: you mentioned that you have only 1% fragmentation in your Catalog, Previews, and Camera Raw Cache partition. It’s more important to know which files are fragmented than to know what percentage is fragmented. If, as was my case, your catalogue is heavily fragmented, you will have problems each time you access it. Believe it or not, my page file has even gotten fragmented! So you need to use a product like Perfect Disk to analyze each and every file (geez, I sound like a Perfect Disk salesman LOL).

I think that the heart of the matter is that digital photography software (Photoshop, Lightroom, etc) has driven older general purpose technology platforms past their useful lives. I’ve determined that my system is about as good as it can get and I’ve done everything financially reasonable to make it better. I suspect many others may eventually come to that conclusion. I think I have learned enough that I could spec out a LR specific system that would be pretty nearly bullet proof. Adobe would be wise to work with Dell, HP or even Apple to configure a special Lightroom Edition PC. Sorta like a Saleen Edition Ford Mustang.

Bill
