Re: Slows down over time during session


There is simply no question that LR 2015.9 (and earlier) slows down with continued use during a session.

This is easy to replicate and demonstrate.

Lightroom starts up quick and responsive, and after a short while of working with images it begins to bog down: screen redraws, edits, crops, etc. all take longer and longer to complete.

If you quit and restart the app, the behavior resets and the cycle repeats.

The slightest amount of testing by Adobe SHOULD have uncovered this.

My system:

- Windows 10
- Intel 6950X
- 256GB RAM
- 2 Nvidia Titan Pascals and 2 Nvidia GeForce 1080s (tried with GPU acceleration both on and off)
- All SSD or M.2 storage
- 100GB cache (purged before testing)


---

First, optimize your catalog.

Then close all other apps while running LR.

Try upgrading your memory. This worked great for me; I am running 64GB.

Once your catalog gets to over 600,000 images, you should create a new one. The catalog can get buggy with age.

---

Also, install the latest update.

---

As mentioned, I am running the latest version.

My catalog is optimized.

I have far less than 600,000 images.

And I am running 256GB (that is a quarter terabyte) of RAM.

Thanks, but those tips seem to have nothing to do with this behavior.

Lance

---

It is a known issue with Lightroom if you have more than 4 cores, and that processor does (the 6950X has 10).

See the thread "Terrible performance in LR - Fantastic in PP. What gives?!" (Marked as the correct answer.)

---

Thanks, Bob,

If this is true, how embarrassing for Adobe!

Do you know of anywhere Adobe has acknowledged this highly annoying problem?

I couldn't find a Known Issues page for LR like there is for Premiere or After Effects.

---

I recall seeing a document about it but can't remember where it was, and limiting the cores has worked for others in other threads. If you limit the cores and your system speeds up, let us know, as it would be great info for others with the problem.

I too wish Adobe would have a COMPLETE "known issues" page for Lightroom. I could list quite a few I know of myself. It would sure save a lot of forum time.

---

I'm new to Windows, so I'm still trying to figure out how to make a shortcut that sets the affinity to 4 cores, but I will try it once I've figured it out.

---

I have a shortcut to LR on my desktop, and I altered its 'Target' line to the following. That allows LR to use only 4/8 cores, and the other 2/4 cores of my 6/12-core CPU remain available to the system and other programs.

```
C:\Windows\System32\cmd.exe /c start "lightroom" /affinity FF "C:\Program Files\Adobe\Adobe Lightroom\lightroom.exe"
```

Take note of the spaces between commands.
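For anyone else building this shortcut: the `FF` after `/affinity` is a hexadecimal bitmask in which bit n enables logical processor n. `FF` is binary 11111111, so it selects logical processors 0 to 7, which is 4 hyperthreaded cores on Bob's CPU, assuming Windows numbers the two threads of each core consecutively (common, but worth checking in Task Manager). The Python sketch below computes and decodes such masks; the function names are hypothetical helpers, not part of Windows or Lightroom.

```python
from __future__ import annotations


def affinity_mask(first: int, count: int) -> str:
    """Hex mask for `start /affinity` that enables `count` logical
    processors starting at `first` (bit n = logical processor n)."""
    if first < 0 or count < 1:
        raise ValueError("first must be >= 0 and count >= 1")
    mask = ((1 << count) - 1) << first  # `count` one-bits, shifted up
    return format(mask, "X")            # uppercase hex, no 0x prefix


def processors_in(mask_hex: str) -> list[int]:
    """Decode a hex mask back into the logical processors it enables."""
    mask = int(mask_hex, 16)
    return [n for n in range(mask.bit_length()) if (mask >> n) & 1]


if __name__ == "__main__":
    print(affinity_mask(0, 8))    # FF: logical processors 0-7 (Bob's shortcut)
    print(affinity_mask(8, 8))    # FF00: logical processors 8-15 instead
    print(affinity_mask(0, 20))   # FFFFF: all 20 threads of a 10-core 6950X
    print(processors_in("FF"))    # [0, 1, 2, 3, 4, 5, 6, 7]
```

On a 10-core/20-thread 6950X, `FF` therefore gives the same 4-core setting as Bob's shortcut, while `FFFFF` leaves all cores enabled, which is handy for A/B testing the slowdown.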

But I think the main mistake many people make is turning on GPU acceleration. It only speeds up some Develop sliders, nothing else, and unless you have a 4K or 5K monitor it will probably slow things down rather than speed them up (according to Adobe). In addition, it doesn't work with all graphics cards and drivers. So my tip would be to turn it off until you get LR running well, and then try it. Not the other way round!

In your case I would also try running with just one graphics card!

Bob Frost

---

Hey Bob,

Thanks, I did test this with no improvement.

I tried running with GPU acceleration on and off.

Many of us are now using multiple graphics cards to improve render speeds in modern 3D and video apps (Adobe is lagging behind here).

And, of course I have a 4K monitor!

Lance

---

Ah well, I'm behind the times! I don't have multiple graphics cards, don't use 3D, don't use video, don't have a 4K monitor (just two normal eizo monitors), don't use plugins, don't run other programs while editing images, and don't sync to mobile LR. I do use the two monitors with LR and PS, and my 130K LR catalog RUNS FINE! The only quibble I have is that rendering large numbers (thousands) of previews does slow down over several hours, and even that might have improved with the last update.

It seems it pays to be old-fashioned (although there is nothing old-fashioned about my desktop computer: overclocked 6/12-core Haswell, 32GB RAM, Quadro card, three SSDs, two HDDs, Win10).

Perhaps you should have a separate computer for LR, with nothing else on it!!

Bob Frost

---

Yes, but you are forcing the 4 cores, right? I don't call that running "fine". I call that an embarrassing work-around forced by poor software and software testing by Adobe.

This problem is rather new, maybe creeping in a few releases ago.

Thanks,

Lance

---

I've been alternating between 4/8 cores and 6/12 cores, but I can't really say I see much difference on my setup. I used the affinity change just to see if I could see a difference. From what I read, there seems to be a lot of stuff in many programs (including LR?) that is not multithreaded, or not multithreaded very well. So more cores may not speed things up. But why they should slow things down, I don't know, except that I once read an article that said multithreading is a programmer's nightmare and advised not to do it!!

At each update I just keep my fingers crossed that I don't get a bad problem. I have had bad problems in the past; several years ago updating from one major version to the next could cause a right mess. I found that many images had multiple lines in the catalog; exporting the catalog to a new one, without previews, got rid of the superfluous lines. Perhaps optimizing the catalog does that better these days. Also I have had to delete the Prefs file in the past, though not lately, and I have had to delete and regenerate all the previews because of corruption somewhere, probably in the previews databases.

But in day-to-day use LR runs OK for me, although I do try to avoid running other programs at the same time (except for PS) and keep face recognition off until I actually need it. I think that LR has become like the old adage: 'Jack of all trades, Master of none'. Presumably, in order to make more money, they are trying to attract people who all want different things from LR, and Adobe haven't got the skills/time/money to keep them all happy at the same time.

Let's hope LR7/CC2017 improves things for serious photographers.

Bob Frost

PS: Another thing I've done is to delete everything in the acrcache folder and make it read-only. That stops LR making another set of files for all the images that have to be kept synchronised with the catalog and the previews database. It should theoretically slow LR down slightly in Develop mode, but I don't notice it, and LR doesn't seem to get upset!

---

[Bob Frost](https://forums.adobe.com/people/bob+frost) wrote:

> From what I read, there seems to be a lot of stuff in many programs, including LR?, that is not multithreaded or is not multithreaded very well

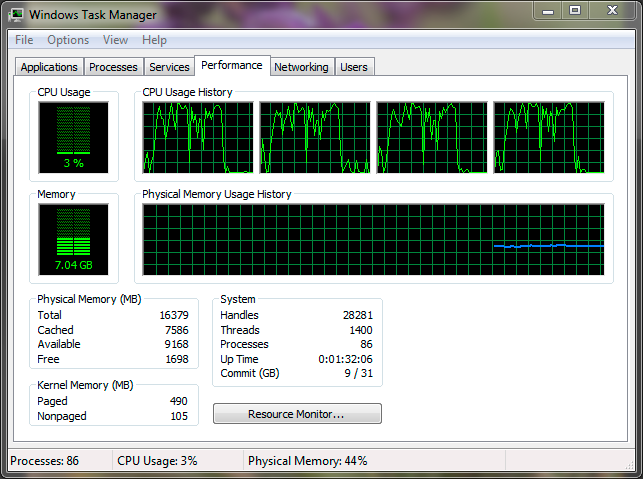

Maybe, but on my old i5-750, this is what going absolutely crazy with the sliders looks like:

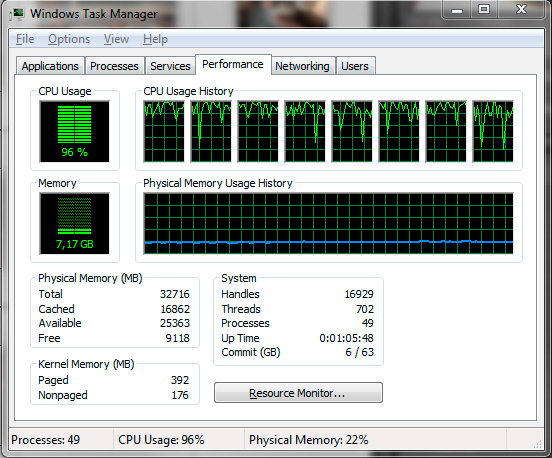

I'm not at my work machine (i7-3820) right now, but this is an Export screenshot I've kept:

It all seems pretty well multithreaded to me. Both are processing Nikon D800 files at 7360 x 4912.

BTW, the i5 performs well, the i7 not so much...

---

This won't fix it, but:

>100GB Cache (purged before testing)

will not help, and might actually be a problem if it pushes your SSD over 75% full. I have found no benefit from increasing the cache when running your images off SSDs. As a guideline, I would not make this cache much larger than a few times a typical shoot size.
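To put rough numbers on that guideline, here is a minimal Python sketch. It assumes "a few times" means 3× a typical shoot size in GB and treats the 75% fill level as a hard ceiling for the SSD. The multiplier, the function name, and the idea of measuring shoots in GB are illustrative assumptions, not Adobe settings or recommendations. `ssd_used_gb` should be everything on the SSD except the cache itself.

```python
def suggested_cache_gb(shoot_gb: float, ssd_capacity_gb: float,
                       ssd_used_gb: float, multiplier: float = 3.0,
                       max_fill: float = 0.75) -> float:
    """Rough cache size (GB) under this post's heuristic.

    Hypothetical assumptions, not Adobe guidance:
      * "a few times a typical shoot size" -> multiplier * shoot_gb
      * the cache must not push the SSD past max_fill of its capacity
      * ssd_used_gb counts everything on the SSD except the cache
    """
    if min(shoot_gb, ssd_capacity_gb, ssd_used_gb) < 0:
        raise ValueError("sizes must be non-negative")
    target = multiplier * shoot_gb                        # size suggested by the shoot
    headroom = max_fill * ssd_capacity_gb - ssd_used_gb   # space left before 75% full
    return max(0.0, min(target, headroom))                # never negative


if __name__ == "__main__":
    print(suggested_cache_gb(40, 1000, 500))  # 120.0: limited by shoot size
    print(suggested_cache_gb(40, 1000, 700))  # 50.0: limited by the 75% rule
```

Under these assumptions, a 100GB cache only makes sense if typical shoots are around 33GB and the SSD still has room to spare below 75%.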

Also, as was mentioned, it is essential to keep automatic face recognition off. That is one thing that will bog down LR completely right after an import. I wish they had built some intelligence into it, so that it got out of the user's way while you're actually using the program and only ran when you've been idle.