Adobe Community

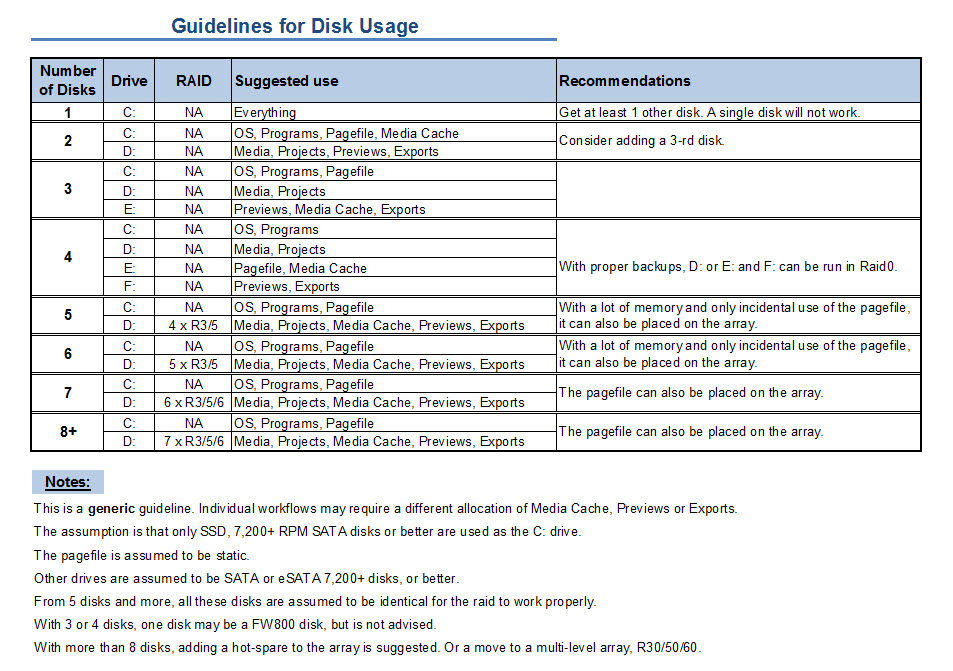

Generic Guideline for Disk Setup

There have been many questions about how to set up your disks.

Where do I put my media cache files, where the page file, and what about my preview files?

All these locations can be set in PR, so I made this overview to help you find settings that may be beneficial. It is not a law to do it like this; it is a generic approach that should suit many users. Depending on your source material, workflow and backup possibilities, you may need to deviate from this approach in your individual case.

The reasoning behind this overview is that you want to distribute disk access across as many disks as possible to get the best performance.

Look for yourself:

I hope this helps to remove doubts you may have had about your setup or to find a setup that improves performance.

A reminder to everyone again: keep personal comments, including sarcasm and veiled insults, out of your posts and responses. This topic will be locked if it continues.

Jeff

MagicManASC wrote:

So Are you saying SSDs with TRIM or garbage collection won't wear out over time and will be ok for scratch and cache drives?

That's what he's saying.

I've asked repeatedly, and I'm still waiting for someone to point to a scientific study of this issue so we can get an answer.

[Personal comments deleted]

VHC-CO-IT wrote:

Btw, OCZ's 1-3 products SUCK!!!!!!!! Their 4's are nice though. They bought out Indilinx to use their new Everest controller and have now developed a new 2.0 Everest controller for their new drives. It basically builds a RAID array inside the flash chip array inside the drive, so if one fails, it can warn you but not destroy your data. They're also REALLY fast and use low voltage rewrites, so you get like 9000+ write cycles instead of 4000-ish from other brands.

I happened to pick up an OCZ Vertex 4 and wanted to test it with a real application. The test I used is a derivative of the Disk I/O part of the PPBM5 test, but much improved.

I checked the SSD and it already had the latest firmware, version 1.5, so I did not have to update. For comparison, here are typical results for different hard disk configurations with my benchmark:

- 8 x 146 GB SAS 15,000 rpm, RAID 0: 9 seconds

- 2 x 2 TB SATA III 7,200 rpm, RAID 0: 20 seconds

- 1 x 2 TB SATA III 7,200 rpm: 68 seconds

OK, on the first try with the SSD I recorded a fairly decent score of 13 seconds, but the next 5 trials were 45, 42, 45, 51 and 41 seconds, so I decided to do a secure erase with the OCZ tool. After that I got 104 seconds and 165 seconds. This SSD, while a good price, is on its way back to the dealer ASAP. I hope they will allow me the purchase price toward a good old-fashioned hard drive!
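For anyone who wants to try a rough timing of this kind on their own drives, here is a minimal Python sketch. It is not the PPBM5-derived benchmark described above; it simply times one sequential write to a scratch file and reports MB/s. The function name, the default sizes and the use of `os.fsync` to push data out of the operating system cache are all assumptions made for illustration.

```python
import os
import tempfile
import time


def time_sequential_write(directory, total_mb=64, chunk_mb=4):
    """Write total_mb of data in chunk_mb pieces; return (seconds, MB/s)."""
    chunk = os.urandom(chunk_mb * 1024 * 1024)  # incompressible test data
    chunks = total_mb // chunk_mb
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            for _ in range(chunks):
                handle.write(chunk)
            handle.flush()
            os.fsync(handle.fileno())  # ask the OS to flush its cache to disk
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)  # always clean up the scratch file
    return elapsed, (chunks * chunk_mb) / elapsed


if __name__ == "__main__":
    # Point `directory` at a folder on the drive you want to test.
    seconds, rate = time_sequential_write(tempfile.gettempdir())
    print(f"{seconds:.2f} s, {rate:.0f} MB/s")
```

A single small write like this says little about sustained behaviour. To see the kind of run-to-run variation reported above, you would need a much larger `total_mb` and several repeated runs.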

What I got from that Tom's Hardware HDD charts link was that the Seagate Barracuda 7200.14 seems to be the best-value disk drive for read and write speeds. It didn't perform so well in some other categories, such as random reads, random writes, and other benchmarks.

http://www.tomshardware.com/charts/hdd-charts-2012/benchmarks,134.html

I don't know how relevant those benchmarks are to the performance of the CS6 applications, though.

If anyone does, please enlighten me.

I agree that the Seagate Barracuda 14 series is probably the fastest conventional disk currently, based on the benchmarks and reviews available. There is however one serious drawback, as I stated elsewhere:

The Seagate Barracuda ST2000D is probably the fastest SATA disk available at this moment, has the best price per GB and has a low sound level. This is of course important with 24 of those disks in the system. All the files that can easily be recreated are on the raid0, so the risk is negligible in case of disk failure. The important raid, drive E: with the media and projects on it, has a hot-spare for each raid3, in addition to the parity disk. Of course I lose the effective storage space of 4 disks with such a setup, but it buys me safety in case of disk failure.

However, there is one complicating factor specifically for Europe, and that is that legal warranty is two years, no matter what the manufacturer says. In this case Seagate gives only one year of warranty, so the consequence is that every shop that sells these disks has to pay for the second year of legally required warranty out of its own pocket. For many shops this simply means they no longer sell these Seagate disks. The risk is too big, especially since these disks have - again - a high failure rate; many are DOA or develop screeching noises. Period.

Luckily I'm not in a hurry, so I can look around for alternatives, but the choices are very limited. WD RE4 are still SATA 300 disks, WD Caviar Black are notoriously bad for parity raids, Hitachi 7K3000 Deskstars may be an option, of course the Hitachi Ultrastars are much better but carry a corresponding price tag.

Where I live (Australia) Seagate Barracuda 14's are readily available, and very cheap.

They're 8 times less expensive per GB than, say, Velociraptors.

I guess your comment, "a high failure rate, many are DOA or develop screeching noises", explains why they're so inexpensive here.

Disk drive prices in Australia are normally much higher than in other countries.

Decisions, decisions. I'm eagerly waiting to see what you end up choosing for this build > http://ppbm6.com/Planning.html before I purchase components for mine.

Edit: Why so much of my message came out highlighted, I have no idea.

I hope it's still readable.

Edit: Why so much of my message came out highlighted, I have no idea.

I hope it's still readable.

It is, but this is a byproduct of the Jive software, which creates inaccurate .html code. The only way to get rid of it is to edit the Jive-generated .html code manually.

In fact, it wraps the text in a tag like span style="font-family: adobe-clean, 'Helvetica Neue', Arial, sans-serif; text-align: justify; background-color: #ffffff;", complete with the required <>.

You did read the last page of that article explaining that those results are completely incorrect, right? Anyway...

Tom's Hardware has a little oversight there. If you instantly fill something like 60GB of data onto a 128GB drive and then attempt to use it a couple of minutes later, that's not "natural" use of the drive. The firmware gets extremely confused because it hasn't had time to update its index of which chips have which write counts, move the data around intelligently, do garbage collection, etc. It also switches into something called "storage mode" instead of "performance mode", which results in a very short-lived performance drop as it moves stuff around to adapt.

Also, that entire test said "out of the box" and didn't say anything about updating the firmware. Well, there's your problem. And this data is from June 25th, which is before the 1.5 firmware was even released so they weren't using it for the test. It even says, they used the 1.4 firmware. That's at least 2 versions ago. 1.4 even had drive detection problems in the BIOS. It was useless! Any benchmark using it is wrong.

Also, OCZ's own website claims they already fixed this sort of problem in the changelog for 1.5, likely by telling the wear leveling to ignore files over a certain size or to terminate wear leveling chip write count searching after a certain time period or by limiting the period of time for processing to change over into bulk storage mode.

Also, read speed never degrades on any drive ever.

Also, other manufacturers don't have this specific problem anyway as long as they do garbage collection (except Kingston). But as the final page states, this isn't a problem, it's a feature and it only affects the drives for a couple minutes after doing something stupid like filling it half full with data all at once.

If you read the final page..."Effectively what this means is that drives that are less than half full will enjoy further optimized performance and after crossing more than half full the garbage collection algorithm will re-optimize the drive for maximum efficiency based on a larger data footprint. During this transition there may be a small latency hit, but this is a onetime event, and overall performance quickly improves as the drive is now optimized for the larger amount of storage."

It's a one-time deal. Like I said, you write a sequential 50-60GB data file, it gets a little confused about what you're using the drive for and re-optimizes itself to maintain the best speed, BUT only temporarily, until the firmware adapts and then it operates at full speed again. From the article: "From our observations on a partitioned drive, “storage mode” is encountered when sustained write activity exceeds 50% of the available free space." So if you slowly fill the drive up over a month, it won't do that.
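The 50% rule quoted from the article can be written down as a one-line check. This is only a sketch of the rule as quoted, not of the actual firmware logic, which is not documented in this thread; the function name and the example numbers are hypothetical.

```python
def enters_storage_mode(burst_gb, free_gb, threshold=0.5):
    """True if a sustained write burst exceeds `threshold` of the free space.

    Models only the rule quoted from the article; real firmware behaviour
    may depend on factors not captured here.
    """
    return burst_gb > threshold * free_gb


# Hypothetical examples: a 60 GB burst with 100 GB free crosses the 50% line,
# while a 13 GB burst with the same free space does not.
print(enters_storage_mode(60, 100))
print(enters_storage_mode(13, 100))
```

By this rule, what matters is the size of a single sustained burst relative to the free space at that moment, which is why slowly filling the drive would not trigger the switch.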

And the biggest "also" of all: Premiere cache drives are usually around 100% empty lol. They're cache drives. They cache stuff, not store it long term.

P.S. why aren't they using cold fusion for the forum? lol.

Premiere cache drives are usually around 100% empty lol. They're cache drives. They cache stuff, not store it long term

Incorrect. When you use the program you will notice this is again a faulty statement. Had you ever used the program, you would know that the cache files are used from the moment you import footage into the project, till you remove the project from your disk.

I wanted to jump in quickly on this point, as I've been trying to receive clarification on this issue. Is it OK to store other rarely used items on the cache drive, or am I negatively affecting performance by doing this?

I've been throwing some archives of install files on there thinking 'I have all that free space, might as well put some boxes in the back of the closet'. Is this a mistake?

I forgot, that's how it works by default, but that's not how I use mine. When the final project is done and encoded and there's a low chance I'll edit it later, I either delete the cache files, so they'll just have to regenerate on the fly if I decide to reopen the project later, or I move them to my long-term storage drive within the project preferences, which is the way everyone should do it unless they have a reeeeeeeeally big SSD.

Large, bulky files are fine on SSDs as long as you have room, but if they're not frequently used, throw them on a stable low-powered drive or something instead. I use a 640GB green drive for backup images of all my software CDs and DVDs. It spins at a low speed, gives off almost no heat, and is extremely unlikely to fail because of that. The images take up around 130GB, so that'd be a total waste of space on an SSD, considering I pretty much never use those images unless the original disc gets destroyed.

VHC-CO-IT wrote:

Also, that entire test said "out of the box" and didn't say anything about updating the firmware. Well, there's your problem. And this data is from June 25th, which is before the 1.5 firmware was even released so they weren't using it for the test. It even says, they used the 1.4 firmware. That's at least 2 versions ago. 1.4 even had drive detection problems in the BIOS. It was useless! Any benchmark using it is wrong.

Also, OCZ's own website claims they already fixed this sort of problem in the changelog for 1.5, likely by telling the wear leveling to ignore files over a certain size or to terminate wear leveling chip write count searching after a certain time period or by limiting the period of time for processing to change over into bulk storage mode.

Also, read speed never degrades on any drive ever.

Also, other manufacturers don't have this specific problem anyway as long as they do garbage collection (except Kingston). But as the final page states, this isn't a problem, it's a feature and it only affects the drives for a couple minutes after doing something stupid like filling it half full with data all at once.

If you read the final page..."Effectively what this means is that drives that are less than half full will enjoy further optimized performance and after crossing more than half full the garbage collection algorithm will re-optimize the drive for maximum efficiency based on a larger data footprint. During this transition there may be a small latency hit, but this is a onetime event, and overall performance quickly improves as the drive is now optimized for the larger amount of storage."

It's a one-time deal. Like I said, you write a sequential 50-60GB data file, it gets a little confused about what you're using the drive for and re-optimizes itself to maintain the best speed, BUT only temporarily, until the firmware adapts and then it operates at full speed again. From the article: "From our observations on a partitioned drive, “storage mode” is encountered when sustained write activity exceeds 50% of the available free space." So if you slowly fill the drive up over a month, it won't do that.

And the biggest also is also, Premiere cache drives are usually around 100% empty lol. They're cache drives. They cache stuff, not store it long term

I cannot tell if these comments were meant for me, as you were replying to Harm. I said nothing about "out of the box" on the SSD testing. I specifically stated that the drive I got had firmware 1.5 and therefore I did not have to update it. Also, our PPBM5 test running in Premiere does write a 13 GB file, so their so-called fix would never be useful for a Premiere project file location.

I was referring to the tomshardware article, which stated they used it out of the box. Also OCZ's reply at the end explained everything and invalidated the entire article, lol.

Your test:

OK the first try I recorded a fairly decent score of 13 seconds but the next 5 trials were 45, 42, 45, 51, 41 so I decided to do a secure erase with the OCZ tool, and then I got 104 seconds and 165 seconds.

was simply a bad test. Yes, the firmware's garbage collection gets a little confused and slows down when you erase the entire drive then immediately do another benchmark without waiting for it to adapt to what you just did. Also, it would appear you did those last 5 trials too close to each other. For all I know, you had the drive on a SATA II controller by accident or were using the source file for the test on a hard drive on SATA III port #1 while testing the SSD on SATA III port #2, causing bandwidth issues. I tend to trust tomshardware more than any individual person's single test.

All the files were on the SSD; project, source and output files. Any idea of how long you would have to wait before it is ready to be used?

Bill Gehrke wrote:

All the files were on the SSD; project, source and output files. Any idea of how long you would have to wait before it is ready to be used?

Ask OCZ. Their people definitely need to learn how to program and test their products instead of using end users as the beta testers but I use their drives because they're cheap, reliable, and can take over double the total amount of writes per chip before failing so they last a lot longer. If I had unlimited money, I'd go with an intel 520 series with TRIM on. They're THE fastest in certain tests, use top of the line chips, and their firmware is perfect.
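To put the write-cycle figures quoted earlier in the thread (roughly 4000 versus 9000+ cycles) in perspective, here is a back-of-the-envelope lifetime estimate in Python. It is a simplified model that assumes perfect wear leveling. The capacity, daily write volume and write amplification factor are made-up illustration values, not measurements of any drive discussed here.

```python
def estimated_lifetime_years(capacity_gb, pe_cycles, host_writes_gb_per_day,
                             write_amplification=1.0):
    """Rough SSD lifetime estimate under perfect wear leveling.

    Total flash writes available = capacity * program/erase cycles.
    Each GB written by the host costs `write_amplification` GB of flash writes.
    """
    total_host_writes_gb = capacity_gb * pe_cycles / write_amplification
    return total_host_writes_gb / host_writes_gb_per_day / 365


# Illustrative assumptions: 128 GB drive, 100 GB written per day,
# write amplification factor of 3.
low = estimated_lifetime_years(128, 4000, 100, write_amplification=3)
high = estimated_lifetime_years(128, 9000, 100, write_amplification=3)
print(f"{low:.1f} years at 4000 cycles, {high:.1f} years at 9000 cycles")
```

Under these assumptions the estimate scales linearly with the cycle count, so 9000 cycles gives 2.25 times the lifetime of 4000 cycles, whatever the other values are.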

TRIM or no TRIM, the performance will deteriorate and the drive will be upgraded sooner or later

That is completely incorrect. That's just not how flash chips work. With TRIM or firmware garbage collection, they don't suffer from fragmentation delay or any type of performance drop at all, unlike a spinning drive. That's the reason people love SSDs. They're fast and stay fast forever.

In the last 10 years, average everyday hard drives approximately doubled in speed. Right now, a Patriot Pyro or Kingston HyperX are 5x faster than my extremely fast 1TB Seagate drive. It's hard to say they're not worth it, considering that.

As far as quality changing weekly, I suppose that is the case if you are scraping the bottom of the barrel. If you go for quality name brands like Intel, Samsung and Crucial, their quality does not change by the day.

Exactly the opposite is true. They're still selling Patriot Torqx drives (ugh!). Bad, old ones stick around forever. It's the top of the line drives that change every week. Patriot was the winner then Kingston made a faster one. Then OCZ developed the Everest II controller. Then someone else developed low voltage writes that don't require an erase command. Then OCZ developed RAID arrays between flash chips within their drives. Then some company called Angel something or other invents the fastest SSD in the world. Then OCZ made a Revodrive, which runs at 1,600MB/s read and 1,500MB/s write. Now Sandforce 2.0 is almost released, which enables firmware garbage collection by default if TRIM isn't available.

Since, over the last year, SSDs were glitchy, experimental, and failed too quickly, you have to keep an eye out for the latest of the late technology to get one that actually works. You can't just say "oh, Samsung is good" and ride that for 2 years because someone will invent something better next month.

All the files were on the SSD; project, source and output files. Any idea of how long you would have to wait before it is ready to be used?

Funny, there is a claim that SSD's are so fast and you have to wait an indeterminate time to be able to use them. Sounds conflicting.

Your test was simply a bad test. For all I know, you had the drive on a SATA II controller by accident or were using the source file for the test on a hard drive on SATA III port #1 while testing the SSD on SATA III port #2, causing bandwidth issues.

How would you know? Your assumptions say it all.

Harm Millaard wrote:

Your test was simply a bad test. For all I know, you had the drive on a SATA II controller by accident or were using the source file for the test on a hard drive on SATA III port #1 while testing the SSD on SATA III port #2, causing bandwidth issues.

How would you know? Your assumptions say it all.

When someone posts a benchmark that has end results that don't make any sense, you have to assume something was wrong with it.

They make perfect sense, but then I'm familiar with the test and the conditions under which they were performed and you are not. Maybe you also have to recognize that what you consider non-sensical results are in fact the weakness of SSD's, that occur often in video editing.

Here is a retest of the OCZ Vertex 4. I did a secure erase and then waited one hour. I copied the test files (25.8 MB) to the SSD and ran the benchmark. Writing the 12.0 GB AVI file took 43 seconds. Maybe I need to wait 24 hours before I run the test. By the way, we have PPBM5 results from over 100 users who used an SSD for the project files on a somewhat similar test, and the vast majority do not show this abnormal result.
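To turn the retest figure into a throughput number, the arithmetic is just size divided by time. The sketch below assumes decimal units (1 GB = 1000 MB), which may not match how the benchmark reports file size, and the function name is made up for illustration.

```python
def throughput_mb_per_s(size_gb, seconds):
    """Average throughput in MB/s, assuming decimal units (1 GB = 1000 MB)."""
    return size_gb * 1000 / seconds


# The retest above: a 12.0 GB AVI file written in 43 seconds.
print(round(throughput_mb_per_s(12.0, 43)))  # 279
```

The other timings in this thread can be converted the same way only if the size of the file written in each run is known.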

Here is a retest of the OCZ Vertex 4. I did a secure erase and then waited one hour. I copied the test files (25.8 MB) to the SSD and ran the benchmark. The results of writing the 12.0 GB AVI file is 43 seconds. Maybe I need to wait 24 hours before I run the test.

Makes complete sense to me, but what makes no sense to me is why anybody would want such a SSD, when you have to wait one hour before you can use it and it then only shows such abysmal results...

TRIM takes an extremely long time to execute, so when deleting large files, expect not to use the SSD for some time afterwards, or suffer horrible write performance degradation. This is because TRIM cannot be queued as a command, unlike every other command executed in a computer. Couple that with the fact that TRIM acts first on blocks where more pages are marked for deletion, and there you have your answer. The benchmarks with the SSDs were done under ideal conditions (whether on Tom's or PPBM5), which is why they show decent scores. Regardless of TRIM, you will also see write performance degrade the fuller the SSD becomes, for the same reason: TRIM commands like to wait for more pages in a block to be marked for deletion. This is to help reduce write amplification.

SSD's are a great piece of hardware, they just haven't completely snuffed out HDD's for every application. In a Video Editing box, SSD's should really only be used for Read Heavy operations where data doesn't change much. Anytime you will be editing, moving, or deleting large files, a RAID 0 or RAID 3 on traditional HDD's is a much superior solution.

Also here is the change list from 1.4 to 1.5 firmware on the Vertex 4:

- Improved sequential file transfer performance for 128GB, 256GB and 512GB models

- Optimized idle garbage collection algorithms to extend the benefits of performance mode by enabling the feature across a greater percentage of the drive

- Improved HBA / RAID card compatibility

- Further improved compatibility with desktop and mobile ATA security features

- Corrected a corner case issue where the ‘Remaining Life’ SMART attribute could be reported incorrectly

I wouldn't exactly say write performance will drastically change because of these improvements, given the inherent shortcomings in SSDs I stated above.

Of course, the worst SSD performance degradation numbers I've ever seen in the worst conditions (except Kingston's SSDNow awful product) are still as fast or faster than a typical single spinning drive performs under perfect conditions. Then there's the fact that a RAID array that'd match the read speed would be at least $400 + the cost of the controller unless you use the not so great one built into the typical desktop board. Then if you have a stack of 4-5 drives, you probably want a high CFM fan blowing across them. You can get a really decent SSD for $120.

And by the way, there is an SSD out there that uses a type of flash that can write to a location without erasing it first. I don't remember which one or if it's been released to the public yet but they exist.

Also, this SSD can immediately blow itself up at the push of a button lol

Hello everyone, I have been watching this very interesting thread and have toiled over drive configurations for quite a bit of time over the last few systems I have bought and I can tell you that while it is important, don't spend too much time on it as there are more important things to focus on that will have a much greater impact on your machine and where you want to spend your $$. I also find it interesting how much time I spend trying to tweak my system versus creating content, which is where my time should really be spent...but I digress. I just thought I would share the items I have found to be no brainer decisions and ones that you would think would make a difference but don't. The key however, is evaluating what kind of work you will be doing, and what is likely to have the most impact. Listed below are things I have found over the years that have really made a difference and those that are a waste of time.

1. SSD vs Spinning disk for boot drive: For your boot drive, this is a no brainer, get an SSD. For pretty much everything else I have seen it have zero effect on PPro or After Effects, go with spinning disks (more on this below).

2. Make sure you have a CUDA enabled card, this will enhance your system performance with PPro more than anything else I have found (not as important with AE).

3. If you are doing a lot of color correction, make sure you use the CUDA-enabled effects. They are 10X faster, and CS6 has more of them enabled.

4. If you want a very fast way to do color correction, take a look at Adobe SpeedGrade. It is simply awesome and is fantastically fast regardless of the hard drive configuration, but it runs entirely on your GPU. It also comes with built-in effects for giving your video a cinematic look. Plug-ins for that can be very expensive, but this one is free with Production Premium. The only kicker is that it is not fully integrated yet, so you should use it as the last part of your editing process (i.e. finish your transitions, effects, etc. and then do your color grading last).

5. Really watch what plug-ins you have. As an example, most everyone has noise in their video and wants to remove it. If you get denoiser from RedGiant vs. Neat, the render times differ by a factor of 10x because NEAT takes advantage of multiple threads and the GPU. In other words get smart about what effects you use, which to use in After Effects vs. Premiere pro, get your workflow down, etc. These will pay off 100x more dividends than the time you spend thinking about disks.

6. Fast RPM disks vs. 7200 RPM: In my experience, this has had zero real-world impact on AE or PPro. I have 15K SAS drives and 7200 RPM SATA drives in RAID 0 and see not much difference, so save your money. The reason is that high RPMs are great for applications (like a database) that have non-sequential reads/writes, and that doesn't factor in too much here.

7. Definitely RAID your drives. I personally like the RAID 0 with frequent backups approach, as it tends to be the least costly, but I know I will get a lot of flak for saying it. Bottom line: break up your drives as Harm has suggested in many of his posts, but don't make it a science project. There are much better ways to spend your time to get your system/workflow working faster.

8. Think through your workflow and determine what your bottlenecks are likely to be. As an example, if you do a lot of color correction in PPro, make sure you have CUDA and look at Adobe SpeedGrade. Do a lot of effects in After Effects? Make sure that is the best place to do it; many of the same effects are now in PPro.

9. Use Performance Monitor on your system to see where your bottlenecks really are. Are you really using all that memory you bought? Does the system report a bottleneck with your disks? The system can tell you a lot about how it is running, so you can make more educated decisions on where to spend your money.
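As a small illustration of item 6, the part of access time that RPM directly affects is rotational latency, which on average is half a revolution. The Python sketch below computes it for the two spindle speeds mentioned; the function name is made up for illustration, and seek time and transfer rate are deliberately left out.

```python
def avg_rotational_latency_ms(rpm):
    """Average rotational latency: the time for half a revolution, in ms."""
    return 0.5 * 60_000 / rpm


for rpm in (7200, 15000):
    print(f"{rpm} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")
```

That works out to about 4.17 ms at 7200 rpm versus 2.00 ms at 15,000 rpm. The saving applies to every random access, but is paid only once at the start of a long sequential read or write, which fits the observation that faster spindles help databases more than video editing.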

I am out of time, and will continue to add to this list as I think of things and would appreciate people adding to the list of known good practices...just don't beat my recommendations up too badly:)

Can someone please answer my question: is it at all problematic to store infrequently accessed archives on the same disk as the cache/page file, or would this in some way negatively impact the performance of that drive's caching/paging function?