Hello again, Lilybiri;

Many thanks for your continued feedback!

Ok, this is the project I had asked for support on before - where I used your workflow of having a large blank button covering the slide triggering a failure advanced action - with one small correct target on top that triggers a success action.

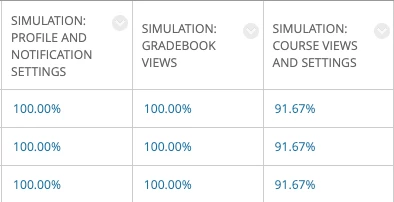

On each slide there is an instruction and a target. If the target is clicked, the project advances. On the first click in the wrong place, a hint pops up. If the user gets it right the second time, the project advances. If the user gets it wrong a second time, they get a failure message and a button to continue to the next slide. They get a point in the quiz for clicking correctly on either the first or second attempt, and none if they get the failure message. On two of the slides in that simulation there are two targets (each with the success action of going to the next slide), and each is worth a point, since success gives the user a point in the quiz. The reason each successful user was only getting 91.67% rather than 100% is that those 'extra' targets (i.e. the ones not clicked) were each an available point that users could never get: once they click one target, they never need to click the other.

I'm not sure if that is clear...? Everything in the simulation "works" the way it should. It's just these two slides that have the extra phantom quiz point that are messing up the final score and not allowing for a 100% in the grade centre. I don't know if there is a solution for this. I'm not sure that all the other steps you mention above are necessary since the issue is just the score, but perhaps what you said about "adding a score for the slide" may be a possible avenue...what do you think?

Thanks again!

samamara

There are a couple of ways around the problem you describe (where only one of two scorable objects will be clicked but you don't want the missing score to affect the user being given a perfect passing grade).

One easy way is to have your Completion and Pass/Fail awarded on the basis of scored points rather than percentage. If the user only has to gain a specified number of points to pass, then the missing scores will not cause issues, even if they only click one of the two buttons on each of the slides you mention. If you have pass/fail determined by percentage (not points), the problem is that Captivate calculates the final pass/fail based on ALL of the scored objects, and your implementation means that the user can never click all of the buttons to reach 100%.
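To make the arithmetic concrete, here is a rough sketch in Python (not Captivate code). The numbers are assumptions: I am guessing 22 slides with one point per target, because 22 out of 24 is what produces 91.67%. The function names are made up for illustration.

```python
# Assumed numbers: 22 slides, 1 point per target, 2 slides with a second target.
SLIDES = 22
EXTRA_TARGETS = 2

max_reachable = SLIDES * 1              # a user can only score one target per slide
total_scored = SLIDES + EXTRA_TARGETS   # but every scored object counts in the total

percent = 100 * max_reachable / total_scored


def passes_by_percent(points, total, required_percent):
    """Pass/fail judged against the total of ALL scored objects."""
    return 100 * points / total >= required_percent


def passes_by_points(points, required_points):
    """Pass/fail judged against a fixed number of points."""
    return points >= required_points


print(round(percent, 2))
print(passes_by_percent(max_reachable, total_scored, 100))
print(passes_by_points(max_reachable, 22))
```

With a points threshold equal to the number of slides, a user who gets every slide right passes, even though the two unclicked targets still count toward the total.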

I've suggested the method above (using points) because it can be done with standard Captivate functionality. Another way to achieve what you want is to use the CpExtra widget and a Conditional Advanced Action to force extra scored points to be reported to the quiz. Basically, when the Conditional Action detects the user clicking one of the two buttons on the slide, it uses CpExtra's xcmndScore command variable to increase the quiz score to compensate for the user NOT clicking the other button. This then allows you to set the quiz completion and pass/fail to be determined by percentage score instead.

Command Variables | CpExtra Help (widgetking.github.io)

Please note that the widget is not free. But if the first option I suggested is not acceptable, then perhaps the cost of the widget will be worth it for your project.
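For the widget option, the compensation logic might look roughly like the Python model below. This is only an illustration of the idea, not CpExtra or Advanced Action syntax; the actual format of xcmndScore should be taken from the CpExtra help page. The function name and target names are hypothetical, and one point per target is assumed.

```python
def score_after_correct_click(clicked_target, targets, quiz_score):
    """Model of the compensation idea: return the quiz score after a correct
    click on a slide with several scored targets.

    targets maps each target name on the slide to its point value.
    """
    earned = targets[clicked_target]
    # Points on the slide's other targets, which the user can no longer click.
    unreachable = sum(
        points for name, points in targets.items() if name != clicked_target
    )
    return quiz_score + earned + unreachable


# Hypothetical slide with two correct targets worth one point each.
slide_targets = {"menu_button": 1, "toolbar_icon": 1}
new_score = score_after_correct_click("menu_button", slide_targets, quiz_score=0)
print(new_score)
```

In this model, clicking either target leaves the user with both points for the slide, so a percentage-based pass mark can reach 100%.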