- Re: Benq SW2700PT sRGB mode gamma - no linear sect...

I'd been wondering why images appear to be higher contrast in sRGB mode on my Benq SW2700PT monitor than in AdobeRGB mode. On investigation I find it's because the monitor doesn't support the linear region in the sRGB gamma curve but applies a gamma of 2.2 across the whole range, from black to white. If I view a black & white image in AdobeRGB and then switch the monitor to sRGB (without changing my monitor profile) I'd expect the shadows to lighten, but they stay the same. In other words, the monitor is using the same 2.2 gamma curve for sRGB as it uses for AdobeRGB (the AdobeRGB gamma doesn't have a linear section). This means that when I switch to sRGB mode and apply the sRGB profile, the shadows become too dark.
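For reference, here are the two tone curves being compared, with $V$ the normalized code value (0 to 1) and $Y$ the relative luminance. The sRGB decoding function from IEC 61966-2-1 has a linear segment near black, whereas Adobe RGB (1998) uses a single power law whose exponent is stored in its ICC profile as $563/256 \approx 2.1992$:

$$
Y_{\mathrm{sRGB}}(V) =
\begin{cases}
\dfrac{V}{12.92}, & V \le 0.04045,\\[6pt]
\left(\dfrac{V + 0.055}{1.055}\right)^{2.4}, & V > 0.04045,
\end{cases}
\qquad\qquad
Y_{\mathrm{AdobeRGB}}(V) = V^{563/256} \approx V^{2.2}.
$$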

It's the same if I calibrate the monitor to sRGB primaries with Palette Master Element. The gamma is applied across the whole range. There is no option available for the linear section.

Is this normal behaviour for monitors? I might expect it of budget consumer models but the SW2700PT is supposed to be aimed at the premium consumer market, even edging into professional. I notice it's the same on my old Dell U2410 monitors, which were also higher end models in their day.

When I view in sRGB mode, is there an alternative sRGB profile I should be using, without the linear section? Up until now I've simply been using sRGB IEC61966-2.1.

1 Correct answer

Viewing in what application? That's not what I see on my SpectraView, if I understand what you're saying. In an ICC-aware application, the profiles should account and compensate for this. Could it be an issue with the creation of either a LUT-based profile or a profile built to the V4 spec? Not all applications deal with these options well. The gamma of the display calibration really shouldn’t play a role. Can your software calibrate to an option called "Native Gamma", and if so, is there any difference?

The shadows shouldn’t lighten; they should preview the same.

The SW2700PT has internal calibration. In other words, the calibration process programs the gamma and colour space response curves into the monitor, rather than it being handled by the computer's GPU LUT.

If I calibrate the monitor to native mode it's fine (just about!). And of course, I use this with the profile created for that native calibration.

It's also fine with Adobe RGB, using the standard AdobeRGB profile, which has a simple gamma of 2.2.

However, the monitor also has a factory calibrated sRGB mode. In this mode I expect it to behave as a monitor to sRGB specification and to use it with the standard sRGB profile. But it doesn't because the factory calibration has been performed using a simple gamma of 2.2. It lacks the linear section contained in the sRGB profile. This means images appear higher contrast in sRGB mode because the shadows get crushed.

Of course, the factory calibration won't be perfect, so it's also possible to calibrate the monitor to sRGB. However, again this is performed using a gamma of 2.2, without a linear section. No profile file is required from the calibration process; the monitor is calibrated to sRGB via its internal LUTs. Once calibrated, I thought it should function as close as possible to the sRGB spec and should be used with the standard sRGB profile.

In fact, Palette Master Element doesn't inspire confidence when it lets users set gammas other than 2.2 for AdobeRGB and sRGB calibrations. If you're calibrating to a standard colour space, I don't see why there should be options for deviating from the correct gammas; otherwise they won't be correct for the standard profiles.

Hi Richard

You ARE calibrating / profiling with a colorimeter device, aren't you?

If you set the base settings in the display to sRGB and then make an ICC profile, you should set that profile in the system [as "system profile"] so that color-management-savvy applications can use it. I would not use the regular sRGB working space profile for that.

Unfortunately I've not had great reports from users on the bundled Palette Master software. Some I have discussed this with have tried the basICColor display software for calibration and, despite losing the ability to use the display's downloadable LUT ("Hardware Calibration"), found the results far more satisfactory.

basICColor display does offer the option to calibrate to the sRGB tonal response curve [but not to restrict the device gamut to sRGB].

Try it? There's a free 14 day download, more about display here https://www.colourmanagement.net/products/basiccolor/basiccolor-display-software/ [full disclosure, I am a dealer]

From the basICColor display manual:

"sRGB IEC61966-2.1

sRGB is a working space for monitor output only. You find it mainly in the areas of Internet, multimedia video and office applications. The tonal response curve cannot be described with a gamma function (although Photoshop, for example, reports a gamma value of 2.2). In the shadows it more closely resembles an L* curve; in the mid tones and highlights it follows the gamma 2.2 curve. In order to exactly match sRGB data, basICColor display and SpectraView Profiler are the only monitor calibration applications that offer an sRGB calibration curve for these applications."
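The manual's point that the sRGB curve cannot be described by a single gamma value can be checked numerically. The sketch below assumes the standard IEC 61966-2-1 decoding formula; `apparent_gamma` is a hypothetical helper that asks, one code value at a time, which pure power law would pass through the same point.

```python
import math


def srgb_to_linear(v: float) -> float:
    """Decode a normalized sRGB value (0..1) to relative luminance (IEC 61966-2-1)."""
    if v <= 0.04045:
        return v / 12.92                   # linear toe near black
    return ((v + 0.055) / 1.055) ** 2.4    # offset power segment


def apparent_gamma(v: float) -> float:
    """Exponent g for which v ** g equals the sRGB-decoded value at this one point."""
    return math.log(srgb_to_linear(v)) / math.log(v)


for code in (3, 10, 30, 64, 128, 192, 250):
    print(f"8-bit {code:3d}: apparent gamma {apparent_gamma(code / 255):.3f}")
```

The printed exponent climbs from roughly 1.6 near black to about 2.27 near white, so "2.2" is a fair summary of the mid-tones but not of the deep shadows.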

I hope this helps

If so, please do mark my reply as "helpful".

Thanks

neil barstow, colourmanagement

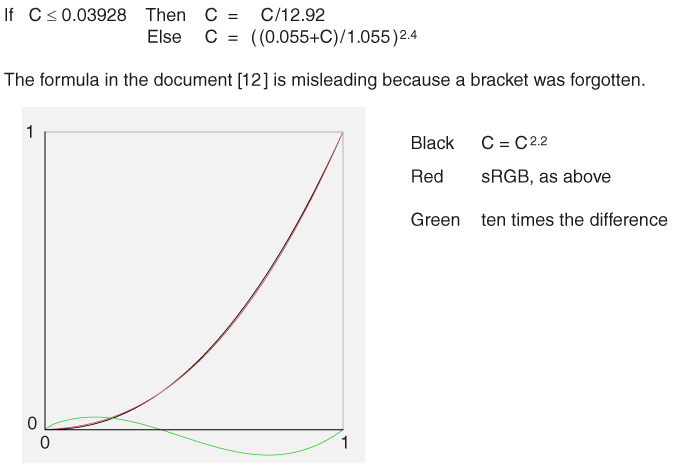

Let's assume there is a difference between the monitor TRC (exactly G=2.2) and the profile TRC for the monitor (exactly like that of sRGB), or vice versa.

Here are the TRCs for both:

The difference is nowhere larger than 1% full scale, let's say 2 to 3 units of 255.
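This figure can be reproduced by comparing the two curves in linear light at every 8-bit code. A minimal sketch, assuming the IEC 61966-2-1 sRGB formula and an exact 2.2 power law for the monitor; it also prints the ratio between the curves at code 10, because a difference that is tiny in absolute terms can still be large in relative terms near black.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def gamma22_to_linear(v: float) -> float:
    """Pure power-law decoding with exponent 2.2."""
    return v ** 2.2


# Signed difference (sRGB minus pure 2.2) in relative luminance, white = 1.0.
diffs = [srgb_to_linear(c / 255) - gamma22_to_linear(c / 255) for c in range(256)]
worst = max(range(256), key=lambda c: abs(diffs[c]))
max_diff = abs(diffs[worst])
ratio_at_10 = srgb_to_linear(10 / 255) / gamma22_to_linear(10 / 255)

print(f"largest gap: {max_diff:.2%} of full scale, at 8-bit code {worst}")
print(f"at code 10 the sRGB curve is {ratio_at_10:.1f}x brighter than pure 2.2")
```

The absolute gap stays below 1% of full scale, in line with the estimate above, while the ratio near black is large, so absolute and relative comparisons of the same two curves can leave quite different impressions.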

Now I'll show a test. Will this small difference be perceivable? An S-like curve is applied to the images.

By toggling preview on/off one can see a tiny difference, like a flicker. One cannot perceive the difference in absolute terms, the way one sees Shadow/Highlight lifting the shadows. The effect of the deviation between the G=2.2 TRC and the sRGB TRC is very small.

If everything is correctly color managed (TRCs belong to color management workflows), no difference will be visible.

Best regards --Gernot Hoffmann

Hi Gernot & Neil,

Thanks for your replies.

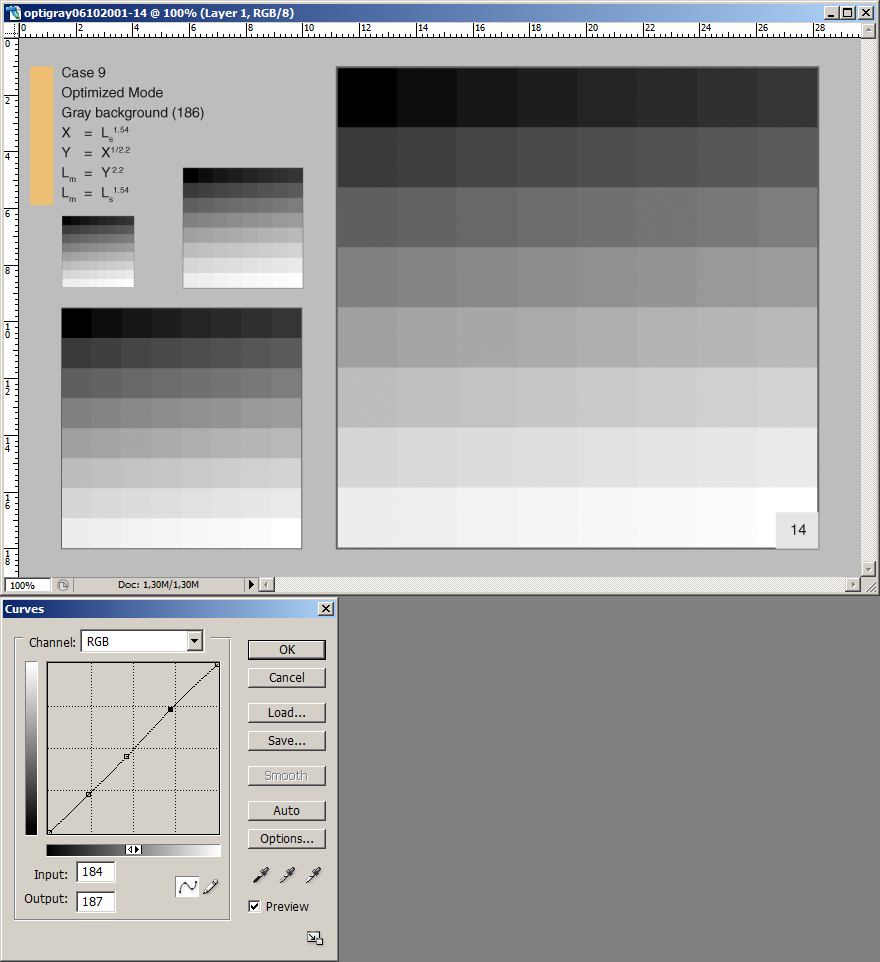

The numerical difference between the sRGB curve and a single gamma of 2.2 is actually quite significant in the shadows.

Using Photoshop you can find the differences between the shadow values with the sRGB gamma and the single gamma of 2.2 used by AdobeRGB (I know this is a crude approach but Photoshop tends to get the numbers right). In 8-bit mode, in order to obtain the same output as 5, 10 and 20 in sRGB gamma it requires 13, 18 and 27 respectively in 2.2 gamma. That's quite a difference IMO.
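These figures can be reproduced by decoding each sRGB value to luminance and re-encoding it with a single power law. A minimal sketch, assuming an exact exponent of 2.2 (the Adobe RGB profile stores 563/256 ≈ 2.199, which gives the same rounded results here) and round-to-nearest 8-bit output; `srgb_code_to_gamma22_code` is a hypothetical helper name.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def srgb_code_to_gamma22_code(code: int, gamma: float = 2.2) -> int:
    """8-bit value a pure power-law device needs to emit the same luminance as an sRGB code."""
    luminance = srgb_to_linear(code / 255)
    return round(255 * luminance ** (1 / gamma))


for code in (5, 10, 20, 30):
    print(code, "->", srgb_code_to_gamma22_code(code))
# 5 -> 13, 10 -> 18, 20 -> 27, 30 -> 35
```

The last pair matches the 30 to 35 figure quoted later in the thread.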

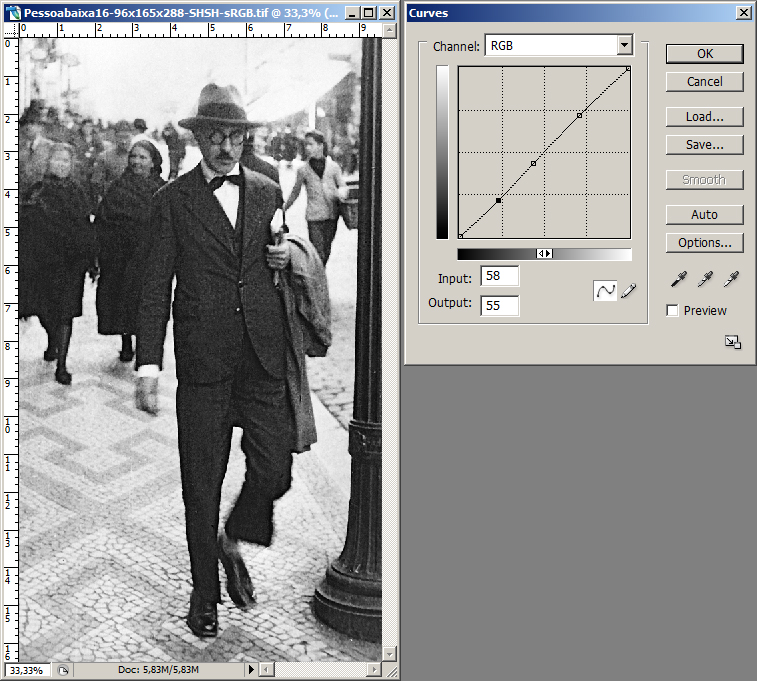

I could upload a couple of demonstration images but suggest you try it yourself. Create a monochrome (B&W) image in Photoshop in sRGB working space. Then assign (not convert) the profile to AdobeRGB (this assigns a single gamma of 2.2 to an image that is profiled to sRGB gamma). Note how the shadow areas get darker. This is exactly the effect I'm seeing with a monitor calibrated to a single gamma of 2.2 when using the standard sRGB profile – the monitor requires much larger shadow values than provided by sRGB.
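The Assign Profile experiment can also be simulated: assigning keeps the stored numbers and only changes how they are decoded, so each value is read through a pure 2.2 curve instead of the sRGB curve. A minimal sketch under that assumption, with no black point or rendering-intent effects modeled; `assigned_luminance_ratio` is a hypothetical helper.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def assigned_luminance_ratio(code: int, gamma: float = 2.2) -> float:
    """Luminance after reading an sRGB code through a pure power law, relative to the intended sRGB luminance."""
    v = code / 255
    return v ** gamma / srgb_to_linear(v)


for code in (10, 20, 50, 128, 200):
    print(f"sRGB {code:3d}: shown at {assigned_luminance_ratio(code):.0%} of intended luminance")
```

Deep shadows come out at a fraction of their intended luminance while the mid-tones end up very slightly lighter, consistent with the darker shadows described above.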

With a 'conventional' monitor you calibrate the computer to the monitor, rather than calibrate the monitor directly. When you do this of course you will need to characterise the monitor output and produce a custom profile for the sRGB gamut. This means non-colour managed applications still won't display correctly because the monitor isn't a pure sRGB device.

However, one of the reasons for having a monitor with internal 3D LUT calibration is surely that you can calibrate the monitor to a standard colour space, such as sRGB? You should then be able to treat the monitor as a pure sRGB device and use the standard sRGB profile. This means non-colour managed applications will display correctly. Of course, there'll always be a minor deviation from the ideal device, but nothing like what I'm seeing. It's clear from simulation with Photoshop where the problem lies: the monitor gamma is 2.2, not sRGB.

Palette Master Element doesn't even provide a characterisation profile following calibration to sRGB or AdobeRGB primaries (it does output a profile, but this is to native space!). My assumption is they expect you to use the standard sRGB/AdobeRGB profiles, but it’s absolutely hopeless asking Benq customer support!

Going back to my original question, would you expect a premium level monitor which has been hardware calibrated to sRGB to use a basic 2.2 gamma? Even the factory sRGB mode appears to be calibrated to basic 2.2 gamma. My wife’s modest Dell does a better job of displaying sRGB shadows, but then it is a dedicated sRGB monitor!

It's really not something I should be losing much sleep over, as I don't normally work in sRGB. I just noticed it when trying to sort out print calibrations and wanted to get to the bottom of it, in case it indicated a problem elsewhere. I'll just add it to the list of Benq/PME issues.

So you’re also calibrating white to 80 cd/m2, black to 0.2 cd/m2?

thedigitaldog wrote

So you’re also calibrating white to 80 cd/m2, black to 0.2 cd/m2?

I'm not sure how significant that will be. I'm actually calibrating to 100 cd/m2, and using the 'Absolute Zero' black point setting in the calibrator (using the 'Relative' tends to cause PME to 'do a wobbly'!).

I'm convinced the problem is the monitor sRGB gamma curve being set to 2.2, rather than 'adjusted 2.2'. What I observe stacks up with that. When displaying a B&W image, if I change the monitor between AdobeRGB and sRGB modes (without changing profiles) the image looks identical. This means they are using the same gamma curves, which is not correct. What should happen in sRGB mode is the monitor should become more sensitive to the shadow levels and they should look lighter (this would of course be compensated for if I switched to the sRGB profile). As it is, when I apply the sRGB profile to sRGB mode the shadows become too dark.

To my mind, if a monitor has 3D LUTs for internal calibration then, with the correct software, it should be possible to program it to properly emulate smaller colour spaces, such as sRGB, without the need for a non-standard profile. If it still requires use of a non-standard profile in order to display correctly then the monitor hasn't been properly calibrated IMO.

richardj21724418 wrote

thedigitaldog wrote

So you’re also calibrating white to 80 cd/m2, black to 0.2 cd/m2?

I'm not sure how significant that will be. I'm actually calibrating to 100 cd/m2, and using the 'Absolute Zero' black point setting in the calibrator (using the 'Relative' tends to cause PME to 'do a wobbly'!).

You wrote: However, one of the reasons for having a monitor with internal 3D LUT calibration is surely that you can calibrate the monitor to a standard colour space, such as sRGB?

You're not producing sRGB without those specific targets for cd/m^2. And a bit more:

thedigitaldog wrote

richardj21724418 wrote

thedigitaldog wrote

So you’re also calibrating white to 80 cd/m2, black to 0.2 cd/m2?

I'm not sure how significant that will be. I'm actually calibrating to 100 cd/m2, and using the 'Absolute Zero' black point setting in the calibrator (using the 'Relative' tends to cause PME to 'do a wobbly'!).

You wrote: However, one of the reasons for having a monitor with internal 3D LUT calibration is surely that you can calibrate the monitor to a standard colour space, such as sRGB?

You're not producing sRGB without those specific targets for cd/m^2. And a bit more:

Really, I don't think that is relevant here.

If I display a B&W image and switch between the factory-calibrated AdobeRGB and sRGB modes (with the same white/brightness/contrast settings and without changing display profile), I can see no change in the shadows, or in the image at all, come to that.

Is that what you'd expect, given that AdobeRGB and sRGB are supposed to have different gamma curves?

richardj21724418 wrote

thedigitaldog wrote

richardj21724418 wrote

thedigitaldog wrote

So you’re also calibrating white to 80 cd/m2, black to 0.2 cd/m2?

I'm not sure how significant that will be. I'm actually calibrating to 100 cd/m2, and using the 'Absolute Zero' black point setting in the calibrator (using the 'Relative' tends to cause PME to 'do a wobbly'!).

You wrote: However, one of the reasons for having a monitor with internal 3D LUT calibration is surely that you can calibrate the monitor to a standard colour space, such as sRGB?

You're not producing sRGB without those specific targets for cd/m^2. And a bit more:

Really, I don't think that is relevant here.

It's only relevant IF you believe you're calibrating to sRGB.

As to the differences you see, as I stated, it's not supposed to work that way with proper ICC color management. The TRC or Gamma (and they are NOT the same) you calibrate a display for is compensated using Display Using Monitor Compensation, which is why we can use differing working spaces, with differing gamma or TRC encoding and it doesn't at all have to match the display response. It's why for some, calibrating to a Native Gamma is useful.

thedigitaldog wrote

As to the differences you see, as I stated, it's not supposed to work that way with proper ICC color management. The TRC or Gamma (and they are NOT the same) you calibrate a display for is compensated using Display Using Monitor Compensation, which is why we can use differing working spaces, with differing gamma or TRC encoding and it doesn't at all have to match the display response. It's why for some, calibrating to a Native Gamma is useful.

That's the way it works with a device which hasn't been calibrated to a standard colour space, e.g. a typical monitor (one without internal calibration capability). Then you need fiddle factors, like a custom profile, specific to that device.

The point about wide gamut monitors with internal calibration is that they should be able to be configured to emulate other colour spaces, provided they fall within their native gamut. Typically this is achieved using 3D LUTs. These are doing more than simply replacing the 1D LUT in the GPUs. They perform the colour space conversion from the desired colour input space to native monitor space. If done correctly, it means the monitor can be configured to behave as a pure sRGB device. If profiled it would be as close as possible to the sRGB standard.
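The emulation described here can be sketched as a per-channel decode, a 3x3 primaries conversion through XYZ, and a per-channel encode for the panel. This is a simplified model, not BenQ's implementation: it assumes D65 white for both spaces, uses the published sRGB and Adobe RGB (1998) matrices with Adobe RGB standing in for a wide-gamut panel's native primaries, and assumes a hypothetical panel with a pure 2.2 response. The `decode` parameter is where an sRGB mode either follows the sRGB curve or, as suspected in this thread, a plain 2.2 power law.

```python
from typing import Callable

Matrix = list[list[float]]

# Linear RGB -> XYZ (D65) for sRGB (IEC 61966-2-1) and Adobe RGB (1998).
SRGB_TO_XYZ: Matrix = [[0.4124, 0.3576, 0.1805],
                       [0.2126, 0.7152, 0.0722],
                       [0.0193, 0.1192, 0.9505]]
ADOBE_TO_XYZ: Matrix = [[0.57667, 0.18556, 0.18823],
                        [0.29734, 0.62736, 0.07529],
                        [0.02703, 0.07069, 0.99134]]


def mat_vec(m: Matrix, v: list[float]) -> list[float]:
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def inverse3(m: Matrix) -> Matrix:
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


XYZ_TO_PANEL = inverse3(ADOBE_TO_XYZ)  # stand-in for the panel's native primaries


def srgb_to_linear(v: float) -> float:
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def emulate(rgb: tuple[float, float, float],
            decode: Callable[[float], float] = srgb_to_linear,
            panel_gamma: float = 2.2) -> list[float]:
    """Map one normalized input triple to panel drive values (what one 3D LUT entry would hold)."""
    linear_in = [decode(ch) for ch in rgb]
    xyz = mat_vec(SRGB_TO_XYZ, linear_in)
    # Clip out-of-gamut results before applying the inverse of the panel response.
    linear_panel = [min(max(ch, 0.0), 1.0) for ch in mat_vec(XYZ_TO_PANEL, xyz)]
    return [ch ** (1 / panel_gamma) for ch in linear_panel]


# A monitor would sample this function on a coarse grid and interpolate between entries.
GRID = [i / 8 for i in range(9)]
lut = {(r, g, b): emulate((r, g, b)) for r in GRID for g in GRID for b in GRID}

dark_grey = (0.04, 0.04, 0.04)
print("sRGB decode:    ", [round(x, 4) for x in emulate(dark_grey)])
print("pure 2.2 decode:", [round(x, 4) for x in emulate(dark_grey, decode=lambda v: v ** 2.2)])
```

For neutral input the two matrices essentially cancel, so a B&W image is affected only by the decode and encode curves: with a pure 2.2 decode and a 2.2 panel the grey value passes straight through, which would make a B&W image look the same in sRGB and AdobeRGB modes.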

When a monitor is correctly calibrated to AdobeRGB it will be set up with a 2.2 gamma response curve. When a monitor is correctly calibrated to sRGB it will be set up with the adjusted 2.2 gamma response curve. If you switch the monitor between AdobeRGB and sRGB modes whilst maintaining the same B&W input, it should be possible to see the difference between the response curves. With the Benq there is no discernible difference between the modes: the sRGB mode has been set up with the same 2.2 gamma curve. This is why the shadows are too dark when I apply the sRGB profile to the input data.

It seems such a shame to have these lovely internal 3D LUTs and not do the job properly. I can't imagine NEC and Eizo getting it wrong.

BTW, I'm not saying there's no ICC colour management. There is, just the monitor has been calibrated to sRGB and therefore the profile to use is the sRGB standard.

Unfortunately though the Benq does not calibrate to proper sRGB space, because the TRC is wrong. To correct this would require non-linear TRCs to be written into the GPU LUTs, which defeats the object of having internal hardware calibration.
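The GPU-side correction described here amounts to a 1D lookup table that re-encodes each sRGB value for a panel that actually follows a pure 2.2 curve. A minimal sketch under that assumption (`correction_lut` is a hypothetical helper, and real calibration software also handles per-channel grey balance); it also shows why the precision of the LUT output matters.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def correction_lut(out_bits: int, panel_gamma: float = 2.2) -> list[int]:
    """For each 8-bit sRGB input, the drive level a pure power-law panel needs to show the sRGB luminance."""
    top = 2 ** out_bits - 1
    return [round(top * srgb_to_linear(code / 255) ** (1 / panel_gamma)) for code in range(256)]


lut8 = correction_lut(8)
lut10 = correction_lut(10)
print("8-bit entries for inputs 5, 10, 20:", lut8[5], lut8[10], lut8[20])
print("distinct output levels:", len(set(lut8)), "with 8-bit output,", len(set(lut10)), "with 10-bit output")
```

Any monotonic 256-to-256 table other than the identity must merge some input levels, so the 8-bit version loses levels, whereas the 10-bit version keeps all 256 inputs distinct.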

A-ha! I've found a work around.

I've tweaked the TRCs in the sRGB Color Space Profile file to be the single 2.199219 gamma found in AdobeRGB1998. sRGB mode looks a whole lot better now.
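In an ICC profile a single-gamma tone curve is stored as a `curv` tag with one entry, a u8Fixed8Number (8 integer bits, 8 fractional bits), which is why 2.2 ends up as 563/256 ≈ 2.199219. A minimal sketch of that encoding with Python's standard `struct` module; the helper names are hypothetical, and patching a real profile also requires keeping the tag table offsets and sizes consistent, which is not shown.

```python
import struct


def encode_gamma_curv(gamma: float) -> bytes:
    """Build an ICC curveType ('curv') tag holding a single gamma value."""
    fixed = round(gamma * 256)  # u8Fixed8Number: value * 256, stored as a uInt16
    if not 0 <= fixed <= 0xFFFF:
        raise ValueError("gamma is outside the u8Fixed8Number range")
    # Big-endian: signature, 4 reserved zero bytes, entry count (uInt32), one uInt16 entry.
    return b"curv" + bytes(4) + struct.pack(">IH", 1, fixed)


def decode_gamma_curv(tag: bytes) -> float:
    """Read back the gamma from a single-entry 'curv' tag."""
    (count,) = struct.unpack(">I", tag[8:12])
    if tag[:4] != b"curv" or count != 1:
        raise ValueError("not a single-gamma curv tag")
    (fixed,) = struct.unpack(">H", tag[12:14])
    return fixed / 256


tag = encode_gamma_curv(2.2)
print(tag.hex(), f"{decode_gamma_curv(tag):.6f}")
# 6375727600000000000000010233 2.199219
```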

If I can't convince the brains here that this was the problem, there's absolutely no point in me trying to tell BenQ!

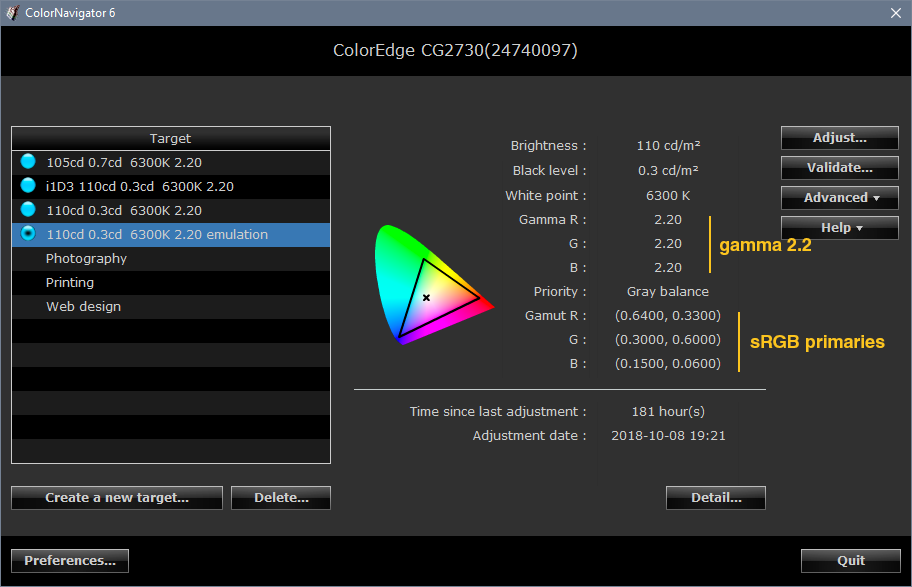

In theory you're right. In practice the sRGB emulation is setting the primaries very accurately, but doesn't bother with the tone curve. And honestly - why should it? It doesn't really matter in a color managed environment anyway. This is all remapped from one to the other and any differences in TRC are invisible to the user.

The "black point compensation" kicks in long before the sRGB black toe. Defined as Lab values, the monitor black point never goes that deep, nowhere near where sRGB levels out.

Eizo ColorNavigator does the same thing. The sRGB emulation is strictly about setting the primaries.

If you have inconsistencies between monitor presets, this isn't the reason. It has to be other bugs in the BenQ software.

https://forums.adobe.com/people/D+Fosse wrote

In theory you're right. In practice the sRGB emulation is setting the primaries very accurately, but doesn't bother with the tone curve. And honestly - why should it? It doesn't really matter in a color managed environment anyway.

I've tried to express that fact...

I don't get this hang up about the "color managed environment".

With a monitor with internal hardware calibration it's still a color managed environment. Properly implemented it's the same structure, just the colour space conversion and TRC LUTs are moved out of the computer and into the monitor. What's the problem with that? The calibration/characterisation process is directed to changing parameters in the monitor, rather than the computer, but the outcome should be the same. I don't believe ICC specify where the colour space conversion and TRC should physically take place. It really shouldn't matter.

Of course, if it's poorly implemented you may be able to improve results by inserting yet another layer of conversion and TRC LUTs, but it really shouldn't be necessary. Would you argue you should have two stages of profile conversion and TRC LUTs in the computer; the first one for one calibration device, followed by a second for a superior calibration device? No, it's nonsense!

What's the conceptual difficulty with hardware calibration? Suppose early monitors had been done that way, and now, in order to save cost, manufacturers came out with uncalibrated monitors and said it could all be done in the PC. I guess there'd be people arguing that wasn't right!

richardj21724418 wrote

I don't get this hang up about the "color managed environment".

By that I mean any color managed process, any color managed display chain from document profile into monitor profile.

The calibration LUT doesn't come into this, it's not a factor, whether it's done in the video card or the monitor LUT. This is a remapping from profile to profile.

In a non-color managed display chain you will see a different shadow response - but it's not the sRGB black toe you're seeing, it's the native dip in shadow response that all LCDs have. This has a much bigger impact, and is much more important to correct in the monitor LUT. To correctly emulate a standard color space like sRGB this dip has to be corrected in the monitor LUT.

The other point I'm trying to make is that monitor black is never deep enough for the sRGB linear black toe to even become a consideration. No monitor can display RGB 0-0-0. The native black point is much higher. The monitor can't display pure black.

So that's where "Black Point Compensation" comes in. Instead of just cutting off near black, which would result in visual black clipping on screen, the curve is gently tapered off to monitor black point. This retains full shadow separation on screen, all the way down.
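The tapering described here can be approximated by a linear rescale of luminance so that source black lands on the monitor's black instead of being clipped. This is a simplified, luminance-only sketch (Adobe's black point compensation works on all three XYZ components); the 0.2 cd/m² black and 100 cd/m² white are taken from earlier in this thread and are assumptions about this particular monitor, and `compensate_black` is a hypothetical helper.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def compensate_black(y: float, src_black: float, dst_black: float) -> float:
    """Linearly rescale relative luminance (white = 1) so src_black maps to dst_black."""
    return dst_black + (y - src_black) * (1 - dst_black) / (1 - src_black)


MONITOR_BLACK = 0.2 / 100        # assumed: 0.2 cd/m^2 black over 100 cd/m^2 white
SRGB_TOE_END = 0.04045 / 12.92   # luminance where the sRGB linear segment ends

for code in (0, 5, 10, 30):
    y = srgb_to_linear(code / 255)
    shown = compensate_black(y, 0.0, MONITOR_BLACK)
    print(f"sRGB {code:2d}: Y {y:.5f} -> displayed {shown:.5f} ({shown / MONITOR_BLACK:.2f}x monitor black)")
print(f"end of sRGB linear segment: Y {SRGB_TOE_END:.5f}")
```

Every level keeps its own luminance above monitor black, so shadow separation survives; comparing the last line with `MONITOR_BLACK` shows where the sRGB linear segment sits relative to the black level of a given panel.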

But this is much higher than the sRGB flat linear section.

https://forums.adobe.com/people/D+Fosse wrote

richardj21724418 wrote

I don't get this hang up about the "color managed environment".

By that I mean any color managed process, any color managed display chain from document profile into monitor profile.

I get that, but I don't see that it makes a difference if you remove the monitor profile conversion from the PC and place it in the monitor. Done properly, it should amount to the same thing. Arguably it's better placed in the monitor, as you don't have the bottleneck of the 8-bit/10-bit link.

https://forums.adobe.com/people/D+Fosse wrote

The other point I'm trying to make is that monitor black is never deep enough for the sRGB linear black toe to even become a consideration. No monitor can display RGB 0-0-0. The native black point is much higher. The monitor can't display pure black.

So that's where "Black Point Compensation" comes in. Instead of just cutting off near black, which would result in visual black clipping on screen, the curve is gently tapered off to monitor black point. This retains full shadow separation on screen, all the way down.

But this is much higher than the sRGB flat linear section.

Correct me if I'm wrong but I believe the sRGB linear section extends to between 10 and 11 in terms of 8-bit values. However, the linear section still influences the rest of the tonal curve, which I believe requires a gamma of about 2.4 in order to 'catch up'. This means it affects all tonal levels, not just the shadows (although there is a crossover point, perhaps around 100 - I'll let you do the maths!).
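Both figures in this paragraph can be computed directly from the sRGB formula. A sketch assuming IEC 61966-2-1 decoding and a pure 2.2 comparison curve: it finds where the linear segment ends in 8-bit terms, and the first 8-bit value at which the sRGB curve stops being lighter than pure 2.2.

```python
def srgb_to_linear(v: float) -> float:
    """IEC 61966-2-1 sRGB decoding: linear toe, then offset power 2.4."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


toe_end_code = 0.04045 * 255
# First code above black at which sRGB luminance is no longer above the pure 2.2 curve.
crossover = next(c for c in range(1, 256) if srgb_to_linear(c / 255) <= (c / 255) ** 2.2)

print(f"linear segment ends at 8-bit {toe_end_code:.2f}")
print(f"sRGB is lighter than pure 2.2 below 8-bit {crossover}, darker or equal from there up")
```

On these assumptions the linear segment ends just above 10 and the curves cross at roughly 100, in line with the estimates in the post.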

For instance, an 8-bit sRGB of 30 is about 35 with a pure 2.2 gamma curve. I think you'd agree, sRGB 30 is sufficiently above 'monitor black' to be plainly visible. In order to obtain the same output with a monitor setup with a pure 2.2 gamma curve I'd need to supply it with a value of 35. The difference is very apparent. It not only affects the shadows but also the mid range contrast (because at that point sRGB gamma is 2.4, rather than the flatter 2.2). Rather than theorise about it ad infinitum, simply try it! Emulate it in Photoshop.

I guess I've the 'luxury' of having a monitor incorrectly calibrated to sRGB with a pure 2.2 gamma curve. It's therefore easier for me to investigate the issue and experiment. From what I've observed I'm convinced the monitor has been setup with the same 2.2 gamma curve for sRGB mode as for AdobeRGB. It stacks up with switching between modes. It stacks up with Photoshop simulation. It stacks up with nobbling the sRGB standard profile and changing the TRC to a single 2.2 gamma curve. On the basis of the evidence I'm sure a jury would convict, probably unanimously!

richardj21724418 wrote

Correct me if I'm wrong but I believe the sRGB linear section extends to between 10 and 11 in terms of 8-bit values.

No way. Without recalling exactly where it is - that's not possible. The flat section is a clipping point. All information stops there!

Quite honestly, you're chasing a red herring, barking up the wrong tree, whatever analogy you choose. This is simply not important or even relevant.

With display color management any differences in tone response curves are remapped. You don't see them! The net result that you see on screen is gamma 1.0. You can work with ProPhoto at gamma 1.8, and a monitor calibrated to 2.2, and you don't see that difference because it's remapped - by the profile, not the calibration. The same with the sRGB curve.
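The remapping described here can be sketched as three steps: decode the document value with the document's tone curve, encode the resulting luminance with the inverse of the curve the monitor profile describes, and let the panel apply its real response. A minimal model, assuming pure power laws for ProPhoto (1.8) and the panel (2.2) and ignoring quantization and black point handling; all function names are hypothetical. The second case shows the condition the remapping depends on: the monitor profile has to describe the panel's actual response.

```python
from typing import Callable

Curve = Callable[[float], float]


def srgb_to_linear(v: float) -> float:
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(y: float) -> float:
    if y <= 0.0031308:
        return 12.92 * y
    return 1.055 * y ** (1 / 2.4) - 0.055


def prophoto_decode(v: float) -> float:
    return v ** 1.8          # document TRC (simplified ProPhoto RGB)


def panel_22(m: float) -> float:
    return m ** 2.2          # assumed actual panel response


def profile_22_encode(y: float) -> float:
    return y ** (1 / 2.2)    # monitor profile that matches the panel


def displayed_luminance(v: float, doc_decode: Curve, profile_encode: Curve, panel: Curve) -> float:
    """Document value -> intended luminance -> monitor drive value -> light from the panel."""
    return panel(profile_encode(doc_decode(v)))


for code in (10, 64, 128):
    v = code / 255
    shown = displayed_luminance(v, prophoto_decode, profile_22_encode, panel_22)
    print(f"ProPhoto {code:3d}: intended {prophoto_decode(v):.5f}, displayed {shown:.5f}")

# Mismatch: the profile claims an sRGB response, but the panel follows pure 2.2.
mismatched = displayed_luminance(10 / 255, srgb_to_linear, linear_to_srgb, panel_22)
print(f"sRGB 10, sRGB profile, 2.2 panel: intended {srgb_to_linear(10 / 255):.5f}, displayed {mismatched:.5f}")
```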

Without color management then yes, there is a visual difference - but there's a bunch of tone curve irregularities, inherent in any LCD, that far outweigh it. Without color management all this is truly irrelevant anyway.

I feel you have a problem in your BenQ software, and you think you have found the explanation for it. But you haven't. Whatever it is, it's something else. In short, you're wasting energy on this. You should be looking elsewhere.

https://forums.adobe.com/people/D+Fosse wrote

richardj21724418 wrote

Correct me if I'm wrong but I believe the sRGB linear section extends to between 10 and 11 in terms of 8-bit values.

No way. Without recalling exactly where it is - that's not possible. The flat section is a clipping point. All information stops there!

Are you suggesting the linear section doesn't extend to levels 10 to 11? If so, why does it need a gamma of 2.4 in order to 'catch up'?

Or are you suggesting it's not possible to observe the difference between levels 0 and 5, and between 5 and 10, on an sRGB screen? Hmm!

Come on, just emulate it in Photoshop. Create a B&W image in sRGB space. Then assign the profile to AdobeRGB (which has a single gamma of 2.2). It's obvious the TRCs are very different. It's not just in the 'clipping point'!

https://forums.adobe.com/people/D+Fosse wrote

With display color management any differences in tone response curves are remapped. You don't see them! The net result that you see on screen is gamma 1.0. You can work with ProPhoto at gamma 1.8, and a monitor calibrated to 2.2, and you don't see that difference because it's remapped. The same with the sRGB curve.

I feel you have a problem in your BenQ software, and you think you have found the explanation for it. But you haven't. Whatever it is, it's something else. In short, you're wasting energy on this. You should be looking elsewhere.

You appear to think that all monitors should be treated as uncalibrated devices and that colour management can only be performed within the PC, by a combination of device profiles and, in the case of a monitor, TRCs contained in the GPU LUTs. Why then do some monitors offer internal hardware calibration and colour space emulation modes? What's the point?

Yes, I do feel there's a problem with the BenQ. It's not just the user calibration software though (which uses Palette Master Element) but it's also the factory calibration of the sRGB mode, which doesn't appear to support the sRGB TRC. I believe there are several issues with PME.

I think you're right about wasting energy on it though. I was originally looking for an answer as to whether it seemed acceptable for a premium grade hardware calibrated monitor to be using a single 2.2 gamma curve for its sRGB mode. It seems you think it is. I don't. I guess we'll just have to disagree on that!

Yes, I know the TRCs are different and that sRGB does not follow a straight gamma 2.2.

What I'm saying is that this is not the reason for whatever problems you're seeing. This will not cause any visual tonal shifts on screen, as long as you have color management and profiles to correct for it. If you see tonal shifts, color management isn't working as it should.

The sRGB specification was originally made to describe the native response of CRT monitors. That's the whole purpose of sRGB. The linear section was put in to avoid some mathematical problem, not to compensate for the rest of the curve. The spec was a pretty good match back then, less so today because LCDs behave somewhat differently.
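One commonly cited reason for the linear segment is that a pure power-law encoding has an unbounded slope at black, so tiny luminance differences near zero become very large code differences. The sketch below, assuming the IEC 61966-2-1 encoding formula and a pure 2.4 power law for comparison, estimates both slopes with a simple forward difference; `slope` and `pure_power_encode` are hypothetical helpers.

```python
from typing import Callable


def linear_to_srgb(y: float) -> float:
    """IEC 61966-2-1 sRGB encoding: slope 12.92 near black, offset power above."""
    if y <= 0.0031308:
        return 12.92 * y
    return 1.055 * y ** (1 / 2.4) - 0.055


def pure_power_encode(y: float, gamma: float = 2.4) -> float:
    return y ** (1 / gamma)


def slope(f: Callable[[float], float], y: float, h: float = 1e-9) -> float:
    """Forward-difference estimate of df/dy."""
    return (f(y + h) - f(y)) / h


for y in (1e-2, 1e-3, 1e-4, 1e-6):
    print(f"Y={y:g}: sRGB slope {slope(linear_to_srgb, y):8.2f}, "
          f"pure power slope {slope(pure_power_encode, y):9.2f}")
```

The sRGB slope never exceeds 12.92, while the pure power law's slope keeps growing as luminance approaches zero.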

https://forums.adobe.com/people/D+Fosse wrote

What I'm saying is that this is not the reason for whatever problems you're seeing. This will not cause any visual tonal shifts on screen, as long as you have color management and profiles to correct for it. You're wasting energy here, you need to use it elsewhere.

What I'm saying is, if a monitor is calibrated to sRGB, then it should conform to the sRGB profile and comply with the sRGB response curve. The monitor profile you use for colour management should then be the standard sRGB profile and there should be no need for anything other than linear TRC LUTs in the GPU. If it requires a non standard monitor profile and different TRC LUTs, the monitor hasn't been calibrated to sRGB.

PME doesn't even produce a valid ICC profile file for the monitor if it's been calibrated to sRGB. So what are you supposed to use, other than standard sRGB?

Of course, it would be possible to hardware 'calibrate' the monitor to sRGB then calibrate the PC to the monitor in the usual way, but then what's the point of hardware calibration? Obviously it would be beneficial here because the TRC of the monitor has been incorrectly set to unmodified 2.2 gamma. This means a non-linear TRC will have to be written into the GPU LUTs in order to comply with sRGB. That clearly goes against the idea of having a hardware calibrated monitor. Surely you'd agree? Otherwise, what is the point of 'hardware calibration'?

richardj21724418 wrote

What I'm saying is, if a monitor is calibrated to sRGB, then it should conform to the sRGB profile and comply with the sRGB response curve.

Well, maybe, but again, there's no need. You'd make very complicated tables in the monitor LUT for no benefit whatsoever. All you achieve is to make life so difficult for the monitor that it starts producing artifacts.

The profiles will handle this, whatever the discrepancies. There is no reason the calibration tables should do it, when the profile achieves the same goal with much less effort.

What you're asking is to make the monitor a perfect sRGB device. That's hard work. No monitor ever was that, nor does it have to be. Color management picks up where the calibration leaves off.