Re: Why are javascripts so slow in Illustrato...


I have now written over 15 JavaScripts for Illustrator and have found that when a script repeats a command many times in a loop, it is very slow. The script below is available to copy and try.

Executing a command in a loop 2500 times takes:

- Illustrator: 4.1 seconds
- InDesign: 4.7 seconds
- QuarkXPress: 0.4 seconds
- Safari: 0.1 seconds

QUESTION:

Is there anything that can be done for Adobe Illustrator and InDesign to increase the speed by a factor of 10?

The script:

```javascript
const cDauer = 500;
var vText = "";
var vLength = 0;
var vStart = new Date().getTime();

for (var i = 0; i < cDauer; i++) {
    for (var j = 0; j < cDauer; j++) {
        vText = vText + "a";
        vLength = vText.length;
    }
}

var vEnde = new Date().getTime();
alert("End. Duration = " + (vEnde - vStart) / 1000 + " Seconds.");

/* cDauer = 500 */
/* JavaScript (.js) in AI = 4.124 seconds. */
/* JavaScript (.jsx) in AI = 4.258 seconds. */
/* JavaScript (.js) in IND = 4.766 seconds. */
/* JavaScript (.jsx) in IND = 4.62 seconds. */
/* JavaScript (.js) in QXP = 0.04 seconds. */

/* cDauer = 2000 */
/* HTML in 0.295 seconds. */
/* LiveCode 9.6 interpreter in 0.124 */
/* LiveCode 9.6 compiler in 0.126 */
```


I found a solution myself:

Instead of the for loop, I tried a while loop:

```javascript
const cDauer = 2000;
var vText = "";
var vLength = 0;
var vStart = new Date().getTime();
var i = 0;
var j = 0;

while (i < cDauer) {
    while (j < cDauer) {
        vText = vText + "a";
        vLength = vText.length;
        j++;
    }
    i++;
}

var vEnde = new Date().getTime();
alert("End. Duration = " + (vEnde - vStart) / 1000 + " Seconds.");
```

Duration = 0.006 seconds. That's much faster than with the for loop.


That’s faster because it’s only doing a fraction of the work: the inner loop runs only during the first pass of the outer loop. After that, j already equals cDauer, so the inner loop never runs again. I believe what you meant is:

```javascript
while (i < cDauer) {
    var j = 0;
    while (j < cDauer) {
        vText = vText + "a";
        vLength = vText.length;
        j++;
    }
    i++;
}
```

That resets the j counter each time the outer loop repeats, same as in your first script.
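A quick way to confirm this without a stopwatch is to count how often the inner body actually runs. A minimal sketch; `countBuggy` and `countFixed` are hypothetical helper names, and the loop structure mirrors the two while-loop versions above:

```javascript
// Version without the reset: j is initialised once, outside both loops.
function countBuggy(n) {
    var count = 0;
    var i = 0;
    var j = 0;
    while (i < n) {
        while (j < n) {      // after the first outer pass j == n, so this never runs again
            count++;
            j++;
        }
        i++;
    }
    return count;
}

// Version with the reset: j goes back to 0 on every outer pass.
function countFixed(n) {
    var count = 0;
    var i = 0;
    while (i < n) {
        var j = 0;
        while (j < n) {
            count++;
            j++;
        }
        i++;
    }
    return count;
}
```

With n = 2000, `countBuggy` returns 2000 and `countFixed` returns 4000000, so the while-loop version did 2,000 appends instead of 4,000,000, which is why its timing looked so small.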


OMG. I noticed that myself: the j = 0 before the inner loop is missing. Thanks for pointing it out.


I doubt it has anything to do with the loop itself, and everything to do with the general performance of different JavaScript interpreters. Recent versions of Illustrator use an ancient (20-year-old) JS interpreter. Recent versions of QXP probably use a modern JS interpreter. Comparing JS interpreters of different vintages by different vendors is a fairly pointless exercise. The last 20 years have seen massive investments by JS vendors to improve their interpreters’ performance, so we’d naturally expect newer JS engines to be faster at running the same script.

As a separate point, the algorithm in your script is well known to be pathological in a naive (non-optimizing) interpreter: repeatedly allocating memory to hold a given string, then allocating a larger chunk of memory to hold both that string and the string being appended to it, then copying both of those strings to the new memory, and finally releasing the previous memory when it’s no longer needed. The longer the concatenated string grows, the more memory needs to be copied each time, and the longer the script takes to do its work.

(BTW, an optimizing interpreter might recognize this repeating pattern, allocate more than enough memory to hold the initial string with plenty of room to spare, then copy the appended strings into the extra space at the end of that, thus reducing the amount of memory copying done for the same amount of work. I’ve no idea if any JS interpreters do this: you’d have to ask the people who write them.)
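The over-allocation idea above can be modelled with a toy buffer that counts the characters it copies. This is a sketch under stated assumptions: `GrowableBuffer` and `naiveConcatCopies` are hypothetical names, the capacity-doubling policy is just one common growth strategy picked for illustration, and nothing here claims to describe how any real JS engine stores strings.

```javascript
// Hypothetical toy model: a buffer that over-allocates (doubling its capacity)
// and counts every character it copies, to compare with naive concatenation.
function GrowableBuffer() {
    this.capacity = 16;
    this.length = 0;
    this.chars = new Array(this.capacity);
    this.copied = 0;                              // total characters copied so far
}

GrowableBuffer.prototype.append = function (s) {
    if (this.length + s.length > this.capacity) {
        // Out of room: allocate at least double the space, copy existing contents once
        var newCapacity = this.capacity * 2;
        while (newCapacity < this.length + s.length) newCapacity *= 2;
        var bigger = new Array(newCapacity);
        for (var k = 0; k < this.length; k++) bigger[k] = this.chars[k];
        this.copied += this.length;
        this.chars = bigger;
        this.capacity = newCapacity;
    }
    // Only the appended characters are written into the spare space at the end
    for (var m = 0; m < s.length; m++) this.chars[this.length + m] = s.charAt(m);
    this.length += s.length;
    this.copied += s.length;
};

GrowableBuffer.prototype.toString = function () {
    return this.chars.slice(0, this.length).join("");
};

// Characters copied by naive concatenation of n one-character strings: 1 + 2 + ... + n
function naiveConcatCopies(n) {
    return n * (n + 1) / 2;
}
```

Appending 100,000 one-character strings to this toy buffer copies 231,056 characters in total (every append plus the occasional resize), while `naiveConcatCopies(100000)` gives 5,000,050,000. Doubling keeps the resize copies below the final capacity, so the total stays roughly proportional to the string length.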

At any rate, the simplest way to improve performance is not to append to a string within the loop, but to append to an array and join that array once the loop is done. That reduces the amount of memory copying so your script runs faster:

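```javascript
// Collect the pieces in an array (cDauer as defined in the original script)...
var tempArray = [];
for (var j = 0; j < cDauer; j++) {
    tempArray.push("a");
}

// ...then build the final string with a single join once the loop is done
var vText = tempArray.join("");
var vLength = vText.length;
```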


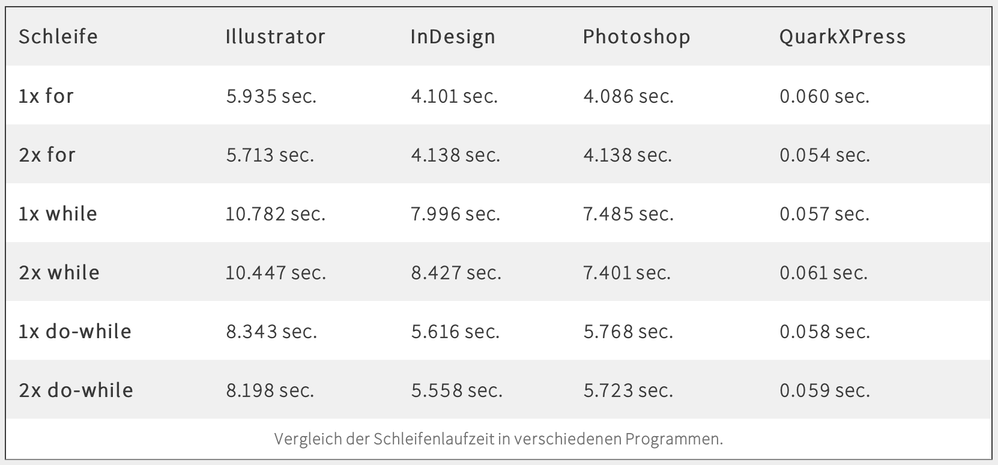

Thanks for the tip on building the strings another way. But my point is different: the problem is the speed of JavaScript in Illustrator, InDesign and Photoshop, which can be demonstrated with loops:

In this table you can see that the Adobe apps are 100 to 200% slower than QuarkXPress.

You can download the six JavaScripts to test them yourself: Download as ZIP

The complete German article about this can be found on my website:

http://www.computergrafik-know-how.de/javascript-schleifenoptimierung-fuer-illustrator-2022/

And again my question: is there a trick or a compiler to get more speed out of JavaScript?

Have a good weekend, jens.


Once more: you are comparing chalk with cheese. There is absolutely no reason to suppose a 20-year-old JavaScript interpreter will perform as well as a modern JavaScript interpreter that by now has had 20 years and probably a million-plus man-hours invested in making it go faster.

In any case, you are really measuring the wrong thing. If you want meaningful information, you should compare how the same interpreter performs for different amounts of data. For example, Illustrator’s 20-year-old JavaScript engine:

```javascript
function test(samples) {
    var res = ["RESULTS:"]
    var n = 1000
    while (samples > 0) {
        var t1 = Date.now()
        var s = ""
        for (var i = 0; i < n; i++) {
            s += "a"
        }
        var t2 = Date.now()
        res.push(s.length + "a = " + (t2 - t1) + "ms")
        n *= 10
        samples--
    }
    alert(res.join("\n"))
}

var samples = 4
test(samples)

/*
RESULTS:
1000a = 0ms
10000a = 8ms
100000a = 682ms
1000000a = 109171ms
*/
```

(I only ran 4 samples there; I doubt a 5th would ever finish.)

Compare a modern Node.js:

```javascript
#!/usr/bin/env node
function alert(s) { console.log(s) }

function test(samples) {
    ... // same as above
}

var samples = 5
test(samples)

/*
RESULTS:
1000a = 0ms
10000a = 2ms
100000a = 17ms
1000000a = 173ms
10000000a = 1431ms
*/
```

(I was able to get a 5th sample under Node, though even it crashed with a memory error when I attempted 6.)

If you can’t already see the key difference between those two sets of numbers, then go plot them both on paper. It is the shapes of those graphs that are significant. With the old JS engine, the time to complete the task increases quadratically (i.e. it rapidly goes through the roof). With a new JS engine, it increases remarkably linearly, which is no small achievement on such a pathological piece of code. What’s important is not measuring a script’s raw speed, but measuring its algorithmic efficiency. Inefficient algorithms will make even the fastest computers run like a slug when fed a non-trivial volume of data to process.
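The shape of those curves can also be checked numerically, without plotting: divide each timing by the previous one. Each sample multiplies the input by 10, so a ratio near 10 suggests linear growth and a ratio near 100 suggests quadratic growth. A small sketch using only the numbers reported above (`growthRatios` is a hypothetical helper; the 0 ms first samples are left out because they can't be divided by):

```javascript
// Timings reported above, in ms, starting from the 10,000-character sample.
var extendScriptMs = [8, 682, 109171];    // Illustrator's ExtendScript engine
var nodeMs = [2, 17, 173, 1431];          // modern Node.js

// Ratio of each timing to the previous one, rounded to 1 decimal place.
function growthRatios(timings) {
    var ratios = [];
    for (var k = 1; k < timings.length; k++) {
        ratios.push(Math.round(timings[k] / timings[k - 1] * 10) / 10);
    }
    return ratios;
}

// growthRatios(extendScriptMs) -> [85.3, 160.1]
// growthRatios(nodeMs)         -> [8.5, 10.2, 8.3]
```

For a tenfold increase in input, the old engine's time grows roughly 85 to 160 times (quadratic or worse), while Node.js stays close to 10 times (roughly linear).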

The modern interpreter appears to recognize the inefficient algorithm used by your JS code and optimizes, under the hood, the way it evaluates it, radically improving its performance. The old interpreter, by contrast, is a simple naive implementation that evaluates the inefficient JS code exactly as it’s written. (As I noted previously, the modern optimizing interpreter is most likely reducing the number of low-level memory allocations and memory copies it needs to perform to hold these growing strings.)

…

If you really want to understand this stuff, go read up on Big-O notation and how different algorithms can have radically different performance profiles while performing the same job.

As an automator though, all of this deep Computer Science stuff may be moot anyway, as your most important question should not be: “How fast does my script complete the job?”, but “Does my script complete the job significantly faster (and more accurately) than doing the same job by hand?”

If your script takes 5 minutes to run but replaces an error-prone manual process that previously took half an hour, who cares if it’s not running on the newest, most optimized JavaScript engine? Go make a cup of tea while you’re waiting for your computer to finish if you’re bored. Or, if it’s performing a daily production task, the salary hours it saves you each year should easily pay for a second machine to run the script on.

And if your script does take half an hour to run, then either it’s doing a helluva lot of valuable production work or you’ve got a horribly inefficient algorithm running somewhere in it, in which case you need to performance-profile your code under different levels of load to pinpoint where its critical inefficiencies lie. My first major script took over 10 minutes to run a standard job; the update that replaced it a year later, less than 1 minute. Not because the computer got any faster, but because I spent the year in between learning about speed vs efficiency, common CS algorithms, Big-O notation, and so on. (Funnily enough, its #1 performance problem was caused by the same naive string concatenation algorithm you’re demonstrating here. I changed the way in which it assembled long strings as it ran, and it flew.)
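A minimal way to profile under different levels of load is to time the same piece of code at several input sizes and compare how the times grow. The sketch below uses hypothetical helpers (`timeAtSizes`, `naiveBuild`, `formatResults` are not from the original posts); it measures with `new Date().getTime()`, which works in both ExtendScript and Node.js.

```javascript
// Run fn(n) for each size and record the elapsed milliseconds.
function timeAtSizes(fn, sizes) {
    var results = [];
    for (var k = 0; k < sizes.length; k++) {
        var start = new Date().getTime();
        fn(sizes[k]);
        var elapsed = new Date().getTime() - start;
        results.push({ size: sizes[k], ms: elapsed });
    }
    return results;
}

// Example workload: the naive string-building loop discussed in this thread.
function naiveBuild(n) {
    var s = "";
    for (var i = 0; i < n; i++) {
        s += "a";
    }
    return s;
}

// One result per line, e.g. for alert() in ExtendScript or console.log() in Node.js.
function formatResults(results) {
    var lines = [];
    for (var k = 0; k < results.length; k++) {
        lines.push(results[k].size + " -> " + results[k].ms + "ms");
    }
    return lines.join("\n");
}
```

In ExtendScript, something like `alert(formatResults(timeAtSizes(naiveBuild, [1000, 10000, 100000])));` would show the growth pattern; swapping in your own function in place of `naiveBuild` shows which part of a real script scales badly.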

My advice: if you’re teaching yourself, start by going through a high school Computer Science textbook. Once you’ve grasped the basics, get yourself a copy of Steve McConnell’s Code Complete, which you can pick up and read individual chapters of if/as/when needed.

Oh, and remember to factor in all the extra time you’ve spent learning CS when assessing your final cost-vs-benefit ROI. ’Cos it’s a rabbit hole. :) Just like algorithms themselves, making code go fast tends to be slower, harder, and more complicated (and error-prone) than making code that goes slow but gets the job done. A good programmer doesn’t write code that is fast for fast’s sake, but code that is fast enough.


Thank you for your detailed answer. I don't think you got my point. Loops are loops and must be processed reliably and quickly. If the loop itself slows down execution, then optimizing the code inside the loop is of no use.

If I have to adjust 100 or more objects in Illustrator via Javascript and this takes minutes instead of seconds, then it's annoying.

As a paying customer, I can't accept that Adobe apps contain JavaScript interpreters that are, as you write, 20 years old. On the other hand, Adobe requires me to switch to newer OS versions and programs with the latest updates. Of course, I can and must compare the performance of current programs. The results of the Adobe apps are very poor. Therefore, in my eyes, Adobe must improve its JavaScript interpreters soon, if possible.

I also did not compare my scripts with Node.js, only with QuarkXPress 2020 and Apple Safari. And with these programs I get 100 to 200% more speed.

I want to write a JavaScript for InDesign that also checks longer texts for certain properties. If these checks take 10 min instead of 0.1 min because of the (old?) JavaScript interpreter, then the script is unusable and unacceptable. I can't console my client by telling them it's because of the (old?) JavaScript interpreter.


For the third time, the difference in performance has nothing to do with the loop. It has to do with the code that you’ve written within your loop, which is an infamous pathological algorithm that causes massive amounts of [redundant] low-level memory copying when run in a non-optimizing interpreter/compiler. The quickest way to improve performance is to replace that very inefficient algorithm with a more efficient one. Which an optimizing JS interpreter does for you automatically; otherwise you have to sort it yourself.
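One way to separate the cost of the loop from the cost of what runs inside it is to keep the OP's nested loop exactly as it is and change only the body. A sketch with hypothetical function names: `loopOnly` is a baseline with a trivial body, `buildByConcat` keeps the original concatenation, and `buildByJoin` collects the pieces in an array and joins once, as suggested earlier in the thread. The last two produce identical strings.

```javascript
// Baseline: the same nested loop with a trivial body, to measure the loop itself.
function loopOnly(n) {
    var count = 0;
    for (var i = 0; i < n; i++) {
        for (var j = 0; j < n; j++) {
            count++;
        }
    }
    return count;
}

// Same nested loop, original body: repeated string concatenation.
function buildByConcat(n) {
    var vText = "";
    for (var i = 0; i < n; i++) {
        for (var j = 0; j < n; j++) {
            vText = vText + "a";
        }
    }
    return vText;
}

// Same nested loop, different body: push into an array, join once at the end.
function buildByJoin(n) {
    var parts = [];
    for (var i = 0; i < n; i++) {
        for (var j = 0; j < n; j++) {
            parts.push("a");
        }
    }
    return parts.join("");
}
```

Wrapping each call in `new Date().getTime()` at the same n, as in the original script, shows how much time the loop costs and how much the body costs on a given engine; no timings are claimed here because they depend entirely on the interpreter.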

It’s clear you don’t know enough Computer Science to understand why your algorithm bogs down so badly (you’re a power user, not a career software developer with a university education in CS, so it’s entirely reasonable for you not to know this stuff), and the sample code you posted is a contrived example, not a real production script with representative data to test against, so it really isn’t possible for anyone here to advise you on appropriate code changes to make.

Yes, it’d be wonderful if Adobe had a modern JS engine in all their apps, and had kept it up to date over the last 20 years. They are slowly working to address that by introducing UXP (the successor to ExtendScript + CEP), first to XD and currently to PS. Don’t be surprised if AI gets UXP last though; and don’t be surprised if it takes several more years to complete the transition: what may seem simple and obvious to you as the user is no doubt heinously complicated and time-consuming to the software engineers who have to implement it. Remember too: Adobe puts its money in developing the popular features that will sell the most products to the most users. Yes, all professional artworkers really ought to learn some automation skills: it can pay terrific dividends by reducing/eliminating repetitive scut work in the long term. But if a majority of Adobe users don’t choose to learn/use automation then you can’t blame Adobe for pushing new automation features down the priority list. So if you don’t mind waiting a few more years, eventually AI will have a nice modern optimizing interpreter to run your amazingly inefficient JS code amazingly efficiently. But right now you’re just howling in the wind complaining about it.

For now, either 1. go learn yourself enough CS to profile and optimize your code for yourself, or 2. pay someone with more extensive programming experience to develop part or all of your automation for you, and let them worry about making it go fast enough to satisfy your customer’s needs (whether by employing more efficient algorithms in the JS script, or by using a lower-level language such as C that provides professional programmers with lower overheads and precise control over how memory and other resources are managed).


In short, avoid nested loops unless you have to. If you have to, there is nothing you can do.


It has nothing to do with nested loops. (If you know Big-O notation, you’ll know when nested loops are/aren’t a performance concern.)
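As an illustration of when nested loops do matter (a hypothetical task, not from the thread): checking a list of names for duplicates. Comparing every item against every other item with two nested loops grows as O(n^2); a single pass that remembers what it has already seen grows as O(n). A sketch assuming the items are strings (function names are hypothetical):

```javascript
// O(n^2): nested loops compare every pair of items.
function hasDuplicateNested(items) {
    for (var i = 0; i < items.length; i++) {
        for (var j = i + 1; j < items.length; j++) {
            if (items[i] === items[j]) return true;
        }
    }
    return false;
}

// O(n): one loop, recording each item seen so far in an object used as a lookup table.
// Keys are prefixed so names like "constructor" can't collide with Object.prototype members.
function hasDuplicateSinglePass(items) {
    var seen = {};
    for (var i = 0; i < items.length; i++) {
        var key = "k_" + items[i];
        if (seen[key] === true) return true;
        seen[key] = true;
    }
    return false;
}
```

Both return the same answer; the difference only shows up as the list grows. By the same reasoning, the OP's nested loop performs 250,000 iterations at cDauer = 500, and what makes it slow is the cost of each iteration's body, not the nesting.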

It has to do with OP’s pathological string-building algorithm, where the script starts with an empty string, appends a string to it, then appends another string, and repeats this process (e.g.) 1,000,000 times. OP appends one character each time using the “+” operator, so the first iteration has to copy a 0-character string plus a 1-character string into a newly created 1-character string, the second iteration has to recopy that new 1-character string plus another 1-character string into a newly created 2-character string, the third iteration that 2-character string plus a 1-character string into a new 3-character string, and so on, all the way until the 1,000,000th iteration, where it has to copy the previous 999,999-character string plus a 1-character string into a new one-million-character string. Which, if my math is correct, is a grand total of 500,000,500,000 characters copied.
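The arithmetic in the previous paragraph is the sum 1 + 2 + ... + n = n(n + 1)/2. A small sketch that checks it both with the closed-form formula and by adding up each iteration's copy directly (function names are hypothetical; the model counts only the characters written into each newly created string, as described above):

```javascript
// Closed form for the characters copied by n naive one-character appends.
function naiveCopiesFormula(n) {
    return n * (n + 1) / 2;
}

// The same total, added up iteration by iteration:
// iteration k copies the previous (k - 1)-character string plus 1 new character.
function naiveCopiesLoop(n) {
    var total = 0;
    for (var k = 1; k <= n; k++) {
        total += (k - 1) + 1;
    }
    return total;
}
```

Both give 500000500000 for n = 1000000 (and 55 for n = 10), i.e. roughly 500 billion characters copied to build a one-million-character string.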

And all except the very last one of those strings was only ever used once and immediately thrown away.

An optimizing interpreter/compiler (or an insightful programmer) observes that this is all massively wasteful, and knows it can eliminate most or all of that waste just by allocating a really large (e.g. 10-million-character) section of empty memory right at the start of the process, which it can then gradually fill up by adding to the end of it. That way, the only strings being copied are the strings being appended, which in OP’s artificial example was a single-character string each time, for a grand total of one million characters copied instead of roughly 500 billion.

p.s. OP might also like to add TheDailyWTF.com to their regular reading list, wherein they can laugh at all the so-called “professional” programmers who don’t understand Computer Science either. (e.g. Try searching it for “StringBuilder” for stories that are particularly relevant here, albeit in other languages as JS doesn’t have one of those.)