Adobe Community

Camera Raw 32 bit computation?

I have several questions below my observations here. Thank you!

I want to open an .ARW file (Sony A7R2) as a 32-bit image. I import the .ARW raw file into Photoshop through Adobe Bridge or Adobe Lightroom Classic, choose the "Open in Photoshop as a Smart Object" option, and have the export settings in Adobe Bridge or Adobe Lightroom Classic set to 16-bit (because there is currently no 32-bit option... yet?). The image title in Photoshop is then something like (DSC00001, RGB/16*), and the data bar at the bottom left of Photoshop shows a size reflecting a 16-bit rasterized image (241.3M).

However, when I immediately save the Raw "Smart Object Layer" (WITHOUT rasterizing - single layer) as a TIFF and then check its actual size on my computer, it is around 684.33 MB (uncompressed TIFF).

Also, if I import the .ARW file into Photoshop through Adobe Bridge or Lightroom Classic, choosing the "Open in Photoshop as a Smart Object" option, BUT immediately change the Photoshop working space to 32-bit (Image > Mode > 32-bit) and do not rasterize, the data bar at the bottom left of Photoshop immediately gives a reading that seems to reflect a 32-bit image. It DOUBLES from 241.3M (in 16-bit) to 482.7M (in 32-bit).
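The two status-bar readings line up with a plain uncompressed-size estimate. The following is a minimal sketch, assuming a full-resolution frame of 7952 x 5304 pixels for this camera, three RGB channels, and that Photoshop's "M" means 2**20 bytes; none of these figures are stated in the thread.

```python
# Estimate the uncompressed pixel-data size of a flattened RGB image.
# ASSUMPTIONS (not from the thread): 7952 x 5304 pixels, 3 channels,
# and that the "M" readout means 2**20 bytes.

WIDTH, HEIGHT, CHANNELS = 7952, 5304, 3

def document_size_mib(bits_per_channel: int) -> float:
    """Return the raw pixel-data size in MiB for the given channel depth."""
    bytes_per_pixel = CHANNELS * bits_per_channel // 8
    return WIDTH * HEIGHT * bytes_per_pixel / 2**20

size_16 = document_size_mib(16)
size_32 = document_size_mib(32)
print(f"16-bit: {size_16:.1f}M")
print(f"32-bit: {size_32:.1f}M")
```

Under these assumptions, the doubling reflects the size of the container (twice as many bytes per channel). By itself it says nothing about whether the values inside carry more tonal information.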

*Additionally:

- If I immediately SAVE the file as 32-bit TIFF (WITHOUT rasterizing - No Compression - 32-bit float option) its saved file size is 1023.41MB.

- If I immediately save the file as a 32 bit TIFF (Flattened/Rasterized) its saved file size is 941.84MB

- If I immediately save the file as a 32-bit PSB (WITHOUT rasterizing) its saved file size is 644.73MB.

- If I immediately save the file as a 32-bit PSB (Flattened/Rasterized) its saved file size is 563.16MB.
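One pattern in the four saved sizes above can be checked with simple subtraction. This is a small sketch that assumes the unrasterized PSB figure is meant in MB (like the other three), and the dictionary keys are names chosen here for illustration.

```python
# Saved sizes in MB as reported in the post above.
# ASSUMPTION: the unrasterized PSB figure is read as MB, not GB.
sizes_mb = {
    "tiff": {"smart_object": 1023.41, "flattened": 941.84},
    "psb": {"smart_object": 644.73, "flattened": 563.16},
}

def smart_object_overhead(fmt: str) -> float:
    """Extra MB stored when the Smart Object is kept instead of flattened."""
    entry = sizes_mb[fmt]
    return round(entry["smart_object"] - entry["flattened"], 2)

for fmt in sizes_mb:
    print(fmt, smart_object_overhead(fmt))
```

Both formats carry the same extra amount when the Smart Object is kept. That would be consistent with a fixed payload, such as an embedded copy of the raw file, travelling inside the Smart Object, although the numbers alone do not prove what the payload is.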

The above data amounts seem to at least partially support the following idea, which leads to the questions below:

When a raw image is adjusted in Adobe Bridge or Adobe Lightroom Classic (Camera Raw Parametric Edits, with the virtual results being represented as a virtual Preview Image), then the file is imported into Photoshop as a “Smart Object”, then, the ONLY further adjustments done to the file are done by clicking into the “Smart Object” (with actual Camera Raw opening - further adjustments being made there - and then in Camera Raw, “OK” is clicked on and as the preview updates it says “preparing smart object” and then the new adjustments show on the virtual preview image...

*** QUESTIONS:

- Are these adjustments lossless?

- Is the computation being done in Camera Raw?

- Is the computation being done in Camera Raw 32-bit float point?

- Is it done, at a higher bit depth, with more integrity based on, or with access to the original raw data, as opposed to (or different than) typical rasterized 16-bit pixel adjustments?

- If an .ARW raw file is brought into Photoshop as a “Smart Object” layer, then Photoshop’s working space is immediately changed (Step 2) to the 32-bit working space, and then ALL of the additional adjustments to the image are done only to the original raw “Smart Object” layer, and some additional copies of the raw “Smart Object” layer (by duplicating - “New Smart Object Via a Copy”) and then these layers are blended together using Photoshop masks, created while in the 32-bit space (32-bit masks?) and the supposedly 32-bit brush (black/white - concealing/revealing)…

- Do we then essentially have what could be called or considered lossless 32-bit Raw Layers in Photoshop?

- If the parametric editing/computation done in Camera Raw is NOT higher bit depth, higher integrity, less degrading, lossless… Is it basically the SAME thing as adjustments done to a rasterized file, via “adjustment layers” and “smart object” layers applied to a 16-bit image, when flattened?

- If only parametric editing is done via Camera Raw, and raw “Smart Object Layers” with no adjustments made to rasterized pixel data… (especially considering working in Photoshop’s 32-bit space) once the image is eventually flattened, will we now have a substantially more robust data file? Especially if a lot of adjustments have been made?

- Lastly, if a raw image file follows the above workflow, in the 32-bit working space, with a variety of only raw “smart object layers” (new smart object via a copy) and only raw smart object parametric editing is used by clicking into actual Camera Raw for each smart object layer (except for the masking). Then the image is finally rasterized in Photoshop’s 32-bit space. Then maybe just a few minor final adjustments are made to the 32-bit rasterized file, via Levels, or Curves, or Hue/Saturation… and lastly the file is finally converted into the 16-bit space (usually through the HDR dialogue window - choosing the “Exposure Gamma” option) do we now have a potentially unusually ROBUST 16-bit data file, potentially lacking some, a lot, or nearly all of the typical degrading we can see when a person uses a lot of Photoshop 16-bit adjustments?

*Please keep in mind I’m talking about making HUGE prints here and files with LOTS of adjustments.

Very best regards! Thank you again!

Thank you very much for your response and your patience with me. I am sorry if I am missing something here.

I have read that when using the Merge To HDR option, LR/CR is using 32-bit float point math to do the computation, or somewhere put: "a 32-bit float point engine." I have also been told that in order to process HDR, LR/CR "needs to have 32-bit float point."

Question 1:

Are you saying that when LR/CR is processing a single Raw file (DNG or Proprietary) but not a "Merge To HDR" Raw file, then it does not use 32-bit float point?

Secondly, some Raw Converters (such as RawTherapee...) claim their Raw converter is a "32-bit (floating point) processing engine."

Question 2:

Is LR/CR not ("a 32-bit floating point engine") with, maybe, the exception of HDR calculations?

Lastly, I'll double check later but I thought I had read somewhere in the Adobe DNG Specification PDF (2021) that 32-bit floating point bit depths were supported.

Thank you (or anyone else) for your help and any clarification.

The Sony A7 R2 ARW files are 14 bit depth so there is no benefit to converting them to 32 bit. LrC and ACR Merge to HDR create 32 bit floating point DNG files. They do benefit from the higher bit depth since the dynamic range has been increased by using bracketed image files.

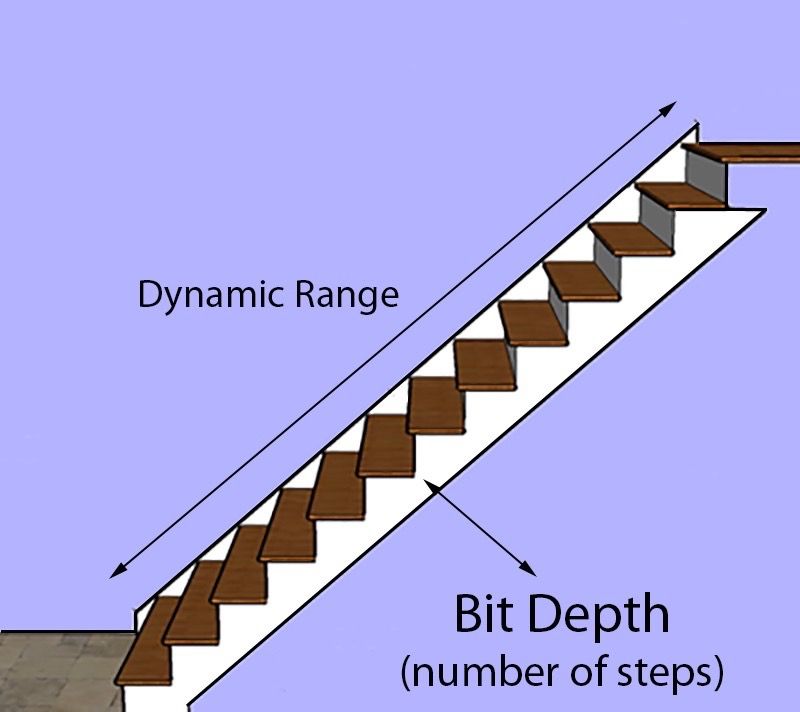

Bit depth and DR are entirely separate attributes. More Bit depth doesn't equate to more DR.

Yes, there needs to be a certain bit depth to support a certain DR, but the two are not the same thing.

The staircase analogy works: DR is the length of the staircase while the bit depth is the number of steps; you can have fewer or more steps, but the length is what it is.
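The staircase analogy can be put into numbers. A minimal sketch, assuming a normalized 0.0 to 1.0 range and plain integer encoding (the function name and the range are illustrative choices, not anything from Adobe):

```python
# Staircase analogy: the encoded range (staircase length) is fixed,
# while the bit depth only sets how many steps divide it.
# ASSUMPTION: a normalized 0.0-1.0 range and plain integer encoding.

def staircase(bits: int, length: float = 1.0) -> tuple[int, float]:
    """Return (number of levels, size of one step) for an integer encoding."""
    levels = 2 ** bits
    step = length / (levels - 1)
    return levels, step

for bits in (8, 14, 16):
    levels, step = staircase(bits)
    print(f"{bits:>2}-bit: {levels:>6} levels, step = {step:.8f}, range = 0.0 to 1.0")
```

The range printed on every row is the same; only the number and size of the steps change with the bit depth.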

Thank you very much for taking time to respond here. I might not be making myself clear enough, but I understand DR vs bit depth. This is extremely basic. Just for background purposes I am a full time veteran photo professional and post-processing educator. Just trying to dig deeper into the computation.

Thank you very much for taking time to respond here. I might not be making myself clear enough, but I understand DR vs bit depth. This is extremely basic. Just for background purposes I am a full time veteran photo professional and post-processing educator. Just trying to dig deeper into the computation.

By @Camera Raw Fan

My reply was based on this comment:

"They do benefit from the higher bit depth since the dynamic range has been increased by using bracketed image files".

Thank you very much Todd for your response. Although I showed an example of some Sony files, my questions do not relate to Sony cameras as I work on files from all digital camera systems.

Would you be willing to take a stab at my response question to the digitaldog?

If so, thank you.

Question 1:

Are you saying that when LR/CR is processing a single Raw file (DNG or Proprietary) but not a "Merge To HDR" Raw file, then it does not use 32-bit float point?

Secondly, some Raw Converters (such as RawTherapee...) claim their Raw converter is a "32-bit (floating point) processing engine."

Question 2:

Is LR/CR not ("a 32-bit floating point engine") with, maybe, the exception of HDR calculations?

Lastly, I'll double check later but I thought I had read somewhere in the Adobe DNG Specification PDF (2021) that 32-bit floating point bit depths were supported.

Thank you (or anyone else) for your help and any clarification.

LR can store the "history" of the image adjustment settings in 32-bit TIFF or DNG, but as far as image processing is concerned, the output of LR/ACR will always be 8-bit or 16-bit. That is, it is LR's/ACR’s job to tone-map or render the input image to an 8-bit or 16-bit output. The only reason to output a 32-bit floating point image would be to "pass thru" scene-referred data, which is not something that LR/ACR does.

If you want to have the flexibility to use either LR/ACR or Photoshop to process a 32-bit image, you should use TIFF as your file format.

** http://www.color.org/ICC_white_paper_20_Digital_photography_color_management_basics.pdf

Here's what I'm getting at. The history or computation of the initial Raw data is in 32-bit, correct? Once it is rendered, or rasterized, it has to be in 8 or 16 bit. Would you say that is correct? A programmer friend of mine says that anyone who knows anything about how data works knows that raw data computation (history) is 32-bit. But with Adobe, it must be output to 16-bit.

Thoughts?

"Thank you very much Todd for your response. Although I showed an example of some Sony files, my questions do not relate to Sony cameras as I work on files from all digital camera systems."

All digital camera sensors that I am aware of are at best 16-bit depth, with most lower at 14 or 12 bits. So again, there is nothing to be gained by converting the file to 32-bit, with the exception of Merge to HDR, which creates a 32-bit floating point DNG file. The 32-bit file can be processed non-destructively in LrC and ACR.
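The point about single-exposure files can be illustrated with a short sketch. It assumes integer 14-bit sensor codes and IEEE 754 single precision as the "32-bit" container; it only looks at storage and does not model how any raw converter computes.

```python
import struct

def to_float32(value: int) -> float:
    """Round-trip an integer through IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", float(value)))[0]

# ASSUMPTION: a 14-bit sensor that produces integer codes 0..16383.
SENSOR_BITS = 14
codes = range(2 ** SENSOR_BITS)
as_float = [to_float32(c) for c in codes]

# Every code survives the conversion unchanged...
exact = all(f == c for f, c in zip(as_float, codes))
# ...and the wider container holds no more distinct values than before.
distinct = len(set(as_float))
print(exact, distinct)
```

Moving the captured values into a 32-bit float container keeps them intact but does not create levels that were never recorded.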

The DNG 32 bit floating point file can only be edited in LR or ACR with no capability to export as a 32 bit HDR file. If you need to edit the file in PS you have two options:

1) Apply your LR Develop module edits to the HDR DNG file and then use Edit in PS to open it as a 16 bit TIFF in PS.

2) Use LR Edit In> Merge to HDR PRO in Photoshop to create a 32 bit HDR TIFF.

It's kind of important to separate the bit depth of an image, a fixed attribute of capture, and the bits used for processing that fixed attribute.
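The distinction between stored bit depth and processing precision can be shown with a toy example. This is a generic illustration under stated assumptions, not a description of what LR/ACR or Photoshop actually do: the two adjustments (two stops down, then two stops up) and all function names are hypothetical.

```python
MAX_16 = 65535

def quantize(value: float) -> int:
    """Round to the nearest 16-bit integer code and clamp to the valid range."""
    return max(0, min(MAX_16, int(value + 0.5)))

def integer_pipeline(code: int) -> int:
    """Round back to 16-bit integers after every adjustment."""
    darker = quantize(code * 0.25)   # pull exposure down two stops
    return quantize(darker * 4.0)    # push it back up two stops

def float_pipeline(code: int) -> int:
    """Keep intermediate results in floating point; quantize once at the end."""
    return quantize(code * 0.25 * 4.0)

# Feed every possible 16-bit code through both pipelines.
ramp = range(MAX_16 + 1)
int_levels = len({integer_pipeline(c) for c in ramp})
float_levels = len({float_pipeline(c) for c in ramp})
print(int_levels, float_levels)
```

Both pipelines start from and end in 16-bit codes, yet the one that rounds after every step ends up with fewer distinct output levels. The source bit depth is the same in both cases; only the precision of the intermediate math differs.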

That's exactly the point I'm trying to get at. I would appreciate a comment on my last reply to you if you have the time. Thank you very much.

Simple: it's doing this correctly. Go on and process your images.

Very, very few actual output devices output 16-bit.

Thank you very much for your feedback here. It has been helpful!

If you will bear with me, I'd like to carefully reword this one more time just to make it as clear as I can.

What my programmer friend told me (at least what I believe I was understanding from what he was telling me) is that Raw Parametric computation (the virtual adjustments) - setting up the parameters in CR for the eventual tone mapped pixel values - or maybe some other ways of putting it: setting up the "history/instructions" or the computation for the sensor data to be eventually tone mapped - or the computation of the demosaicing and eventual set up of tone mapped pixel values (what they will eventually be tone mapped to) is done in/with 32-bit computation (bits used for Raw processing, up to the initial tone mapped file).

Of course I am not yet talking about the bit depth of the tone mapped image (the "fixed attribute of capture"). The pixel image does not exist yet (although we do get a "virtual" pixel preview).

Furthermore, the preview we see in CR (we have to see something or we could not process an image effectively) is not the same/real (fixed attribute) image that CR can/will eventually produce, which we end up saving. The preview image we see as we are making Raw adjustments in LR/CR is a very good "virtual" pixel representation of what the data CR is computing will look like. Side note (not to get distracted from the main point): I have read that the preview image LR/CR uses to show us feedback of our Raw adjusting is a TIFF (temporary). But this is not what I am trying to clarify.

The "real image" will eventually exist once the image is rasterized (demosaiced, parameter adjusted/rendering instructions, tone mapped and turned into pixels) into an 8-bit or 16-bit pixel output (the "fixed attribute of the capture").

The developed Raw "history" of the image adjustment settings, or what might be described as the initial computation taking place to turn the initial camera sensor data into a demosaiced, tone mapped 8-bit or 16-bit rasterized file, is done in 32-bit computation.

Of course the rasterized output file is 8 bit or 16 bit.

And as you previously wrote, we "can store the 'history' of the image adjustment settings in 32-bit TIFF or DNG."

So, when we save a Raw Smart Object (not rasterized or flattened yet) as a TIFF, that TIFF still holds the initial Raw data, 32-bit computation "history/rendering instructions" (and the Raw data parameters or "instructions" can be readjusted again at a later time).

In Photoshop, if we double click on a Raw Smart Object image thumbnail (in the Layers Palette) we still have access to continuing Raw Parameter Editing (Camera Raw).

If/when the image gets rasterized or flattened, it then becomes an 8-bit or 16-bit "fixed attribute of capture" depending on our choice of working space option/settings, and/or our saving options.

If we process (make adjustments) in LR/CR, to a proprietary Raw file or a Raw DNG, but simply click on "done", we are simply recomputing/changing and re-saving an updated "history/set of rendering instructions" (32-bit computation). Or put another way, we are simply setting up new parameters (new history/rendering instructions) for the eventual output tone mapped pixel values. The proprietary Raw file (or a Raw DNG) holds that re-computed data.

CR is used to demosaic the initial sensor data, set up the rendering instructions, and when the time is right, tone map the data to a pixel file ("fixed attribute of capture"). These, generally speaking, lossless edits (although nothing is literally totally lossless) are done in high bit.

Lastly, with the exception of Adobe Merge to HDR Pro, the computation for all rasterized pixel data adjustments is done in a lower (not 32) bit depth.

AND even if a person brings a Raw image into Photoshop as a Raw Smart Object Layer, then immediately changes Photoshop's working space to 32-bit, then rasterizes (flattens) the file to a 32-bit file... no adjustment done to the rasterized image is 32-bit computation, and the image is not 32-bit (not over 65,536 levels)?

Thank you for your help.

Parametric edits and history are just text, such as XMP. There's nothing here to do with bit depth or otherwise.

ALL making a SO does is embed a copy of the raw (and parametric edits) into the Photoshop stack and allows you to then re-edit the raw using ACR there. That's really it. If you render the raw/SO into that stack and save it as a TIFF, it's all 'burned' to pixels in the layers and of course, LR can't do anything with those layers that it doesn't support. You're now editing what amounts to a 'flattened' TIFF if editing inside LR. The source bit depth is what it is.

All parametric edits are truly non-destructive; the raw is read-only in Adobe raw processing.
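The idea of parametric, non-destructive editing can be sketched in a few lines. This is a toy model only: the `exposure_stops` setting and the `render` function are hypothetical stand-ins, and neither the real XMP schema nor Adobe's rendering is reproduced here.

```python
# Stand-in for raw sensor data; a tuple cannot be modified in place.
RAW_PIXELS = (0.10, 0.25, 0.50)

def render(raw: tuple, settings: dict) -> list:
    """Apply the stored instructions to a copy of the data at render time."""
    gain = 2.0 ** settings.get("exposure_stops", 0.0)
    return [min(1.0, value * gain) for value in raw]

# Hypothetical settings record; the real XMP schema is not reproduced here.
edits = {"exposure_stops": 1.0}
first_render = render(RAW_PIXELS, edits)

edits["exposure_stops"] = 0.0          # re-editing only changes the text record
second_render = render(RAW_PIXELS, edits)
print(first_render, second_render)
```

The settings record is only a handful of named values, and the source data is never written to. Pixels exist only as the result of a render, which is why the edit can be revised or undone at any time.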

Anything else would require your engineer friend to discuss with an Adobe engineer outside an NDA which is unlikely.

Thank you. I really appreciate your noted patience with me! I'm an Adobe advocate and educator and my take home so far is that:

The beginning pipeline computational bit depth of Adobe taking the initial raw data, demosaicing it, computing/creating text instructions for the creation of the future rasterized pixel values based on the specific profile used, as well as the variety of raw edits chosen (when the image is adjusted in LR Develop/Camera Raw), and then finally tone mapping it to create the initial 8-bit or 16-bit rasterized file, although lossless, might not be (or is not) done using any 32-bit computation.

Also, what exactly happens when a raw file is brought into Photoshop (not rasterized) as a Raw Smart Object Layer and Photoshop's working space is then immediately changed to the 32-bit space (and rasterization is not chosen during the working space change)? Although the data amount displayed in Photoshop (below on the left) clearly DOUBLES (from what it says in the 16-bit workspace), if the raw file next becomes rasterized (flattened) in the 32-bit working space (it can be saved as a 32-bit TIFF or PSB...) it is either NOT really 32-bit, and has no increase in levels/gradations/data or editing headroom...

OR, at this point it may be, or literally IS, STILL 16-bit (no more levels than 65,536) BUT this is PROPRIETARY information not available to the public in any open disclosure documents such as the DNG SPECIFICATION PDF on the Adobe website.

Lastly, are the masks, brushes or any alpha channels created in this 32-bit space (granted no selections were used to create them...) also not 32-bit, or is that also withheld proprietary information not openly disclosed?