- Re: Lightroom denoise remarkably slow

I posted this on a YouTube message board and apparently I'm not alone, so I'm posting here.

I have a relatively fast Win 11 desktop with 32GB of RAM and plenty of free disk space on both my cache and program SSD drives. Lightroom's new Denoise takes 360 seconds (6 minutes) to process a single 50MB file from my Sony a1. Topaz DeNoise AI takes 80 seconds for the same file. Another user with a similar Win 11, SSD setup with 64GB RAM reported 5 minutes to process a 52MB Pentax file.

The YouTube creator 'showed' a processing time of 7 seconds. While it's unclear whether he used a technical trick to speed up that view in his video, he did suggest reporting our long processing times to Adobe/Adobe Community to see if something other than Lightroom is causing them.

3 Correct answers

My processing times for 45 MP raw files (Nikon Z7) are between 15 and 20 seconds. Very consistent, and much faster for smaller raw files. The YouTube guy absolutely didn't cheat. This is just how fast this technology has evolved over the last few years, and for any Mac since the M1 machines (and any recent Windows machine with a good video card) this is what you should expect. With older Intel Macs and mobile GPUs, expect processing times around a minute. If you are seeing 10 minutes or more for reasonably r

...It's not lack of ram or hard drive space and my graphics card was updated so I'm stumped.

By @frankt98015343

My 16MP images are running about 9 seconds on one machine and 3 minutes on an old one. What's your graphics card? That's the main difference in performance.

Victoria - The Lightroom Queen - Author of the Lightroom Missing FAQ & Edit on the Go books.

I have an NVIDIA GeForce GTX 1650 Super with 4GB of GDDR6 RAM.

I have an NVIDIA GeForce GTX 1650 Super with 4GB of GDDR6 RAM.

By @hofendisasd

Underpowered for this functionality. My 2022 MacBook Pro with 64GB of unified memory processes a Canon R6 Mark II raw in about 12 seconds.

What camera are you using that only has 16MP files? Are they RAW or JPG?

It was an older camera (Olympus E-M10) that I was using to test some extraordinarily noisy photos, and yes, they were raw images.

You might find Ian's comments about your GPU useful here: "Re: Denoise AI in 12.3"

Victoria - The Lightroom Queen - Author of the Lightroom Missing FAQ & Edit on the Go books.

So you have a 3 year old GPU with 4GB of memory. Eric Chan says you probably ought to use 8GB for Denoise.

THANK YOU, ALL! This was helpful in taking another look at this. In Preferences, I changed the processor from AUTO to NVIDIA (and not CPU). My processing time for another 50MB file dropped to 42 seconds, which is quite acceptable!

So you'll be correcting your YouTube comments?

No, actually I've been trying to amend my "correct answer" comment. It turns out (user error) that I changed the processor in Topaz, not Lightroom. LR 'was' already set correctly to GPU. I changed Topaz DeNoise AI from Auto to GPU and its processing time improved from 80 seconds to 42. I just tested LR again and it, again, processed at SIX minutes per file (with all other programs closed except LR). So the problem remains.

One final PS. While a faster graphics card will always make a difference, Topaz is now taking 42 seconds to deNoise an image, while LR is taking 360 seconds. That speaks to a software issue, not hardware. My last batch of 100 ISO 6400 images, from a dance rehearsal earlier this week, took 140 minutes in Topaz and would now take 70 since I corrected the GPU settings. The same processing with LR would take 10 hours (if you can batch process, which I don't believe you can, unless you use the manual denoise).
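
For anyone checking the batch arithmetic, it is just per-image time multiplied by image count. A minimal Python sketch using only the figures quoted in this post (80, 42, and 360 seconds per image, 100 images); the function name `batch_minutes` is made up for illustration, and it assumes images are processed one after another at a constant rate:

```python
def batch_minutes(images: int, seconds_per_image: float) -> float:
    """Total time in minutes to process a batch sequentially."""
    return images * seconds_per_image / 60


# Per-image times as reported in the post above.
reported = [
    ("Topaz, Auto setting", 80),
    ("Topaz, GPU setting", 42),
    ("Lightroom Denoise", 360),
]

for label, seconds in reported:
    minutes = batch_minutes(100, seconds)
    print(f"{label}: {minutes:.0f} min ({minutes / 60:.1f} h)")
```

The 80-second figure works out to about 133 minutes, roughly in line with the 140 minutes reported, and the 360-second figure gives the 10 hours quoted for Lightroom.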

One final PS. While a faster graphics card will always make a difference, Topaz is now taking 42 seconds to deNoise an image, while LR is taking 360 seconds. That speaks to a software issue, not hardware.

By @hofendisasd

So you got the source code to both products to make such a statement; interesting analysis.

Yes, a faster graphics card will always make a difference.

I have a similar Windows 11 setup with an i7, 64 GB RAM, SSD drives and an NVIDIA GeForce 1650 with 4 GB RAM. I am having about the same results with the same settings. I also get screen flicker when the LRC Denoise is processing. The estimated time shows 3 minutes, but it actually takes around 5 minutes to process a 50 MB DNG file. It would be nice if someone from Adobe could specify what we need in a graphics card, so that we don't go out and buy an 8 GB graphics card only to find out that there is another issue behind this problem. Currently the Adobe recommendation is 4 GB. If that is not correct, they should update it.

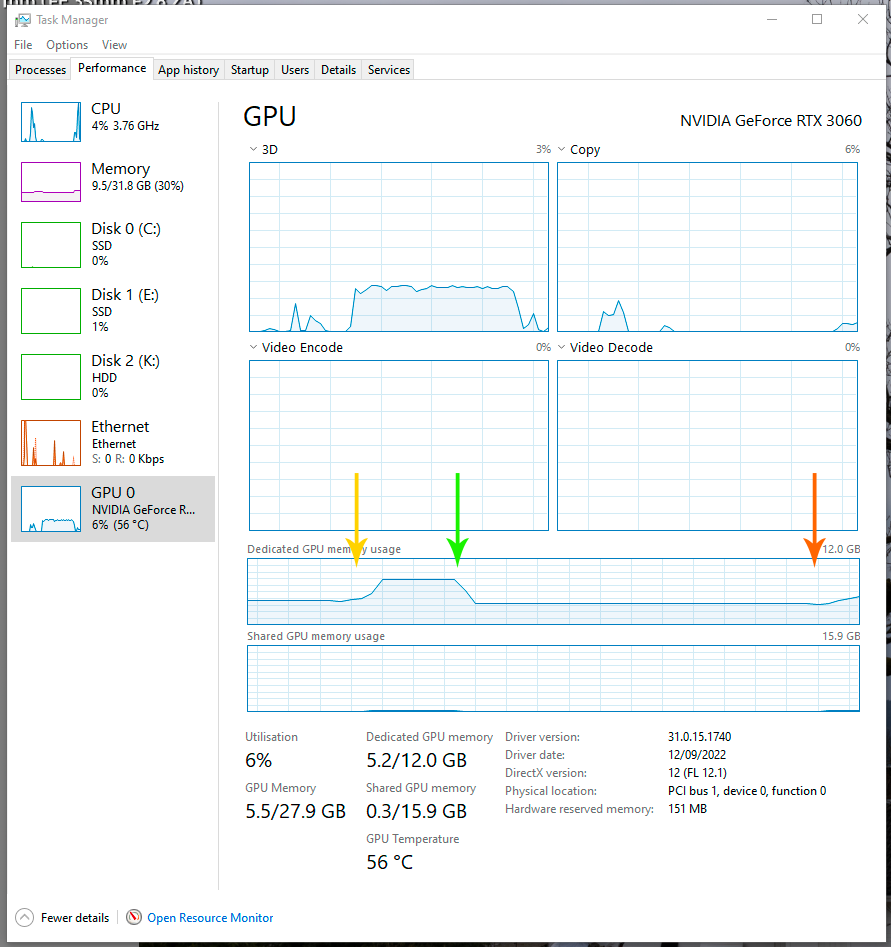

Here's an interesting graph.

Sony a7R V at 60 MP, moderately noisy at ISO 1250. The GPU is an RTX 3060 with 12 GB VRAM.

Yellow - Enhance data starts loading, then sits idle until

Green - start Denoise

Red - finish Denoise

Start to stop Denoise timed in at 33 seconds.

Not sure how to interpret this, but it doesn't seem to draw too heavily on GPU resources. Note memory usage goes down.

EDIT - another one looking at CPU just in case. Brief spike as Enhance loads, then - nothing. Very brief spike just as it finishes.

Yeah, I just ran LRC DeNoise with the Task Manager open and also saw the memory usage decrease slightly to a little less than 3 GB. At the same time the GPU video encode was at about its maximum.
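
For those on NVIDIA cards who want numbers rather than Task Manager graphs, one option is to poll `nvidia-smi` while Denoise runs. This is only a sketch under assumptions: it assumes `nvidia-smi` is on the PATH, that the driver reports numeric values for both fields (some setups report `[N/A]`, which this does not handle), and that the first GPU listed is the one Lightroom uses. The helper names `parse_sample` and `log_gpu` are made up for illustration.

```python
import shutil
import subprocess
import time

# Ask nvidia-smi for GPU utilization (%) and used memory (MiB) as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=utilization.gpu,memory.used",
    "--format=csv,noheader,nounits",
]


def parse_sample(line: str) -> tuple[int, int]:
    """Parse one 'utilization, memory' CSV line into (percent, MiB)."""
    util, mem = (field.strip() for field in line.split(","))
    return int(util), int(mem)


def log_gpu(samples: int = 5, interval: float = 1.0) -> None:
    """Print a few utilization/memory readings, one per interval."""
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; nothing to log")
        return
    for _ in range(samples):
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        util, mem = parse_sample(out.splitlines()[0])  # first GPU only
        print(f"GPU {util}% busy, {mem} MiB in use")
        time.sleep(interval)


if __name__ == "__main__":
    log_gpu()
```

Start it just before clicking Enhance and compare the readings during the run with the ones at idle.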

I noticed the same thing. I have a very old computer but it's been upgraded a lot. A GPU is designed to do many different calculations at the same time. It feels like Adobe is only using a small part of the GPU. They need to find a way to break down their processing into multiple threads. I'm sure it will come. This is a very new feature after all.

It's your GPU that determines how fast Denoise will be.

"Remarkably slow" is a reasonable result if you have a low-powered GPU. So reporting it to Adobe will just result in having Adobe point to your GPU. AI Denoise is an extremely computationally intensive process, more than any other process in LrC.

My LRX Denoise is taking 12 minutes to process. I guess it's my graphics card, but should it be THAT slow? Here's what I have:

NVIDIA GeForce GTX 1050 2GB Ram

Processor Intel(R) Core(TM) i7-7700 CPU @ 3.60GHz, 3601 Mhz, 4 Core(s), 8 Logical Processor(s)

Windows 10 64 32GB RAM

So, if it's my card... what do you recommend I replace it with?? Thanks

Michael

Typo.. I meant LRC

Before you start spending many hundreds of dollars to replace your graphics card, recognize that Topaz DeNoise has been taking about one-ninth of the time that Lightroom takes to do the same job on the same images. For me, that is 40 seconds versus 360 seconds. So it is very possible this is a software problem, not a hardware issue with our graphics cards. Wait a while and see what happens before you pull out your credit card.

I think the Ian Lyons post that Victoria linked to may hold part of the answer to this. Hardware acceleration specifically for machine learning/AI might be a missing link that helps explain why some computers can process AI features so much faster than others, and might help explain why older computers take much more time.

On my mid-range but recent laptop, I have so far never seen a Denoise time longer than a minute. I just did some tests and below is what I got. My hardware list is at the end…really nothing special from a CPU/GPU point of view, so I strongly suspect it’s the Neural Engine machine learning hardware acceleration that cuts the times, and that machines with older AI acceleration hardware, or none at all, will have to take longer. The Neural Engine is only in Apple Silicon Mac processors, which might help explain why owners of older Intel-based Macs are similarly reporting much longer AI Denoise times, in the minutes. On the Windows side, I wonder if times are consistently faster using an NVIDIA GPU new enough to have their more recent AI acceleration hardware. (I notice that D Fosse above only took 33 seconds using an RTX 3060 with 12GB VRAM. That GPU model was released the same year as my laptop.)

In my tests, while the GPU is very busy, the CPU is very quiet during AI Denoise, with other background processes using more CPU than Lightroom Classic. This looks very similar to video editing, where newer computers render video very quickly because they have both a powerful GPU and hardware acceleration for popular video codecs. An older computer lacks both, forcing rendering to the CPU, which takes many times longer, and costs a lot more power and heat.

Of course, this only looks at performance within Adobe AI Denoise alone. It does not explain why AI Denoise might be slower than Topaz or others on the same images. I expect Adobe will optimize competitive performance further after this first release.

- - -

Test results

24 megapixels (Sony ARW raw)

ISO 3200

Default Develop state, no edits

AI Denoise set to 40

Estimated time: 40 seconds

Actual time: 36 seconds

16 Megapixels (Panasonic RW2 raw)

ISO 800

Many edits and masks

AI Denoise 25

Estimated time: 30 seconds

Actual time: 28 seconds

16 Megapixels (Panasonic RW2 raw)

ISO 800

Reset to default Develop settings

AI Denoise 25

Estimated time: 30 seconds

Actual time: 27 seconds

Equipment:

MacBook Pro M1 Pro laptop (1.5 years old), 8 CPU cores and 14 GPU cores (yes, it’s the base model), with 32GB unified memory.

(Unified memory means the GPU integrated with the Apple Silicon SoC can use any amount of unused system memory.)

Lightroom Classic uses about 5.5GB memory through most of AI Denoise processing, but that more than doubles to 12–13GB near the end of AI Denoise. That still may have left large amounts of system memory available to the graphics/AI acceleration hardware.

No special preparation: 6 days uptime, lots of apps open behind Lightroom Classic.
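
To compare timings across different sensor sizes, one rough approach is to normalize them to megapixels per second. A minimal sketch, assuming Denoise time scales roughly linearly with pixel count (nothing in this thread confirms that, and ISO and noise level differ between the samples). The figures are the ones posted in this thread; `megapixels_per_second` is just an illustrative helper name.

```python
def megapixels_per_second(megapixels: float, seconds: float) -> float:
    """Rough Denoise throughput for one image."""
    return megapixels / seconds


# (setup, megapixels, seconds) as reported by posters in this thread.
reports = [
    ("RTX 3060 12GB, Sony a7R V", 60, 33),
    ("M1 Pro, Sony ARW", 24, 36),
    ("M1 Pro, Panasonic RW2 (edited)", 16, 28),
    ("M1 Pro, Panasonic RW2 (reset)", 16, 27),
]

for setup, megapixels, seconds in reports:
    rate = megapixels_per_second(megapixels, seconds)
    print(f"{setup}: {rate:.2f} MP/s")
```

Treat the results as a ballpark only, since each number comes from a single run on a different image.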

Another factor that should not be forgotten is that whatever Adobe did, it is leading to FAR better quality than Denoise AI/Photo AI give. Far fewer weird artefacts, no hot pixels that get amplified, no weird grid artefacts, etc. I am frankly blown away by the quality of these denoise results, and it is completely reviving old images that I thought were not really scalable to the sizes I like to print. I will happily give up a bit of speed for this. You do need a very beefy GPU or a recent Apple Silicon Mac to really use this comfortably, though.

Well, "far" better is somewhat subjective. I'm certainly not finding that using the deNoise RAW model. A "bit" of speed is somewhat less subjective, LR taking 6 minutes vs 40 seconds for Topaz. And if you happen to need batch processing as I need for my clients' multiple images, well, LR is simply not an option. The 100 4000-6400 ISO images I provided to my clients this week would have taken over 10 hours for LR (and me having to stop and run each one individually, so who knows how long it really would have taken) vs me batching them in Topaz and coming back after dinner to find them all finished.
