GPU notes for Lightroom CC (2015)

Hi everyone,

I wanted to share some additional information regarding GPU support in Lr CC.

Lr can now use graphics processors (GPUs) to accelerate interactive image editing in Develop. A big reason that we started here is the recent development and increased availability of high-res displays, such as 4K and 5K monitors. To give you some numbers: a standard HD screen is 2 megapixels (MP), a 15" MacBook Pro with Retina display is 5 MP, a 4K display is 8 MP, and a 5K display is a whopping 15 MP. This means that on a 4K display we need to render and display 4 times as many pixels as on a standard HD display. Using the GPU can provide a significant speedup (10x or more) on high-res displays. The bigger the screen, the bigger the win.
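The megapixel figures above follow directly from panel resolution. The sketch below assumes the common native resolution for each class of display (1920x1080 for HD, 2880x1800 for the 15" Retina MacBook Pro, 3840x2160 for 4K UHD, 5120x2880 for 5K); the post itself gives only the rounded counts.

```python
# Assumed native resolutions (width, height); the post quotes only rounded MP figures.
DISPLAYS = {
    "HD (1080p)": (1920, 1080),
    '15" MacBook Pro Retina': (2880, 1800),
    "4K UHD": (3840, 2160),
    "5K": (5120, 2880),
}

def megapixels(width: int, height: int) -> float:
    """Pixel count in millions (MP)."""
    return width * height / 1_000_000

if __name__ == "__main__":
    hd = megapixels(*DISPLAYS["HD (1080p)"])
    for name, (w, h) in DISPLAYS.items():
        mp = megapixels(w, h)
        # Every one of these pixels has to be rendered on each redraw.
        print(f"{name:24s} {mp:5.1f} MP  ({mp / hd:.1f}x HD)")
```

Running it prints roughly 2.1, 5.2, 8.3 and 14.7 MP, with 4K at exactly 4x the pixel count of 1080p.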

For example, on my test system with a 4K display, adjusting the White Balance and Exposure sliders in Lightroom 5.7 (without GPU support) runs at about 5 frames per second -- manageable, but choppy and hard to control. The same sliders in Lightroom 6.0 now run smoothly at 60 FPS.
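Converting the frame rates into a per-frame time budget shows what the jump means: each slider update has to be computed and drawn within that time. Only the 5 FPS and 60 FPS figures come from the post.

```python
def frame_time_ms(fps: float) -> float:
    """Time available to compute and draw one frame, in milliseconds."""
    return 1000.0 / fps

old_ms = frame_time_ms(5)    # Lightroom 5.7 figure from the post: 200 ms per update
new_ms = frame_time_ms(60)   # Lightroom 6.0 figure from the post: ~16.7 ms per update
print(f"{old_ms:.1f} ms -> {new_ms:.1f} ms per frame ({old_ms / new_ms:.0f}x faster)")
```

This prints `200.0 ms -> 16.7 ms per frame (12x faster)`, in line with the "10x or more" mentioned above.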

So why doesn't everything feel faster?

Well, GPUs can be enormously helpful in speeding up many tasks. But they're complex and involve some tradeoffs, which I'd like to take a moment to explain.

First, rewriting software to take full advantage of GPUs is a lot of work and takes time, especially for software like Lightroom, which offers a rich feature set developed over many years and release versions. So the first tradeoff is that, for this particular version of Lightroom, we weren't able to use the GPU to speed up everything. Given our limited time, we needed to pick and choose specific areas of Lightroom to optimize. The area I started with was interactive image editing in Develop, and even there I haven't managed to speed up everything yet (more on this later).

Second, GPUs are marvelous at high-speed computation, but there's some overhead. For example, it takes time to get data from the main processor (CPU) over to the GPU. In the case of high-res images and big screens, that can take a LOT of time. This means that some operations may actually take longer when using the GPU, such as the time to load the full-resolution image, and the time to switch from one image to another.
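The tradeoff above can be written as a simple cost model: the GPU only wins when its compute savings outweigh the time spent moving data across the bus. The sketch uses made-up numbers (an assumed 4 GB/s effective bus throughput, a 36 MP image held as four 32-bit float channels, and invented compute times) chosen only to show the two cases; they are not measurements of Lightroom or Camera Raw.

```python
from dataclasses import dataclass

@dataclass
class Step:
    upload_bytes: float  # data that must cross the CPU->GPU bus for this step
    cpu_s: float         # time to do the work on the CPU, in seconds
    gpu_s: float         # time to do the same work on the GPU, in seconds

def gpu_time_s(step: Step, bus_bytes_per_s: float) -> float:
    """Total GPU cost: transfer overhead plus GPU compute."""
    return step.upload_bytes / bus_bytes_per_s + step.gpu_s

def faster_on_gpu(step: Step, bus_bytes_per_s: float) -> bool:
    return gpu_time_s(step, bus_bytes_per_s) < step.cpu_s

# Hypothetical numbers, for illustration only.
BUS = 4e9                   # assumed effective bus throughput: 4 GB/s
IMAGE_BYTES = 36e6 * 4 * 4  # 36 MP x 4 channels x 4 bytes (float32) = 576 MB

# Switching images: the full-resolution data must be uploaded first.
switch_image = Step(upload_bytes=IMAGE_BYTES, cpu_s=0.10, gpu_s=0.01)
# Dragging a slider: the image is already resident on the GPU.
slider_update = Step(upload_bytes=0, cpu_s=0.10, gpu_s=0.01)

print("switch image on GPU is faster:", faster_on_gpu(switch_image, BUS))    # 0.154 s vs 0.10 s
print("slider update on GPU is faster:", faster_on_gpu(slider_update, BUS))  # 0.01 s vs 0.10 s
```

With these assumed numbers the slider update favors the GPU while the image switch favors the CPU, which is the pattern described in the post: the one-off transfer dominates whenever little work is done per upload.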

Third, GPUs aren't best for everything. For example, decompressing sequential bits of data from a file -- like most raw files, for instance -- sees little to no benefit from a GPU implementation.
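A simplified illustration of why sequential decoding resists GPU parallelism: in predictive (delta) coding, each sample is stored as a difference from the previous one, so sample n cannot be reconstructed until sample n-1 is known. Many raw formats combine a predictor like this with variable-length Huffman codes (lossless JPEG, as used in DNG, is one example), where even the bit position of the next code depends on decoding the previous one. The toy function below shows only the delta step and is not any real raw format.

```python
def delta_decode(deltas: list[int]) -> list[int]:
    """Rebuild samples from stored differences.

    Each output depends on the previous one (a loop-carried dependency),
    so the loop cannot simply be split across thousands of GPU threads.
    """
    samples = []
    prev = 0
    for d in deltas:
        prev += d              # needs the result of the previous iteration
        samples.append(prev)
    return samples

print(delta_decode([100, 2, -1, 3]))  # [100, 102, 101, 104]
```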

Fourth, Lightroom has a sophisticated raw processing pipeline (including tone mapping HDR images with Highlights and Shadows), and running it efficiently on a GPU requires a fairly powerful GPU. Cards that work with Photoshop itself will not necessarily work well with Lightroom. While cards that are 4 to 5 years old may technically work, they may provide little to no benefit over the regular CPU when processing images in Lr, and in some cases may be slower. Higher-end GPUs from the last 2 to 3 years should work better.

So let's clear up what's currently GPU accelerated in Lr CC and what's not:

First of all, Develop is the only module that currently has GPU acceleration whatsoever. This means that other functions and modules, such as Library, Export, and Quick Develop, do not use the GPU (performance should be the same for those functions regardless of whether you have GPU enabled or disabled in the prefs).

Within Develop, most image editing controls have full GPU acceleration, including the basic and tone panel, panning and zooming, crop and straighten, lens corrections, gradients, and radial filter. Some controls, such as local brush adjustments and spot clone/heal, do not -- at least, not yet.

While the above description may be disappointing to some of you, let's be clear: This is the beginning of the GPU story for Lightroom, not the end. The vision here is to expand our use of the GPU and other technologies over time to improve performance. I know that many photographers have been asking us for improved performance for a long time, and we're trying to respond to that. Please understand this is a big step in that direction, but it's just the first step. The rest of it will take some time.

Summary:

1. GPU support is currently available in Develop only.

2. Most (but not all) Develop controls benefit from GPU acceleration.

3. Using the GPU involves some overhead (there's no free lunch). This may make some operations take longer, such as image-to-image switching or zooming to 1:1. Newer GPUs and computer systems minimize this overhead.

4. The GPU performance improvement in Develop is more noticeable on higher-resolution displays such as 4K. The bigger the display, the bigger the win.

5. Prefer newer GPUs (faster models from the last 3 years). Lightroom may technically work on older GPUs (4 to 5 years old) but likely will not benefit much. Use at least 1 GB of GPU memory; 2 GB is better.

6. We're currently investigating using GPUs and other technologies to improve performance in Develop and other areas of the app going forward.
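Point 5 boils down to two rules of thumb, GPU memory and model age. The hypothetical checker below encodes just those two; the card names and the exact cut-off at 3 years are assumptions. It ignores raw GPU speed, which the summary also stresses, and it does not replicate the GPU test Lightroom runs itself.

```python
from dataclasses import dataclass

@dataclass
class GpuInfo:
    name: str         # hypothetical label, for display only
    vram_gb: float    # dedicated GPU memory in GB
    age_years: float  # time since the model was released

def rate_gpu(gpu: GpuInfo) -> str:
    """Rough reading of summary point 5; not Lightroom's own compatibility test."""
    if gpu.vram_gb < 1:
        return "below the suggested 1 GB minimum"
    if gpu.age_years > 3:
        return "may work, but likely little benefit"
    if gpu.vram_gb >= 2:
        return "good candidate"
    return "meets the minimum"

for card in (GpuInfo("card A", 2, 1), GpuInfo("card B", 1, 2), GpuInfo("card C", 2, 5)):
    print(card.name, "->", rate_gpu(card))
```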

The above notes also apply to Camera Raw 9.0 for Photoshop/Bridge CC.

Eric Chan

Camera Raw Engineer

---

Eric... a K5000, really? That's a $1000+ desktop card/accelerator. How about more hoi polloi types of cards? I wonder, for example, why a simple zoom in/zoom out/zoom in/zoom out is so different speed-wise between ACR 9 and, for example, FastRawViewer (NVIDIA GeForce GTX870M in my case)... Does the ACR/LR code recalculate everything post-demosaicking to do zooming? Otherwise, how come?

---

MadManChan2000 wrote:

Victor, that's not been my experience. I also have an NVIDIA Quadro card for testing (in my case, a K5000) and Develop is several times faster in the areas I mentioned above using this card with GPU acceleration on, compared to without. Note that there are many generations of Quadro cards, and just because the cards you've tried work well in other applications it does not indicate that they will necessarily work well with Lightroom.

I would appreciate some insight regarding the behavior I noted in post #7 in this discussion. Specifically, why does the image begin sharp, degrade to grossly blurry and then become sharp again. This sequence persists even though no alterations of any of the images occurred during my "tests".

---

Well, I tested on a Quadro 6000 and a GTX Titan Black. Neither is the newest card, but both are pretty good ones, and the brush tool is unusable both with and without the GPU (it's faster without). Let's face it: not everyone will have $1000-plus cards in their computers.

Graduated filters are fast, and brush adjustments are fast to move around once you've painted them, but the painting itself is unbearable, unusable. There has to be something wrong with it, because from time to time it's fast, then slow again. I see this happening on every machine I try.

Still using 5.7.1 since most of my work is retouching and I depend on the brush tool.

By the way, I'm using a Wacom.

---

Victor Wolansky wrote:

By the way, I'm using a Wacom.

This is a really long shot, but is it any better if you're not using the Wacom? I know it's an odd question, but there have been some other Wacom related bugs, so it's worth making sure we're all looking in the right place for the cause of the issues you're seeing.

Victoria - The Lightroom Queen - Author of the Lightroom Missing FAQ & Edit on the Go books.

---

Victoria, Wacom or not Wacom makes no difference... It's just totally unusable. And as you can see by everyone's comments, it's not just me.

---

Are you serious? Not all of us have thousand dollar GPUs in our machines.

Lightroom CC in its current iteration is a joke. Get back to us when you actually optimize it instead of releasing a half-finished product.

---

You are absolutely right. The average machine won't have such an expensive GPU. But my point is that it isn't usable even with a super expensive GPU, and even with the GPU disabled it isn't as fast as it was. Who really needs face recognition? Is this software migrating from professional software to a toy for Facebookers?

LR CC is a big step back in performance in every aspect, on every single machine I have installed it on.

And don't tell me that a GPU handling something in one application doesn't mean it can handle it in another. I work very closely with other companies that make software that uses the GPU to manipulate images: PFTrack, PTgui, Flame, Lustre, 3DS Max, Syntheyes, and I could keep listing names. All of them can move 8K images, no problem. With the Quadro 6000 I can paint over a 16-bit floating-point image in Flame with vector paint and the Wacom with absolutely NO lag at all. Lightroom can't even draw a curved line; it's so slow that the sampling gives me straight lines instead of curves.

I'm sure it can be optimized, but to me this version is not even worthy of early beta testing.

Please go back to the drawing board and start over. A GPU like the Titan Black should smoke painting with the adjustment brush.

---

Sorry I was quoting MadManChan2000.

I probably should've made it more clear.

---

Hi MadManChan,

MadManChan2000 wrote:

[...] I also have an NVIDIA Quadro card for testing (in my case, a K5000) and Develop is several times faster in the areas I mentioned above using this card with GPU acceleration on, compared to without.

Perhaps you could remind your product manager, when planning future development tasks, that many Lightroom users are hobbyists. Hobbyists usually don't spend $1000+ on a graphics card to gain a bit of performance. They would rather switch to a different software product (I hear Capture One Pro is a nice piece of software...). Please consider doing your tests on weaker machines, too.

---

Eric, at least you can get your LR6 to recognize the Nvidia processor... I can't with my GTX850M.

It only sees my Intel 4600 and that slows down everything.

If I force my laptop to use the high-performance GTX850M, LR6's GPU acceleration won't engage "due to errors".

Notwithstanding your initial comments, Adobe has sold us "sizzle and little steak"; those who benefit from LR6 are the 4K crowd ... a relative minority.

The on-again, off-again release date, in my mind, points to a conflict between marketing and the software engineers, the latter of whom couldn't deliver on the former's promises.

In the end, IMHO, the LR6 release was all about feeding content and revenue into the CC and standalone pipelines.

It is really not our job to install and uninstall drivers and fiddle under the hood to get the performance increases promised by marketing!

LR6 wasn't even publicly beta-tested.

I can understand why. (Hint: no one would have bought the standalone version, and the C(redit) C(ard) users would have been up in arms.)

Shame on me for upgrading to LR6.

Your comments please.

---

Thank you, Eric. I have a Mid 2012 MacBook Pro, and LR 6 is far better: the Develop module is faster, and I have NOT noticed any loss in any other areas.

I also have a 2014 Mac Pro with dual AMD Fire Pro 700s, and LR 6 runs super fast.

Looking forward to the next updates. I hope Adobe will make use of 12-core machines.

---

I hope someone at Adobe is listening.

GPU Acceleration is not working properly. And it's not just an AMD problem. Nvidia cards fail the test also.

A couple of days ago I upgraded my card from an OEM GTX660 to an Asus GTX960 in the hope that it would be solved. No such luck. On top of that the log shows that my old card is still installed, although I removed all drivers and began installation from scratch. Even in the hidden devices there is no trace of the old card. Am I missing something here?

I use Win 8.1 pro

---

Here's what worked for my setup.

I use an nvidia 960 card.

First I experimented with doing clean installs of the drivers. That didn't work.

But I noticed that when I used the default Windows driver (the non-Nvidia kind) I got acceleration, though without any of the other advantages of my graphics card.

Then I went to this page: TweakGuides.com - Nvidia GeForce Tweak Guide and learned about Display Driver Uninstaller (DDU).

After applying this free program in safe mode and reinstalling the nvidia driver, acceleration was available.

So, for all you Nvidia users: try this before reinstalling Lightroom or your entire system. It took about 20 minutes in all.

This might also work for AMD users, since the program provides an AMD setting.

---

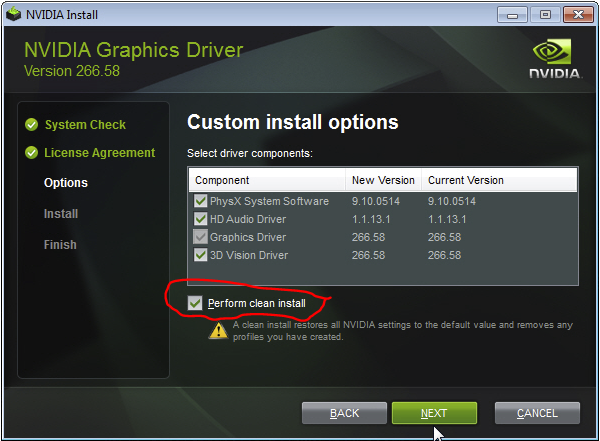

There is a 'Clean Install' checkbox in the Nvidia Driver Installer that removes the current drivers, Registry settings, and all Preferences settings. As per Nvidia at the above link, "If you have experienced install problems in the past, we offer "Perform clean install" which will remove all previous NVIDIA drivers and NVIDIA registry entries from your computer. This may resolve installer issues caused by conflicting older drivers."

Select Custom:

Select 'Perform clean install':

---

Bart Luyckx wrote:

Here's what worked for my setup. [...]

This might also work for AMD users, since the program provides an AMD setting.

Also: if you are using an 'older' processor like me, you can force PCIe 3.0 if your motherboard and BIOS allow it. Be sure to set the BIOS first to take advantage of this. Geforce Gen3 Support On X79 Platform

---

It's not so much a question of the program recognizing a card and turning on GPU "acceleration" as it is of avoiding bloat and "deceleration".

---

With this information... now I'm even more disappointed that my import time has slowed down so significantly. An Adobe rep on these forums told me to disable the GPU... but now I know the GPU has NOTHING to do with importing. So WTF, Adobe?

---

The WTF was not necessary, though... Something is certainly not right with the first production version that uses the GPU. I bet they are working to fix it, and the developers were probably under pressure from management/marketing to deliver LR6 by a certain date.

---

Personally, I would just be happy if I could view my photos in the Develop module like I used to in LR5. All the commentary leaves me a bit underwhelmed. If it wasn't going to work better, or only barely, I would have thought Adobe should have provided better product guidance or a test to indicate whether LR6 was going to run properly on my computer.

Very disappointed

---

I am also very disappointed with Lightroom CC.

Its performance is very slow, and it keeps becoming unresponsive.

---

Curious. If the CPU->GPU transfer takes a bit of time, isn't it possible to have a core that's always tasked with transferring upcoming data to the GPU (prefetching)? That would be images N+1, N+2... It's pretty predictable that most of us work sequentially.

I'm on a 4.7GHz 4790K i7 with 32GB and an R9 280X. I agree with people here: compared with LR5, LR6 seems much slower for everything except slider responsiveness, which is much faster. Heal/Clone feels like I'm working on an 8MHz 286 CPU.
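The idea in this post is prefetching: while the user looks at image N, a background worker prepares N+1, N+2, ... so the transfer cost is paid before it is needed. Below is a minimal sketch using Python's `concurrent.futures`; `load` stands for a hypothetical function that decodes an image and readies it for display (Lightroom's internals are not described in the thread), and the look-ahead depth of 2 is an arbitrary choice.

```python
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Dict

class Prefetcher:
    """Serve image `index` and keep the next `ahead` images loading in the background."""

    def __init__(self, load: Callable[[int], object], ahead: int = 2, workers: int = 1):
        self._load = load  # hypothetical: index -> decoded, display-ready image
        self._ahead = ahead
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._pending: Dict[int, Future] = {}

    def get(self, index: int, count: int) -> object:
        # Use the prefetched result if there is one, otherwise load synchronously.
        fut = self._pending.pop(index, None)
        image = fut.result() if fut is not None else self._load(index)
        # Queue the next images in the sequence (sequential browsing is assumed).
        for i in range(index + 1, min(index + 1 + self._ahead, count)):
            if i not in self._pending:
                self._pending[i] = self._pool.submit(self._load, i)
        # Drop work for images we have already moved past.
        for i in [k for k in self._pending if k < index]:
            self._pending.pop(i).cancel()
        return image

    def close(self) -> None:
        self._pool.shutdown(wait=True)


def fake_load(i: int) -> str:
    return f"image-{i}"  # stands in for decode + upload

prefetcher = Prefetcher(fake_load, ahead=2)
browsed = [prefetcher.get(i, 5) for i in range(5)]
prefetcher.close()
print(browsed)  # ['image-0', 'image-1', 'image-2', 'image-3', 'image-4']
```

Threads only overlap useful work in CPython when the loader releases the GIL (file I/O, native decoders, driver calls); for pure-Python work a process pool would be the alternative.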

---

The time of transfer from CPU to GPU should be almost unnoticeable if correctly implemented. PCIe buses can move 255 MB/s per lane, so even a cheap card working on just 4 lanes gets about 1000 MB/s. How many images can you move in a second with 1000 MB/s? Lightroom should completely decompress a RAW file as soon as you touch it, and not wait until you zoom in to do it. Also, there are plenty of glitches when the GPU is enabled and you use multiple brushes: you can get horrendous artifacts, the GUI flickers, etc. The person who decided this was ready to be released should be released from work... Or it was clearly a very poor marketing decision; sometimes programmers say "this is not ready" and marketing comes back with "don't care, send it out anyway"...
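A back-of-envelope check of the arithmetic in this post. The 255 MB/s per lane and the 4 lanes are the poster's figures; the image size is an assumption (a hypothetical 24 MP image stored as 16-bit RGB), and the estimate ignores protocol overhead, latency and any decode or conversion work.

```python
def images_per_second(lanes: int, mb_per_s_per_lane: float, image_mb: float) -> float:
    """Images of `image_mb` megabytes the link can carry per second,
    ignoring protocol overhead, latency and decode/convert time."""
    return lanes * mb_per_s_per_lane / image_mb

# 255 MB/s per lane is the figure quoted in the post; the image size is a
# hypothetical 24 MP image as 16-bit RGB: 24e6 px * 3 channels * 2 bytes = 144 MB.
LANES, PER_LANE, IMAGE_MB = 4, 255, 144
link_mb_s = LANES * PER_LANE  # 1020 MB/s, the "about 1000 MB/s" above
print(f"{link_mb_s} MB/s -> {images_per_second(LANES, PER_LANE, IMAGE_MB):.1f} images/s")
```

That works out to about 7 such images per second. Real per-lane throughput depends on the PCIe generation (roughly 250 MB/s for 1.x, 500 MB/s for 2.0, just under 1 GB/s for 3.0) and on how many lanes the slot actually provides.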

---

> also there are plenty of glitches when GPU is enabled and you use multiple brushes, you can get horrendous artifacts, the GUI flickers, etc etc..

When it's disabled, too... For example, when I switch off the GPU in ACR 9, I manage to draw several visible rectangles at once with WB sampling too... It's as if switching the GPU off somehow makes ACR 9 work an order of magnitude (or more) slower than ACR 8.

---

Well, I'm using an R9 280X in the x16 slot on a Z97X board. I just did a simple test: 195 Nikon D4 raw images and a few DNG panos from a recent shoot. I built 1:1 previews before the test, and all raw files were pre-converted to DNG. Without GPU ticked, I can hold the right arrow in Develop and go from 1 to 195 in 17 seconds. With GPU enabled, that grew to 1 minute 3 seconds (about 3.7x slower). So, while the sliders might have more FPS (as noted by the dev), image-to-image switching and most other actions have suffered GREATLY. When my jobs are in the 4000-image range, 4x per month, that image-to-image delay adds up to days of additional processing time.
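The timings from this test, turned into per-image figures. The `extra_hours` helper is an illustrative addition, not something from the thread: plug in a per-image penalty measured during real editing (this arrow-key run only captures browsing) and a batch size to estimate the added time.

```python
def per_image_s(total_s: float, images: int) -> float:
    """Average time per image for a browse run."""
    return total_s / images

def extra_hours(extra_s_per_image: float, images: int) -> float:
    """Added time over a batch, given a measured per-image penalty in seconds."""
    return extra_s_per_image * images / 3600

IMAGES = 195                            # from the post
without_gpu = per_image_s(17, IMAGES)   # 17 s total
with_gpu = per_image_s(60 + 3, IMAGES)  # 1 min 3 s total
slowdown = with_gpu / without_gpu       # equals 63 / 17

print(f"{without_gpu:.3f} s vs {with_gpu:.3f} s per image ({slowdown:.1f}x slower)")
```

This prints `0.087 s vs 0.323 s per image (3.7x slower)`.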

---

Exactly. Lightroom's original mission was volume processing. A typical week of sports/event assignments yields 10,000 images (RAW). Given the latitude of RAW, the only module worth talking about is Develop. I don't know who uses the Quick Develop controls in Library, or why.

Virtually every image I shoot is viable within that RAW exposure latitude, so I have to look at every one in Develop. The lag as images render just kills me. As you point out, all those seconds add up to hours (and days) of additional computer time. Where are Adobe's researchers? Come watch some working photographers struggle to process thousands of files on deadline, and then maybe you'll understand the urgency around performance.

As someone else said, we're working through a queue of images. I don't understand why Develop doesn't constantly pre-render the next "n" images. It's very frustrating to have a glimmer of hope that LR6 would be truly faster and live up to its workflow mission, only to find out GPU acceleration is just another stub of functionality.
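Constant pre-rendering needs a bounded store as well as a look-ahead; otherwise a 10,000-image week would eventually fill memory. Below is a minimal sketch of a least-recently-used preview cache that renders the next `ahead` images whenever one is shown. `render`, `capacity` and `ahead` are hypothetical names and values, and the look-ahead runs synchronously here for simplicity, whereas a real application would do it on a background thread.

```python
from collections import OrderedDict
from typing import Callable

class RenderCache:
    """LRU cache of rendered previews with a look-ahead window."""

    def __init__(self, render: Callable[[int], object], capacity: int = 8, ahead: int = 3):
        if capacity <= ahead:
            raise ValueError("capacity must exceed the look-ahead window")
        self._render = render  # hypothetical: index -> rendered preview
        self._capacity = capacity
        self._ahead = ahead
        self._items: "OrderedDict[int, object]" = OrderedDict()

    def _ensure(self, i: int) -> object:
        if i in self._items:
            self._items.move_to_end(i)           # mark as most recently used
        else:
            self._items[i] = self._render(i)
            if len(self._items) > self._capacity:
                self._items.popitem(last=False)  # evict the least recently used
        return self._items[i]

    def show(self, index: int, count: int) -> object:
        """Return preview `index` and pre-render the next `ahead` images."""
        preview = self._ensure(index)
        for i in range(index + 1, min(index + 1 + self._ahead, count)):
            self._ensure(i)
        return preview
```

Stepping forward one image at a time, each `show` call renders only the single new image entering the window, and the oldest preview falls out once `capacity` is reached.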
