Apply Image in LAB Mode Funky Result

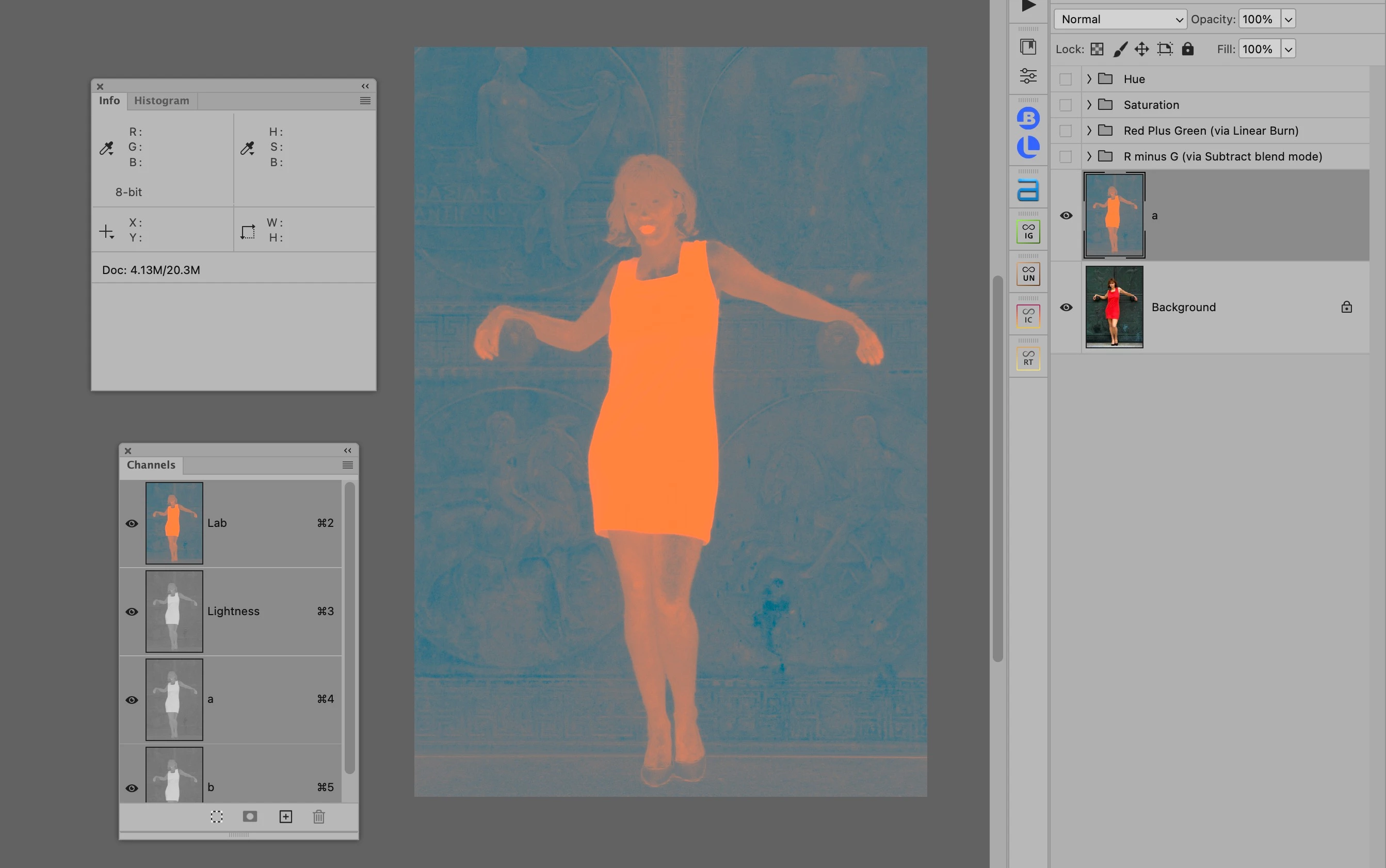

Hi, so I'm learning LAB mode and I'm trying to separate a red dress from a green background. (I'm aware that in this case there are easier methods, but I'm trying to learn the mechanics of LAB.) So I create a blank layer and go to Image > Apply Image, with Layer: Background and Channel: a. Instead of getting a grayscale image of that channel, I'm getting something in orange and green.

What am I doing wrong?
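One possible explanation can be modelled in a few lines. This is a minimal sketch, not Photoshop's actual code. It rests on two assumptions. First, Apply Image with a single source channel onto a composite LAB target (Normal, 100% opacity, no mask) copies that channel into every target channel (L, a and b). Second, 8-bit LAB values are stored as 0–255, with L* = stored × 100 / 255 and a* = b* = stored − 128. The pixel values and the helper names (`decode_lab8`, `apply_channel_to_composite`, `hue_degrees`) are hypothetical and are not measured from the photo.

```python
import math

A_CHANNEL = 1  # index of the "a" channel in an (L, a, b) tuple


def decode_lab8(l8: int, a8: int, b8: int) -> tuple[float, float, float]:
    """Assumed 8-bit LAB encoding: L* = stored*100/255, a*/b* = stored - 128."""
    return l8 * 100.0 / 255.0, float(a8 - 128), float(b8 - 128)


def apply_channel_to_composite(pixel: tuple[int, int, int], channel_index: int) -> tuple[int, int, int]:
    """Hypothetical model of Apply Image onto a composite LAB target:
    the chosen source channel is written into all three target channels."""
    value = pixel[channel_index]
    return (value, value, value)


def hue_degrees(a: float, b: float) -> float:
    """LAB hue angle in degrees, 0..360."""
    return math.degrees(math.atan2(b, a)) % 360.0


# Hypothetical stored 8-bit LAB pixels, chosen only for illustration.
red_dress = (120, 188, 170)         # a* = +60 (reddish)
green_background = (140, 88, 160)   # a* = -40 (greenish)

for name, px in [("red dress", red_dress), ("green background", green_background)]:
    L, a, b = decode_lab8(*apply_channel_to_composite(px, A_CHANNEL))
    print(f"{name}: L*={L:.1f} a*={a:+.0f} b*={b:+.0f} hue={hue_degrees(a, b):.0f} deg")

# red dress: L*=73.7 a*=+60 b*=+60 hue=45 deg
# green background: L*=34.5 a*=-40 b*=-40 hue=225 deg
```

Under these assumptions, a high "a" value ends up as a light pixel with positive a* and b*, which is a warm orange-red. A low "a" value ends up as a dark pixel with negative a* and b*, which is a cool blue-green. So the result is colored rather than gray. By the same assumption, applying channel a to a single-channel target (for example a new alpha channel) would store one value per pixel, and that displays as grayscale.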

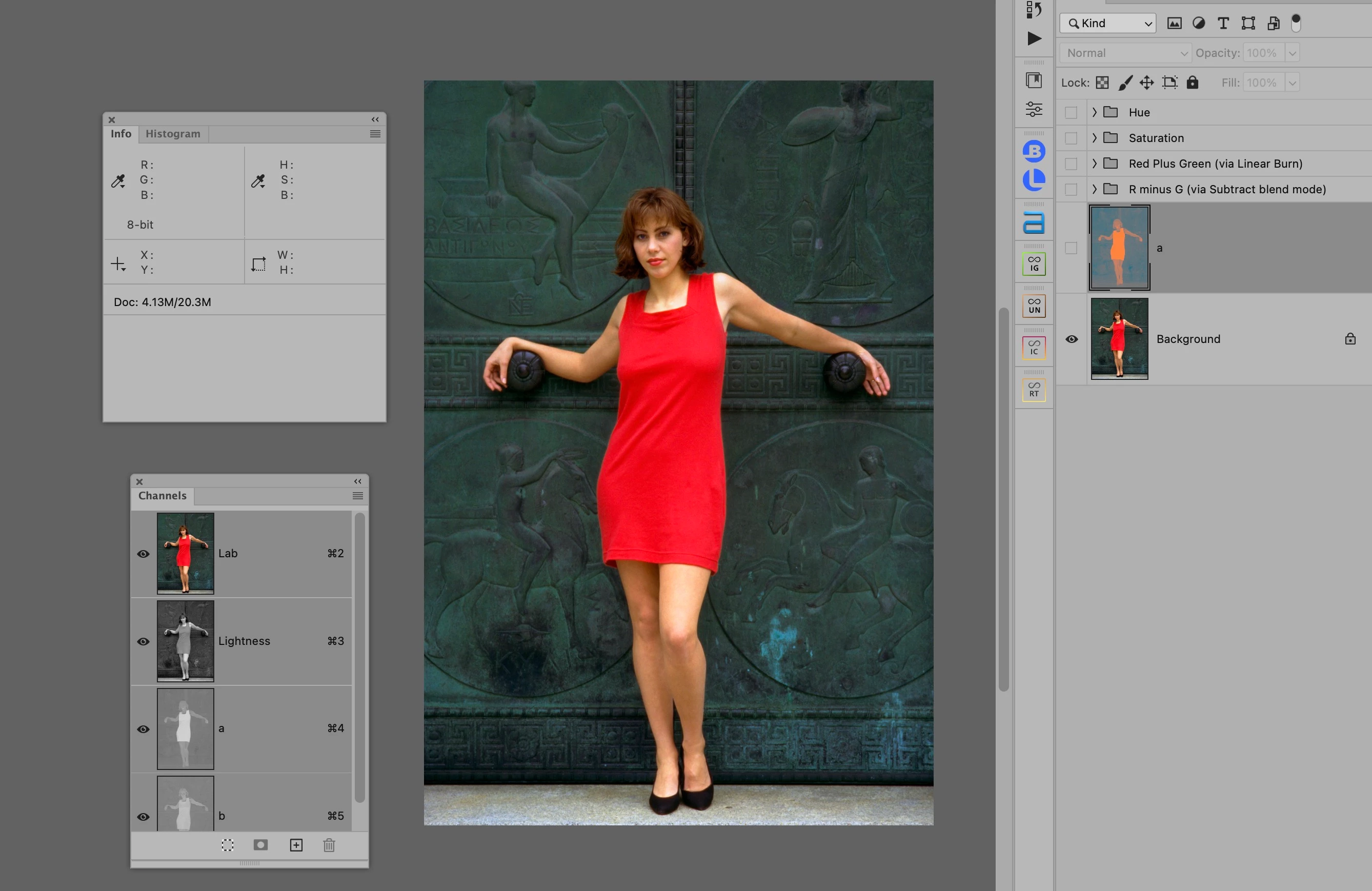

Before doing the procedure:

After attempting the procedure:

Thanks,

Daniel