Deep Color based on DirectX?

Hello,

I would like to view Deep Color photos on my Windows 10 PC.

I was told that Photoshop has a 30-bit display option, and in a discussion with Intel I was told that the Windows 10 OS/drivers cannot use Deep Color without DirectX.

So my question is: does Photoshop use DirectX to display 30-bit color?

PC Components are:

Motherboard: ASRock H170M Pro4 (Deep Color support with Display Port 1.2)

CPU: Intel Core i5 6500

GPU: Intel HD Graphics 530 (Deep Color support), no discrete GPU

Monitor: Dell UltraSharp U2516D 25", (Deep Color support with Display Port 1.2)

RAM: 2 x Crucial Dimm Kit 16GB

Thank you

Best Regards

Lore

---

Hi Lore,

Please go through this article: Photoshop graphics processor (GPU) card FAQ

Regards,

Sahil

---

Well, the only relevant thing I found in the article you recommended was:

"30-bit Display (Windows only): Allows Photoshop to display 30-bit data directly to screen on video cards that support it

Note: 30-bit display is not functioning correctly with current drivers. We are working to address this issue as soon as possible."

There was nothing about DirectX, which is a sobering result.

---

Hi Lore,

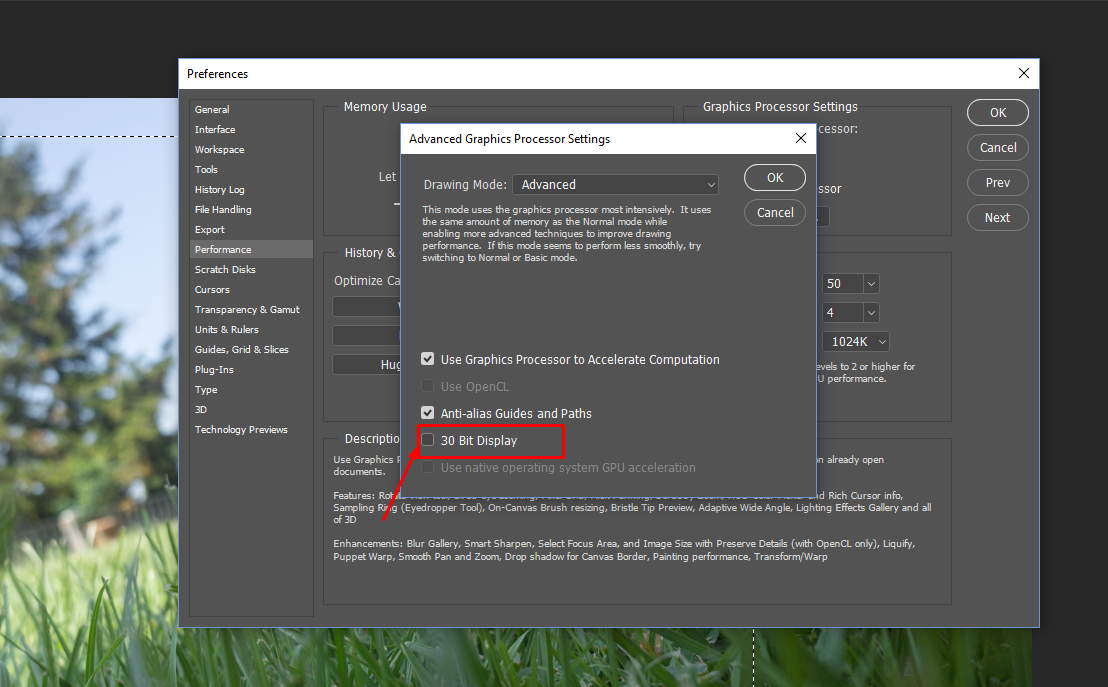

Please tick "30 bit display" in Preferences > Performance > Advanced GPU settings.

Then restart Photoshop and check again.

Regards,

Akash

---

BTW, Photoshop uses OpenGL for 30 bit color.

A Google search for "Intel HD + 30 bit color" led me to the Intel support forum. Essentially there are lots of users asking this question, and just as many evasive answers from Intel staff saying "they are continually working to improve", etc. Nothing affirmative.

There is something in the spec about "32 bit support", but what would the extra two bits be? It doesn't make any sense.
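
One possible reading of the "extra two bits": a 32-bit pixel word is commonly split either as 8 bits each for red, green and blue plus 8 bits of alpha (ordinary 24-bit color), or as 10 bits each for red, green and blue plus 2 spare/alpha bits, which is the usual way 30-bit color is stored (formats named along the lines of RGB10A2). Which one Intel's spec means isn't clear from the text. This is a minimal sketch, assuming channels are packed lowest bits first; the names `pack`, `unpack`, `RGBA8` and `RGB10A2` here are hypothetical and only illustrate that both layouts add up to 32 bits.

```python
# Sketch: two ways a "32-bit" pixel word can be divided up.
# Assumption: channels are packed lowest bits first (red, green, blue, alpha);
# real pixel formats differ in channel order.

RGBA8 = (8, 8, 8, 8)       # 24 bits of color + 8-bit alpha
RGB10A2 = (10, 10, 10, 2)  # 30 bits of color + 2 spare/alpha bits


def pack(values, widths):
    """Pack channel values into one integer, first channel in the lowest bits."""
    if len(values) != len(widths):
        raise ValueError("need one value per channel")
    word, shift = 0, 0
    for value, bits in zip(values, widths):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{value} does not fit in {bits} bits")
        word |= value << shift
        shift += bits
    return word


def unpack(word, widths):
    """Split an integer back into channel values using the same layout."""
    values = []
    for bits in widths:
        values.append(word & ((1 << bits) - 1))  # mask off the lowest `bits` bits
        word >>= bits
    return tuple(values)


if __name__ == "__main__":
    for name, widths in (("RGBA8", RGBA8), ("RGB10A2", RGB10A2)):
        color_bits = sum(widths[:3])
        print(f"{name}: {sum(widths)} bits per pixel, {color_bits} of them color, "
              f"{1 << widths[0]} levels per channel")
```

Run as a script, it reports 32 bits per pixel for both layouts, with 24 versus 30 of them carrying color (256 versus 1024 levels per channel).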

---

I don't see anything in Intel's specs about 30-bit support. The commonly cited requirement is an NVIDIA Quadro or ATI FirePro series card.

In any case, getting this to work, even when all requirements are met, is like catching fish with your bare hands. I have been trying to get it to work on a Quadro / DisplayPort / Eizo CX240 setup. It actually worked at first, but the next day it only worked at 66.67% zoom and above. The day after that it didn't work at all.

Not that it's a big deal. With a good monitor you really need very special test gradients to see the difference at all. In a photograph there is always just enough noise to conceal any banding there might be.
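
To put rough numbers on the banding point: in a full-width horizontal ramp, 8 bits per channel give 256 distinct levels and 10 bits give 1024, so each flat band is four times narrower at 10 bits. This is a minimal sketch, assuming an ideal, undithered ramp 2560 pixels wide; the test pattern and the helper names `gradient_levels` and `average_band_width` are hypothetical, and real display pipelines with dithering, lookup tables and panel processing will behave differently.

```python
# Sketch: how wide the flat "bands" are in an ideal horizontal gradient.
# Assumptions: full-range ramp from code 0 to the maximum code, no dithering,
# simple rounding to the nearest code value.


def gradient_levels(width: int, bits: int) -> int:
    """Count distinct code values in a full-range ramp `width` pixels wide."""
    if width < 2:
        raise ValueError("width must be at least 2 pixels")
    max_code = (1 << bits) - 1
    codes = {round(x * max_code / (width - 1)) for x in range(width)}
    return len(codes)


def average_band_width(width: int, bits: int) -> float:
    """Average width in pixels of each flat band in the ramp."""
    return width / gradient_levels(width, bits)


if __name__ == "__main__":
    width = 2560  # assumed ramp width in pixels
    for bits in (8, 10):
        levels = gradient_levels(width, bits)
        print(f"{bits}-bit: {levels} levels, "
              f"~{average_band_width(width, bits):.1f} px per band")
```

Run as a script, it reports 256 levels at about 10.0 px per band for 8-bit versus 1024 levels at about 2.5 px per band for 10-bit, which is the kind of difference only a smooth, noise-free test gradient tends to reveal.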

---

Intel's spec on 30-bit support (chapter 2): http://www.intel.com/content/dam/www/public/us/en/documents/white-papers/deep-color-support-graphics...

Has it never gotten back into a usable state since then?

---

Well, that white paper lost all credibility when they stated you "must have" 10/12 bit support for wide gamut displays. That's nonsense, and a clear indication they don't know what they're talking about. Color space gamut and color depth are different things.

Yes, a larger color space is more exposed to banding because the numerical steps are further apart, but a standard wide-gamut display is nowhere near large enough for that to become a problem. It works perfectly well with an 8-bit display pipeline, and people have been doing just that for many years.

I read no further.

---

OK, do I understand this correctly:

Even better GPUs and monitors can't reliably deliver 30-bit depth, so it's anyone's guess when Deep Color will become a real option?

---

I'm not the only one surprised at the lack of traction for 30-bit color. Nobody seems interested.

Partly, I think, this is because of the massive market dominance of TN-based displays, particularly in the gaming segment, which is otherwise a driving force in technology development. TN displays are fast, which is what gamers want, but they aren't capable of 30-bit depth.

There's also a bit of a built-in paradox here. The small segment of "serious" users who want 30-bit are also likely to use high-end displays where it makes very little practical difference. On a hardware-calibrated unit with 14- or 16-bit internal processing, there really is no banding, or at any rate it's not a practical problem. You still get only 256 discrete steps per channel, but so what? 30-bit would make much more of an impact on a lesser display system that is calibrated through the video card's lookup tables.

The new Apple policy of using DCI-P3-type displays, which I initially thought was pretty ridiculous since DCI-P3 is a standard for digital cinema projectors, might turn out to be a driver after all, because there is a larger standard behind it, Rec. 2020, which does specify 30-bit color and an even larger color gamut. Microsoft seems to be targeting this standard for future development too. We'll see.
