[Released] Timeline tone mapping

This feature is now shipping in v23.2 of Premiere Pro. More information is available here.

------------

In this latest public beta, Premiere Pro introduces automated tone mapping for HDR (High Dynamic Range) video to SDR (Standard Dynamic Range) video workflows.

Tone mapping works with iPhone HLG, Sony S-Log, Canon Log, and Panasonic V-Log media, as well as media that is in HLG and PQ color spaces.

TWO STEPS TO TONEMAPPING

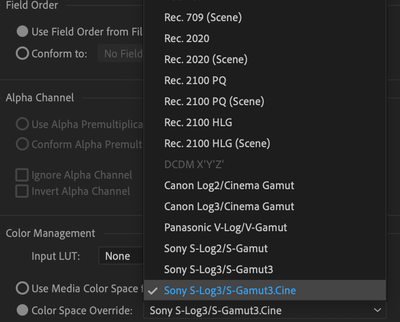

Tone mapping is done in two steps. First, in the Interpret Footage dialog, set the clip’s color space to the wide-gamut color space it was recorded in (such as Sony S-Log).

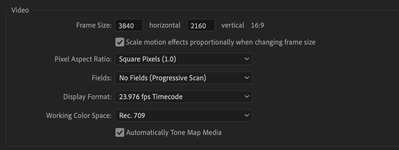

Then drag that clip to a Rec. 709 timeline. Tone mapping is applied automatically, but you can confirm that “Automatically Tone Map Media” is checked in the Sequence Settings:

SOME CLIPS INTERPRET AUTOMATICALLY, SOME REQUIRE YOUR INPUT

iPhone HLG clips are automatically detected and interpreted on import, so you don’t have to do anything: just drag and drop them onto a Rec. 709 timeline.

For Sony XAVC S-Log media, you can enable automatic detection and interpretation by checking “Log Color Management” in File > Preferences > General:

All other log media must be set up manually by overriding the color space in the Interpret Footage dialog.

COLOR MANAGEMENT GOING FORWARD

Tone mapping currently works with iPhone HLG, HLG, PQ, Sony S-Log, Canon Log and Panasonic V-Log formats.

Currently, Automatic Detection is supported only on iPhone HLG, HLG, PQ and Sony XAVC S-Log media. Other HDR and log formats will be supported in future beta releases.
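As a rough summary of the rules above, here is a small lookup sketch. The function and set names are hypothetical (this is not a Premiere Pro API), and the groupings only restate what this post says about the current beta.

```python
# Hypothetical summary of the detection rules described in this post.
# None of these names come from Premiere Pro itself.
AUTO_DETECTED = {"iPhone HLG", "HLG", "PQ"}
AUTO_WITH_PREFERENCE = {"Sony XAVC S-Log"}  # needs "Log Color Management" checked
MANUAL_OVERRIDE = {"Canon Log", "Panasonic V-Log"}

MANUAL = "manual override in Interpret Footage"


def interpretation_step(media_type: str, log_color_management: bool = False) -> str:
    """Return how a clip's color space gets set before tone mapping applies."""
    if media_type in AUTO_DETECTED:
        return "automatic"
    if media_type in AUTO_WITH_PREFERENCE:
        return "automatic" if log_color_management else MANUAL
    if media_type in MANUAL_OVERRIDE:
        return MANUAL
    return "not covered by this post"


for media in ("iPhone HLG", "Sony XAVC S-Log", "Canon Log"):
    print(media, "->", interpretation_step(media, log_color_management=True))
```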

Tone mapping HDR source media to SDR timelines is one part of a larger color management system in development for Premiere Pro that is being introduced and validated through the beta program.

This workflow offers a LUT-free way to transform your media from HDR to SDR. This is a first step, and more functionality and control will be added.
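To illustrate what a tone-mapping curve does in general, here is a minimal sketch using the textbook "extended Reinhard" operator. This is an assumption chosen for illustration only: the post does not describe the curve Premiere Pro uses, and the `peak` value of 10 (ten times SDR white) is hypothetical.

```python
def tone_map(luminance: float, peak: float = 10.0) -> float:
    """Extended Reinhard curve: maps [0, peak] onto [0, 1] with a soft highlight roll-off.

    `luminance` is linear light relative to SDR white (1.0 = SDR white).
    """
    return luminance * (1.0 + luminance / (peak * peak)) / (1.0 + luminance)


def hard_clip(luminance: float) -> float:
    """Naive alternative: anything above SDR white is simply cut off."""
    return min(luminance, 1.0)


# Two HDR highlights that are both brighter than SDR white.
for value in (2.0, 5.0, 10.0):
    print(f"in={value:5.1f}  clipped={hard_clip(value):.3f}  tone mapped={tone_map(value):.3f}")
```

With clipping, the highlights at 2.0 and 5.0 both land on 1.0 and can no longer be told apart. With the curve, they stay distinct and only the peak itself reaches 1.0.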

You can, of course, continue to work with log footage and LUT-based color workflows. This new method is an alternative color workflow that exists alongside them.

We want to know what you think. Please join the conversation below.

Hi @Baffy19,

We really appreciate your feedback. There are certain things we are already working on for Lumetri.

Stay tuned for further updates from the Color team.

Thanks,

Hi @Chetan Nanda,

This is insanely pleasing and I, like many, will look forward to it. I appreciate that you responded and dug into the problems with LUMETRI.

I would like to mention another important feature that would be in great demand: a reset button for each of the 4 WHEELS, as well as a global reset button for all of them. (I hope for this plus a group of LOG WHEELS, where there could be more wheels to affect the intermediate ranges between the main existing ones.) Right now, resetting a wheel to its default is crazy: you have to make 2 mouse clicks on the wheel itself and 1 more click on its brightness control. Count how many clicks that is and how inconvenient it is. Everyone is waiting for this.

How about giving us an option for the display to look correct on Mac, so we no longer need to use a compensation LUT? A lot of people are still having this issue and are ready to switch NLEs because of it.

Jake,

As explained in your other post ... and for all the other watchers of this thread.

Adobe CAN NOT fix what Apple broke. It's a physics issue, simple ... and period.

You cannot get the same visual image with two widely different gamma settings! And that's the cause of this issue.

Apple uses 1.96 in their ColorSync utility as the display gamma for Rec.709 'tagged' media. Which is WRONG.

It should use the standards-required 2.4.
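A quick worked example of why the two gammas can't show the same image. This is a simplified sketch that treats the display as a pure power law (an assumption, real display pipelines have more steps), using only the 1.96 and 2.4 figures above.

```python
def displayed_luminance(code_value: float, display_gamma: float) -> float:
    """Relative light output (0-1) for a normalized code value under a pure power-law display."""
    return code_value ** display_gamma


for code in (0.1, 0.25, 0.5, 0.75):
    at_24 = displayed_luminance(code, 2.4)
    at_196 = displayed_luminance(code, 1.96)
    print(f"code={code:.2f}  gamma 2.4 -> {at_24:.4f}   gamma 1.96 -> {at_196:.4f}")
```

The same mid-gray code value of 0.5 comes out at roughly 0.19 of peak at gamma 2.4 but roughly 0.26 at gamma 1.96, so the identical file looks lighter and flatter in the shadows and midtones on the lower-gamma display.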

Premiere abides by the standards, Apple does not. And my many Apple-based colorist acquaintances are FURIOUS over this also ... but correctly, at Apple. As are all color management specialists.

What NLE would you switch to? Resolve? Think again! I work in that app daily, and again, for/with/teach pro colorists. Who've demonstrated what the "Rec.709-A" export setting does.

Because to get a "proper" view on your Mac with Resolve, you can't use the normal Rec.709 export setting. You have to use the Rec.709-A setting, and yes, A is explicitly listed as meaning "Apple".

What does that do? It changes the NCLC tags to something other than the normal Rec.709 tags ... to something that is officially listed in the standards as "unassigned" ... simply because the BM devs found that for some reason, with that specific tagging, the ColorSync utility applies the correct 2.4 gamma!

So they change the file header to trick ColorSync into displaying the file correctly.

But wait ... there's more!

Because on most Rec.709 compliant systems/displays ... that NCLC tagging will get a display with the file way too dark and oversaturated!

Just like with the "Adobe gamma compensating LUT".
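Here is a sketch of the trade-off in numbers, assuming a pure power-law display model and a compensation that simply re-encodes pixel values with an exponent of 2.4/1.96. That compensation is a stand-in for illustration: it is not the actual Adobe LUT, and it is not how the Rec.709-A tagging works internally. It only models brightness, not saturation.

```python
MAC_GAMMA = 1.96        # display gamma applied by ColorSync, per the post
REFERENCE_GAMMA = 2.4   # standards-based Rec.709 display gamma, per the post


def compensate(code_value: float) -> float:
    """Hypothetical compensation: pre-darken so a 1.96 display shows the 2.4 result."""
    return code_value ** (REFERENCE_GAMMA / MAC_GAMMA)


code = 0.5
intended = code ** REFERENCE_GAMMA                     # what the grade looked like at 2.4
on_mac = compensate(code) ** MAC_GAMMA                 # compensated file on a 1.96 display
on_reference = compensate(code) ** REFERENCE_GAMMA     # same file on a 2.4 display

print(f"intended={intended:.4f}  on Mac={on_mac:.4f}  on reference display={on_reference:.4f}")
```

The compensated file matches the intended result on the 1.96 display, but on a 2.4 display mid-gray drops from roughly 0.19 to roughly 0.13, which is the "too dark" result described above.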

Is this a problem? Yes, it's a bugger all right. But there isn't any "fix" available, you just have to understand where the problem comes from.

Neil

DCI-P3 vs. REC 709.

DCI-P3 vs. S-Gamut3.

Your solution is to achieve DCI P3 space.

My solution is to work in the SLOG 3 space.

Is there a difference?

You're missing a major data point. The theoretical 'space' and volume of both Rec.2020 and even the slightly smaller theoretical S-gamut are simply that ... theoretical spaces.

Most color experts say no current camera known exceeds P3, realistically. Nor does any display space. Nor is any camera or display expected to include data outside of P3 any time soon.

So it is the widest practical, real space in use. And several color experts and colorists have noted that it is a very workable 'wide gamut' base. Especially as every manufacturer of any "serious" camera already has transforms from any gamut their cameras make to P3.

And ... going from P3 to a smaller gamut/volume is also a common thing with tons of transforms/LUTs to accomplish that change to any space you may desire.

We could as easily set say Arri-C as the wide space. Davinci Wide Gamut. Whatever. It just seemed practical to me to use the P3/D65 in a Rec.2020 container. Again, as a practical matter.

Neil

Baffy,

You don't like ... messy. And I'm sorry, but this world, by nature, is very, very messy. Color isn't any different.

The colorists I work with, teach, and learn from all stress that there is The Ideal ... and then there's what's possible and/or sensible with this particular project. Which frequently has nothing to do with The Ideal.

Is that frustrating? Yes.

The reality is, there are many ways color management and correction can be handled. And the viewer will never be able to have clue one about how it was done if done carefully and thoughtfully.

So that's why I answered as I did. Do I agree that The Ideal is a wide-gamut working space, with transform for monitoring? Yep. Stated so very clearly in fact.

Is that absolutely necessary to have good color results? No, not at all.

Which is why I pointed out that for many years, nearly all pro colorist work was transformed on input to Rec.709. There weren't the wider spaces to begin with. Does the material look good still? Yes, of course.

So ... where does maintaining a wide gamut working space during color work become really useful?

That's easy ... when you really, really have to push the media hard. As for an extreme grade, or because the DP didn't get their work done well and the exposure/contrast whatever is way, way off.

And the other thing that helps with this being messy ... in so many projects these days there are drones, DSLR and other rigs shooting H.264/5 formats. That even when they are supposedly "10 bit" ... have shall we say, amazingly thin bits?

You can't match those "up" to the better cam's media, so ... the colorist often has to bring the better stuff down mostly to the lesser stuff to get a good, visually consistent match.

And so there are projects my colorist folk work even now that are on a Rec.709 timeline, because there's mostly 8-bit media, or a significant proportion of 8-bit, and no reason to work wide gamut.
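To put a number on "thin bits" and pushing media hard, here is a small sketch that counts how many code values fall inside a narrow tonal band (40% to 50% of full range, an arbitrary choice) at 8 and 10 bits. It only models bit depth; it ignores compression, chroma subsampling, and noise, all of which matter in real footage.

```python
def codes_in_range(bit_depth: int, low: float, high: float) -> int:
    """Count the integer code values whose normalized level falls within [low, high]."""
    max_code = 2 ** bit_depth - 1
    return sum(1 for code in range(max_code + 1) if low <= code / max_code <= high)


levels_8 = codes_in_range(8, 0.4, 0.5)
levels_10 = codes_in_range(10, 0.4, 0.5)

print(f"8-bit levels in the band:  {levels_8}")
print(f"10-bit levels in the band: {levels_10}")
```

If a hard grade stretches that band across most of the output range, the 8-bit source has only a few dozen distinct steps to spread out, which is where banding comes from. The 10-bit source has about four times as many.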

Can you spot those when on your TV? I highly doubt it.

But let's say we want the Ideal of the wide gamut ... there's still a ton of very different ways to approach that. There isn't one "perfect" way.

Working in ACES is supposedly the Ideal, right? Well, that involves a transform of all media into the ACES working space/s. You've left the original behind on input anyway. And a lot of colorists don't like working in ACES because you end up with a ... not really "look" per se, but the feel of an ACES image.

Nearly all colorists I know that work with wide-gamut working spaces transform ALL clips to their favorite flavor of wide-gamut. In fact, I can't think of any that actually work all clips in their own native space. So every clip on their projects goes through a transform pre-grading.

And in fact, to them that is the whole point. They make it all the same gamut.

And every flipping one of them handles even that process differently. Some do it all in their Project and Media Pool settings. Some do partly there, partly in the nodes of their grades. And some do all their color management in the nodes.

And as I noted, frequently they will shift color space/gamut in a node to X, then back to Y in the next node, because a certain tool works better or smoother to their taste in X rather than their normal space of Y.

I've seen power grades that have up to six gamut transforms in the grade. That may or may not be used on any clip in the entire project.

What it gets back to is that it is important to handle color transforms cleanly and well. If done so, then where and how you apply them is ... totally negotiable.

And to the viewer, normally completely irrelevant.

So that is the reality of color for grading at this time. But most of the above wide gamut stuff really only applies when you are pushing the media hard for some reason. All my colorist acquaintances will say a properly shot project can be worked in a Rec.709 timeline without any notable problems. They've done that many times.

So the ones I've talked with would prefer Premiere had a wide gamut working space, just like thee & me. However ... do they have a HUGE problem with the Rec.709 workflow? No. With the proviso you get decent media, no viewer will ever know.

And ... HDR is still the Wild Wild West. With all the gambling and surprises that come with that at this time. Very few people working in Premiere will have monitors really up to the task of grading HDR. Making it look mostly ok, sure.

But in reality, for most editors, for the vast majority of uses, "mostly ok" is all that is needed. Realistically. Your viewers will be on so many different screens and viewing environments they simply can't really see what you've done "properly" anyway.

Neil

The point of camera color profiles is to let you draw out colors and play with curves as much as the space allows. In the REC 709 space we limit ourselves from the start, because it is the smallest. If we push REC 709 to its limits, the picture picks up artifacts as the color breaks down. Wider spaces give you more headroom than REC 709. That is the point of working in a wide space at the very beginning and only then limiting it to the standard 709 range.

As you noted about footage from drones shooting H.264/265 compared with LOG, BRAW, and HLG formats, drone footage will be limited in how far its color can be manipulated. This does not mean it is bad to work with it and grade it. I'm talking at a slightly deeper level, for colorists who dive into the possibilities of camera profiles.

Here is how I work with wide-range color profiles. The material is developed with the camera manufacturer's LUT, but all the primary color correction is done before that development. That is, we order the chain so that the developing LUT is the penultimate step in the color correction chain, followed by the stylistic look. This way we retain more flexibility when working with color in the profile the material was shot in. If we interpret the material before placing it on the timeline, we immediately cut the camera space down to the smallest space, REC 709.

We are not talking about wide-gamut output for the viewer. We are talking about the chronology of the color correction process. In most cases, we all output material for display in SDR. I'm not arguing with that. I meant working with color in a wide profile and, at the end of the color correction/grading process, conforming it to REC 709. I don't quite understand you: why trim the native space of material shot with a wide color gamut down to the narrow REC 709 color space at the initial stage, on the way from the project bin into Premiere, when that should be done at the very end of color correction?

I have been explaining the chronology of the process. And in great detail. There are different, and all viable, ways to handle color management. The only time I mention output is to note that the viewer will rarely be able to see a difference.

Everything else has been totally about the chronology of the process.

The reality is you can transform to Rec.709 at the beginning without suffering mass defects. The practical difference for the majority of projects ... is not something a viewer will notice.

Unless there are problems with the media itself, really. Or ... you are pushing a very hard grade. Very hard. Under those specific circumstances, then you really do want as late a transform as possible.

I don't see what's so difficult to understand there. There is not any absolute, MUST BE DONE LIKE THIS! method in use by any colorist. As noted, they tend to all be very idiosyncratic in how and when they handle transforms.

That is a chronology comment.

Again ... would I prefer the transform be later? Yes.

Is it absolutely necessary that it be later?

No. As long as it's done well, it's very workable. As has been shown by thousands of hours of professionally graded material done with an initial Rec.709 transform.

Neil

Okay, if there are different chronological options, then so be it. I'll stand by my own.

Everyone has their own patterns. Feel free!

As noted, I know a bunch of colorists. I've spent many hours in discussions at NAB and online. There is no such thing as the one and only way.

They are all highly skilled and knowledgeable. And all work different "chronological" patterns.

And what works practically for any one project may be different than what works best for another.

Neil

What's the workflow for those of us who select a bunch of media and then click "create new sequence from media"? Will there be an option to use HLG media and have it create a timeline that can be converted to 709 for exporting?

I tried to read the notes above several times to follow along, and it seems the current workflow is to create a timeline that is already Rec709 and drag your clips to it, and it will then auto tone map...

I am hoping for a way to create a compliant REC709 timeline with HLG clips by using the create new sequence from clips process, as this saves me the hassle of picking resolutions etc. in many cases.

I can't download and use the beta or I would check for this myself. I hope someone who has already used it can confirm.

Second question, which is not clear: can you create both an HLG and a REC 709 export that is compliant and not blown out with this process? Basically, build the project once and export once (or twice), but get a proper REC 709 version and a proper HLG version from the same timeline?

1) IF you are using 23.1 or later (if you read this some time from now) ... you may be able to select the Preferences option, for Tonemapping. As then, when HLG media is brought onto a timeline with Rec.709 color space, Premiere can auto-adjust the HDR media to fit within the color volume and dynamic range of Rec.709. (In my experience, it does a pretty decent job at this.)

You may also check the Log color management preference and see if that helps with your media. That is also new.

2) IF your media does not then 'fit' within a Rec. 709 sequence, you will need to do manual color management in the Project panel. Select one or more clips that are the same codec/color space, right-click/Modify/Interpret Footage, and set the Override-To option to Rec.709.

3) IF you want to handle it all yourself, you can make or acquire a transform LUT from HLG or whatever log or raw type your media is ... apply that in the Interpret Footage Input LUT slot, set the Override-To option to Rec.709.

Neil

Can we get an option for display colour management (QuickTime version) instead of using compensation LUTs? And instead of crushing all the shadow detail only to add a compensation LUT so it matches the crushed version on export.

Is this to deal with the issue of mis-application of gamma within the Mac ColorSync utility?

As that is caused by Apple choosing to apply a 1.96 gamma to Rec.709 rather than the full standard 2.4.

The problem is basically ... you can't create a file that looks the same when displayed at two significantly different gammas.

So that file looks 'crushed' on a Mac outside Pr in Chrome/Safari or Quicktime Player. But on PCs and broadcast setups with full Rec.709, it looks correct. Same image data.

Neil

Yeah, that issue. It would be good to give us the option, so that if we want it displayed incorrectly so it will look correct on YouTube, QuickTime, FCP, and mobiles instead of broadcast, then we can choose to

It occurred to me last night that one thing that might be misunderstood by many users of Premiere is how the math works in Premiere. It's something you may not know or consider, and it is crucial.

All computations concerning image data within Premiere are performed in 32 bit float.

What does that mean? Or perhaps, what are the full implications of working within that 32-bit float math? (And this is why nearly all other color/tonal tools are in the Obsolete bin ... their math ain't good.)

For an example, let's take a numerical data range of zero to one thousand. 0-1,000. All as whole integers, no fractions.

Now 'shrink' that to a range of zero to one hundred. You lose a LOT of data, right?

Well ... no, not if you use the glories of fractional mathematics. When using a proper mathematical calculation to accomplish the change, while 500 does become 50, 499 doesn't become rounded to either 49 or probably fifty ... it becomes 49.9.

You keep every one of your individual data points, just in a smaller 'range'. There is no "lost" data.

And by so doing, you can even do all sorts of mathematical operations to the data and then mathematically expand back to a range of 0-1,000 ... with all computations working out exactly as if you'd stayed in the wider range to begin with.
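The 0-1,000 example can be run directly. This is a minimal sketch in Python, with a small helper (my own, not anything from Premiere) that rounds values to 32-bit float precision so the comparison matches the bit depth described above. Strictly speaking float math has finite precision too, but the error is far smaller than one whole step of the original data.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", x))[0]


values = list(range(1001))  # the 0-1,000 whole-integer range from the example

# Whole-integer math: shrink to 0-100 with rounding, then expand again.
int_scaled = [round(v / 10) for v in values]
int_restored = [v * 10 for v in int_scaled]

# 32-bit float math: shrink to 0-100 keeping the fractions, then expand again.
float_scaled = [to_float32(v / 10) for v in values]
float_restored = [round(to_float32(s * 10)) for s in float_scaled]

print(len(set(int_scaled)), len(set(float_scaled)))            # 101 1001
print(int_restored == values, float_restored == values)        # False True
```

The integer version collapses 1,001 distinct values into 101 and cannot get them back. The float version keeps all 1,001 distinct and recovers every original value after expanding.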

So when is the data lost?

Only when having to return to a 'whole integer' type of math. Which in this case, using a Rec.709 timeline, means going to the monitor display, properly set to 100 nits, and when exporting a 'new' file.

While working the image data on the timeline through the Lumetri color controls, you are working with the fractional math. Not the after-export 'whole integer' data.

So the changes are computed as applied to the full data, simply ... mathematically ... using a smaller outside range. For both dynamic range and color.

And yes, the various mathematical models that can be used will 'look' a bit different. For example, in Resolve, you can apply transforms from a wide gamut to Rec.709 through various processes, transforms, LUTs, etc. ... and all of them will get all your data through.

However, they do not exactly look the same. Which is why colorists will have their preferences for how to do the math based on which feels easier for them to work the controls upon. Are any "wrong"? No ... not if they are built properly to keep all the data. They just use different methods in the scaling of the data. Which results in a different 'look' to the displayed image.

In Premiere, you can apply a correctly built transform LUT in the Interpret Footage Input LUT slot, and a LUT for the same purpose from a camera manufacturer, and get a slightly to notably different look to the image. But both still have all the data included due to the calculations being in 32-bit float.

Or use the transforms that the Premiere color devs have built, and get another slightly different look, but again ... with all the original data as part of the calculations.
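A sketch of that idea: two different curves that both squeeze a wide range into 0-1 without throwing anything away (each one can be inverted exactly, within float precision), yet place the same input at different output levels. The two curves and the peak of 10 are generic choices I picked for illustration. They are not the transforms used by Resolve, Premiere, or any camera manufacturer.

```python
import math

PEAK = 10.0              # hypothetical top of the wide input range
K = 1.0 + 1.0 / PEAK     # normalizer so that curve_a(PEAK) is 1.0


def curve_a(x: float) -> float:
    """Normalized Reinhard-style roll-off."""
    return x * K / (1.0 + x)


def inverse_a(y: float) -> float:
    return y / (K - y)


def curve_b(x: float) -> float:
    """Logarithmic compression."""
    return math.log1p(x) / math.log1p(PEAK)


def inverse_b(y: float) -> float:
    return math.expm1(y * math.log1p(PEAK))


for x in (0.1, 1.0, 5.0):
    a, b = curve_a(x), curve_b(x)
    print(f"in={x:4.1f}  A={a:.3f} (back to {inverse_a(a):.3f})  B={b:.3f} (back to {inverse_b(b):.3f})")
```

Both curves get every input value through and back again, but an input of 1.0 sits at about 0.55 under curve A and about 0.29 under curve B. Same data, different starting "look" under the controls.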

And when you then work the Lumetri controls on the data, it still works with the original data. Not with truncated "whole integer" data.

The program must compute the results of that work out to 'whole integer' math to send to the monitor, of course. And for exporting to an SDR/Rec.709 file.

But the calculations for the transforms, however applied, and for all work done within Lumetri, are done with 32-bit float 'referencing' the original data.

Would love to have some Cine-EI (Sony) based controls, or have it read the metadata and offset the LUT by the intended exposure. I often shoot at 800 or 1000 base ISO and then it comes in overexposed in this new workflow. As it is now, it becomes hard to accurately bring down the exposure, and I had to revert to my old methods.

OK, give full details as to your camera & media format, and the process you are currently using in Premiere.

With that I can sort through what other options might work better for you. And they have several Sony log options available of course.

They've added more user options in the last month, are you aware of those, and have you tried them?

Neil

I have a decent current workflow. This auto-recognizing log footage from Sony cameras is literally 10 years late as far as I'm concerned. The FS7 has been an extremely popular camera for about that long, and the F-55 was around before that. We've had to ad-hoc it for the entire period. I'm glad we are getting some better color options, but it's a bit LATE to have a new workflow.

I probably won't use this tone mapping thing all that much since I have a pretty established workflow and need more control over the image BEFORE a transform lut (or tone map etc) of any kind is then baked into the image pipeline.

I'm just saying if someone has this log feature enabled and the DP shot 800ISO on a cine-EI based camera you are going to have to load something in the interpret footage lut box to compensate that exposure to get anything close to correct. It's not ideal. I think there should be a way in interpret footage to lower the exposure or ISO for CINE-EI cameras or auto-read it like Catalyst browse does.
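Why the order matters can be shown with a toy example. This is a sketch under a stated assumption: the transform below is a made-up stand-in that clips to 0-1 and then applies a display encoding. It is not any real Sony LUT or the Premiere tone mapper, and a given transform may or may not clip like this.

```python
def transform_to_sdr(x: float) -> float:
    """Stand-in for a log-to-Rec.709 transform: clip to [0, 1], then apply a display encoding."""
    clipped = min(max(x, 0.0), 1.0)
    return clipped ** (1 / 2.4)


def exposure(x: float, stops: float) -> float:
    """Simple linear gain expressed in stops."""
    return x * 2.0 ** stops


highlights = [1.2, 1.6]  # overexposed values sitting above the transform's input range

exposure_first = [transform_to_sdr(exposure(v, -1.0)) for v in highlights]
transform_first = [exposure(transform_to_sdr(v), -1.0) for v in highlights]

print("exposure before transform:", [round(v, 3) for v in exposure_first])
print("exposure after transform: ", [round(v, 3) for v in transform_first])
```

Pulling exposure down one stop before the transform keeps the two highlights distinct. Doing it after the transform leaves both at the same value, because the difference between them was already gone by the time the adjustment ran.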

My current workflow is typically:

Step 1. Load footage as REC-709, don't use any of the new interpret footage settings or tone mapping.

Step 2. Load a transform LUT into the "Creative" tab (e.g. Sony S-Log3 to 709 Venice or any of the other popular ones on the market). Whatever you do, don't put anything into the Input LUT slot; it's in the wrong spot.

Step 3. Make basic exposure, CT, and contrast adjustments in the "Basic" tab as this preserves the correct order of operations and gives the best results. Alternatively you could add in a second layer above the transform lut layer in Lumetri but that just leads to a lot of mistakes.

I have always hated this part of Lumetri. Each "module" within Lumetri should be able to be added and layered as needed, instead of creating a bunch of separate effects that each carry every single module. It opens the door to a lot of mistakes.

Step 4. If time permits: Get frustrated with Lumetri's/Premiere's color correction limitations and kick it all over to Resolve, it's what it's made for. 😛

Step 5. Curse the Adobe manager who decided to abandon Speedgrade after I spent a lot of time learning it.

LOVE step 5! SO agree there ...

You've got this down basically.

Where some will find the tonemapping useful is as the first "normalization" step. And build a set of presets to mod different clips to taste as needed.

As the tonemapping is carefully designed to bring the brightness within say Rec.709 without either clipping or crushing, no lost data. And bring sat within Rec.709 if needed with as little loss of data as possible.

I've had some Sony and Panny clips where the tonemapping was actually better than I could quickly do in Lumetri.

Not exactly the aesthetic sense I wanted, but it quickly got the data to where I could take Lumetri to it and make it what I wanted.

Had someone send an AS7iii clip shot as Rec.709 primaries and conversion transform but Rec2020 HLG tonal range. Odd but intriguing clip.

Actually Pr did a great job and it was a mid-day snow scene with colored skier racing unis, some white, all the white snow and brightnesses.

I was impressed in spite of my expectations.

Neil

And dang I miss Sg ... that was heavily targeted and FAST to use.

Neither of which seems natively a description for Lumetri.