Adobe Community

Sure, it says Unsupported, but I have a doubt, and I'm scared as *BEEEP!* now that I've seen this topic I've been looking for.

I own a GTX 660 Ti OC 2GB and it works perfectly with my Premiere Pro CC 2014. However, I was about to buy a GIGABYTE Nvidia GTX 970 until I thought: "Will it work with my Premiere Pro CC 2014? Better ask." I've been asking and nobody has replied to me.

Because I don't want to buy a card that'll NOT WORK AT ALL with my CC 2014!

In other words: if I buy a GTX 970, will it work with Adobe Premiere Pro CC 2014 COMPLETELY, at FULL POWER, supported or not??

Please reply ASAP!!!

Thanks in advance

DV

1 Correct answer

Right now the 900 series cards are testing fine with the MPE engine and acceleration. I have not seen any limitations, including with effects, so I am not sure where people are running into problems. AE acceleration is for the ray tracer, which is on the way out. Don't expect Nvidia to keep supporting in its drivers the version AE was left at, and I would be surprised if any new cards work with it, since Adobe is done updating it. Very few use it at this point, and C4D with Octane is far better, especially for the GPU acceleratio

...

Bill Gehrke wrote:

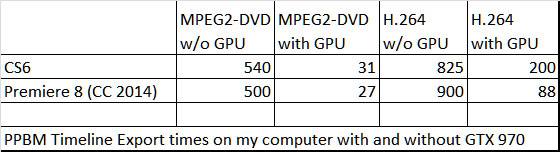

I am not sure about all the problems this thread is seeing but with my GTX 970 SC I am getting absolutely great results. Here is documentation to show 99% usage when I export the PPBM MPEG2-DVD timeline with GPU acceleration. With my specific computer with CPU only it takes about 500 seconds to export that timeline and with the GTX 970 using CUDA hardware acceleration it takes 27 seconds. Using the much more complex H.264 timeline with the CPU only it takes about 900 seconds and with the GTX 970 hardware acceleration it takes 88 seconds.

Here is first plot, GPU-Z showing 99% GPU Load

Just for correlation here is the EVGA NV tool showing the same 99% GPU load
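For scale, the speedups implied by those export times can be worked out directly. This is a minimal sketch in Python that uses only the approximate timings quoted above; the helper name `speedup` is made up for illustration.

```python
def speedup(cpu_seconds: float, gpu_seconds: float) -> float:
    """Return how many times faster the GPU-accelerated export was."""
    return cpu_seconds / gpu_seconds


# Approximate export times quoted in the post, in seconds: (CPU only, GTX 970 with CUDA).
timings = {
    "MPEG2-DVD": (500, 27),
    "H.264": (900, 88),
}

results = {name: round(speedup(cpu, gpu), 1) for name, (cpu, gpu) in timings.items()}

for name, factor in results.items():
    print(f"{name}: about {factor}x faster with CUDA")
```

On that one machine this works out to roughly 18x for the MPEG2-DVD timeline and roughly 10x for the H.264 timeline. Other systems will land elsewhere.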

Are these results with CS6 or CC?

I'd like to know too. Perhaps CS6 was able to use the full potential of the GPU while CC 2014 is having some issues... I think I'll keep the CS6 version around just in case. Can someone confirm?

Given the H.264 results, it can only be done with CC 2014. Not CS6.

cc_merchant is correct, but that is an interesting challenge, so I will run the CS6 results. Remember that Adobe made major improvements to GPU utilization with 4K media in Premiere 8. But here are the CS6 versus v.8 results

With my gtx770 card, I got it because 1) I saw several comments from people using this within Adobe that it was so good they'd even replaced Quadro cards for better output and 2) it was a couple hundred less than other 'comparable' cards.

I've had a couple of private responses from people for whom this card is wondrous. GPU-Z seems to find it quite accessible and uses it easily in testing. It's just that ... somehow ... on my machine, in my parallel universe, Adobe DVAs aren't mating up well with it. Why? Dunno.

Monday or Tuesday I'll have the time at machine to run the benchmark, see if that gives any answers.

Neil

Well, I reloaded and cleaned up everything last night and can now report that PP CC14 (8.1) is indeed playing nice with my MSI GTX 980. I'm a little behind on work right now, so I don't have time to do any benchmarking, but I'm satisfied to stick with it for a few days instead of pulling the trigger on a GTX 780 replacement.

@mercuriusdormit: Did you use the Adobe "supported cards" and that NVENC hack, or... was it "natural"?

DV2FOX wrote:

@mercuriusdormit: Did you use the Adobe "supported cards" and that NVENC hack, or... was it "natural"?

There is no supported cards txt file in CC14 to hack, so it is recognizing the GPU on its own.

Then did you use the NVENC hack?...

DV2FOX wrote:

Then did you use the NVENC hack?...

no

...That's actually suspicious... I mean, not that you're hiding anything. It's just that I thought from the beginning that PPCC2014 (before the current 8.1 version?) used ONLY MY CPU instead of my GPU... What should I do? 100% uninstall PPCC2014 AND Media Encoder CC 2014, delete the NVENC hack and try rendering something long to see the waiting time...

...OR should I just choose a 720p/1080p option from the OFFICIAL presets, configure it to my liking and see if it goes fast?

Thanks in advance... Weird that Nvidia didn't warn about this change in the latest patch update log...

NVENC is completely irrelevant for PR. It is only suitable for gamers like yourself.

But I have a GTX 970 and YOU have a GTX 980, AND THAT'S MAINLY FOR GAMING TOO. They're both in the same 900 series. The thing is what I said before: I could export stuff pretty fast with my GTX 660 until I changed to a 970, and then things slowed down dramatically, with PPCC2014 using mainly the CPU instead of the GPU. I had to make the program recognize my GPU, and I'm depending on the NVENC thing to export, sadly using only 20% of the GPU (dunno where the limit to that is).

If there was a way to use the full potential, or 3/4, of the GPU to dramatically speed up exports, that'd be great, but I think it's not possible somehow, unless I am missing something...

You have to realize what you are doing.

The 660 worked perfectly well with PR in native mode. No need to add NVENC into the equation. You changed it to a 970 on a system that bottlenecks that 970 because it cannot feed the 970 quickly enough to fully use its potential. Then you introduced NVENC, a factor that is not supported (at least by Adobe), into the equation, and now you complain that the card does not deliver what you expected. Well, that might be caused by unwarranted expectations, by not recognizing your system's limitations, or by the introduction of NVENC into the equation.

Adding a Ferrari V12 engine to a Toyota Aygo does not make it run faster, but it will run at 5% of its potential, instead of the standard engine that runs at 80 - 100% of its potential during normal use.

Helping you solve this issue as it stands is highly complex because of the additional factors you introduced. When solving a problem, you have to return to the basics and work from there, not make things even more complex than they already are.

Yes, cc, I agree that some part of the OP's system isn't up to the performance standards of the rest of that build. If the CPU is at 100% while the GPU is barely used at all, then yes, it is the CPU that's bottlenecking things. But if the CPU and GPU are both averaging well below 100% while the disk light is constantly solid on, the disk subsystem is the bottleneck.
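That rule of thumb can be written down as a small decision function. The sketch below is only an illustration in Python: the function name and the 95%, 20% and 80% thresholds are assumptions chosen for the example, not measured values, and the readings would come from whatever monitoring tool you use.

```python
# Illustrative thresholds (assumptions, not measured values).
CPU_SATURATED_PCT = 95   # "CPU is at 100%"
GPU_BARELY_USED_PCT = 20  # "GPU is barely used at all"
WELL_BELOW_FULL_PCT = 80  # "averaging well below 100%"


def likely_bottleneck(cpu_pct: float, gpu_pct: float, disk_constantly_busy: bool) -> str:
    """Guess the limiting component from average load readings during an export."""
    if cpu_pct >= CPU_SATURATED_PCT and gpu_pct <= GPU_BARELY_USED_PCT:
        return "cpu"
    if (cpu_pct < WELL_BELOW_FULL_PCT
            and gpu_pct < WELL_BELOW_FULL_PCT
            and disk_constantly_busy):
        return "disk"
    return "unclear"


print(likely_bottleneck(100, 20, False))  # CPU pegged, GPU mostly idle
print(likely_bottleneck(50, 40, True))    # both loafing, disk light solid on
print(likely_bottleneck(60, 60, False))   # nothing obviously saturated
```

Anything that comes back as "unclear" needs a closer look, for example at RAM usage or at the codec being decoded.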

Looking at what the OP posted, it is likely that his CPU is partly to blame. It is also likely that the CPU has a cooling system that cannot cope with even a very slight overclock. Given that most i5-2500K CPUs are highly overclockable (to at least 4.5 GHz), it is the OP's refusal to overclock or upgrade the CPU cooler that made me recommend that the OP stick with his "old" GTX 660 instead of "upgrading" to a high-end GPU that IMHO needs at least a highly overclocked and super-expensive hexa-core CPU plus tons of RAM to get anywhere near full advantage from it. (At present there are no GTX 900 series GPUs below the 970. Among the GTX 700 series GPUs that are "newer" than the GTX 660, the GTX 750 Ti would have been a slight downgrade, while the GTX 760 is of the same GPU generation as the GTX 660: at best an upgrade that doesn't improve performance enough over the existing GTX 660 to justify its cost.)

The problem is that I'm waiting for Intel's Skylake so I can do a more future-proof upgrade instead of buying a current MB/CPU. You know: better CPU performance, 16GB DDR4, etc., so it wouldn't bottleneck the GPU. But I can't do it now for personal reasons.

And stop with the car comparison, I've heard that too many times...

There's a little bit of bad news for Skylake waiters:

The initial CPUs that will be released for the new socket LGA 1151 will be multiplier locked, which means that the initial Skylake CPUs cannot be overclocked at all. This can actually result in lower performance than today's sensibly overclocked systems.

Source Link

I'm sorry, but there are no benchmark results yet for desktop Skylake CPUs at this time. What I stated was a guesstimate based on the potential performance of a locked-to-stock Skylake S-series CPU and a current overclocked-to-4.5GHz Haswell refresh CPU. There will be unlocked K-series Skylake CPUs – but those will not be released until some time after the locked-to-stock Skylake parts have already been on the market.

Now, going back to your original topic:

Yes, the GTX 970 will be held back by your current system's i5-2500(K) CPU, and also by your outdated disks, to the point where an i5 with a top-of-the-line GPU actually benchmarks slower than an i7 with a much lower-end GPU in the H.264 AVC Blu-ray encoding test of the PPBM8 benchmark suite for Premiere Pro CC 2014. As a matter of fact, even on a quad-core Haswell i7-based PC with a typical 2-disk RAID 0 array, the GTX 970 does not perform sufficiently faster than a GTX 750 or 750 Ti in the Adobe Creative Cloud applications to justify the price difference.

I am seeing ZERO performance increase with my GTX 970sc. Encoding an H.264 file at 32 Mbps (49 minutes long) using Media Encoder, with the sequence dragged in from Premiere, takes 92 minutes whether GPU acceleration is enabled or not. And monitoring with Afterburner, my GPU is showing 0% usage during the render.

Windows 8.1, Premiere Pro and Media Encoder CC (latest versions), all drivers up to date.

Something isn't set up right, but I don't know what else to try...

That is exactly what should happen, because encoding to an export format is done by the CPU, not the GPU. No performance gain from a video card. See Tweakers Page - What video card to use?

From there:

Category B

If you belong to category B, the first thing you should do is to read Tweakers Page - Exporting Style to help you understand in what situations a top-notch video card, or even two cards in the case of CC can be helpful and when the impact of the video card is minimal. Depending on your export style, it is quite common that the video card is not used at all or only minimally.

Often people look at GPU usage during export and are surprised that the GPU is not used. That clearly shows that encoding is CPU bound. That is not a bug in the software, that is a bug in (unfounded) user expectations.

See the RED warning

Understood... interesting article. I did a test render applying only effects that support acceleration and saw a nearly tenfold speedup with the CUDA-accelerated render vs. software only (even though my 970 still showed 0% usage). I guess rendering RGB curves and noise isn't very taxing for a modern GPU.

If you want to see what 'taxing a modern CPU / GPU' means, try out the benchmark at PPBM6 for CS6 and later

Moxtelling wrote:

Sorry if this question is a bit off topic, but besides quicker encoding (exporting a sequence from PPro), will there be any other noticeable improvements from upgrading from a GTX 570 to a GTX 780 or GTX 970? Will timeline playback be faster and more fluid, with or without effects?

Cheers 🙂

Just for you, I ran a test with our Premiere Pro BenchMark (PPBM), specifically with the H.264 timeline. I have my playback resolution set to full resolution on this laptop. With GPU hardware acceleration enabled I recorded 73 dropped frames when playing the timeline; with CPU only it recorded 1298 dropped frames.
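To put those two playback numbers side by side, here is a quick Python sketch using only the dropped-frame counts from the test above (the variable names are arbitrary):

```python
# Dropped frames reported for the PPBM H.264 timeline at full playback resolution.
cpu_only_dropped = 1298
gpu_accel_dropped = 73

# Percentage of the CPU-only dropped frames that GPU acceleration avoided.
reduction_pct = round(100 * (cpu_only_dropped - gpu_accel_dropped) / cpu_only_dropped, 1)

# How many times more frames were dropped without GPU acceleration.
ratio = round(cpu_only_dropped / gpu_accel_dropped, 1)

print(f"{reduction_pct}% fewer dropped frames, about {ratio}x fewer overall")
```

That is a single run on one laptop, so treat it as an indication rather than a general figure.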

Just replying as a general umbrella answer about upgrading computer components.

The application, render engine, filter, and codec all have their differences. There are dozens of things to consider and just as many companies responsible, other than Adobe.

There will never be a clean answer to the question of a specific piece of hardware and exactly what benefits it affords, since the rest of the computer's hardware must also be put into question.

At best, you should investigate your own workflow and check who made each piece of your most commonly used software tools. Visit their sites, ask some questions and find out. That goes for this generation of hardware and all of them in the future.

The only real blanket statement that stands the test of time is that adding more performance to any part of your system should help. A video card alone is just one part. You need to know your own hardware and the bottlenecks involved. While I could say "of course a 980 will speed you up" and be mostly correct, if your CPU and memory are ancient, it will barely be worthwhile. A less expensive card and a CPU/memory/HD upgrade might make more sense. Everyone's personal situation has a different answer.