Haswell-E benchmarks

Hello all. It is once again time for some benchmarks with the new release. I will also include the previous-generation X79 4930K clocked at 4.4GHz and a dual 10-core Xeon system as references. I will add them as I get them done. Please let me know if you have any questions.

5960X @ 3.5GHz

64GB DDR4 2400

1TB Crucial M550 SSD

780Ti

AVCHD 4 Layer 30 Minute test

Adobe CC2014

3 Layer - 14:35 (Match Source selected)

3 Layer - 28:57 (HDTV 1080P 23.976)

4 Layer - 16:01 (Match Source selected)

4 Layer - 31:37 (HDTV 1080P 23.976)

Red 4K to DPX 4096 x 2048 24p Full Range (max bit depth) 30 seconds of media

3 Layer - 2:08

4 Layer - 2:08

Red 5K 98 Frame to DPX 5K 23.976 slow motion Frame Full Range (max bit depth) 30 seconds of media

1 Layer - 2:12

Red 6K to DPX 6K (max bit depth) 20 seconds of media

1 Layer - 1:31

Red 4K to H264 4K 30 seconds of media

4 Layer - :50 (Match Sequence H264)

DNG 2.4K to H264 2.4K 26 seconds of media

1 Layer - :15

AE CC 2014

Red 4K to AVI Lossless 4K 30 seconds of media

1 Layer: 2:19

5960X @ 4.5GHz

64GB DDR4 2400

1TB Crucial M550 SSD

780Ti

AVCHD 4 Layer 30 Minute test

Adobe CC2014

3 Layer - 11:36 (Match Source selected)

3 Layer - 22:54 (HDTV 1080P 23.976)

4 Layer - 12:48 (Match Source selected)

4 Layer - 24:58 (HDTV 1080P 23.976)

Red 4K to DPX 4096 x 2048 24p Full Range (max bit depth) 30 seconds of media

3 Layer - 1:54

4 Layer - 1:58

Red 5K 98 Frame to DPX 5K 24 Frame slow motion Frame Full Range (max bit depth) 30 seconds of media

1 Layer - 1:58

Red 5K 98 Frame to DNxHD 1080 23.976 36 OP1A Frame 30 seconds of media

1 Layer - :12

Red 5K 98 Frame to DNxHD 440X 1080P 60 frame OP1A Frame 30 seconds of media

1 Layer - :14

Red 6K to DPX 6K (max bit depth) 20 seconds of media

1 Layer - 1:21

Red 4K to H264 4K 30 seconds of media

4 Layer - :49 (Match Sequence H264)

DNG 2.4K to H264 2.4K 26 seconds of media

1 Layer - :13

AE CC 2014

Red 4K to AVI Lossless 4K 30 seconds of media

1 Layer: 1:59
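To put the two clock speeds side by side, here is a small Python sketch that computes the percentage of export time saved at 4.5GHz versus 3.5GHz, using only the tests that were run with identical settings in both lists (the 5K DPX test is left out because its target frame rate differs). The "ideal" figure assumes export time scales perfectly with clock speed, which is an idealization rather than something measured here.

```python
# Export times copied from the two result lists above: (3.5GHz, 4.5GHz).
RESULTS = {
    "AVCHD 3 Layer (Match Source)": ("14:35", "11:36"),
    "AVCHD 3 Layer (HDTV 1080P)": ("28:57", "22:54"),
    "AVCHD 4 Layer (Match Source)": ("16:01", "12:48"),
    "AVCHD 4 Layer (HDTV 1080P)": ("31:37", "24:58"),
    "Red 4K -> DPX, 3 Layer": ("2:08", "1:54"),
    "Red 4K -> DPX, 4 Layer": ("2:08", "1:58"),
    "Red 6K -> DPX": ("1:31", "1:21"),
    "Red 4K -> H264, 4 Layer": (":50", ":49"),
    "DNG 2.4K -> H264": (":15", ":13"),
    "AE: Red 4K -> AVI Lossless": ("2:19", "1:59"),
}


def to_seconds(t: str) -> int:
    """Convert 'm:ss' or ':ss' (as written in the lists) to seconds."""
    minutes, seconds = t.split(":")
    return int(minutes or 0) * 60 + int(seconds)


def time_saved(before: str, after: str) -> float:
    """Percentage of export time saved going from `before` to `after`."""
    b, a = to_seconds(before), to_seconds(after)
    return 100.0 * (b - a) / b


# Assumption: with perfect clock scaling, time would drop by 1 - 3.5/4.5.
IDEAL = 100.0 * (1 - 3.5 / 4.5)

if __name__ == "__main__":
    for name, (slow, fast) in RESULTS.items():
        print(f"{name:32s} {time_saved(slow, fast):5.1f}%  (ideal {IDEAL:.1f}%)")
```

Run as-is, the AVCHD exports come in about 20 to 21% faster, close to the ideal 22.2%, while the 4K H.264 export improves by only 2%.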

The playback and export performance with CC 2014 is now relatively consistent. CPU load was spread across all 16 threads during both playback and export. GPU load consistently reached 90 to 98% when the benchmark tests included GPU-accelerated plugins and scaling of multiple layers. Overall efficiency is far better, which is why I didn't add notes after each test.

The 8-core, clocked at both 3.5GHz and 4.5GHz, played back 4K, 5K 98 frame (both 24 and 60 frame playback), and even 6K at full resolution without dropping frames. The 5K playback was smooth regardless of slow-motion or full-motion preview setup. The increased bandwidth and speed of the RAM are definitely having an impact there. RAM usage reached 30GB in Premiere during testing, but AE went well over 46GB on export. GPU memory usage hit 2.5GB on the 3GB card with 4K+ media in Premiere, but normally stayed around 1GB for 1080p.

I also included some requested DNxHD OP1A exports from 5K media as a comparison of media duration to encoding time for offline editing. I will be testing the 6-core Haswell-E CPU after I do some testing with the RAM at its stock 2133 speed.
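The media-duration-to-encoding-time comparison can be expressed as a "real-time factor": seconds of media encoded per second of wall-clock time. The sketch below applies it to a few of the 4.5GHz results; the function names are illustrative, and a factor above 1 means the export ran faster than real time.

```python
def to_seconds(t: str) -> int:
    """Convert 'm:ss' or ':ss' (as used in the result lists) to seconds."""
    minutes, seconds = t.split(":")
    return int(minutes or 0) * 60 + int(seconds)


def realtime_factor(media_seconds: float, encode_time: str) -> float:
    """Seconds of media encoded per second of wall-clock time."""
    return media_seconds / to_seconds(encode_time)


# 4.5GHz results: (description, seconds of media, export time)
exports = [
    ("5K -> DNxHD 36 1080", 30, ":12"),
    ("5K -> DNxHD 440X 1080p60", 30, ":14"),
    ("4K -> DPX, 3 Layer", 30, "1:54"),
    ("6K -> DPX", 20, "1:21"),
]

if __name__ == "__main__":
    for name, media, t in exports:
        print(f"{name:26s} {realtime_factor(media, t):.2f}x real time")
```

By this measure the DNxHD offline exports run at roughly 2.1 to 2.5x real time, while the full-range DPX exports run at about a quarter of real time.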

Eric

ADK

Yes, the 780Ti was a little faster, but not by enough to outweigh the advantages of the GTX 980. The GTX 980 also was not consistently running near the load that the 780Ti and Titan cards were. This means the GTX 980 has more headroom that Adobe is simply not using at this time.

Eric

ADK

Hi Eric, to clarify: these are all export-time tests, correct? (i.e., when you're done with a given project or you're sharing interim results).

Curious - what other tests are important to you when working on projects? What about real-time playback at differing resolutions, warp stabilization times, etc.?

Yes, all of these are export times. We use export times because they push the CPU and GPU to the limit of what the player and codecs can handle. They are also much easier to standardize than real-time playback. For now, I judge real-time playback performance by observing hardware load, and will do so until we have a standardized project that most systems struggle to play back in real time.

Eric

ADK

Got it. Thanks for posting these, very helpful.

Hi Eric,

By extension, are you saying that for Premiere Pro, a 5960X with 64GB RAM and 2 x GTX Titan Black is better than 2 x 10-core E5-2687W v3 CPUs with 64GB RAM and 2 x Titan Black?

What if the use is something like 40% Premiere, 20% After Effects and 20% DaVinci?

Last question: I just read about Fincher's 6K (RED Dragon) workflow on Gone Girl in Premiere Pro, and they were all dual-Xeon workstations equipped with K5200 (and one K6000) GPUs. I have read many times that GeForce is best for Premiere, but... it seems these guys would be pretty smart about their production hardware. Thanks for the great benchmarks, BTW.

Ben

The render performance would be better on the 5960X clocked over 4GHz. The real-time playback ceiling would be higher with the dual Xeon. However, the dual Xeon would need 128GB of RAM to really make use of all those threads; 64GB won't cut it with that many threads, especially in AE. DaVinci would perform better on a single, higher-clocked i7 with up to 2 video cards if you factor rendering into the equation. If you add a third video card, then you definitely want the dual Xeon.
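One way to frame the RAM point is memory per hardware thread. The sketch below is only an illustration under stated assumptions: it assumes Hyper-Threading on both systems (16 threads for the 8-core 5960X, 40 for two 10-core E5-2687W v3 chips) and uses GB per thread as a rough yardstick. It is not a formula from the thread.

```python
def gb_per_thread(ram_gb: float, sockets: int, cores_per_socket: int,
                  threads_per_core: int = 2) -> float:
    """RAM available per hardware thread (assumes Hyper-Threading by default)."""
    threads = sockets * cores_per_socket * threads_per_core
    return ram_gb / threads


# (label, RAM in GB, CPU sockets, cores per socket)
configs = [
    ("i7-5960X, 64GB", 64, 1, 8),
    ("2x E5-2687W v3, 64GB", 64, 2, 10),
    ("2x E5-2687W v3, 128GB", 128, 2, 10),
]

if __name__ == "__main__":
    for name, ram, sockets, cores in configs:
        print(f"{name:24s} {gb_per_thread(ram, sockets, cores):.1f} GB per thread")
```

This prints 4.0, 1.6 and 3.2 GB per thread: at 64GB the dual Xeon has well under half the memory per thread of the 5960X, and 128GB brings it most of the way back.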

The K6000 and the Titan Black use the same GPU with regard to specs, other than the extra VRAM on the K6000. However, 6GB of VRAM on each card is more than enough for almost all workflows. They likely chose the K6000 because that is what the dual-Xeon workstation manufacturer sold with those systems; Tier 1 system configurators normally only sell Quadro cards with their dual-Xeon workstations. There would be absolutely no difference in GPU acceleration performance between the K6000 and the Titan Black in those systems with those applications, even if they were using V-Ray on some of the workstations.

Eric

ADK

Hi Eric,

Thank you for posting this information, as I was deciding between a Xeon and an overclocked 5960X. However, regarding your last statement about Titan cards, do you think a Titan Z would be a better investment than its SLI 980 counterpart? Thanks again.

Kris

Hi Eric (or others),

The plot deepens.

So is the reason a dual Xeon with 3 x Titan Black, for example, will pull ahead of a 5960X config with 3 x Titan Black that the 5960X only has 40 total PCIe lanes available and the dual Xeon has 80? In other words, the 5960X would run x16 / x16 / x8 (so the third Titan Black gets only half the bandwidth), whereas the dual Xeon would run x16 / x16 / x16 (and actually have 32 lanes of PCIe bandwidth left)?
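The lane arithmetic in the question can be checked with a tiny sketch. It is idealized: it counts only lanes wired directly to the CPU(s), assuming 40 per i7-5960X or Xeon E5 v3 chip, and ignores chipset lanes and any PCIe switch chips a board adds. It also says nothing about how much GPU work each CPU can actually feed.

```python
def leftover_lanes(cpu_lanes: int, slot_widths: list[int]) -> int:
    """Return CPU PCIe lanes left after wiring the given slots; raise if they don't fit."""
    used = sum(slot_widths)
    if used > cpu_lanes:
        raise ValueError(f"slots need {used} lanes but only {cpu_lanes} are available")
    return cpu_lanes - used


if __name__ == "__main__":
    # i7-5960X: 40 CPU lanes, three GPUs at x16/x16/x8
    print(leftover_lanes(40, [16, 16, 8]))       # 0
    # Two Xeon E5 v3 CPUs: 2 x 40 = 80 lanes, three GPUs at x16/x16/x16
    print(leftover_lanes(2 * 40, [16, 16, 16]))  # 32
```

Asking for x16/x16/x16 on 40 lanes raises an error, which is why the third slot drops to x8 on a single Haswell-E CPU without extra switch hardware.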

I have been wondering about this, and if it's true, why would ASUS release the X99-E WS, which has four-way x16 PCIe capability?

Man this is really hairy! Thanks again.

Ben

The ASUS X99-E WS gets more lanes than normal by adding third-party PCIe chips, which gives it more latency than a dual Xeon. That is a small price to pay for a much lower cost, and with the i7-5960X you can overclock and get much more performance at no additional cost! That is probably the route I am going to take!

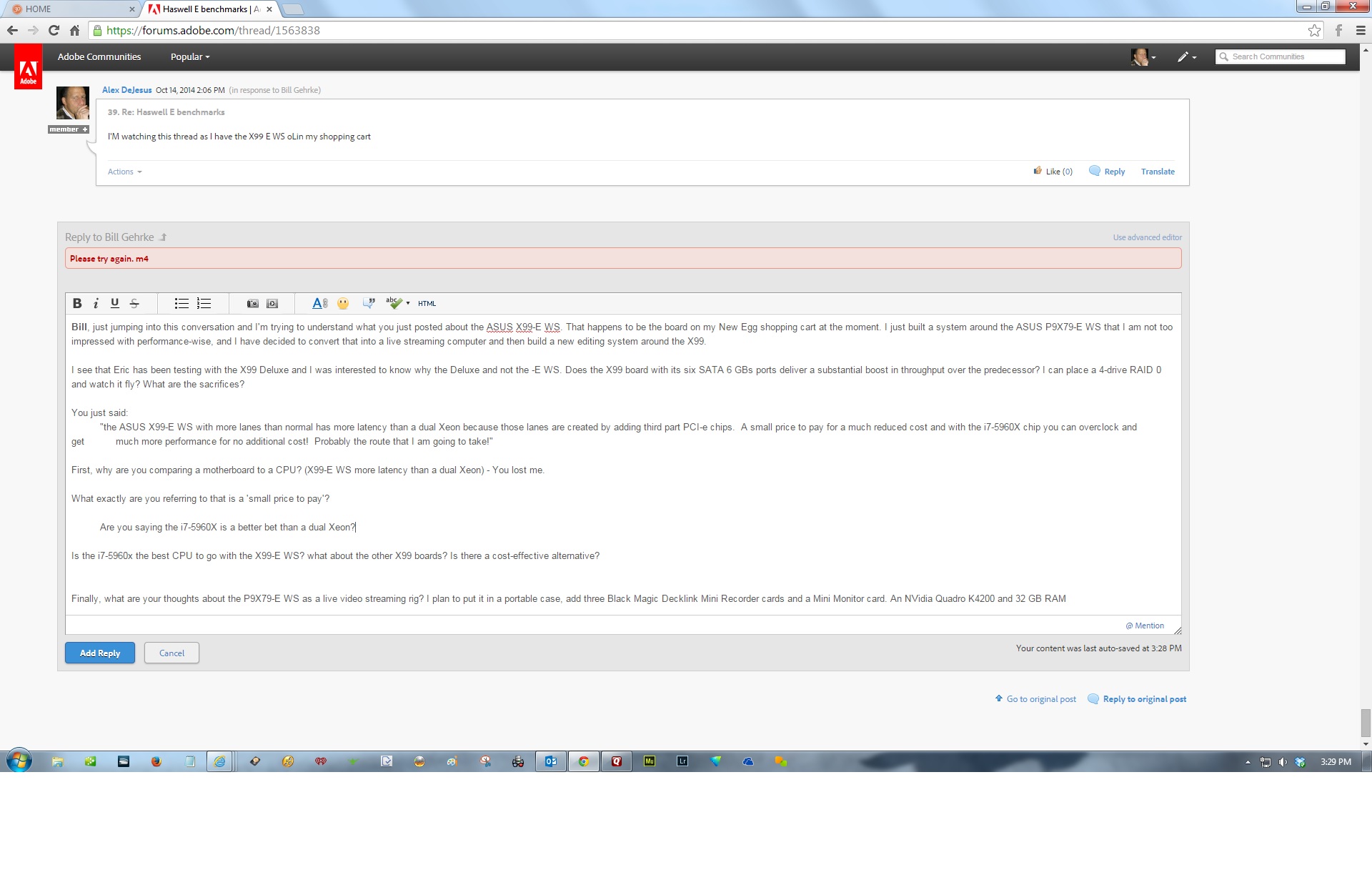

I had an error trying to post to this thread, so I made a picture of it. These questions are for Bill G.

Alex,

Since I cannot copy your series of questions, I hope to have some time this evening to work on a response.

BTW Bill, I submitted the results for the 5960X @ 4.5GHz with the GTX 980 and the Intel P3600 NVMe drive to the PPBM7 site.

Good morning, Eric,

From what I have been reading, Windows 8.1 seems to be the best operating system (OS) for NVMe SSDs, although Windows 7 drivers for NVMe SSDs have been emerging lately. For the Intel P3600 NVMe SSD whose PPBM7 results you reported, which OS did you use for your testing?

Thank you.

I used Win 8.1. All the benchmarks for the last year have been done with Win 8.1.

Eric

ADK

Thanks Bill, but I'm still unclear on how effectively a single processor vs. dual processors can drive GPUs. Eric?

Is the reason the dual Xeon overtakes the 5960X with 3+ GPUs that the 5960X only has 40 lanes to work with? Also, if so, why would you want 4 x16 slots like on the X99-E WS?

Thanks a lot.

Ben

It really comes down to what the CPUs can push. Right now, 2 GPUs are more than a single CPU can really drive. There is also the total throughput a system board can handle. The WS probably has more throughput than the other X99 boards, but still nowhere near the dual-Xeon Z10 board. Either way, if you run 3 GPUs with applications such as DaVinci, you want a dual Xeon, because you need both CPUs to really make use of 3 GPU-accelerated cards.

Eric

ADK

Great feedback, Eric, thank you. On the modeling side, if using Octane as the render engine with 3ds Max (or C4D) and a single CPU, is full GPU power capped in the same way? Or does Octane scale more linearly?

This is really great- thanks everyone.

Ben

As a general rule, yes, because of how GPU acceleration works with media content applications. It varies with each application's caching model and how much effects processing it does on the GPU. Applications such as Octane may have less data decoding coming from the CPU, which would let a single CPU put a greater load on the GPUs than in other GPU-accelerated applications. Octane users have been using more than 1 GPU for a couple of years now. However, there is still a limit to what 1 CPU can push in GPU-accelerated applications, since the processing starts there.

Eric

ADK

Thank you Eric!

Alex et al.,

I am speaking from my perspective. Without winning the lottery I cannot consider a dual Xeon system.

- Why the X99-E WS? Because of the 88 PCIe lanes without dual Xeons.

- Why the large number of PCIe lanes? It is the future of storage.

- Currently there are M.2 SSDs of up to 1 TB, and the best ones need 4 PCIe lanes to reach the maximum of 32 Gb/second. The X99-E WS has one port for this form of storage. Example: the Samsung XP941, which is 512 GB and has sequential read speeds of 1100 MB/sec. The next-generation Samsung M.2 drive is the SM951, with reported read speeds of about 1600 MB/second.

- Another new generation of storage will interface via SATA Express. There are 2.5-inch SATA Express SSDs announced by Intel, but they are currently only available through OEM channels. See connectors below. There will be two new connectors on SATA Express, which will deliver up to 1GB/s on PCIe 2.0 and up to 2GB/s over PCIe 3.0. On the left there's the eventual landing place: the SFF-8639 connector, good for PCIe x4 with speeds of up to 4GB/s (PCIe 3.0). The X99-E WS has two of these lower-speed ports. See also the Intel links in 3 below. Intel's drives come in two form factors: the standard 2.5-inch SSD unit above and what Intel calls the AIC (add-in card), described in 3 below.

- The third new storage technology has actually been around for quite a while: a PCIe board that plugs directly into a PCIe slot. It uses the new Non-Volatile Memory Host Controller Interface Specification (NVMe, which is already standard in Windows 8.1), designed for SSDs, versus the SATA interface, which was designed for rotating mechanical devices. Current specifications for NVMe SSD PCIe cards such as the Samsung SM1715 list read speeds of 3000 MB/second, and apparently they are now in OEM production in 1.6 TB and 3.2 TB units (probably at $2-3/GB). But this is the future; Intel also has NVMe drives in production, like their P3700 (2000 GB, $6,295, 2.8 GB/s) or P3600.
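The bandwidth figures in the list above follow from the PCIe signalling rate and line encoding: PCIe 2.0 runs 5 GT/s per lane with 8b/10b encoding, and PCIe 3.0 runs 8 GT/s per lane with 128b/130b encoding. The sketch below computes raw and usable one-direction bandwidth from those two facts; it ignores packet and protocol overhead, so real drives land below these ceilings.

```python
# Per-lane transfer rate (GT/s) and line-code efficiency by PCIe generation.
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),     # PCIe 2.0 uses 8b/10b encoding
    3: (8.0, 128 / 130),  # PCIe 3.0 uses 128b/130b encoding
}


def raw_gbits(gen: int, lanes: int) -> float:
    """Raw signalling rate in Gb/s across all lanes, one direction."""
    rate, _ = PCIE_GENERATIONS[gen]
    return rate * lanes


def usable_gbytes(gen: int, lanes: int) -> float:
    """Usable bandwidth in GB/s after line encoding, before protocol overhead."""
    rate, efficiency = PCIE_GENERATIONS[gen]
    return rate * efficiency * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen, lanes in [(2, 2), (3, 2), (2, 4), (3, 4)]:
        print(f"PCIe {gen}.0 x{lanes}: {raw_gbits(gen, lanes):4.0f} Gb/s raw, "
              f"{usable_gbytes(gen, lanes):.2f} GB/s usable")
```

Four PCIe 3.0 lanes give the 32 Gb/s raw rate quoted for M.2 (about 3.94 GB/s usable, the "up to 4GB/s" of SFF-8639), and two lanes give the 1 GB/s (PCIe 2.0) and roughly 2 GB/s (PCIe 3.0) quoted for SATA Express.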

Any questions about my desire for more PCIe lanes to be able to follow my quest for future expandability?

This is great information, Bill. I feel good that the X99-E WS was in my shopping cart. Now, is the i7-5960X the only CPU compatible with that motherboard spec-wise? How much am I sacrificing by going with an i7-5930X, 8 cores down to 6 cores?

I plan to use my Asus GeForce GTX 780 3GB DirectCU II video card (GTX780-DC2OC-3GD5); I see no point in two GPUs.

I'll need 64 GB of DDR4 RAM; not sure which kit yet.

I still have the 16 TB (4 x 4 TB WD Black) in RAID 0 to throw on the SATA III ports

Maybe use a 500 GB or 1 TB SSD as storage for just the current project I'm working on (Samsung XP941).

Very nice post Bill, people should take note.

So the biggest question now remains: new computer, or do I put that supercharger on my Mustang? Decisions, decisions.

Great info! But are you going to tell us which motherboard all this is sitting on?

Testing is now done on the Asus X99 Deluxe.

No, I still think the GTX 980 cards are the way to go, especially with their lower power and heat, plus HDMI 2.0. Keep in mind there will be a 900-series replacement for the Titan relatively soon, I am sure. That series is way too hot for Nvidia to sit on it for long.

Eric

ADK

I am currently making a purchase with Origin, where their single Titan Z offering is almost the same price as their SLI 980 combo. Regardless of price, wouldn't the additional CUDA core count and extra VRAM help?

Kris