Bitrate setting doesn't work correctly in either CBR or VBR mode on a 4K test

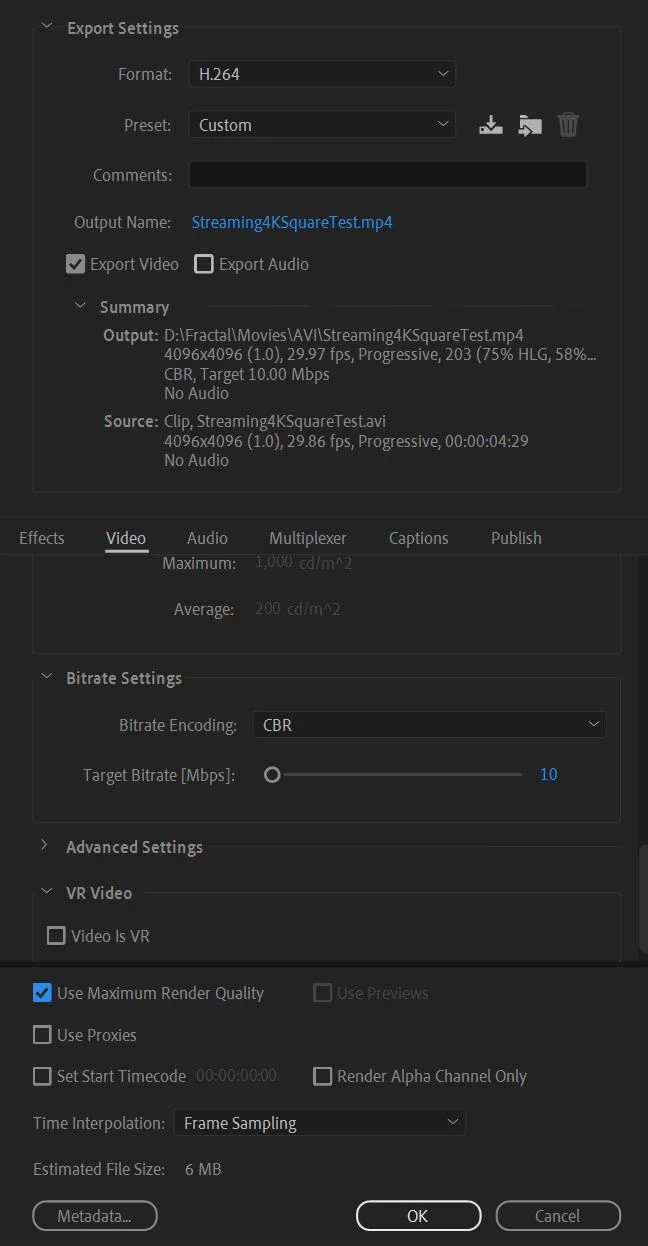

I'm converting a simple 4K x 4K raw AVI to MP4 at a 10 Mbps bitrate for a streaming test.

When I set CBR to 10 Mbps with the maximum render settings, I get 300 Kbps.

When I set VBR to 10 Mbps with the maximum render settings, I get 1-1.3 Mbps.

Maybe the encoder thinks that's enough, but the gradients don't look as good as they do in the original source.

Also, I need the output bitrate to match the configured value accurately for my test.
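For reference, here is a rough sketch of the arithmetic for checking an output file against a target bitrate: average bitrate is file size in bits divided by duration. The function names and the 60-second clip length are hypothetical, chosen only for illustration, and the result is an overall average that also includes any audio and container overhead.

```python
def average_bitrate_bps(size_bytes: int, duration_s: float) -> float:
    """Average bitrate in bits per second from file size and duration."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return size_bytes * 8 / duration_s


def expected_size_bytes(bitrate_bps: float, duration_s: float) -> float:
    """File size in bytes that a given average bitrate would produce."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return bitrate_bps * duration_s / 8


if __name__ == "__main__":
    target_bps = 10_000_000   # the 10 Mbps setting from this report
    duration_s = 60.0         # hypothetical clip length, not from the report

    # Size the file should have if the encoder held the target bitrate.
    print(expected_size_bytes(target_bps, duration_s))

    # Average bitrate of a hypothetical file of exactly that size.
    print(average_bitrate_bps(75_000_000, duration_s))
```

By this arithmetic, a 60-second clip that really held 10 Mbps would be about 75 MB, so a much smaller file is a quick sign that the setting was not applied.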

Note: When I switch to software encoding, it keeps the set bitrate, but the game engine can't read that file, so I need the output to be hardware encoded.