DISCUSS : AMD GPU decode for H264 & HEVC

With the latest Adobe Premiere Pro Beta build, we have enabled hardware-accelerated decoding on AMD GPU cards.

If you have an AMD GPU card in your Windows machine, you will be able to use this feature.

The feature is enabled by default. If it finds an AMD GPU card, it will start using it for decode.

The feature is enabled in Adobe Premiere Pro Beta, After Effects Beta, Adobe Media Encoder Beta and Adobe Premiere Rush.

We are looking for feedback on playback, seeking, scrubbing, reverse playback and export.

Please try out the feature and share your feedback.

Comment has been edited, I meant to post this one on the NVDEC thread

I'm running a laptop with both Intel integrated graphics and a WX 3200, and I'm not seeing any usage on the Radeon. I saw that Enable AMD Decode was disabled in the Debug Database. After enabling it and restarting the application, I'm unable to get any video, even from internally generated media.

Hi John, can you please confirm the build number under Help > About Premiere Pro?

Sorry for the delayed response, 14.5.0 Build 17[R]

I'll loop back once I try with the HP laptop forced to Discrete graphics tomorrow

Hi John,

We need the following information:

Is hardware accelerated encoding and decoding enabled? (The option is under Preferences > Media.)

Did you notice any usage on the Intel chip?

What are the driver versions of the AMD and Intel chips?

Are you working with H264 or HEVC media?

Regards

Abhishek

Hey Abhishek,

Yup, enabled.

3D usage, not video decode. CPU still seems to be doing it.

AMD 26.20.14003.3001

Intel 26.20.7324

H.264 DJI drone footage

It's a ZBook 15u G6 so I'll try forcing it to discrete graphics and see if that helps. It would be nice if auto mode worked but that is sometimes HP's fault.

There is no Discrete Graphics option on this model for some reason. Adobe may want to request testing hardware from HP. I can provide initial contact information.

Is there a way to turn it on or off to do benchmark testing?

I'm guessing one way is to run some tests in the current release version of Premiere and then run the same tests in the beta.

Yes, that's the correct way to do the benchmarking.

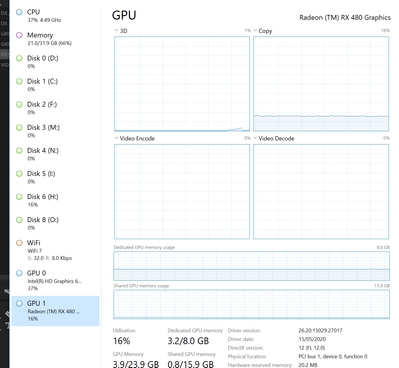

Doing some quick and dirty tests now.

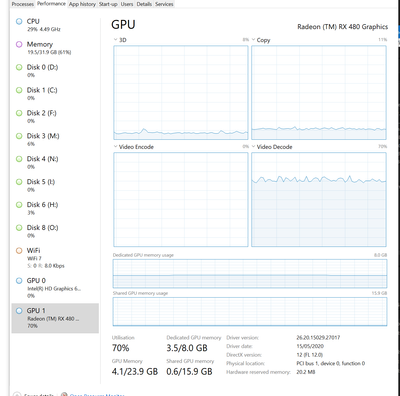

Initial Q - why isn't anything showing in the Video Encode section of my GPU?

Though actually, in the current version 14.3.2, nothing shows there during hardware encoding either. Software encoding uses 100% CPU, so hardware encoding must be using the GPU; it's just not showing in Task Manager for some reason.

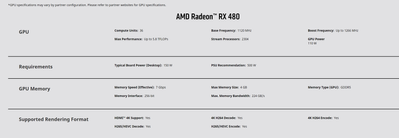

EDIT - It does seem to show GPU Video Encode during H.264 export, but not for H.265 export, even though the RX480 is listed as doing both. https://www.amd.com/en/products/graphics/radeon-rx-480#product-specs

My initial tests are not promising for rendering.

H.264 to H.264 hardware encoded is identical in time for me. I also tried turning QSV off by disabling the Intel GPU in device manager and the render times were the same. CPU vs QSV vs AMD GPU H.264 decode all gave the same times.

H.264 to Prores proxy is 8% slower in the beta.

H.265 to H.265 is 40% slower in the beta.

And Task Manager is suggesting that H.265 is not using the GPU to encode in either the beta or the main version. And that for H.264, GPU Video Decode is only using 25%, as opposed to 100% GPU Video Encode. For H.264 Video Decode shows at 80%.

I have the RX480 which is not a powerful GPU by today's standard & doesn't seem to beat the Intel QSV decoding, so I would like to see people test this with a better GPU.

But if this feature comes into the main version & can't be turned off, for me it would mean much longer render times for H.265, not that I use that much.

But the new feature added is not encode, it's decode. There is no switch for decode, like there is a hardware or software switch for encoding. Here is the difference between the two.

- Encode: Improve performance of video export for H.264 & HEVC/H.265.

- Decode: Improve timeline performance when working with H.264 & HEVC/H.265. This results in fewer dropped frames and smoother playback when timeline playback is at high quality (1:2 or 1:1).

I was seeing a 5x performance improvement during H.264 export when using an AMD Vega Frontier Edition GPU. When playing back 4K 10-bit 24p H.264 from my NAS over a 10 GB connection at 1:1 playback quality, I only dropped 68 frames out of 1847, and that is a significant improvement.
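To put the playback figure above in perspective, here is a minimal sketch that turns a dropped-frame count into a percentage. The function name `dropped_frame_rate` is made up for illustration; only the two frame counts come from the post.

```python
def dropped_frame_rate(dropped: int, total: int) -> float:
    """Return dropped frames as a percentage of all frames played."""
    if total <= 0:
        raise ValueError("total must be a positive frame count")
    return 100.0 * dropped / total


# Frame counts quoted in the post: 68 dropped out of 1847 played.
rate = dropped_frame_rate(68, 1847)
print(f"{rate:.2f}% of frames dropped")
```

With those counts the rate works out to roughly 3.7%.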

Thanks for your feedback.

The newly added feature is hardware decoding using AMD GPU cards, so the performance gain is expected during playback, scrubbing, seeking and reverse playback in the timeline with H264/HEVC files. With AMD GPU decoding, a higher number of streams should play in the timeline without frame drops. Playback performance with high-bitrate files and 10-bit files should improve as well.

Yes, sorry I should have been more clear.

As hardware encode was already present, in theory the addition of hardware decode should improve render times: to render from H.264 to H.264 or to Prores, the computer has to decode first and then encode, and Task Manager does show it doing so in the relevant tab. So I was testing the first part of that, pitting CPU DECODE vs QSV DECODE vs AMD DECODE, all feeding into the AMD ENCODE, which is there for all 3 tests. I saw no improvement with the RX480, but there should be one for more powerful GPUs.

To test this, just drop an H.264/AVC clip into the current Media Encoder and export it as H.264, then do the same in the beta AME, and check the log for the encode time in each.

I haven't had a chance to test timeline playback or scrubbing yet.
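The release-versus-beta comparison described above comes down to a percentage change between two encode times. A minimal sketch follows; the helper name `percent_change` and the two times are placeholders for illustration, not measurements from this thread.

```python
def percent_change(baseline_s: float, beta_s: float) -> float:
    """Percentage change of the beta encode time relative to the release build.

    Positive means the beta was slower, negative means it was faster.
    """
    if baseline_s <= 0:
        raise ValueError("baseline time must be positive")
    return 100.0 * (beta_s - baseline_s) / baseline_s


# Placeholder encode times in seconds (assumed values, read from each AME log).
release_time = 120.0
beta_time = 150.0
print(f"Beta vs release: {percent_change(release_time, beta_time):+.1f}%")
```

Running the same clip and preset through both builds several times and averaging the logged times would make the comparison less sensitive to background load.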

Thanks for your feedback.

Encode time depends on various factors, including the GPU card and the machine's CPU capabilities. For this new feature, we are focusing on improving the playback and timeline experience. Thanks a lot for your feedback regarding the degradation in export time. We will work on fixing it and make sure there is no impact on encode time.

Hi Umang

Is there any reason not to focus on both?

A large proportion of pro editors use a proxy workflow for H.264 clips. That means the only times they need to decode H.264 are a) when making proxies (decodes H.264 in order to encode to the proxy format) & b) when doing a final export from a timeline with H.264 clips (decodes H.264 in order to encode to the delivery format). If using the GPU to decode the H.264 (as well as encoding which was a very valuable addition, thank you) could speed these processes up, it would save a lot of time for a lot of people.

Or am I missing something?

You are missing something!

I repeat, decode does not increase or decrease final export times!!! Decode increases playback performance only!

Additionally, if decode is fast enough, there is no need to run a proxy workflow!

Can you explain why you think that, given what I have said above? Why would it not affect export times, given that the computer has to decode H.264 in order to export?

What is not apparent to us is that all imported video is converted into an intermediary format behind the scenes for timeline playback. This happens on the CPU (if GPU decode is not supported) or is assisted by the GPU (if GPU decode is supported). This is not necessary when exporting video into your final format. I am making an assumption here, as I put my engineering degree to work LOL.

Hardware decoding is the best thing that can happen, as it greatly improves the editing experience. The only reason people run proxies in the first place, is because editing is too painful without an extremely fast CPU. GPU Hardware decode fixes that without having to burn so much time creating proxies before you can go to work.

I don't think you're correct about that assumption, though I stand to be corrected by one of the Adobe guys. But if you think about it, for the final export, how could the computer skip the process of decoding? It's the only way it can read the data at all in order to encode it. Plus if you do an export and watch in Task Manager, in the Beta you see the Video Decode section of the GPU at work as well as the Video Encode section. And in the main current version you see the Video Decode part of the Intel GPU being used if you have it.

This is true for both timeline playback and export.

I can think of a couple of instances where decode is not needed, where frames or GOPs are just copied.

1) Smart Render with a supported codec like Prores

2) Some apps can copy H.264 directly like FFMPEG. But it wouldn't be practical for Premiere to do this and if it were done, the bitrate would be greyed out in the export dialog.
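As an illustration of case 2, FFmpeg's `-c copy` option copies the encoded streams into the output container without decoding or re-encoding them. The sketch below only builds the command line; the helper name `stream_copy_command` and the file names are assumptions for the example, and actually running the command requires FFmpeg to be installed.

```python
from typing import List


def stream_copy_command(src: str, dst: str) -> List[str]:
    """Build an ffmpeg command that remuxes src into dst without re-encoding.

    "-c copy" tells ffmpeg to copy every stream as-is, so no decode or encode
    step runs and bitrate settings do not apply.
    """
    return ["ffmpeg", "-i", src, "-c", "copy", dst]


# Hypothetical file names, for illustration only.
cmd = stream_copy_command("drone_clip.mp4", "drone_clip_copy.mp4")
print(" ".join(cmd))
# To execute it: import subprocess; subprocess.run(cmd, check=True)
```

Because the streams are copied untouched, there is no bitrate to choose, which matches the point above about the bitrate control being greyed out.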

Thanks for your feedback.

Encode and decode performance depends on the capabilities of the GPU card and the CPU. For older or less powerful cards, CPU performance could be better than GPU performance. For now, we are focused on improving playback performance by taking advantage of GPU decoding. This should improve the overall playback experience (reducing the need for proxy creation) on machines with supported GPU cards. This again depends on the hardware and software resources available. We have noted your feedback regarding encode time and will ensure that there is no degradation in encode time from the previous release. For upcoming releases, we will continue to focus on improving encode time as well.

2) Premiere already does this with MXF wrapped footage
