Why does the system use the graphics card when I export video, while Premiere Pro does not use it?

Hi All!

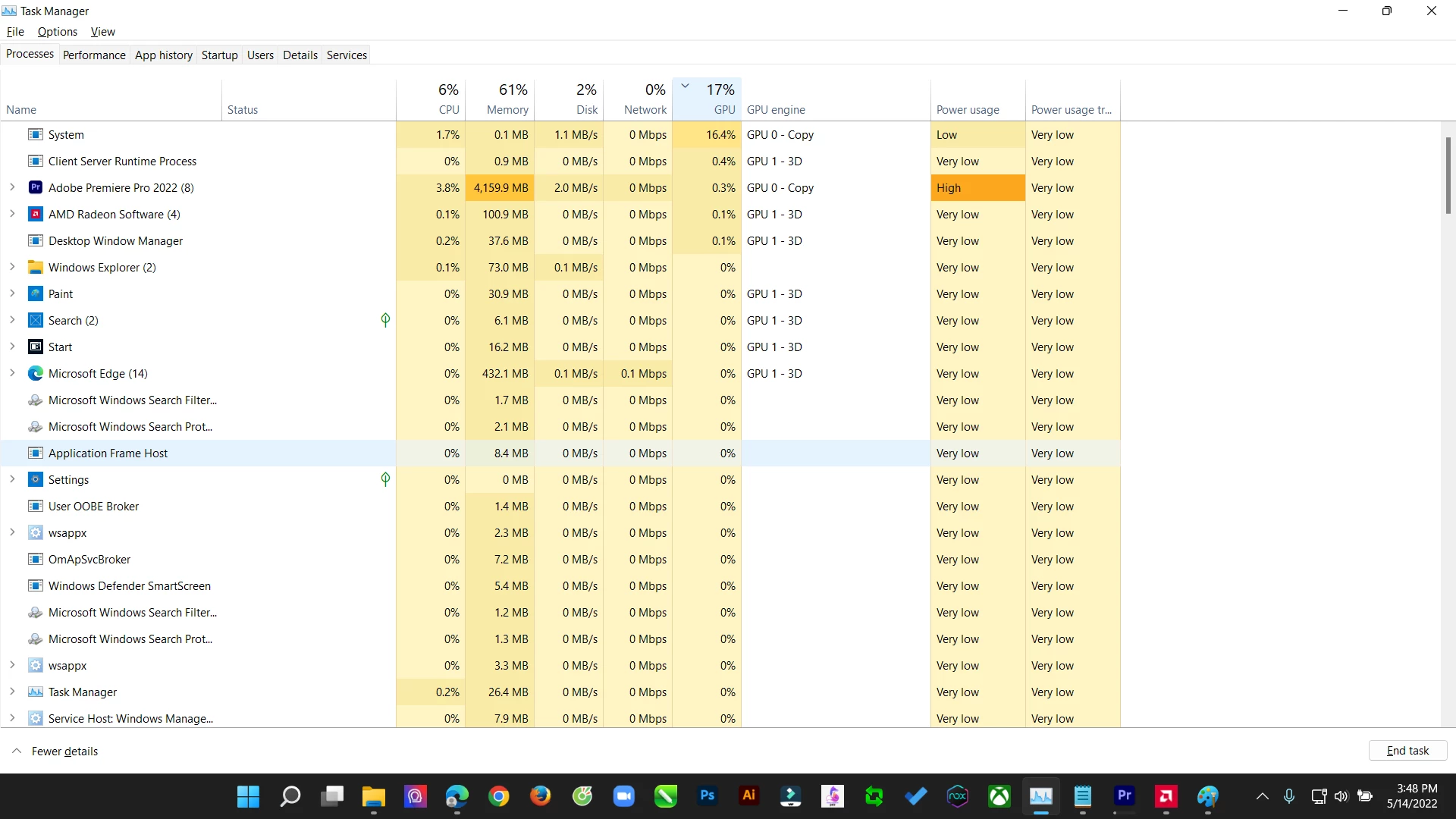

I have a problem. When I render or export a video, the system uses the graphics card, but Premiere Pro does not.

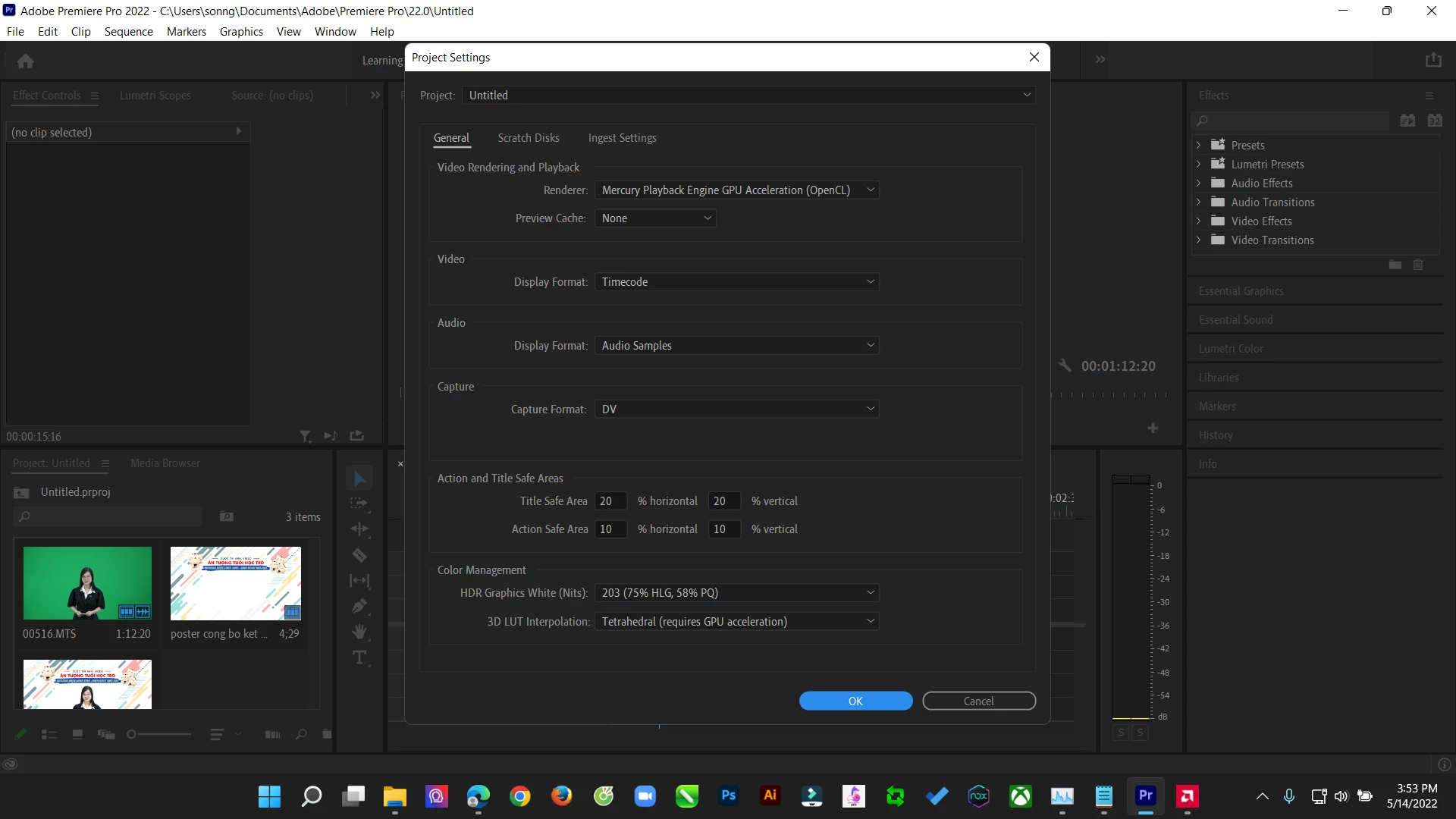

I enabled Mercury Playback Engine GPU (OpenGL).

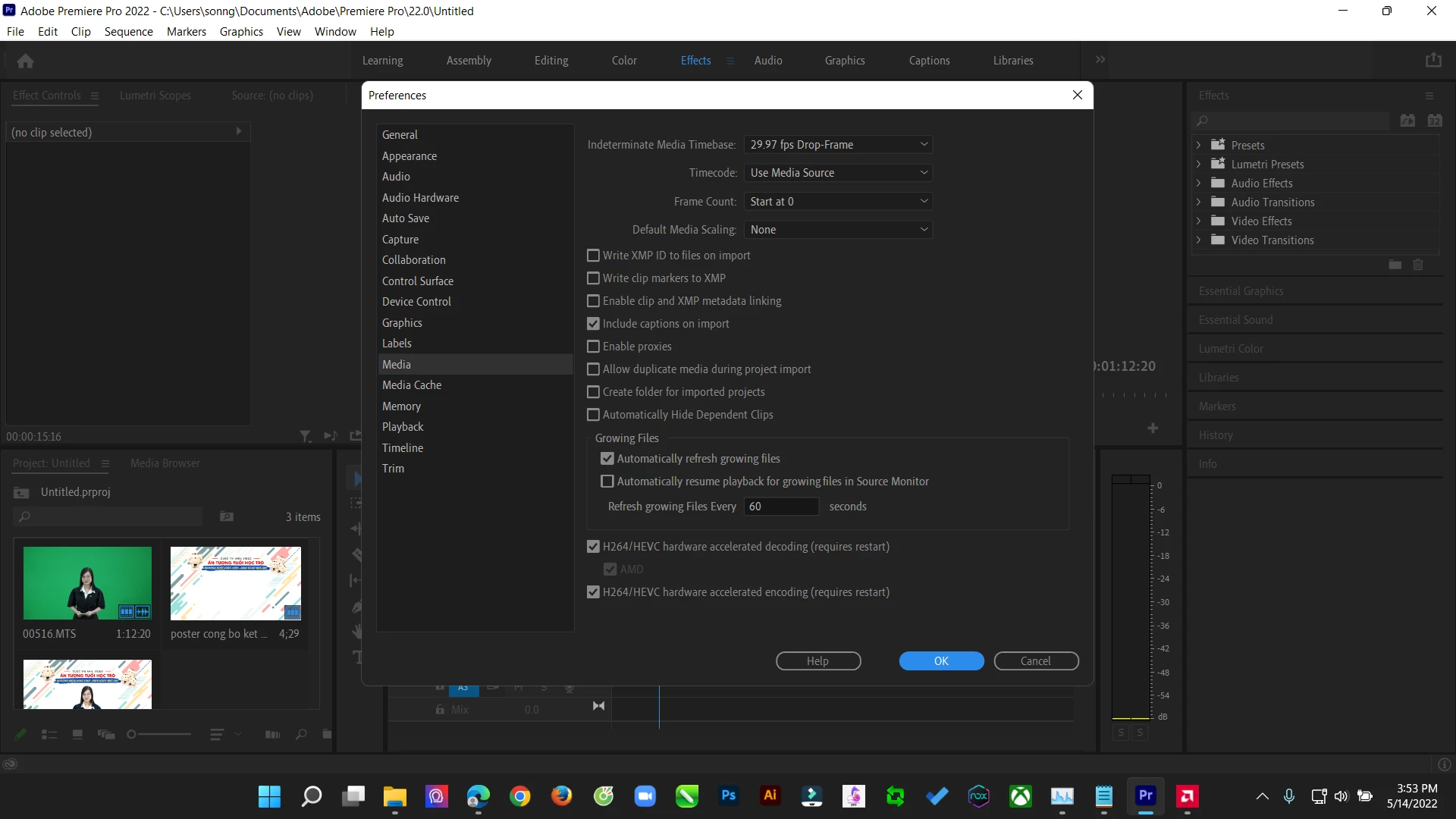

I also enabled hardware acceleration in Media.

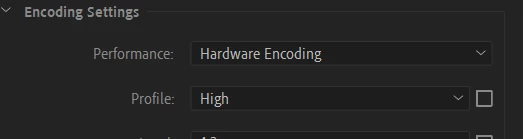

I also enabled hardware encoding when exporting the video.

But nothing changes! Please help me.

Also, the exported video shows an error at the 10th second.

My video: My Drive - Google Drive

Software: Premiere Pro

CPU: AMD Ryzen 7 5800H

GPU: AMD Radeon RX 5500M

RAM: 16 GB

Sorry, my English is very bad. My apologies to everyone!