

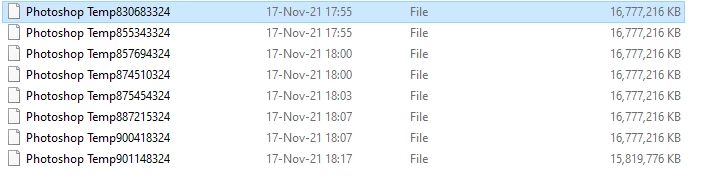

Yes, this is all perfectly normal and fully expected, and a good illustration of real-world disk space requirements.

This will only grow as you accumulate history states, each of which can potentially take up to the full uncompressed file size. If you have more than one file open, each one needs its own disk space, again depending on how many history states are active. On top of that, smart objects add a lot of overhead to the original file size.
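As a rough illustration of how these factors multiply, here is a minimal Python sketch. It assumes, as a worst case, that every history state costs the full uncompressed size of its document, and it models that size as width × height × channels × bytes per channel × layers. The `OpenDocument` class, `worst_case_scratch_bytes` function, and default values are hypothetical (not part of Photoshop), and the sketch ignores compression and smart object overhead, so real usage may differ.

```python
from dataclasses import dataclass


@dataclass
class OpenDocument:
    """Hypothetical description of one open document (not a Photoshop API)."""
    width_px: int
    height_px: int
    channels: int = 4           # assumed: RGB plus transparency
    bits_per_channel: int = 8   # 8, 16 or 32
    layers: int = 1
    history_states: int = 50    # assumed number of active history states

    def uncompressed_bytes(self) -> int:
        # Raw pixel data per layer: width x height x channels x bytes per channel.
        bytes_per_channel = self.bits_per_channel // 8
        per_layer = self.width_px * self.height_px * self.channels * bytes_per_channel
        return per_layer * self.layers


def worst_case_scratch_bytes(docs: list[OpenDocument]) -> int:
    """Rough upper bound: the current state of each open document plus every
    history state at full uncompressed size. Each document counts separately."""
    return sum(doc.uncompressed_bytes() * (1 + doc.history_states) for doc in docs)


if __name__ == "__main__":
    # Two hypothetical documents open at the same time.
    docs = [
        OpenDocument(width_px=10_000, height_px=8_000, bits_per_channel=16,
                     layers=10, history_states=20),
        OpenDocument(width_px=6_000, height_px=4_000, layers=5),
    ]
    total = worst_case_scratch_bytes(docs)
    print(f"Worst-case estimate: {total / 1024**3:.1f} GiB")
```

Even with these made-up but plausible numbers, the worst case lands well above 100 GiB, which is why large working files call for generous free space.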

If you're working with big files, you should consider 500 GB a realistic minimum. For good working headroom, plan on 1 TB or more.
