Enhance Details - Using Wrong GPU

On macOS, I just updated to Lightroom Classic CC 8.2.

In my Lightroom settings I have the "Use Graphics Processor" option set, and it is using Metal: Vega Frontier Edition. It is an external GPU connected to my Mac and pretty much every other piece of software is accelerated using it.

However, Lightroom does not seem to be using the right GPU for the Enhance Details function. Instead, it uses my internal graphics card, which is much weaker and is not the one it says it is using in Preferences.

This seems like a bug.

---

What macOS version?

---

Also, in Preferences > Performance, if you turn on Use Graphics Processor, does LR report the correct GPU?

---

As I mentioned, I have the "Use Graphics Processor" option set and it is reporting the Vega Frontier Edition. However, the graphics card used by the Enhance Details tool is my Intel UHD Graphics 630.

I am on MacOS 10.14.3 (the latest at the time).

---

Interesting; this got me off my rear to turn on my MacBook. Mind you, it has no external GPU.

I probably should have asked what shows up in System Information (in LR): the internal or the external GPU?

Because now I wonder what tells you that Enhance Details is using the internal GPU.

I'm also curious what LR reports with an external GPU, and how it knows to use the more powerful external one.

The link I provided does state that LR should be able to use an external GPU. Not greatly helpful, however.

Ah, here is Apple's article on how to set up an eGPU on a Mac so that programs see it as the primary GPU: Use an external graphics processor with your Mac - Apple Support

---

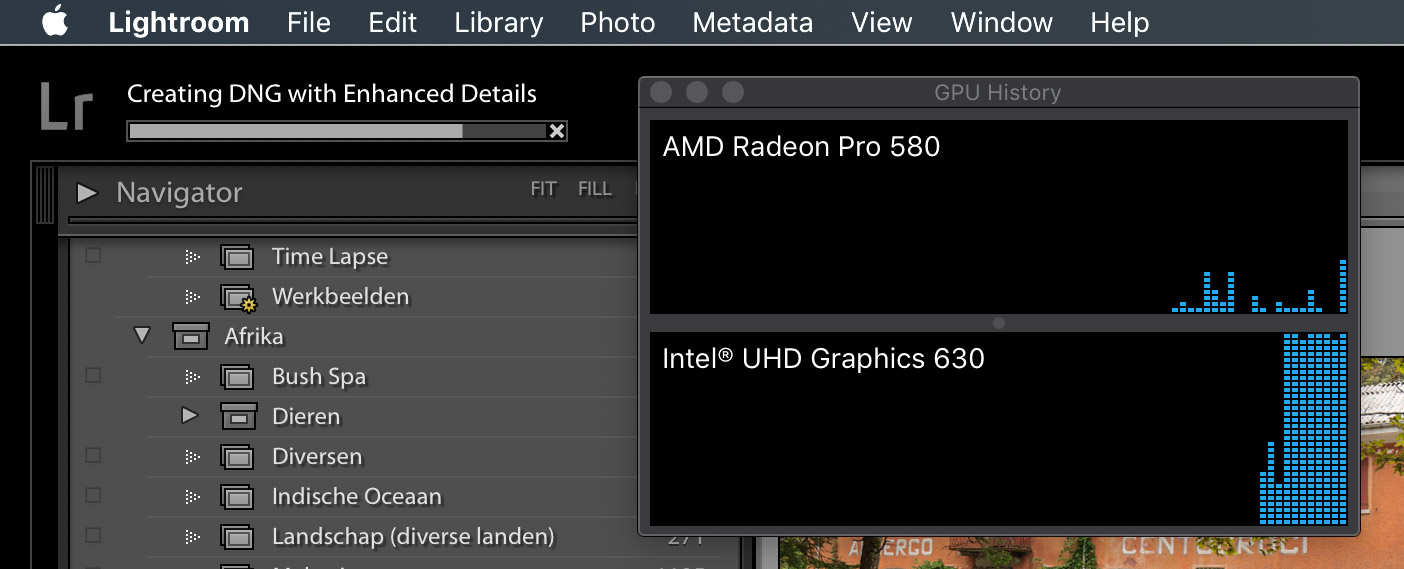

I just tested it and I can confirm that this is indeed what happens. The AMD Radeon Pro 580 is my Blackmagic eGPU; the Intel UHD Graphics 630 is the built-in GPU of the Mac mini.

---

Could you post your system information from Lightroom?

In Lightroom, choose Help > System Information, click Copy, then paste it into a reply.

---

davidg36166309 wrote:

> Could you post your system information from Lightroom?
>
> In Lightroom, choose Help > System Information, click Copy, then paste it into a reply.

No, I have already filed a bug report and prefer to let Adobe technical staff look at this. If they want further information, I'm happy to give it to them. This is not the place for bug reports, so I'll leave it at that. BTW, it seems that Camera Raw has the same bug.

---

Hi,

I'm facing the exact same issue! I have a 2018 15" MacBook Pro connected to an AMD Vega 64 eGPU. Lightroom has "Use Graphics Processor" turned on and recognizes the Vega 64, but when performing the Enhance Details task it uses the Intel graphics GPU (not even the MacBook's own AMD Radeon) instead of the eGPU!

---

mrdoodlebugg wrote:

> On macOS, I just updated to Lightroom Classic CC 8.2.
>
> In my Lightroom settings I have the "Use Graphics Processor" option set, and it is using Metal: Vega Frontier Edition. It is an external GPU connected to my Mac and pretty much every other piece of software is accelerated using it.
>
> However, Lightroom does not seem to be using the right GPU for the Enhance Details function. Instead, it uses my internal graphics card, which is much weaker and is not the one it says it is using in Preferences.
>
> This seems like a bug.

Please post your own discussion; that will work better.

---

davidg36166309 wrote:

> Please post your own discussion; that will work better.

Huh? This is indeed exactly the same bug, so there is absolutely no need to post yet another thread about this bug. In fact, because this is a user-to-user forum, there is little need to continue this discussion at all. The bug report is filed, so it's up to Adobe to do something about it. Nothing more we can do here.

---

Well, I clearly fouled up my last comment; I replied to the wrong member.

---

I have the exact same issue: a 2018 Mac mini with a Vega 64 eGPU. Lightroom recognizes and uses the Vega eGPU for the Develop module, but for the Enhance Details task it ignores the eGPU and uses the onboard Intel GPU. Such a disappointment, as this is the first and only task where Lr is actually putting GPUs to good use.

---

As mentioned above, this is a known bug and will be fixed in a future release.

---

Um... where above does it say that it's a known bug and will be fixed? Either I'm blind (entirely probable!) or nowhere here does it say that.

---

Refer to post #7

---

Post 7 does not have any information stating that this bug will be fixed in a future update.

---

It’s a bug and it has been reported to Adobe. Adobe will never tell you if or when it will be fixed, until it is fixed.

---

FYI, new versions of Lightroom CC and Lightroom Classic CC just came out today. The bug is NOT fixed.

I have a Radeon RX580 in an eGPU enclosure and "enhance details" only uses my integrated Intel GPU. I guess we'll find out in another two months when the next release comes out.

---

This could be a bug in Mac OS. Enhance Details uses the Core ML API of Mac OS, and there have been reports since last July that Core ML doesn't use an AMD Radeon eGPU: Can't use eGPU in Image Classification · Issue #883 · apple/turicreate · GitHub. The Apple developer in that thread said he would update it when the problem was fixed, and the thread hasn't been updated (which doesn't mean a lot either way).

If indeed it's a Mac OS bug, then LR is waiting on Apple.

You might file a bug report in the official Adobe feedback forum, where Adobe wants all product feedback: Lightroom Classic | Photoshop Family Customer Community . Even though it's been reported that there is an internal bug report filed on this, posting a bug report in the feedback forum has some benefits: Adobe product developers read everything posted there and sometimes do reply, giving more information on the status of a bug (developers rarely participate here). Also, other users can click Me Too on the bug report and provide additional information, giving Adobe a better sense of how users are being affected. And it provides a central place for users to share information.
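For anyone who wants to see what macOS itself reports, here is a minimal Swift sketch. It is an illustration only, not Adobe's code, and it assumes macOS 10.14 or later with the Xcode command-line tools installed. It lists the Metal GPUs that apps can see, flagging removable (external) ones, and shows how an app *could* ask Core ML to prefer an eGPU through `MLModelConfiguration.preferredMetalDevice`, which Apple added in macOS 10.15. How Lightroom actually configures Core ML is not known here, and `gpuKind` is a hypothetical helper.

```swift
import Metal
import CoreML

// Hypothetical helper: a rough label for a GPU.
// Removable devices sit in an external enclosure; low-power devices are usually integrated (e.g. Intel UHD 630).
func gpuKind(isRemovable: Bool, isLowPower: Bool) -> String {
    if isRemovable { return "external (eGPU)" }
    return isLowPower ? "integrated" : "discrete"
}

// Print every Metal device that macOS exposes to applications.
for device in MTLCopyAllDevices() {
    let kind = gpuKind(isRemovable: device.isRemovable, isLowPower: device.isLowPower)
    print("\(device.name): \(kind)")
}

// Illustration of an app-side request: ask Core ML to prefer the first external GPU.
// preferredMetalDevice only exists on macOS 10.15 or later, hence the availability check.
let config = MLModelConfiguration()
config.computeUnits = .all
if #available(macOS 10.15, *) {
    config.preferredMetalDevice = MTLCopyAllDevices().first { $0.isRemovable }
    print("Core ML preferred device: \(config.preferredMetalDevice?.name ?? "none (system default)")")
}
```

Save it as `gpus.swift` and run `swift gpus.swift` in Terminal. If the eGPU is attached and recognized, it should appear in the list marked as external; whether Core ML then honors the preference is exactly what the GitHub issue is about.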

---

Good point. I didn't realize LR used Core ML for this.

---

From the June 21 comment on the GitHub issue (Can't use eGPU in Image Classification · Issue #883 · apple/turicreate · GitHub), it seems that the latest version of macOS may have fixed this. So perhaps we can wait for Catalina, or could any beta testers try it?

---

This still has not been fixed on Catalina 10.15.1 with the latest Lightroom Classic CC (9.0).

Any updates from Adobe support team on this?
