Hello everyone,

I have a question. When I use this script to change the target folder of links, the entire script takes a long time to complete if there are many linked files. However, if I rename the source directory first and then use InDesign's Links panel to point the missing links at the new folder, it is much faster and takes only a fraction of the time the script needs.

What could be the reason for this significant difference in speed?

Is there any way to optimize this script?

Thank you all.

var originalFolderPath = "F:/source";

var targetFolderPath = "e:/target";

var doc = app.activeDocument;

var links = doc.links;

var updatedLinksCount = 0;

for (var i = 0; i < links.length; i++) {

var link = links[i];

var originalLinkPath = link.filePath;

if (originalLinkPath.indexOf(originalFolderPath) === 0 || originalLinkPath.indexOf(originalFolderPath.replace(/\//g, "\\")) === 0) {

var originalFileName = originalLinkPath.substring(originalLinkPath.lastIndexOf('/') + 1);

if (originalFileName == originalLinkPath) {

originalFileName = originalLinkPath.substring(originalLinkPath.lastIndexOf('\\') + 1);

}

var targetFilePath = targetFolderPath + "/" + originalFileName;

//$.writeln("Target File Path: " + targetFilePath);

var targetFile = new File(targetFilePath);

if (targetFile.exists) {

link.relink(targetFile);

updatedLinksCount++;

}

}

}

if (updatedLinksCount > 0) {

alert("Changed: " + updatedLinksCount + " links to files with the same names in the target folder!");

} else {

alert("No links to update found!");

}

1 Correct answer

Maybe try updating the links by page so you avoid such large arrays. This was 6x faster for me on a doc with 40 links per page:

vs. all doc links

var st = new Date().getTime ()

var targetFolderPath = "~/Desktop/target/"

var p = app.activeDocument.pages.everyItem().getElements()

var updatedLinksCount = 0;

var pg, targetFile;

for (var i = 0; i < p.length; i++){

pg = p[i].allGraphics

for (var j = 0; j < pg.length; j++){

if (pg[j].itemLink != null) {

targetFile =

Hyperlink panel was closed. I don't remember every detail, this was about 10 years ago, but I always have all panels closed anyway, so that one I'm sure of.

Yes @Peter Kahrel I can see you understand much more about this than I do, in fact I don't know how this works at all! I've just noticed that if I run my first script—that will happily (and SLOWLY) process 15000 Links (71 unique files)—but force it to bail out after the first 71 (in other words just process the unique linked files) it has the exact same result on the document after 3 seconds as waiting for 350-600 seconds for the full 15000 links. They are all completely updated. This may be due to using Link.reinitLink(). Anyway, I have a lot to learn here. Getting the links via, say, Page.allGraphics is better because the script for some reason won't be fooled into processing every link—it just does the unique links and stops. But again, because I don't understand this I might be talking nonsense.

And this raises the question of what if you want to reinit all the links on page 1, but not page 2, even though page 2 was a duplicate of page 1? This may be where Link.relink() is mandatory. I'll have to test when I get time.

- Mark
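Mark's observation above (71 unique files behind 15000 links) suggests grouping links by file path before doing any work. The following is only a sketch of that grouping step in plain JavaScript: `groupIndexesByPath` is a hypothetical helper, and the `paths` array stands in for values read from `link.filePath`. Whether processing one link per group is enough depends on the relink method used, which this thread has not settled.

```javascript
// Group link indexes by file path so each unique file can be handled once.
// `paths` stands in for the values of link.filePath (an assumption for this sketch).
function groupIndexesByPath(paths) {
    var groups = {};   // path -> array of indexes that share that path
    var order = [];    // unique paths in first-seen order
    for (var i = 0; i < paths.length; i++) {
        var key = paths[i];
        if (!Object.prototype.hasOwnProperty.call(groups, key)) {
            groups[key] = [];
            order.push(key);
        }
        groups[key].push(i);
    }
    return { order: order, groups: groups };
}

// Example data (made up for illustration): two links point at the same file.
var sample = ["F:/source/a.tif", "F:/source/b.tif", "F:/source/a.tif"];
var result = groupIndexesByPath(sample);
// result.order holds the 2 unique paths; result.groups["F:/source/a.tif"] is [0, 2]
```

With the groups in hand, a script could try relinking only the first index of each group and then check whether the other links in the group followed.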

@rob day @Robert at ID-Tasker @leo.r @m1b @Peter Kahrel This topic may be the most lively in the InDesign community recently. Thanks to all the teachers for their participation. I will test various solutions promptly and provide detailed test data.

Thanks @Aprking, I'm interested in hearing your results!

rob day Robert at ID-Tasker leo.r m1b Peter Kahrel This topic may be the most lively in the InDesign community recently. Thanks to all the teachers for their participation. I will test various solutions promptly and provide detailed test data.

By Aprking

You are welcome.

Any chance you could share your file - whole package - on priv? So the tests would be more comparable.

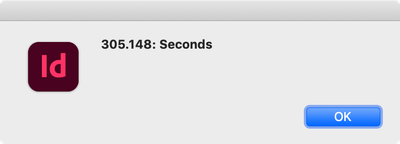

Final statistical report: Document size 752,016KB, 365 pages, with 40 links per page, totaling 14,595 links. As per guidance from @Peter Kahrel , among these, 729 are actual links, while the remaining 13,866 are pointers.

=======================================================================

Using @m1b method, due to the ineffectiveness of directly using link.update() in the code, the reinitLink method was first employed to change the links, taking 26.133s. Then, updating the links using link.update() in a subsequent loop took 295.888s, totaling 322.021s, occupying 1GB of disk space. Since direct use of link.update() in the code wasn't possible, the method had to be abandoned after two script executions. Although this method was fast, I couldn't fully grasp the technical details, rendering it impractical.

=======================================================================

I employed a method of splitting links.length:

var links = doc.links.everyItem().getElements();
var batchSize = 100;
var totalLinks = links.length;
for (var i = 0; i < totalLinks; i += batchSize) {
    var batchLinks = [];
    var endIndex = Math.min(i + batchSize, totalLinks);
    for (var j = i; j < endIndex; j++) {
        batchLinks.push(links[j]);
    }
    for (var k = 0; k < batchLinks.length; k++) {
        var link = batchLinks[k];
        // [...]

This method took 275.817s and occupied approximately 1GB of disk space.
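The splitting snippet above is cut off in the post. As a rough sketch of the same idea, the batching can be isolated in a small helper; `chunk` is a hypothetical name, and the sample array stands in for the array returned by `doc.links.everyItem().getElements()`. This only restructures the loop; whether it changes the timing in InDesign is exactly what the measurements here are about.

```javascript
// Split an array into consecutive batches of at most `size` items.
function chunk(items, size) {
    if (size < 1) {
        throw new Error("size must be at least 1");
    }
    var batches = [];
    for (var i = 0; i < items.length; i += size) {
        // slice() stops at the end of the array, so the last batch may be shorter
        batches.push(items.slice(i, i + size));
    }
    return batches;
}

// Stand-in data: seven items in batches of three.
var batches = chunk([1, 2, 3, 4, 5, 6, 7], 3);
// batches is [[1, 2, 3], [4, 5, 6], [7]]
```

Changing `batchSize` then becomes a single argument, which matches the flexibility described for this approach.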

=======================================================================

Using @rob day code:

var p = app.activeDocument.pages.everyItem().getElements()

This method successfully reduced the processing time from over an hour to 280.275s and occupied approximately 1.1GB of disk space.

=======================================================================

@rob day solution had the shortest processing time and minimal disk space usage, making it the champion and the most technically superior solution.

=======================================================================

My solution is experimental and requires extensive testing to ensure there are no bugs. However, its advantage lies in its flexibility in splitting links.length and adjusting batchSize according to different document requirements.

=======================================================================

In conclusion, the three solutions differ mainly in specific details, but their techniques are similar, all involving dividing the entire document into smaller chunks for processing. The observed differences in processing time are not stable and should be taken as reference only.

Once again, I extend my gratitude to @rob day @m1b @Robert at ID-Tasker @leo.r and @Peter Kahrel for their guidance and participation.

Thank you.

Here's another thing that might speed up things: A script can open a document without showing it. This makes a difference because InDesign's screen writes are disabled (among other things). Use this code:

// Close all documents, then:

var doc = app.open (myIndd, false);

// Now work on doc

I have already attempted this method in another script called "InDesign Batch Export PDF". It noticeably improves speed but also has limitations, such as the inability to use Preflight Check.

Then you should disable preflight check: it too slows things down. You can enable preflight check after running the script. Should make a difference.

Batch exporting PDFs cannot disable preflight checks, otherwise quality accidents may occur.😅

Fair enough. But for the linker you can disable it, if only to check whether it makes a difference.

Batch exporting PDFs cannot disable preflight checks, otherwise quality accidents may occur.😅

By Aprking

If you batch export PDFs - INDD files should already be checked & "accepted" ??

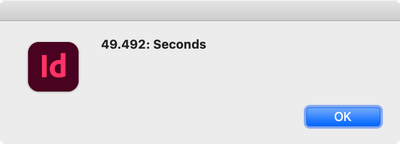

I’m not sure about this but it seems like a bottleneck is the size of the array, so instead of using var links = doc.links.everyItem().getElements(), which might create an initial 12,000+ item array of links, look at doc.links.itemByRange(n,n). This was almost 2x faster than my by page script:

var st = new Date().getTime ()

var r = 50; //batch array length

var si = 0; //start index

var li = r-1 //end index

var ln = app.activeDocument.links.lastItem().index + 1; //total number of links

var bn = Math.floor(ln/r); //number of batches

var lo = ln%r //left over number of links

var links, targetFile;

var targetFolderPath = "~/Desktop/target/";

//relink by batch

while (bn--) {

links = app.activeDocument.links.itemByRange(si,li).getElements()

for (var i = 0; i < links.length; i++){

targetFile = File (targetFolderPath + links[i].name);

links[i].relink(targetFile);

};

li = li + r

si = si + r

}

//relink the left over links

if (lo > 0) {

li = si + lo - 1;

links = app.activeDocument.links.itemByRange(si,li).getElements()

for (var i = 0; i < links.length; i++){

targetFile = File (targetFolderPath + links[i].name);

links[i].relink(targetFile);

};

}

var et = new Date().getTime ()

alert((et-st)/1000 + ": Seconds")
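The script above handles the full batches and the leftover links in two separate loops. One possible simplification, sketched here in plain JavaScript, is to compute all the inclusive index pairs up front; `batchRanges` is a hypothetical helper, not part of the InDesign API, and the numbers are made up.

```javascript
// Return inclusive [start, end] index pairs covering 0 .. total - 1
// in batches of at most `size` items; the last pair may be shorter.
function batchRanges(total, size) {
    var ranges = [];
    for (var start = 0; start < total; start += size) {
        var end = Math.min(start + size, total) - 1;
        ranges.push([start, end]);
    }
    return ranges;
}

// Example: 120 links in batches of 50.
var ranges = batchRanges(120, 50);
// ranges is [[0, 49], [50, 99], [100, 119]]
```

Inside InDesign, each pair could be passed to `app.activeDocument.links.itemByRange(start, end).getElements()` as in the script above, so the leftover batch needs no special case.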

That's a good one. I remember that years ago some people noticed that large arrays processed slowly and that chopping them up improved things greatly.

@rob day always has updated and better desserts (methods) in his pocket, thank you for your reply. But why don’t you just take them all out at once? Now I have to stay up late testing again… 🤣

Hi @rob day, that is terrific! That was the missing piece for me—I had never thought of using .itemByRange for this purpose (although it's obvious now that you've done it!).

It works, but I don't understand why. I mean, the slow part is the actual call to Link.relink or Link.reinitLink: if you comment out that part, it speeds through checking the 15000 links' paths (in the single massive Array) in about 1 second. So accessing the Array, and even accessing some properties of the DOM object (e.g. the path), is not what is slowing things down. So it's hard to imagine how using smaller Arrays speeds things up. As Robert mentioned, it might be something to do with the destroying of the old link and creating a new one. Maybe this destroy/create causes a change to every existing link reference, and thereafter accessing any existing reference must travel through a journey behind the scenes to get to the "new" object. The fact that the slow-down is non-linear (maybe even exponential) suggests that these hypothetical extra steps are cumulative for each existing reference, so it gets worse every time any link is relinked. Maybe I can test this possibility.

- Mark

It works, but I don't understand why.

I can only guess that it takes some time to traverse the array—it takes longer to go get item 11,123 of a 12,000 item array than item 35 of a 50 item array?

I suspect it’s related to undo. If you try Link.reinitLink first and then manually update all the links at once, you’ll find that disk usage gradually increases to astonishing levels, eventually causing a freeze. Segmentation helps not because of array size but because of memory usage during relinking: working in batches avoids letting memory usage grow too high and then falling back on slow disk space as a cache. That’s why I kept testing frantically and came to the conclusion that the array needs to be segmented for processing. I can analyze the reasons, but I don’t have @rob day's knowledge base, so I can only suggest ideas and wait for @rob day to pull the desserts out of his pocket time and time again…

As for @m1b's speculation that traversing the array takes some time: although each access is very quick, it adds up when there are over ten thousand links and has a noticeable impact, but the effect is still not as significant as that of not segmenting the array.

Yes, the UNDO history is like an Ouroboros, ultimately leading to system crashes. Even when using InDesign’s built-in Links panel to update a large number of links, it freezes. I think the discussion of our topic should serve as a reference for Adobe developers.

Yes, the UNDO history [...]

By Aprking

Maybe this will help - description of how UndoModes.FAST_ENTIRE_SCRIPT works:

This should eliminate any slowdowns caused by building up UNDO history...

https://www.indesignjs.de/extendscriptAPI/indesign-latest/#UndoModes.html

Speaking of UNDO... you might speed things up a tiny bit more by using

app.doScript(main, ScriptLanguage.JAVASCRIPT, undefined, UndoModes.FAST_ENTIRE_SCRIPT, "Update Links");

In my quick test it reduced total time by 5-10% compared to using UndoModes.ENTIRE_SCRIPT. I don't remember what the ramifications of using that mode are; it relates to undo, so in this case it might not be an issue.

- Mark
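To repeat that comparison on another document, the timing pattern used earlier in this thread (`new Date().getTime()` before and after) can be wrapped in a helper. This is a minimal sketch: `timeSeconds` is a hypothetical name, and `main` here is an empty placeholder for whatever relink routine is being measured. The InDesign branch only runs when `app` and `UndoModes` exist, so the snippet also runs outside InDesign.

```javascript
// Run fn once and return the elapsed wall-clock time in seconds.
function timeSeconds(fn) {
    var start = new Date().getTime();
    fn();
    return (new Date().getTime() - start) / 1000;
}

// Placeholder for the relink routine; a real script would loop over the links here.
function main() {}

var elapsed;
if (typeof app !== "undefined" && typeof UndoModes !== "undefined") {
    // Inside InDesign: run main as a single fast undo step, as in the call quoted above.
    elapsed = timeSeconds(function () {
        app.doScript(main, ScriptLanguage.JAVASCRIPT, undefined,
            UndoModes.FAST_ENTIRE_SCRIPT, "Update Links");
    });
} else {
    // Outside InDesign: time the placeholder directly.
    elapsed = timeSeconds(main);
}
```

Swapping `UndoModes.FAST_ENTIRE_SCRIPT` for `UndoModes.ENTIRE_SCRIPT` and comparing the two `elapsed` values would reproduce the kind of test described above. As noted elsewhere in this thread, the timings are not stable, so several runs are advisable.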

@Aprking that's very plausible. An example that has nothing to do with scripting: I can delete 20 pages of my 30 page test document (500 links per page) faster by deleting the last page again and again (clicking the delete button in the Pages panel) than by selecting all 20 pages and clicking the delete button once. And the time difference between those two approaches seems to roughly match the non-linear function we noticed with the scripts.

[...] As Robert mentioned, it might be something to do with the destroying of the old link and creating a new one[...]

By m1b

InDesign is probably rebuilding index of all the links - and maybe doing something else, like checking all the "effects" - transparencies, shadows, overlaps, etc. - that's why Links collection should be processed backwards.

I’m not sure about this but it seems like a bottleneck is the size of the array, so instead of using var links = doc.links.everyItem().getElements(), which might create an initial 12,000+ item array of links, look at doc.links.itemByRange(n,n). This was almost 2x faster than my by page script:

[...]

By rob day

Most likely because when you were going through pages - InDesign had to extract links that are on specific pages - extract them by accessing by-page-index - AND when you go through links from the beginning - Links collection has to be constantly updated AND by-page-index as well.

When you are going through whole list of links - and just request (N,M) elements from this list / collection - InDesign doesn't have to index it - just returns part of the list - indexed by their IDs...

On the other hand - you are still going First to Last - so it should have negative effect anyway - skipping some links... Unless something has changed recently...
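The worry about going first to last can be illustrated with a plain JavaScript array. This is a generic sketch, not a claim about how InDesign's Links collection behaves: it assumes that handling an item removes it from the list, which is Robert's hypothesis for relinked links and is not verified here. Both function names are hypothetical.

```javascript
// Visit items front to back, removing each one after visiting it.
function removeForward(items) {
    var list = items.slice(0);   // work on a copy
    var visited = [];
    for (var i = 0; i < list.length; i++) {
        visited.push(list[i]);
        list.splice(i, 1);       // later items shift down by one, so the next one is skipped
    }
    return visited;
}

// Visit items back to front, removing each one after visiting it.
function removeBackward(items) {
    var list = items.slice(0);
    var visited = [];
    for (var i = list.length - 1; i >= 0; i--) {
        visited.push(list[i]);
        list.splice(i, 1);       // only already-visited positions are affected
    }
    return visited;
}

var forward = removeForward(["a", "b", "c", "d"]);    // visits a and c only
var backward = removeBackward(["a", "b", "c", "d"]);  // visits d, c, b, a
```

If a live collection does shrink or reorder during processing, iterating from the last index down avoids the skipped items seen in the forward version.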