Lightroom Classic not using GPU for processing and also not saturating CPU

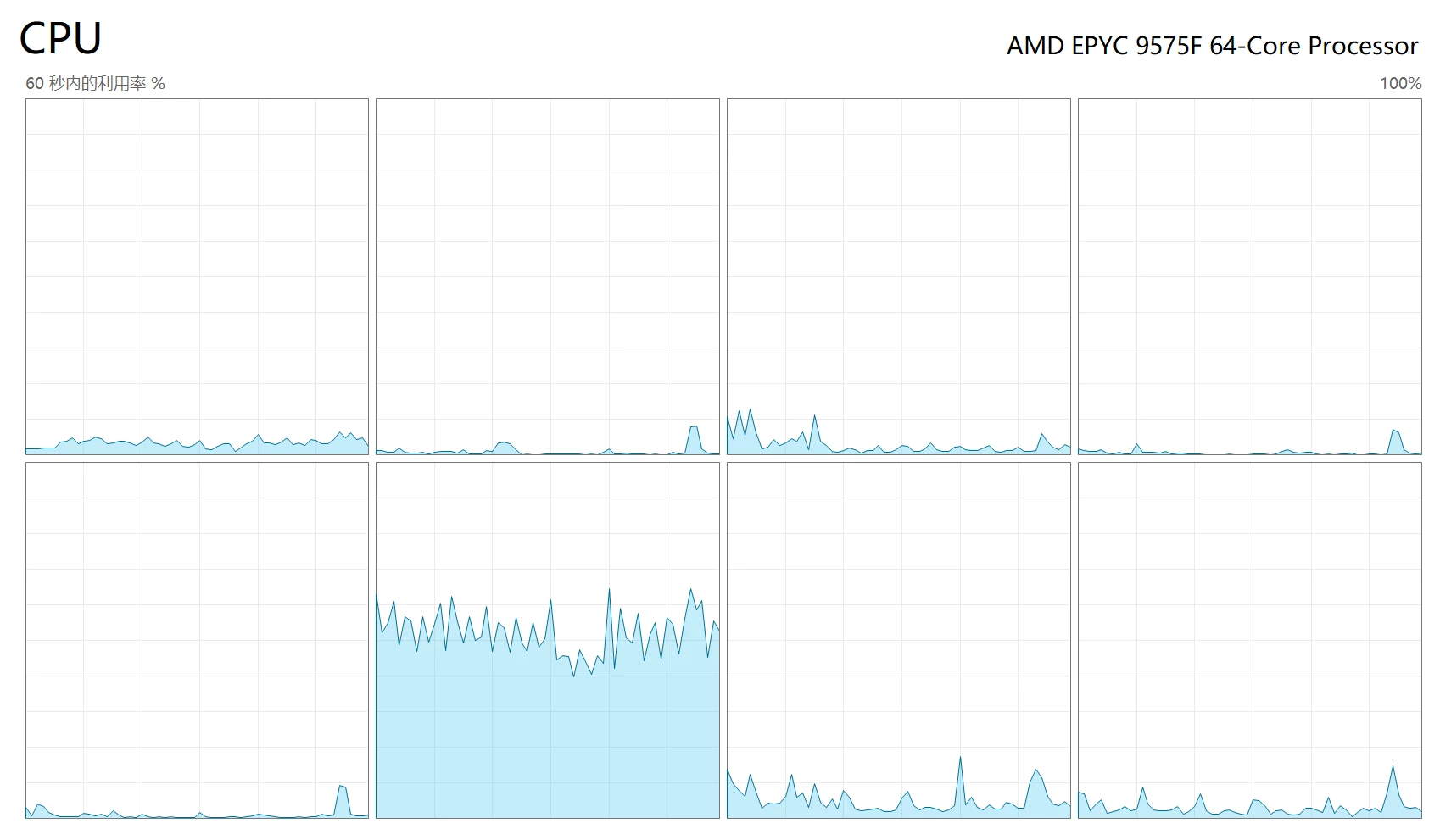

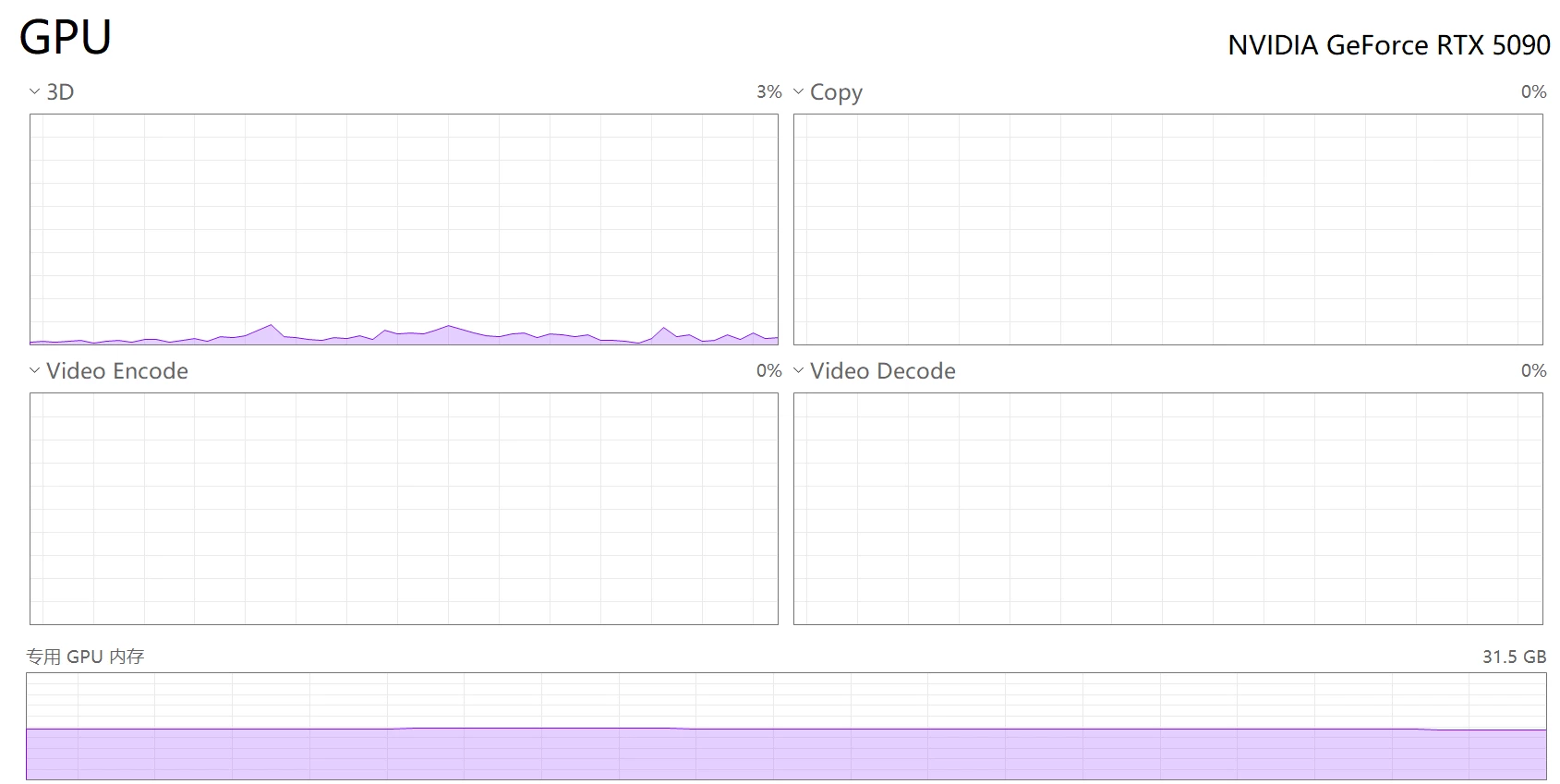

I'm using a powerful EPYC 9575F + RTX 5090 workstation, but Lightroom Classic's performance is very underwhelming. For example, I ran a batch Enhance Details on 500 images. This is an AI-based feature, so it should run on the GPU, and it does on my old computer and on my Mac. Here, however, it runs on the CPU, and, more disappointingly, it doesn't even fully saturate the CPU.

I enabled CCD as a virtual NUMA node, so Task Manager shows 8 graphs, each representing 8 cores. As you can see, only one CCD is used, and only about half of it is saturated. This is likely because the Windows scheduler tries to keep a process on one NUMA node (i.e. one CCD), which is exactly why I enabled virtual NUMA: to improve the L3 cache hit rate. VRAM is allocated, but the GPU isn't computing anything. Setting aside the AI features, overall performance is even worse than on my M4 Pro, which is very disappointing.

System Info also shows a maximum of only 6 Camera Raw threads. Full GPU acceleration is manually enabled, and System Info confirms this, listing the DirectX engine.

In contrast, during indexing after import the CPU can be fully saturated: every CCD is used and total utilization reaches about 80%, which is good. Saving XMP and exporting, however, show the same problem as Enhance Details: very slow, CPU-only, and apparently serial rather than parallel.

I think this is a bug. Does anyone have any idea why this happens and how to solve it?