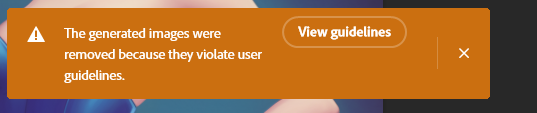

So as you can see, it's a PG-13, relatively inoffensive image of a woman in a bunny outfit. Extending the top worked fine, and I was able to complete the top ear, which is cool. When I tried to extend the bottom with generative fill, though, I got this warning. It's just a pair of legs wearing stockings, and all I wanted to do was extend them.

It feels like a false positive - though I could be wrong? I suspect it would do the same for women in swimsuits.

Figured I'd share here.