Generative Fill in Photoshop Beta


Dream Bigger with Generative Fill - now in the Photoshop (beta) app

This revolutionary new AI-powered Generative Fill, still under construction, allows you to generate new content in your image or remove objects like never before!

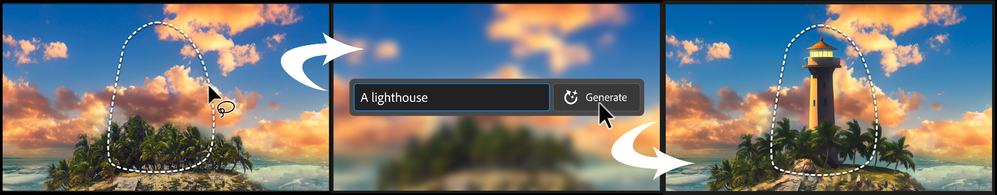

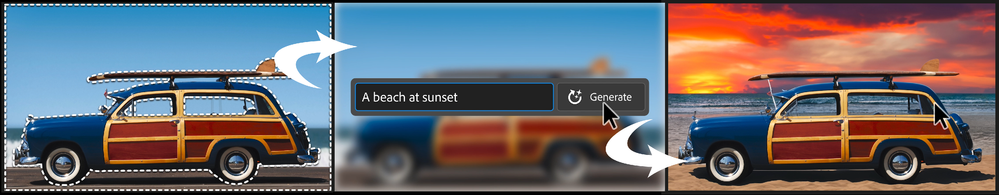

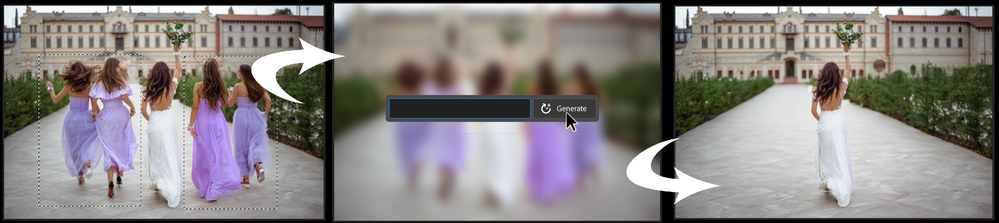

The process is simple: make a selection – any selection – then tell Photoshop exactly what you want placed there. The GenAI models will return the object or scene you described. You can:

Generate objects: Select an area in your image, then describe what you’d like to add.

Generate backgrounds: Select the background behind your subject, then generate a new scene from a text prompt.

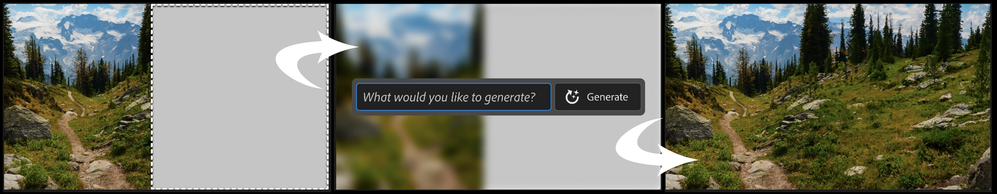

Extend images: Expand the canvas of your image, then make a selection of the empty region. Generating without a prompt will create a seamless extension of your scene.

Remove: Select the area you want to remove, then let the GenAI technology make it disappear.

And more… Generative Fill is incredibly versatile. Discover new ways to use this powerful feature.

How to use Generative Fill

Full instructions and helpful links are here: https://helpx.adobe.com/photoshop/using/generative-fill.html

Rate the results!

Releasing this amazing new technology as a public beta allows Adobe to hear from you!

Let us know if the Generative Fill feature is meeting your expectations!

As you use Generative Fill, please rate each variation image.

Simply hover over the [•••] on the thumbnail and give it a thumbs up or thumbs down, then follow the link to tell us more.

Report Result option

Prompts may also unintentionally generate problematic or offensive images; in such cases you can use the Report Result option to let us know.

Provide Feedback and help shape Generative Fill

If you would like to provide feedback on the overall experience, report any bugs, or suggest new features, please let us know in this thread! If you prefer Discord, you can find us there too!

Helpful Tips

- Use simple language

Leave out commands like “add” or “remove”. Example: asking to "remove the red boat" will generate more red boats.

Try using 3-8 words to describe what you want to see.

Include a subject with descriptive language. Example: A red barn in a field of flowers.

- Select part of the original image when extending

Make sure you select part of the image along with the empty part of the canvas. This will give a better result that blends in with the rest of your image.

- Get inspired and inspire others

Share what you create with the beta community!

Check out the Adobe Firefly Gallery to see what others have created with Adobe Firefly and Generative Fill. Click on an image to see the prompt that was used.

Related Links:

Learn about and install Photoshop (beta) app

Experience the future of Photoshop with Generative Fill

Make selections in your composites

1 Pinned Reply


Hey all,

We have seen reports that turning off VPN allows some customers to download the newest beta.

We have also released a NEW 24.6 beta today:

- Open CCD and click Check for updates, and you should be offered the new version: Photoshop Beta 24.6 20230524.m.2185 (you will see this full version in Help/System Info).

- In this update, we fixed a top crasher, a tooltip issue for users under 18, and localization issues.

----

I wanted to add a "zepplin" in the sky and got a guidelines violation. It seems I misspelled the word (it should have been "zeppelin", but then I got a plane...). The AI should recognise that it is a spelling mistake and either generate what was asked for or suggest the correct spelling (like MS Word does, e.g.) 😕 It seems Adobe really needs to work on this (generating the right image and allowing/correcting spelling mistakes) if they want to catch up with other AI tools...

----

It's a lot worse now than it was. It's horrible seeing faces so mutated.

----

Hiya folks, here are a few pics I've created and spent hours tweaking. Worth the effort though.

Maybe an admin could move this to the correct board if I'm in the wrong place?

----

Finally, this is Edinburgh Castle. The original image had dozens of people and objects; I'm delighted with the way this turned out.

----

Myself as a farmer 🤣

----

Now a wrestler 😅

----

Scottish island...

----

Lakeside house

----

When you ask, for example, for a fill with a sign that has a phrase written on it, the phrase always comes out with the wrong characters, even when it is in quotation marks.

In the example I'm sending, I want to write "Come with Us" and it changes it to "Come Wwiit UJS".

----

Hi @ANTONIO31009072adxl, Generative AI does not accurately render text at this time. It's an issue with ALL available AI models, not just Adobe's. It's a limitation of the technology.

----

As Kevin mentioned, Generative Fill is not able to display text that you specify, at least not consistently. You can try a few times and by chance you may get something, but most of the results will not display the text accurately.

Your best approach would be to get the AI to generate the graphic behind the text and then add the text later.

Firefly (https://firefly.adobe.com/) does have Text Effects, which take your characters and display them with a particular look and feel. Perhaps that will come to PS at some point.

----

It's incredible how good the AI is. It started from nothing, and this is the result. The AI works out on its own what environment it is in, etc. Perfect.

----

I'm writing a book for authors on using AI. Here is a PDF showing how I explain Photoshop's Generative Fill in this book.

[personal information removed as per forum guidelines]

----

In an article on Photoshop (Beta) Generative AI I've just completed, I provide seven Gen AI examples with prompts, all based on one photograph I took of F1 cars racing at the Canadian Formula 1 Grand Prix in June, and I analyze how effectively Gen AI interpreted each prompt.

This is the original image I shot of the F1 cars coming out of the short straight just before the S curve where we were seated at the Canadian Formula 1 Grand Prix in Montréal in June. Two of the seven (total) AI Generative Fill images below are derived from this one.

Here’s the prompt I used: “make cars appear to drive on water going through tropical forest.” I’m impressed! Gen AI did a great job suggesting reality in an unreal situation: the displacement and splashing of the water create a convincing sense of movement and speed in an entirely different medium. The color, leaves and contour on both sides of the forest look realistic. In addition, the image shows one of Gen AI’s strengths, its ability to render nature well. Note the perspective, lighting and scale: excellent. A+

The prompt was to make it appear as if the cars were out in space driving through the solar system, with the Starship Enterprise in the background, but I don’t have it verbatim. I think I see the Starship Enterprise in the middle of the right border. Either way, it’s a fun fantasy of space, although the cars look as if they are floating rather than racing. I suspect others have already found a Must Fix for Adobe’s next version, one that the Beta lacks: find a way to save the prompt with the image. B+

----

One strange thing in Generative Fill: if I expand the crop of an image and try to add a missing arm or leg, I get the not-allowed message. I found a workaround: just use the word "suntan". Sometimes it gives a good result on the first try; other times it may take many. Sometimes it will only put a large sun hat in place of the limb. Results range from grossly distorted, undersized or oversized limbs to believable ones. Sometimes, no matter how many times you try, the results are bad, especially with feet. It wanted to put the sole up many times before it got it right.

----

Hello, I'm here to share ideas and suggestions for GenFill.

I work on complex PSD files with lots of photos and layers, sometimes 50 to 80 layers. I don't start from scratch or from a simple image; I do a lot of image research and build complex photomontages for video game backgrounds. All this to say that I've used several stitching methods, including Content-Aware Fill, and now I also use GenFill in my work. And there's a slight problem with using it on PSDs when you're trying to do what I do. Let me explain.

To begin with, I'd like to point out that Content-Aware Fill works on the selected layer, whereas GenFill, so far, works on the image as a whole, not on individual layers. I find both approaches useful, depending on what the task is. I've become accustomed to Content-Aware Fill, and the fact that it works on a specific layer makes it very handy and easy to use. The only drawback is that it doesn't let you control or generate randomized patterns. Improving it would reduce the load on your GenFill servers.

The fact that GenFill works on the overall image causes a number of quality-of-life problems.

First, it doesn't let you target a specific intention precisely. If, for example, you want to work only on the background mountain, which is on its own layer, GenFill will blend the join with the rest of the image its own way, not yours. In practice this means hiding all the other layers so that only that object is used.

A second constraint arises when adjustment layers such as color, levels, etc. are used.

The problem is that GenFill takes an adjustment layer into account when it shouldn't, so the adjustment ends up applied twice: once baked into the generated image, and again by the adjustment layer that's still there. This causes serious color problems and forces you to disable all adjustment layers.

You might say it would be enough to show only that layer by Alt-clicking its visibility icon, then Alt-clicking again to show all the layers. But since GenFill creates a new layer, this handy shortcut doesn't work here. I'm not a Photoshop expert, though, so maybe another solution exists.

In any case, the two issues combined are quite problematic. They make GenFill hard to use with many layers and in the middle of a project. An option to choose between the global image and a specific layer would make things a lot easier.

Thanks for reading.

----

I ran into this trying to use Generative Fill on some old industrial photos I had taken of a now-demolished coke plant. Coke is a coal product made by heating coal without oxygen, similar to how charcoal is made from wood. It has no relation to the soft drink, and the images were not of a Coca-Cola company facility. I started out being specific with my prompts and included this term, which resulted in a violation on every generation. The prompts all included coal, industrial, plant, etc., so the term was not without context. It was not clear how or why I was violating the guidelines; no guidance was given. When I began to suspect the word "coke" might be the culprit, I removed it from the prompt and had no more issues.

This was a niche scenario, but the fact that real words are being blocked across the board because they are also used by big brands is a serious issue. I can understand wanting to prevent the recreation of brand logos, but this should not be done by filtering out brand-associated words regardless of context. The fact that this happens without any guidance as to what caused the "violation" makes it an even deeper issue.

----

The current AI generated random people in place of the person I selected instead of removing that person from the backdrop, even though I left the text field empty.

----

Hey, I think something has changed in your cloud, since I can't use the prompt "short hair" anymore; instead, the AI always gives me long hair. For example, my model is a male with long hair, but when I try to change it to short hair using Generative Fill, it keeps giving me long hair again. I keep repeating the same prompt again and again, but the AI still gives me long hair. Previously, in June/July, I could change the hair, but this month I can't do that anymore. Also, the quality of the AI-generated hair is getting worse; it looks weird and doesn't look real at all.

----

This is good.

----

That's weird: when I tried changing a woman's short hair to long, it kept giving me short hair. The AI seems to know the difference between blonde and red, but that's about it.

----

After installing, I found that all actions from the non-beta version were missing. I had to re-save all my other actions and then re-import them. It would have been nice for them to migrate automatically. Also, I have 4 Topaz Labs filter plugins. All but PhotoAI showed up in the beta. I found PhotoAI in the prior version's folder and copied it over, so everything was fixed, but finding out where everything lived, what was missing, what needed to be copied over and where it should be placed was a bit of a pain.

No additional help is needed; this is just feedback on a frustration.