Confused about Pixel Count (simple question)

Hi All,

I am somewhat new to photo and video and have a question that may be easy for someone to answer.

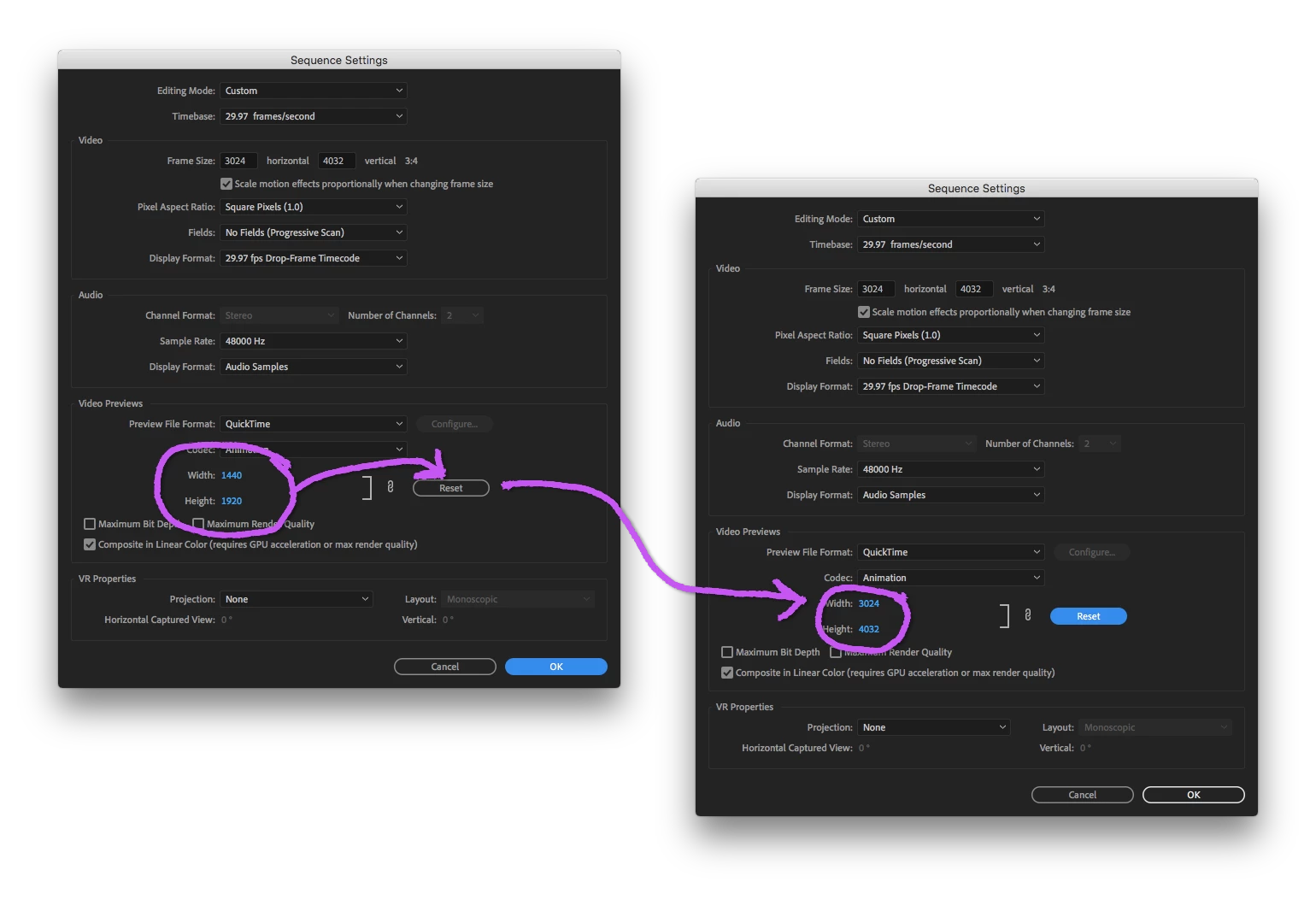

I typically work with videos shot on my iPhone, which I know are 1920 by 1080 pixels. However, I recently made a video entirely from a photo sequence. The photos are 3024x4032 (the standard iPhone photo size), so when I first rendered the project out it was really blurry and quite massive. I then resized the sequence to 1440 by 1080, which is the same ratio but far fewer pixels. I also had to scale the images down to about 50 percent, and that seemed to work.

I am really looking for some clarification on two things. Why does a photo have so many more pixels than a video? And now that the sequence is 1440 by 1080, is the overall quality going down, since that is so much smaller than the original 3024 by 4032 photos?
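
To put numbers on the sizes mentioned above, here is a rough Python sketch of the arithmetic. The `describe` helper is a made-up name for illustration only, and the sketch assumes the photos are treated in landscape orientation (4032 wide by 3024 tall), which may not match how the editor actually handles them.

```python
from fractions import Fraction


def describe(width, height):
    """Return the total pixel count and the reduced aspect ratio of a frame size."""
    ratio = Fraction(width, height)  # Fraction reduces to lowest terms automatically
    return {
        "pixels": width * height,
        "aspect": (ratio.numerator, ratio.denominator),
    }


video = describe(1920, 1080)     # iPhone video frame size from the post
photo = describe(4032, 3024)     # iPhone photo, ASSUMED landscape orientation
sequence = describe(1440, 1080)  # resized sequence size from the post

for name, info in [("video", video), ("photo", photo), ("sequence", sequence)]:
    print(name, info["pixels"], "pixels, aspect", info["aspect"])

# Scale factor at which a landscape photo exactly fills the 1440x1080 frame.
fit_scale = 1440 / 4032
print("fit scale:", round(fit_scale, 3))

# How many times more pixels a photo holds than the resized sequence frame.
print("photo vs sequence:", round(photo["pixels"] / sequence["pixels"], 2))
```

Under that landscape assumption, the photo and the 1440 by 1080 sequence reduce to the same aspect ratio, while the 1920 by 1080 video frame is wider.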

I appreciate any help!

Matt