Opacity mask reducing image quality?

Hoping someone can explain the cause of this, because it's driving me nuts. I have some static B&W .png images in a timeline. I'm applying a normal-blended opacity mask as a "crop" because it lets me feather the edges when I overlay the image on a background. Pretty straightforward stuff. But I'm noticing that applying the mask, even without any special blend mode and without any background element for it to blend with, seems to inherently decrease the embedded .png image's quality.

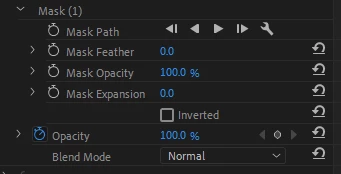

Here's the settings I'm using:

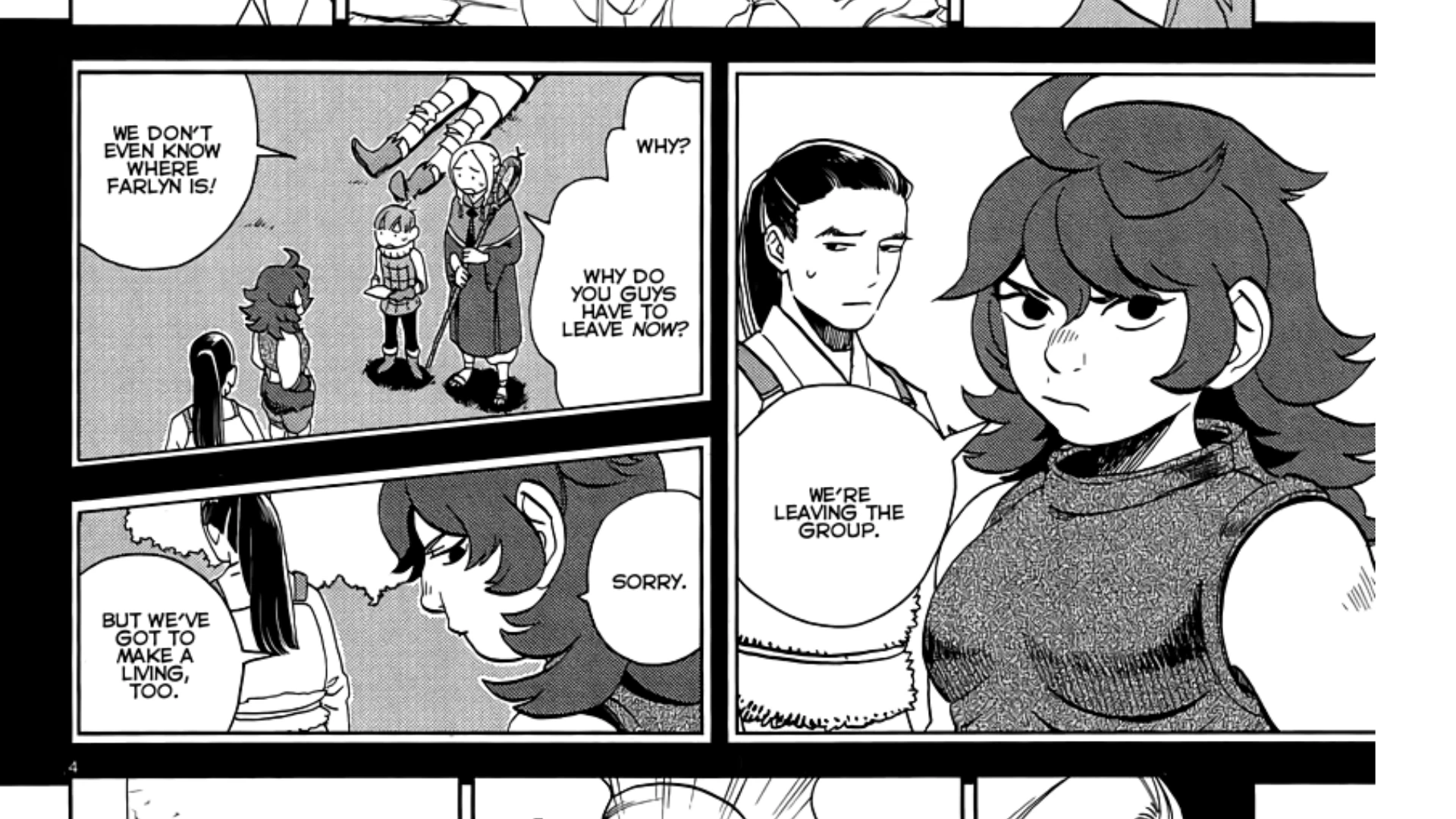

Here's the "unmasked" still:

And here's the masked version of the still:

If you open the two images and flip back and forth between them, you'll see that the masked version has some kind of adjustment applied that isn't described anywhere in the mask settings. Since it's a black-and-white comic image with text that's already somewhat upscaled, this is rather a problem for me.