Adobe Community

Re: Mercury Playback Engine drops CUDA acceleratio...

For unknown reasons, Premiere Pro occasionally drops CUDA GPU acceleration during editing sessions, and video playback goes black. Switching to "software only" is not a fix, just an added inconvenience. Restarting Premiere with CUDA acceleration selected rectifies the problem, and everything is fine until the next time it happens: once or more a day, sometimes not for a few days, regardless of the project. When it is active, CUDA acceleration works perfectly. Has anyone found a real solution?

Windows 10 1909, build 18363.1198, 64-bit

Premiere Pro 14.6

AMD Ryzen Threadripper 1950X, 16 core, 64GB RAM

2 x Nvidia GTX 1080, SLI configuration, latest driver installed

Nvidia custom options for Premiere are set for CUDA-GPUs: All

1 Correct answer

Guess what? SLI is not supported at all in any NLE program. And the VRAM does not add up when two GPUs are used. In fact, the total VRAM available with two GPUs is equal only to that of the single GPU with the lesser amount. For example, with two GTX 1080s you will have only 8 GB of VRAM available in total, not 16 GB. That greatly increases the risk of a GPU running out of VRAM in the middle of a render. And when that happens, Premiere Pro will get slammed into software-only mode with no indication or warning whatsoever.

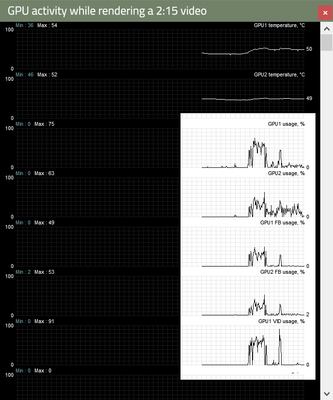

That's interesting. Still, I don't understand a couple of points. An Adobe post from April indicates that Premiere uses one graphics card for playback and multiple cards when rendering and exporting. MSI Afterburner confirms activity on both GPUs during these operations, with each card showing 4522 MB of memory usage, which apparently backs up your report.

However, CUDA dropping has not occurred while rendering or exporting. It can happen during basic, low-impact editing: today, positioning five 10-second clips in a single row in a new project crashed CUDA, resulting in black playback screens. Also, these twin GPUs can handle the most intensive immersive video effects and transitions, and I have not encountered "unable to render" or "error compiling" notices.

It seems to me that something other than a memory shortfall may be causing the CUDA faults I'm encountering. If it is memory-related, could Premiere's memory handling be the cause? Would that explain why a CUDA crash does not recur when I return to the same project after a relaunch?
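
One way to test whether a memory shortfall is involved is to log each card's VRAM usage while editing and check whether a drop to software-only mode coincides with a card running low. The sketch below assumes `nvidia-smi` (installed with the NVIDIA driver) is on the PATH; the helper names, the 512 MiB "low" threshold, and the 5-second polling interval are hypothetical choices, and `effective_mirrored_vram` simply encodes the claim from the accepted answer that mirrored VRAM does not add up across cards.

```python
import subprocess
import time
from dataclasses import dataclass
from typing import List


@dataclass
class GpuMemory:
    index: int
    used_mib: int
    total_mib: int

    @property
    def free_mib(self) -> int:
        return self.total_mib - self.used_mib


def parse_nvidia_smi_csv(text: str) -> List[GpuMemory]:
    """Parse the output of
    nvidia-smi --query-gpu=index,memory.used,memory.total --format=csv,noheader,nounits
    which prints one 'index, used, total' line per GPU (memory in MiB)."""
    gpus = []
    for line in text.strip().splitlines():
        idx, used, total = (field.strip() for field in line.split(","))
        gpus.append(GpuMemory(int(idx), int(used), int(total)))
    return gpus


def effective_mirrored_vram(gpus: List[GpuMemory]) -> int:
    # With mirrored (SLI-style) memory each card holds the same data,
    # so usable VRAM is the smallest card's total, not the sum of all cards.
    return min(g.total_mib for g in gpus)


def query_gpus() -> List[GpuMemory]:
    # Ask the driver's nvidia-smi tool for current per-GPU memory figures.
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_nvidia_smi_csv(out)


def log_vram(interval_s: float = 5.0, low_mib: int = 512) -> None:
    # Poll until interrupted (Ctrl+C); flag any card whose free VRAM
    # falls below the hypothetical low_mib threshold.
    while True:
        stamp = time.strftime("%H:%M:%S")
        for g in query_gpus():
            flag = "  <-- LOW" if g.free_mib < low_mib else ""
            print(f"{stamp} GPU{g.index}: {g.used_mib}/{g.total_mib} MiB used{flag}")
        time.sleep(interval_s)
```

Running `log_vram()` in a console alongside Premiere and comparing the timestamps with the moment playback goes black would show whether free VRAM was anywhere near exhausted when CUDA dropped. If it was not, that would point away from a memory shortfall.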

Both GPUs must be unlinked for multi-GPU support in an NLE to work. That means no SLI.

By enabling SLI, you will force both GPUs to perform the exact same task simultaneously. This can, and will, cause problems with NLE programs, especially if one of the GPUs is not quite equal in condition or performance to the other.

In other words, SLI itself is extremely finicky.

This is not a correct answer. It addresses CUDA failure during rendering or exporting, which is not what I am experiencing: Premiere switches to Mercury software-only acceleration during routine timeline assembly. I have no issues during render or export. This issue is still unresolved. Did RjL190365 choose his own answer as correct for an issue I am not experiencing?

I did not select a correct answer. Someone else did.

And what I stated did not explain the crashes. If the crashes are caused by one of the GPUs, I would physically remove the GPUs one at a time to determine whether one of them is failing.

Must you operate in an SLI configuration? Can't you unlink the cards, as is the case in other multi-GPU systems? What happens when you do so?

Let us know, Michael.

Thanks,

Kevin