AE CC 2017: DUAL GPU Support?


Hi,

Does anyone know for certain if AE CC 2017 supports dual GPUs for rendering? Specifically, dual graphics cards. I've had good success in AE, Premiere, PS and AME with my single EVGA GTX 760 SC graphics card and my thought is to add a second GTX 760 in the hopes AE would make use of the additional CUDA cores.

I would have preferred to simply swap out the GTX 760 for a new GTX 1050 FTW card, but neither the 900-series nor the 1000-series boards from nVidia are compatible with my ASUS Rampage III Extreme motherboard (EVGA Support told me they believe the issue lies with the X58 chipset.)

Thanks!

ASUS Rampage II Extreme

i7 980x Extreme @ 3.7GHz

24GB RAM

SSD RAIDs

850W PS

Windows 7 Ultimate 64


Long and short: No. That's simply not how it works.

Mylenium


So you're saying that yes, AE CC 2017 does make use of a single graphics card's GPU/CUDA cores (which it does), but the GPU/CUDA cores of an identical, additional graphics card are ignored?


Correction: the motherboard is a Rampage III Extreme, not a Rampage II.


Don't obsess over GPUs for AE. A single card is barely used in the first place, so getting a second GPU card is just a waste of good money.

Can you use two cards for other applications? You can? That's great! That's what you should get it for. Not for AE.


Hi Dave, that was true for older versions of AE, which relied on CPU cores, but beginning with CC 2015 (or 2016) AE moved to relying on the GPU for most operations. The same goes for Premiere and for encoding in AME.


The cores are not ignored, but it won't scale the performance linearly. AE's infrastructure isn't there yet and even in AME and Premiere you can only do so many parallel operations regardless of how much hardware you have. Most of the time your second card will have nothing to do and to boot, since things like OpenGL drawing functions are indeed only using a single card, you may run into issues with this stuff when using certain plug-ins or in other programs. Just leave it as it is.

Mylenium
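For anyone who wants to put rough numbers on the "won't scale linearly" point, here is a minimal sketch using Amdahl's law. It is a generic model, not a description of how AE actually schedules work, and the GPU-bound fractions in the loop are made-up illustration values, not measurements.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup when only `parallel_fraction` of the work
    can be spread across `workers` (here: graphics cards)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Hypothetical fractions of a render that could be split across cards.
for fraction in (0.2, 0.5, 0.9):
    speedup = amdahl_speedup(fraction, workers=2)
    print(f"{fraction:.0%} splittable, 2 cards: {speedup:.2f}x")
```

Under this model the second card only approaches a 2x gain if nearly all of the work can be split across both cards, which is the situation described above as rare.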


Hi,

I have two Fujitsu workstations:

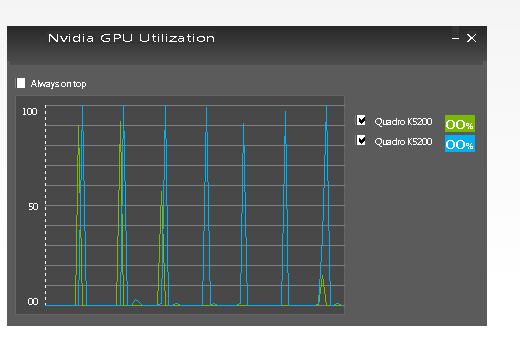

One Celsius R940 with two NVIDIA Quadro K5200 cards.

One Celsius R940 with one NVIDIA Quadro K5200.

I am rendering a 4K project (exporting to MOV).

The render time is the same on both PCs, but I would expect it to decrease with two GPUs.

Is there a solution for multi-GPU rendering with AE?

Thanks


sebastianor7018409 wrote

Is there a solution for multi-GPU rendering with AE?

Nope. AE uses CPU for final render, not GPU.

You might consider actually reading the posts contained in this thread.

The only solution for GPU rendering that I know of is Octane https://home.otoy.com/render/octane-render/


These are excellent videos for those unfamiliar with GPUs and how they affect the performance of After Effects. The videos clearly explain how newer GPUs from nVidia and AMD vastly outperform CPUs in many operations within the latest versions of AE, Premiere and AME, including faster previews and rendering:

At 00:02:35 in the Chromacide video you can see in the AE Preferences menu under Previews, Fast Previews, and GPU Information that AE recognizes there are dual GeForce GTX 960 cards installed in the system. In addition, these cards are each fitted with 2GB of VRAM and AE indicates that a total of 4GB of usable video memory is available.


But does your workflow actually make use of these GPU features, and is it tailored to them? That's all it comes down to. I'm sure I could easily tell you how to create a (seemingly) simple project that nullifies all GPU acceleration by using a single feature that breaks the accelerated pipeline, just as I could impress people by crafting an equally (seemingly) complicated project, exploiting all my 15+ years of AE knowledge to not break the hardware acceleration. See where this is going? Exporting a video straight via AME using a simple keyer or basic color correction is a whole different thing than most "real" compositing tasks, so I'd be wary of any such tech demos. They are meant to impress and are tailored accordingly, while their relevance for your daily work may be zero. In any case, it's a typical "your mileage may vary" thing, but as far as AE goes, I still maintain my position that it's simply not worth it to spend lots of money on beefy cards or multiple-card setups.

Mylenium


Yes. My workflow makes heavy use of GPU-accelerated features. And if you're using Windows, testing GPU acceleration is a simple matter with apps like CPUID that can show you which CPU and GPU cores are active in real time. (For example, if I disable GPU acceleration, my overclocked 6-core CPU with Hyperthreading shows all 6 cores at 100% during certain operations. Re-enabling GPU acceleration not only vastly increases rendering speeds but also drops CPU core usage to nearly zero.)
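For a scriptable version of the same kind of check on NVIDIA cards, here is a rough sketch that reads per-GPU load from `nvidia-smi`, the command-line tool that ships with the NVIDIA driver. It assumes `nvidia-smi` is on the PATH; the function names are my own, and some older cards report utilization as not supported, which the parser turns into `None`.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi, in the order they are parsed below.
QUERY_FIELDS = "index,name,utilization.gpu,memory.used,memory.total"


def _to_int(text):
    """Return an int, or None when the driver reports a non-numeric value."""
    return int(text) if text.isdigit() else None


def parse_gpu_rows(csv_text):
    """Turn nvidia-smi CSV output (no header, no units) into a list of dicts."""
    rows = []
    for record in csv.reader(io.StringIO(csv_text)):
        if len(record) != 5:
            continue  # skip blank or malformed lines
        index, name, util, used, total = (field.strip() for field in record)
        rows.append({
            "index": _to_int(index),
            "name": name,
            "util_percent": _to_int(util),
            "mem_used_mib": _to_int(used),
            "mem_total_mib": _to_int(total),
        })
    return rows


def query_gpus():
    """Run nvidia-smi once and return one dict per installed GPU."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_rows(result.stdout)
```

Calling `query_gpus()` every second or so during a preview or render should show whether a second card is doing any work or sitting idle.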

FYI, dismissing facts, deriding "tech demos" and bragging about "15+ years of AE knowledge" isn't impressing anyone. In fact, it makes you sound like an arrogant, closed-minded troll. Now please, just go away.


FYI, It's Crady: I would like to thank Mylenium for his well-written, precisely formulated and helpful input.

I hope he won't go away, and I suggest he not take your very rude and ignorant reply seriously.

And, by the way: I have tested extensively and could not find a nanosecond of performance improvement with dual versus single GPU usage.

Best


Hi It's Crady,

You apparently know the answer to your own question, you obviously have your heart set on dual graphics cards, and you seem to be arguing in defense of getting them. So go ahead and get them. You don't need anyone's permission, and apparently you don't need anyone's advice either. So, what are you doing here?


...Still trying to figure out if After Effects will utilize all my GPUs. I have 5 Asus 1080 Ti cards on a B250 Mining board, NOT in SLI, and was wondering if After Effects will pick them up and utilize them. Rendering a 10-minute 1080p video (.MP4, H.264) takes forever. And even RAM previews with not too many effects on each layer take several minutes. I had to reduce the resolution to a quarter and still wait 5 minutes for a RAM preview with 16GB of RAM at 3200.


I use dual Quadro 6000 6GB GDDR cards in an HP Z800 workstation, but AE CC 2017 only uses 6GB at most, as the screenshot below shows.

But when rendering for export, AE uses all GPUs equally:

It really boosts rendering performance.

But when working on a 16-bit color depth comp with E3D, 4K textures and the ray-traced engine, I did not feel any significant performance boost. So I work in Fast Preview mode with 1/4 settings.

In PPro, if you queue the render to AME using CUDA, it's amazingly, super-duper fast!

Hope this info helps.

Cheers!

[EDIT]

- Don't use SLI in a dual-GPU setup for editing, comp, or grading.

- If you use multiple displays, run all of them through the first GPU, including your secondary preview monitor.

- On a multi-display setup, if you put the workspace on the secondary monitor, set Multi-Display Settings to Compatibility Mode in the NVIDIA settings to prevent sluggishness or lag.

- I leave my second Quadro alone, without any display attached.

- I have an AJA Kona 3G card for real-time preview monitoring.


Hi Aldy,

Thanks for the information given above!

I have a Quadro K5000 and am considering whether to plug in a second Quadro K5000, which I recently bought by chance at a very good price.

I usually export projects directly from AE and not from Media Encoder. Until a few days ago I was even working only with CS6 and have just installed CC 2017.

I'm not working with 4K video yet, but may move to 4K soon after some storage upgrades.

Every opinion I have found says a second GPU is still a waste of money, especially for AE. I was going to build a second PC around my second Quadro K5000, but now I will test rendering some projects with and without dual GPUs installed and will post an update here.

Aldy, what is your suggestion if I attach 3 monitors, and how should I connect them? I already have 2x 1920x1200, but maybe I can add a 4K monitor or a TV for 4K preview. The K5000 has 2 DisplayPort + 2 DVI ports available.


Hi @Stoyanov,

Sorry for the late reply.

Every monitor attached to a GPU consumes some of its GDDR. The amount is small, but if you attach a 4K display or 2x 1200px displays it will take quite a lot of GDDR on your GPU, even on a Quadro.

My suggestions are:

- Use dual 1200px monitors over DisplayPort. (You'll need solid display signals to 'render' the GUI on your main display and to deal with refresh rates.)

- If possible, turn off Vertical Sync in the NVIDIA Control Panel 'Base Profile' (unless your monitor supports broadcast timing; I use an Eizo CGW245W 24-inch 1920x1200 and an EIZO CG277 27-inch 2K 10-bit display monitor).

- Turn off ECC in the 'Workstation' section of NVCP (turning ECC on also consumes GDDR). ECC is best used only for simulation or heavy analysis and compute tasks.

- Consider buying a professional capture card for real-time 4K monitoring. As I said, I use an AJA Kona 3G to get better monitoring performance, high resolution, timing, color profiles, etc. without putting all the load on the Quadro.

- The cheapest 4K preview cards are from BMD (Blackmagic Design). They support 4K @ 60p over HDMI, so you can plug in any consumer 4K TV for previewing.

- Set Windows Visual Effects to 'Best Performance'. The system will disable all the fancy, useless graphical UI effects (transparency, window animation, cursor shadow, etc.) that use GPU resources and can make your system sluggish during heavy interaction or renders.

Also keep these in mind:

After Effects and other compositing tools (Nuke, Flame, Fusion, etc.) mainly use the GPU for interaction while working with 3D elements or GPU-accelerated FX.

Final rendering is still done on the CPU (the same as in 3D applications), although GPU renderers exist nowadays (Octane, V-Ray, iRay).

PPro uses the GPU for encoding, decoding, or debayering RAW footage (especially RED R3D), which helps accelerate real-time playback.

It also boosts PPro export performance on supported codecs. The same goes for Media Encoder.

If your system cannot achieve real-time 4K playback, it's not always about the GPU. Check your CPU and storage performance too.

I never use Dynamic Link (Queue to AME) from AE. Even on our $70K HP Z840 (64GB RAM, dual M6000 24GB, 14TB enterprise Fibre Channel RAID storage), AME takes 7 hours to render from AE, while I can finish the same render straight from AE in 2.5 hours! That's at 4K cinema resolution.

Hope this helps

Have fun!


Hi!

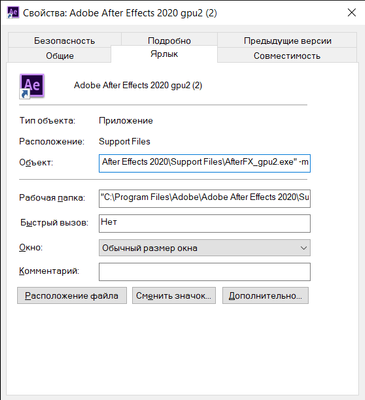

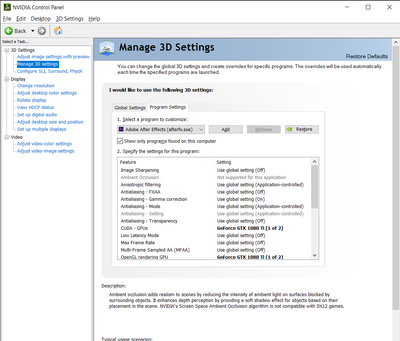

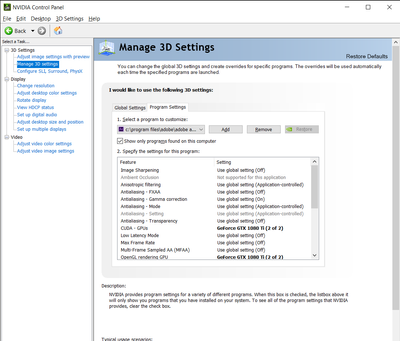

I discovered that there is an option in NVIDIA Control Panel that lets you assign a specific GPU to a particular app. So you can go to the Adobe After Effects Support Files folder and duplicate the "AfterFX.exe" file as "AfterFX_gpu2.exe" (for example).

After that, create shortcuts for both files. Then right-click each shortcut -> Properties -> Target -> and add " -m" at the end.

Then open NVIDIA Control Panel, disable SLI Mode, and in the "Manage 3D settings" -> "Program Settings" menu add these exe files. For each of them, set its own "CUDA - GPUs" and "OpenGL rendering GPU".

So now you can open 2 copies of After Effects, each with its own GPU. Change the "Output module" to an image sequence, enable Multi-machine "Render Settings" and start the render in both copies of AE.

This method might be helpful when you work at very high resolutions, use a lot of GPU effects, and would like to speed up render times.
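If you would rather script the file copy and the two launches than use shortcuts, something like the following rough sketch could work. It only covers the duplication and the " -m" launch described above; the per-exe GPU assignment still has to be done by hand in NVIDIA Control Panel. The function names are my own, the path to AfterFX.exe must be supplied by you, and copying into the Support Files folder may need administrator rights.

```python
import shutil
import subprocess
from pathlib import Path


def gpu_copy_path(original_exe: Path, tag: str = "_gpu2") -> Path:
    """Name of the duplicate next to the original, e.g. AfterFX_gpu2.exe."""
    return original_exe.with_name(f"{original_exe.stem}{tag}{original_exe.suffix}")


def ensure_copy(original_exe: Path) -> Path:
    """Duplicate the executable once so it can get its own GPU profile."""
    copy_exe = gpu_copy_path(original_exe)
    if not copy_exe.exists():
        shutil.copy2(original_exe, copy_exe)
    return copy_exe


def launch_both(original_exe: Path) -> list:
    """Start the original and the duplicate, each with the -m switch
    from the post above so that a second instance is allowed to open."""
    copy_exe = ensure_copy(original_exe)
    return [subprocess.Popen([str(exe), "-m"]) for exe in (original_exe, copy_exe)]
```

Call `launch_both(Path(...))` with the full path to your own AfterFX.exe, then set up the image sequence output and multi-machine render settings in each instance as described.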

I created a short video tutorial on how to do this:
