Supersampling

Hey everyone,

I'm trying to implement something like RE:Map and I pretty much have it working close to perfect.

The issue now is that, even when using a 16-bit UV pass, edges stay jagged. I'd like to do some supersampling, but I have no clue what the best way forward is.

The code was clean and readable until I started hacking away at giving supersampling a go. 😕

This is the mess I currently have going. (Note this is my first time playing around with the SDK.)

static PF_Err
RemapFunc16(
    void *refcon,
    A_long xL,
    A_long yL,
    PF_Pixel16 *in,
    PF_Pixel16 *out)
{
    PF_Err err = PF_Err_NONE;
    double tmp_u, tmp_v;
    PF_Fixed sample_u, sample_v;
    RemapInfo *contextP = reinterpret_cast<RemapInfo*>(refcon);
    if (contextP)
    {
        // Define `in_data` (required for PF_SUBPIXEL_SAMPLE)
        PF_InData *in_data = contextP->in_data;
        AEGP_SuiteHandler suites(in_data->pica_basicP);
        if (contextP->supersamplingMode != 1) // Supersampling!?
        {
            int ss;
            if (contextP->supersamplingMode == 2) ss = 2; // 2x Supersampling!
            else if (contextP->supersamplingMode == 3) ss = 4; // 4x Supersampling!
            else if (contextP->supersamplingMode == 4) ss = 8; // 8x Supersampling!
            double divisor = 1 / ((double)ss + 2.0);
            int half = ss / 2;
            double src_u, src_v;
            int totalSamples = ss * ss;
            double sampleWeight = 1.0 / (double)totalSamples;
            A_u_short red = 0;
            A_u_short green = 0;
            A_u_short blue = 0;
            A_u_short alpha = 0;
            A_long xLF = ((A_long)xL << 16);
            A_long yLF = ((A_long)yL << 16);
            PF_Fixed sample_src_u, sample_src_v;
            //PF_Fixed sample_u, sample_v;
            for (int x = -half; x <= half; x++)
            {
                // Get supersampled u source
                src_u = (double)x * divisor;
                //src_u += xLF;
                sample_src_u = xLF + LONG2FIX(src_u);
                for (int y = -half; y <= half; y++)
                {
                    // Get supersampled v source
                    src_v = (double)y * divisor;
                    //src_v += yLF;
                    sample_src_v = yLF + LONG2FIX(src_v);
                    // Set source point to `sourceLayer`
                    contextP->samp_pb.src = contextP->sourceLayerData;
                    // Sample src color
                    suites.Sampling16Suite1()->subpixel_sample16(in_data->effect_ref,
                        sample_src_u,
                        sample_src_v,
                        &contextP->samp_pb,
                        out);
                    // Get UV based on Red/Green from input pixel
                    tmp_u = ((double)out->red / (double)PF_MAX_CHAN16);
                    tmp_v = ((double)out->green / (double)PF_MAX_CHAN16);
                    tmp_u *= (double)contextP->textureLayer->u.ld.width;
                    tmp_v *= (double)contextP->textureLayer->u.ld.height;
                    sample_u = LONG2FIX(tmp_u);
                    sample_v = LONG2FIX(tmp_v);
                    // Set source point to `textureLayer`
                    contextP->samp_pb.src = &contextP->textureLayer->u.ld;
                    // Sample from `map` at UV and set `out` pixel.
                    suites.Sampling16Suite1()->subpixel_sample16(in_data->effect_ref,
                        sample_u,
                        sample_v,
                        &contextP->samp_pb,
                        out);
                    /*
                    red += (A_u_short)(out->red * sampleWeight);
                    green += (A_u_short)(out->green * sampleWeight);
                    blue += (A_u_short)(out->blue * sampleWeight);
                    alpha += (A_u_short)(out->alpha * sampleWeight);
                    */
                }
            }
            //out->red = (A_u_short)red;
            //out->green = (A_u_short)green;
            //out->blue = (A_u_short)blue;
            //out->alpha = (A_u_short)alpha;
        }
        else { // No Supersampling!
            // Get UV based on Red/Green from input pixel
            tmp_u = ((double)in->red / (double)PF_MAX_CHAN16);
            tmp_v = ((double)in->green / (double)PF_MAX_CHAN16);
            tmp_u *= (double)contextP->textureLayer->u.ld.width;
            tmp_v *= (double)contextP->textureLayer->u.ld.height;
            sample_u = LONG2FIX(tmp_u);
            sample_v = LONG2FIX(tmp_v);
            // Set source point to `textureLayer`
            contextP->samp_pb.src = &contextP->textureLayer->u.ld;
            // Sample from `map` at UV and set `out` pixel.
            suites.Sampling16Suite1()->subpixel_sample16(in_data->effect_ref,
                sample_u,
                sample_v,
                &contextP->samp_pb,
                out);
        }
        if (contextP->preserveAlpha == TRUE)
        {
            out->alpha *= (in->alpha / PF_MAX_CHAN16);
        }
    }
    return err;
}

hi colorbleed! welcome to the forum!

since i'm not a machine (to the best of my knowledge), it's hard for me to tell what result your code gives.

can you describe the problem you're getting with the current code? (perhaps a "print screen" would help)

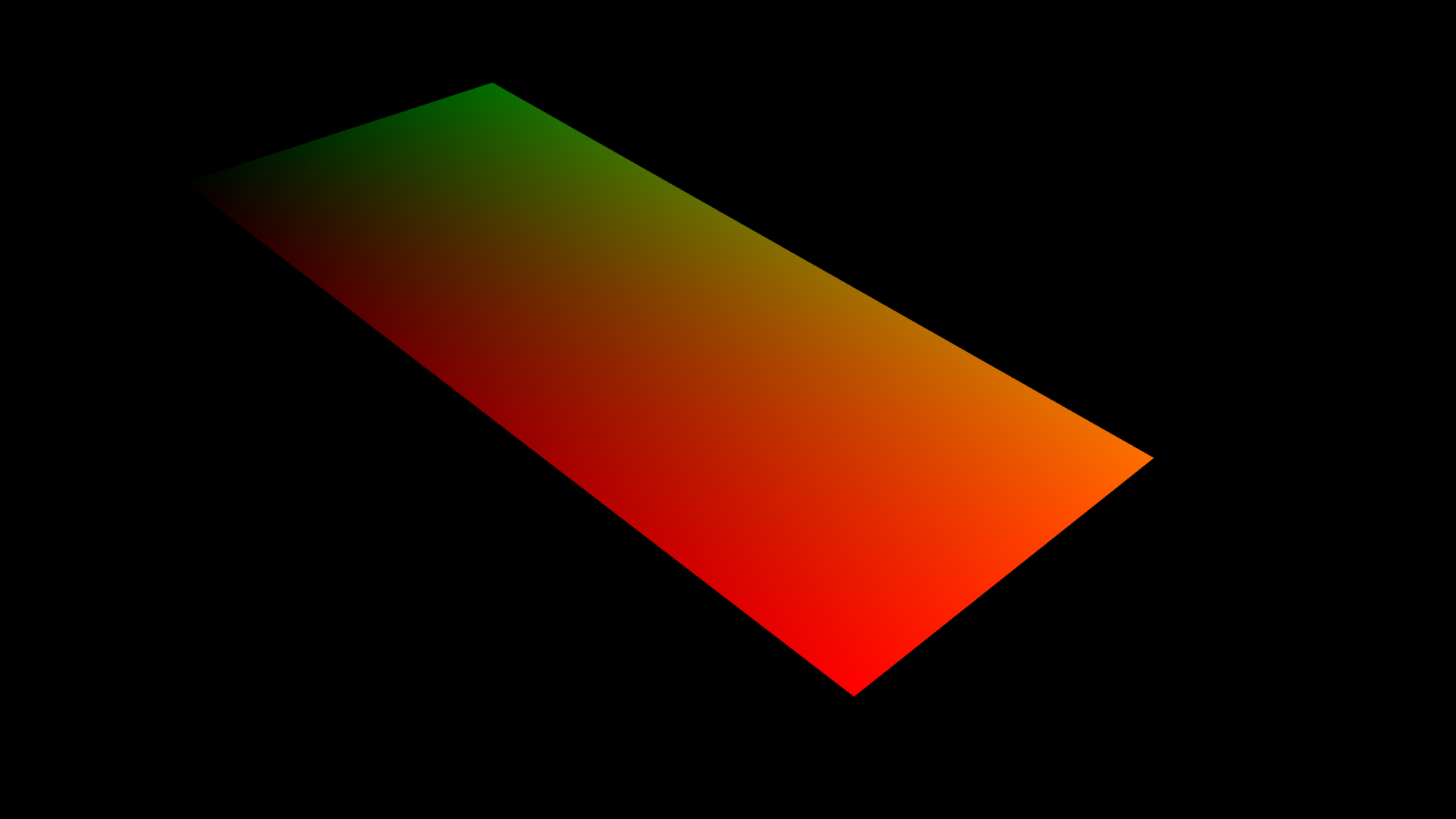

I'm using the above UV pass (though in 16 bit to get higher fidelity) to remap the red/green values to the UV of a texture.

For example like this:

Since each pixel samples a single pixel from the texture there's no anti-aliasing for each sample. So we would need to perform supersampling to make the sampling smoother.

There would be two ways to do that (I think?):

1. For each pixel we sample the input image at subpixel positions to get the blended color. Then we use these subpixel-sampled colors (for example 4x4) to sample the texture, and use the weighted result to get a smoother image.

OR

2. Within the effect upscale the source image (eg. 2x, 4x, 8x) with linear interpolation (so we get the subpixels into the image), then perform the effect on this resized version and get a huge output. Then resize the output again to the smaller size so it gets smoothed.

For both of these possible solutions I'm not sure how to do it in the SDK. I've tried number one in my code sample above. (Note that the small code snippet without supersampling is also in there, that's the *else* statement with // NO SUPERSAMPLING ). That code snippet works fine, but gives the jagged edges. Note that with my supersampling implementation of number one I also get some random colored pixels in my image, also not too sure why that is.

well...

both suggested methods carry their own problems. also, you're currently seeing the problems only on the edges, but actually if you processed a texture instead of flat colored text, you would have seen problems all over the image.

just using subpixel sampling will do fine when the texture is enlarged. that's because every source pixel will be represented in the final image, and in between "integer" samples you'll get an interpolated value.

when the texture is made smaller in the final image... that's where you'll have a problem.

why?

consider a texture whose pixels are a pattern of red, green, blue and white pixels, repeating. now the final image is a 0.5 scale of the original texture.

if the location of each "destination" pixel translates to the center of a "source" pixel, then subpixel sampling will just give you the center of pixel 1 and the center of pixel 3.

pixels 2 and 4 will not be represented in the final image.
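to make the skipped-pixel problem concrete, here is a minimal standalone sketch (plain C++, no SDK calls). the gray values are just stand-ins for the red, green, blue and white pixels, and the function names `make_pattern`, `downscale_point` and `downscale_box` are made up for this illustration. it halves one row of the repeating pattern, once by reading a single source pixel per destination pixel and once by averaging the two source pixels each destination pixel covers.

```cpp
#include <cstddef>
#include <vector>

// One row of a repeating 4-pixel pattern. The gray values are hypothetical
// stand-ins for the red, green, blue and white pixels in the example.
std::vector<double> make_pattern(std::size_t width) {
    const double kPattern[4] = {0.1, 0.4, 0.7, 1.0};
    std::vector<double> row(width);
    for (std::size_t i = 0; i < width; ++i) row[i] = kPattern[i % 4];
    return row;
}

// Halve the row by reading exactly one source pixel per destination pixel.
// Only pattern entries 1 and 3 (indices 0 and 2) ever reach the output.
std::vector<double> downscale_point(const std::vector<double>& src) {
    std::vector<double> dst(src.size() / 2);
    for (std::size_t i = 0; i < dst.size(); ++i) dst[i] = src[2 * i];
    return dst;
}

// Halve the row by averaging the two source pixels that each destination
// pixel covers, so every source pixel contributes to the result.
std::vector<double> downscale_box(const std::vector<double>& src) {
    std::vector<double> dst(src.size() / 2);
    for (std::size_t i = 0; i < dst.size(); ++i)
        dst[i] = 0.5 * (src[2 * i] + src[2 * i + 1]);
    return dst;
}
```

with an 8-pixel row, `downscale_point` only ever returns the first and third pattern values, while `downscale_box` mixes all four into the output.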

the second suggested method also has its limits.

if you opt for x4 subsampling, you'll miss pixels when the scale of the final texture is less than 0.25.

x8 will have the same problem at a lower threshold.

you can opt for selective subsampling, which means you'll only do that process where the rgba difference between 2 adjacent pixels is over a certain threshold.

this is generally the concept of anti-aliasing: a selective process of subpixel sampling. it's usually faster to process than arbitrarily subsampling all of the pixels.
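a rough sketch of that selective test, as standalone C++ rather than SDK code. the `Rgba16` struct is a hypothetical stand-in for a 16-bit pixel, and the choice of comparing against the right and lower neighbours is only an assumption about what "adjacent" should mean here; the threshold is something you would tune.

```cpp
#include <algorithm>
#include <cstdlib>

// Hypothetical stand-in for a 16-bit RGBA pixel (channels stored as int so
// differences cannot wrap around).
struct Rgba16 { int red, green, blue, alpha; };

// Largest absolute difference over the four channels.
int max_channel_diff(const Rgba16& a, const Rgba16& b) {
    return std::max({std::abs(a.red - b.red), std::abs(a.green - b.green),
                     std::abs(a.blue - b.blue), std::abs(a.alpha - b.alpha)});
}

// Decide whether a pixel deserves the expensive multi-sample path: only when
// it differs from its right or lower neighbour by more than `threshold`.
bool needs_supersampling(const Rgba16& center, const Rgba16& right,
                         const Rgba16& below, int threshold) {
    return max_channel_diff(center, right) > threshold ||
           max_channel_diff(center, below) > threshold;
}
```

for a UV remap, running this test on the UV pass itself would flag the places where neighbouring pixels point to far-apart texture locations, which is where the jagged edges tend to show.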

another way to go about it is to calculate the source location of the 4 corners of each pixel in the final image, and then grab the average rgba value of the resulting polygon on the source texture.
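a simplified sketch of the four-corner idea, again as standalone C++. note the shortcut: instead of averaging over the exact polygon, this averages over the polygon's axis-aligned bounding box, which over-covers a rotated or skewed footprint. the single-channel texture layout (row-major `std::vector<double>`), the `Point` struct and the function name `average_footprint` are all assumptions made for the illustration.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Point { double x, y; };

// Average a single-channel, row-major texture over the bounding box of the
// four mapped corner positions (given in texel coordinates, where texel i
// covers the range [i, i + 1)). At least one texel is always included.
double average_footprint(const std::vector<double>& texture, int width,
                         int height, const Point corners[4]) {
    double min_x = corners[0].x, max_x = corners[0].x;
    double min_y = corners[0].y, max_y = corners[0].y;
    for (int i = 1; i < 4; ++i) {
        min_x = std::min(min_x, corners[i].x);
        max_x = std::max(max_x, corners[i].x);
        min_y = std::min(min_y, corners[i].y);
        max_y = std::max(max_y, corners[i].y);
    }
    // Inclusive texel range, clamped to the texture bounds.
    int x0 = std::min(std::max(static_cast<int>(std::floor(min_x)), 0), width - 1);
    int x1 = std::min(std::max(static_cast<int>(std::ceil(max_x)) - 1, x0), width - 1);
    int y0 = std::min(std::max(static_cast<int>(std::floor(min_y)), 0), height - 1);
    int y1 = std::min(std::max(static_cast<int>(std::ceil(max_y)) - 1, y0), height - 1);

    double sum = 0.0;
    int count = 0;
    for (int y = y0; y <= y1; ++y) {
        for (int x = x0; x <= x1; ++x) {
            sum += texture[y * width + x];
            ++count;
        }
    }
    return sum / count;
}
```

the cost grows with the footprint size, so a heavily minified texture gets expensive; that is the trade-off against a fixed sample count per pixel.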

The fact that the pattern doesn't show as the same pattern when it becomes too small in the final image is not exactly the issue. I think it would be perfect if, for that single pixel in the final image, multiple samples were taken on the texture, with each sample partially contributing to the final color. This would mean it would receive contribution from all colors in the pattern and would look similar to a downscaled version of the texture with linear interpolation. If my texture image is too small in size, then so be it... I would have to resize my texture. But the UV pass is made for that shot and wouldn't `provide more information`, so subpixel sampling it should be the 'fine' way going forward. I'm just looking for the best way to implement it.

I'm not looking for solutions to moiré patterns caused by high-contrast patterns in a texture; I'm looking to anti-alias my final output so it interpolates the colors of the sampled texture better. Currently it has no interpolation of the textured image whatsoever, so you get the jagged edges.
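To pin down what I mean by each sample partially contributing, here is a minimal sketch in plain C++ with no SDK calls. The two callbacks are hypothetical stand-ins: `uv_at` would be the subpixel lookup into the UV pass at an offset from the pixel center, and `texture_at` would be the lookup into the texture. The sums are kept in `double` and divided once at the end, on the assumption that converting each weighted sample to a 16-bit integer before adding would truncate a little on every sample.

```cpp
#include <functional>

struct UV { double u, v; };
struct Color { double red, green, blue, alpha; };

// Average `grid` x `grid` texture lookups for one output pixel.
// uv_at(dx, dy):     UV read from the UV pass at a sub-pixel offset
//                    (in pixels, relative to the pixel center).
// texture_at(u, v):  texture color at that UV.
Color supersample(int grid,
                  const std::function<UV(double, double)>& uv_at,
                  const std::function<Color(double, double)>& texture_at) {
    Color sum{0.0, 0.0, 0.0, 0.0};
    for (int iy = 0; iy < grid; ++iy) {
        for (int ix = 0; ix < grid; ++ix) {
            // Sample positions are the centers of a grid x grid subdivision
            // of the pixel, so offsets stay within (-0.5, 0.5).
            const double dx = (ix + 0.5) / grid - 0.5;
            const double dy = (iy + 0.5) / grid - 0.5;
            const UV uv = uv_at(dx, dy);
            const Color c = texture_at(uv.u, uv.v);
            sum.red += c.red;
            sum.green += c.green;
            sum.blue += c.blue;
            sum.alpha += c.alpha;
        }
    }
    // Exactly grid * grid samples were taken, so divide by that count.
    const double n = static_cast<double>(grid) * grid;
    return Color{sum.red / n, sum.green / n, sum.blue / n, sum.alpha / n};
}
```

The loop count and the divisor have to agree. A loop running from `-half` to `half` inclusive takes `ss + 1` samples per axis, which would not match a weight of `1 / (ss * ss)`.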

There's a couple of things holding me back on figuring out subsampling. The easiest method I could think of is upscale the input footage, sample that and define the bigger sized output and rescale it back to the original size for the real output of the effect. What's the way to perform a 2x, 4x and 8x scale resize of the input image and sampling each row of that to create an intermediate image which I scale down to respectively 0.5, 0.25, 0.125 with a linear interpolation?

Also, about the `suites.Sampling16Suite1()->subpixel_sample16()` method: what exactly is the fixed point?

- If I want to offset the sampling by half a pixel? What value do I enter?

- If I want to sample the center of an image, what value do I enter?

- If I want to sample the top-left or bottom-right, what value do I enter?

- And then I get to, if I want to sample a grid of 4x4 (supersampling) for a pixel... how would I go about it in the right way?
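For reference, my current understanding is that `PF_Fixed` is a 16.16 fixed-point value: the upper 16 bits hold the integer part and the lower 16 bits the fraction, so 1.0 is 65536 and half a pixel is 32768. Below is a standalone sketch of the conversions and of the offsets for an n x n grid. `Fixed`, `to_fixed`, `to_double` and `grid_offsets` are names I made up as stand-ins, not SDK identifiers, and whether an integer coordinate refers to a pixel's center or its corner is something I still need to verify with a test image.

```cpp
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a 16.16 fixed-point type such as PF_Fixed.
using Fixed = std::int32_t;

constexpr Fixed kFixedOne = 1 << 16;  // 1.0 in 16.16 fixed point

// Convert a floating-point value to 16.16, rounding to nearest.
// The fractional part is preserved, unlike a cast-to-integer-then-shift.
Fixed to_fixed(double value) {
    return static_cast<Fixed>(value * kFixedOne + (value < 0 ? -0.5 : 0.5));
}

// Convert a 16.16 value back to floating point.
double to_double(Fixed value) {
    return static_cast<double>(value) / kFixedOne;
}

// Offsets (in 16.16) of the n sample centers along one axis of a pixel,
// relative to the pixel center. For n = 4: -0.375, -0.125, 0.125, 0.375.
std::vector<Fixed> grid_offsets(int n) {
    std::vector<Fixed> offsets(n);
    for (int i = 0; i < n; ++i)
        offsets[i] = to_fixed((i + 0.5) / n - 0.5);
    return offsets;
}
```

Under those assumptions, a half-pixel offset is `to_fixed(0.5)`, the center of an image is `to_fixed(width / 2.0)` and `to_fixed(height / 2.0)`, and a 4x4 grid uses every pair from `grid_offsets(4)` added to the pixel's own fixed-point coordinate. One thing to check in my code above: if a conversion macro casts its argument to an integer before shifting by 16, then a fractional offset such as 0.375 becomes 0 and every sub-sample lands on the same spot.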

Thanks for the help so far!

Cheers,

Roy