- Re: Why not frame blend ALL layers?

I'm working on a music video composition that I imported from Premiere. It has lots of quick edits, and about 10% of the shots are time-stretched. Unfortunately, the import from Premiere doesn't keep the frame blending on the time-stretched layers, and I can't tell by looking at the comp which layers are time-stretched and which are not. That means I'd have to click on each one of the thousand layers and go into the time dialogue to figure it out. That would take forever.

I thought a good solution would be to turn on frame blending for all the layers, but the Adobe help site says,

"Don’t apply frame blending unless the video of a layer has been re-timed—that is, the video is playing at a different frame rate than the frame rate of the source video."

Why is that? And does anyone have any suggestions on what to do here?

Thanks!

1 Correct answer

1 Correct answer

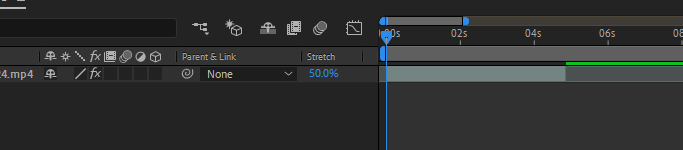

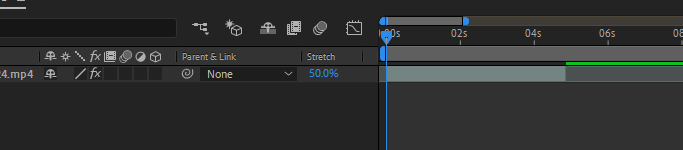

Reveal the "Stretch" column and you will see all the clips that were stretched: they are the ones where the percentage is other than 100%.

Having frame blending on layers that don't need it may introduce ghosting on the edges. Hey, in most cases that's what it does! That's why in most cases it's not good enough. I have only found frame blending useful when I ramp up the speed of the clip, and even then it's really a visual effect kind of thing.
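The rule above (only layers whose stretch is not 100% need frame blending) can be sketched in a few lines of Python. This is only an illustration of the logic, not After Effects scripting: the `Layer` class, its fields, and the shot names are hypothetical stand-ins for what the Stretch column shows.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a comp layer; not an After Effects API object."""
    name: str
    stretch: float            # percent, as shown in the Stretch column; 100 = not re-timed
    frame_blending: bool = False


def stretched_layers(layers, tolerance=1e-6):
    """Return only the layers whose stretch differs from 100%."""
    return [layer for layer in layers if abs(layer.stretch - 100.0) > tolerance]


def enable_blending_on_stretched(layers):
    """Turn frame blending on for re-timed layers only; leave the rest alone."""
    hits = stretched_layers(layers)
    for layer in hits:
        layer.frame_blending = True
    return hits


# Made-up example data: two of the four shots are re-timed.
layers = [
    Layer("shot_001", 100),
    Layer("shot_002", 50),
    Layer("shot_003", 100),
    Layer("shot_004", 200),
]
changed = enable_blending_on_stretched(layers)
print([layer.name for layer in changed])  # ['shot_002', 'shot_004']
```

The layers at 100% are left untouched, which matches the advice in the help text quoted in the question.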

Thanks! That's exactly what I needed!

Phi.Def wrote

... That means I'd have to click on each one of the thousand layers and go into the time dialogue to figure it out. That would take forever.

What in the world are you doing with a thousand layers in an AE comp? If a frame does not need some kind of effect you cannot produce in your NLE then it does not need to be in After Effects. Make sure you are spending your time in After Effects working only on the shots that you need to work on.

If I had a bunch of fast cuts and time-stretched video in a Premiere Pro sequence and I needed to apply some kind of effect to the entire sequence that I could not do in PPro, I would render that sequence of shots to a suitable visually lossless production format and just bring that rendered shot into AE for further processing. Working on a sequence in After Effects that is longer than just a few seconds can easily turn into a nightmare.

If you could explain exactly what you are doing to the shots or sequences you are bringing into After Effects maybe we could help you streamline your workflow. The last project I did had a little more than 20 shots that had to be processed in After Effects, the longest comp was 4 seconds, the shortest was 9 frames.

I'm mainly doing noise reduction and a beat reactor across the entire music video. I haven't had any problems with this workflow aside from the question above (which Roei provided a great answer for), but I'd love to hear your suggestion on how to do this more efficiently.

I think if you add the noise reduction in PPro (which PPro does very well, by the way, even using an adjustment layer), render your PPro project, then put the rendered visually lossless movie in AE and add your beat reactor (visualizer), you would save a bunch of render time and the project would be way more stable.

What you're suggesting is a destructive workflow. While certain compression formats may be "lossless", that doesn't mean such exports from Premiere retain all the data of the original raw.

For example, I do my colour grading in Premiere with Lumetri. If I then export that, even using a "lossless" format, I will have still lost the original colour depth of my raw footage. When I then bring that footage in to After Effects, there won't be as much information available for After Effects to work with.

Taking my edited sequence and replacing it with an After Effects composition is a non-destructive workflow that keeps all the information from the raw footage, and only processes it once (since my transform settings and Lumetri effects are preserved in the After Effects comp).

What I'm suggesting is not destructive. It's more efficient in the long run, visually lossless, and will save tons of time and prevent busted renders.

In your second comment, you mentioned punching in on a shot by scaling up. If that is all you are doing, it is more efficient in Premiere Pro than it is in AE for both speed and quality, unless you use some 3rd party plug-ins. If you need to animate that punch-in in time with the music, then either do just that shot using Dynamic Link, or skip the punch-in in Premiere Pro, then use the layer of the rendered footage in AE and do the punch-in there.

An AE comp with hundreds of layers, even if the comp is nested and the music visualizer is added to the nested comp, is going to take much longer to render and be less stable, and harder to fix if there are problems than a single rendered movie with the same visualizer applied. That is an undeniable fact. Render times will be at least two times as long as rendering directly from Premiere Pro, adding the visualizer in AE, and then rendering the deliverable. There is absolutely no time savings at all. I know this because I keep records and I've tried both methods.

The last sentence in the post below does not make any sense at all. You said "If you're concerned about the quality of your end product, it's always best to process your footage the least number of times possible. Even if you're using lossless formats, you're still degrading your image. Frankly, I'm shocked that you are suggesting such a workflow to people who might not know any better."

Visually lossless mezzanine formats are used by every major studio on every major production. They do it all the time because working with digital intermediates is efficient and has absolutely no effect on the quality of the final product. There is no quality loss no matter how many generations you end up using. I'm only suggesting a single generation. I've worked on projects for major studios and major directors where we were using fifth and sixth generation footage. If you knew anything at all about video compression you would know that. You just have to pick the right format. If you use MP4 as your digital intermediates then you are an amateur.

One last point: which is better? Delivering a project on time and on budget by using efficient production techniques, or pushing 1000 shots into an AE comp even though you might only need to work on a few of them, risking a failed render that can take days or even weeks to fix? You would be hard-pressed to find any professional, profitable, and productive production company that would send a 1000 shot sequence to After Effects just to denoise and add a music visualizer.

Without jumping through hoops to ensure quality control, encoding the processed video from Premiere to import into After Effects for another round of processing is NOT visually lossless. You are mistaken. Furthermore, what you are suggesting would have easily taken me 10x longer than what I ended up doing.

You're suggesting that for every single time I punched in on a shot (probably once every 2 seconds or so over the course of a 4.5 minute music video = 100 different shots), I send the individual shot over to AE (along with the entire bassline soundtrack for each shot to maintain sync) to apply my beat reactor. Not only that, but then I export nearly the entire video (minus those 100 shots) to a lossless format, import that into AE, apply the same beat reactor, then export it from After Effects to reimport it in Premiere so that I can recombine it with the 100 other shots I sent to AE individually. Do you have any idea how long that would take?

What I did was select basically the entire music video (except for a couple of video tracks), send it to AE, apply a beat reactor (by parenting all layers to an animated null, I ensured that even previously transformed shots maintained their best possible quality), come back to Premiere and export the whole thing. The render took all of 3 hours on my machine. I didn't experience any stability issues. Aside from building the beat reactor effect, my process involved about 6 clicks of my mouse, compared to the 600 that your method would involve.

I was just trying to help you work into a more productive workflow. I've been at this business for a long time, I won't bore you with my resume but if digital intermediates are good enough for Pixar, Disney, Sony Pictures then that workflow is not going to degrade your music video in any way you could possibly measure. I won't argue that point again.

I do have one question though. I can't figure out how 6 mouse clicks added a beat reactor and did a punch in every 2 seconds in a five-minute video. You must have some serious skills.

Some day you will have a five-minute comp with a hundred layers fail four or five times, you'll waste a day or so and miss a deadline, and be stuck with an unhappy client.

https://forums.adobe.com/people/Rick+Gerard wrote

I think if you render your PPro project and add the noise reduction (which PPro does very well by the way even using an adjustment layer) then put the rendered visually lossless movie in AE and added your beat reactor (visualizer) you would save a bunch of render time and the project would be way more stable.

Also, for effect, I often "punched in" during a shot. That is, I cut to the same shot just scaled up a bit. If I then export that (even to a lossless format), Premiere interpolates the pixels of the scaled-up shots. Importing that into After Effects and applying a beat reactor would transform the previously baked-in transformation. It would interpolate new pixels from pixels that had already been interpolated once before, significantly reducing the image quality.
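To make the double-interpolation point concrete, here is a toy Python sketch. It assumes a single row of pixel values and plain linear interpolation, which is not necessarily how Premiere or After Effects resample, and the sample values and scale steps are made up. It only shows that scaling in two passes is not the same operation as scaling once from the source; how visible that is on real footage depends on the resampling filter and the scale factors.

```python
def resample_linear(samples, new_len):
    """Resample a 1D row of values to new_len samples using linear interpolation."""
    old_len = len(samples)
    if new_len == 1 or old_len == 1:
        return [samples[0]] * new_len
    out = []
    for i in range(new_len):
        # Map the output index back to a fractional position in the input row.
        pos = i * (old_len - 1) / (new_len - 1)
        lo = int(pos)
        hi = min(lo + 1, old_len - 1)
        t = pos - lo
        out.append(samples[lo] * (1 - t) + samples[hi] * t)
    return out


def max_abs_diff(a, b):
    """Largest per-sample difference between two rows of equal length."""
    return max(abs(x - y) for x, y in zip(a, b))


# Made-up row of 10 pixel values with hard edges.
source = [0, 0, 0, 255, 0, 0, 0, 255, 255, 0]

# One pass: scale straight from the source to the final size.
one_pass = resample_linear(source, 23)

# Two passes: scale up once (the baked-in "punch in"), then scale the result again.
two_pass = resample_linear(resample_linear(source, 15), 23)

print(max_abs_diff(one_pass, two_pass))  # non-zero: the two results are not identical
```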

If you're concerned about the quality of your end product, it's always best to process your footage the least number of times possible. Even if you're using lossless formats, you're still degrading your image. Frankly, I'm shocked that you are suggesting such a workflow to people who might not know any better.
